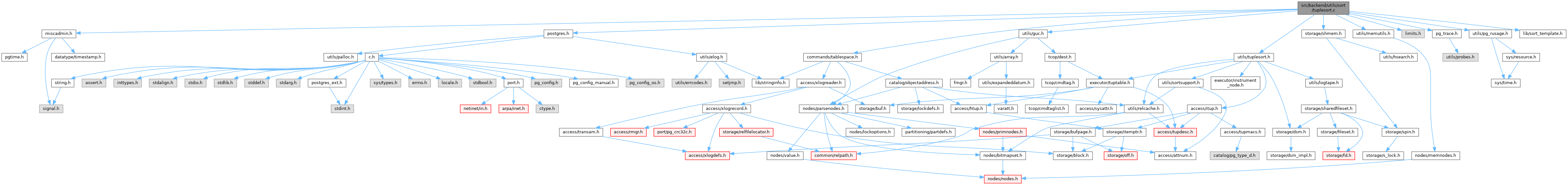

#include "postgres.h"

#include <limits.h>

#include "commands/tablespace.h"

#include "miscadmin.h"

#include "pg_trace.h"

#include "storage/shmem.h"

#include "utils/guc.h"

#include "utils/memutils.h"

#include "utils/pg_rusage.h"

#include "utils/tuplesort.h"

#include "lib/sort_template.h"

Data Structures

| Type | Name |
|---|---|
| union | SlabSlot |
| struct | Tuplesortstate |
| struct | Sharedsort |
| struct | RadixSortInfo |

Typedefs

| Type | Name |
|---|---|
| typedef union SlabSlot | SlabSlot |
| typedef struct RadixSortInfo | RadixSortInfo |

Enumerations

| Type | Name |
|---|---|
| enum | TupSortStatus { TSS_INITIAL , TSS_BOUNDED , TSS_BUILDRUNS , TSS_SORTEDINMEM , TSS_SORTEDONTAPE , TSS_FINALMERGE } |

Variables

| Type | Name |
|---|---|
| bool | trace_sort = false |

Macro Definition Documentation

◆ COMPARETUP

Definition at line 393 of file tuplesort.c.

◆ FREEMEM

Definition at line 399 of file tuplesort.c.

◆ FREESTATE

#define FREESTATE(state) ((state)->base.freestate ? (*(state)->base.freestate) (state) : (void) 0)

Definition at line 396 of file tuplesort.c.
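The FREESTATE macro above calls an optional hook only when one is installed. A minimal standalone sketch of that pattern follows; `DemoState`, `DEMO_FREESTATE`, `demo_free`, and `demo_run` are hypothetical names for illustration and are not part of tuplesort.c.

```c
#include <stddef.h>

/* Hypothetical stand-in for illustration; not the PostgreSQL struct. */
typedef struct DemoState DemoState;

struct DemoState
{
    void        (*freestate) (DemoState *state);   /* optional hook, may be NULL */
    int         freed;                              /* set to 1 by demo_free() */
};

/* Same shape as FREESTATE: invoke the hook only if it is non-NULL. */
#define DEMO_FREESTATE(state) \
    ((state)->freestate ? (*(state)->freestate) (state) : (void) 0)

/* Example hook: records that it ran. */
static void
demo_free(DemoState *state)
{
    state->freed = 1;
}

/* Returns 1 if the hook ran, 0 if no hook was installed. */
static int
demo_run(int install_hook)
{
    DemoState   s = {install_hook ? demo_free : NULL, 0};

    DEMO_FREESTATE(&s);
    return s.freed;
}
```

Both arms of the conditional have type void, so the macro can be used as a statement whether or not a hook exists.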

◆ INITIAL_MEMTUPSIZE

#define INITIAL_MEMTUPSIZE

Definition at line 118 of file tuplesort.c.

◆ IS_SLAB_SLOT

Definition at line 372 of file tuplesort.c.

◆ LACKMEM

Definition at line 397 of file tuplesort.c.

◆ LEADER

Definition at line 402 of file tuplesort.c.

◆ MAXORDER

Definition at line 175 of file tuplesort.c.

◆ MERGE_BUFFER_SIZE

Definition at line 177 of file tuplesort.c.

◆ MINORDER

Definition at line 174 of file tuplesort.c.

◆ QSORT_THRESHOLD

#define QSORT_THRESHOLD 40

Definition at line 523 of file tuplesort.c.

◆ READTUP

Definition at line 395 of file tuplesort.c.

◆ RELEASE_SLAB_SLOT

Definition at line 380 of file tuplesort.c.

◆ REMOVEABBREV

Definition at line 392 of file tuplesort.c.

◆ SERIAL

◆ SLAB_SLOT_SIZE

#define SLAB_SLOT_SIZE 1024

Definition at line 140 of file tuplesort.c.

◆ ST_CHECK_FOR_INTERRUPTS [1/2]

#define ST_CHECK_FOR_INTERRUPTS

Definition at line 492 of file tuplesort.c.

◆ ST_CHECK_FOR_INTERRUPTS [2/2]

#define ST_CHECK_FOR_INTERRUPTS

Definition at line 492 of file tuplesort.c.

◆ ST_COMPARE

Definition at line 500 of file tuplesort.c.

◆ ST_COMPARE_ARG_TYPE [1/2]

#define ST_COMPARE_ARG_TYPE Tuplesortstate

Definition at line 491 of file tuplesort.c.

◆ ST_COMPARE_ARG_TYPE [2/2]

#define ST_COMPARE_ARG_TYPE SortSupportData

Definition at line 491 of file tuplesort.c.

◆ ST_COMPARE_RUNTIME_POINTER

#define ST_COMPARE_RUNTIME_POINTER

Definition at line 490 of file tuplesort.c.

◆ ST_DECLARE

#define ST_DECLARE

Definition at line 494 of file tuplesort.c.

◆ ST_DEFINE [1/2]

#define ST_DEFINE

Definition at line 495 of file tuplesort.c.

◆ ST_DEFINE [2/2]

#define ST_DEFINE

Definition at line 495 of file tuplesort.c.

◆ ST_ELEMENT_TYPE [1/2]

Definition at line 489 of file tuplesort.c.

◆ ST_ELEMENT_TYPE [2/2]

Definition at line 489 of file tuplesort.c.

◆ ST_SCOPE [1/2]

Definition at line 493 of file tuplesort.c.

◆ ST_SCOPE [2/2]

Definition at line 493 of file tuplesort.c.

◆ ST_SORT [1/2]

#define ST_SORT qsort_tuple

Definition at line 488 of file tuplesort.c.

◆ ST_SORT [2/2]

#define ST_SORT qsort_ssup

Definition at line 488 of file tuplesort.c.

◆ TAPE_BUFFER_OVERHEAD

Definition at line 176 of file tuplesort.c.

◆ USEMEM

Definition at line 398 of file tuplesort.c.

◆ WORKER

Definition at line 401 of file tuplesort.c.

◆ WRITETUP

Definition at line 394 of file tuplesort.c.

Typedef Documentation

◆ RadixSortInfo

typedef struct RadixSortInfo RadixSortInfo

◆ SlabSlot

Enumeration Type Documentation

◆ TupSortStatus

| Enumerator | |
|---|---|
| TSS_INITIAL | |
| TSS_BOUNDED | |
| TSS_BUILDRUNS | |
| TSS_SORTEDINMEM | |
| TSS_SORTEDONTAPE | |
| TSS_FINALMERGE | |

Definition at line 152 of file tuplesort.c.
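The enumerator table carries no descriptions, so the following standalone sketch restates the enum with one-line comments reflecting my understanding of each state. The comments and the helper `demo_can_fetch` are assumptions for illustration; the helper's rule is inferred from tuplesort_gettuple_common() referencing only the three "finished" states.

```c
#include <stdbool.h>

/* Standalone copy for illustration; comments are the editor's reading. */
typedef enum
{
    TSS_INITIAL,                /* loading tuples, still within the memory limit */
    TSS_BOUNDED,                /* loading tuples into a bounded-size heap */
    TSS_BUILDRUNS,              /* loading tuples, writing runs to tape */
    TSS_SORTEDINMEM,            /* sort completed entirely in memory */
    TSS_SORTEDONTAPE,           /* sort completed, final run is on tape */
    TSS_FINALMERGE              /* final merge performed on the fly */
} TupSortStatus;

/* Hypothetical helper: can sorted output be read in this state? */
static bool
demo_can_fetch(TupSortStatus status)
{
    switch (status)
    {
        case TSS_SORTEDINMEM:
        case TSS_SORTEDONTAPE:
        case TSS_FINALMERGE:
            return true;
        default:
            return false;
    }
}
```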

Function Documentation

◆ beginmerge()

static

Definition at line 2156 of file tuplesort.c.

References Assert, fb(), mergereadnext(), Min, SortTuple::srctape, and tuplesort_heap_insert().

Referenced by mergeonerun(), and mergeruns().

◆ consider_abort_common()

static

Definition at line 1215 of file tuplesort.c.

References Assert, fb(), and TSS_INITIAL.

Referenced by tuplesort_puttuple_common().

◆ current_byte()

Definition at line 2573 of file tuplesort.c.

References BITS_PER_BYTE.

Referenced by radix_sort_recursive().
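current_byte() references BITS_PER_BYTE and is used by radix_sort_recursive(), which suggests it extracts one byte of a key per radix pass. A minimal sketch of that idea follows; the name `demo_current_byte`, the 64-bit key type, and the convention that level 0 is the most significant byte are all assumptions, not taken from tuplesort.c.

```c
#include <stdint.h>

#define DEMO_BITS_PER_BYTE 8

/*
 * Return the byte of "key" examined at the given radix level.
 * Assumption: level 0 is the most significant byte, level 7 the least.
 */
static inline uint8_t
demo_current_byte(uint64_t key, int level)
{
    int         shift = ((int) sizeof(uint64_t) - 1 - level) * DEMO_BITS_PER_BYTE;

    return (uint8_t) (key >> shift);
}
```

Starting from the most significant byte lets each pass split the input into 256 buckets that are already in the correct relative order.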

◆ dumptuples()

static

Definition at line 2203 of file tuplesort.c.

References Assert, elog, ereport, errcode(), errmsg(), ERROR, fb(), FREEMEM, i, LACKMEM, LOG, markrunend(), MemoryContextReset(), pg_rusage_show(), selectnewtape(), trace_sort, TSS_BUILDRUNS, tuplesort_sort_memtuples(), and WRITETUP.

Referenced by tuplesort_performsort(), and tuplesort_puttuple_common().

◆ free_sort_tuple()

static

Definition at line 3400 of file tuplesort.c.

References fb(), FREEMEM, GetMemoryChunkSpace(), and pfree().

Referenced by make_bounded_heap(), and tuplesort_puttuple_common().

◆ getlen()

static

Definition at line 3128 of file tuplesort.c.

References elog, ERROR, fb(), len, and LogicalTapeRead().

Referenced by mergereadnext(), and tuplesort_gettuple_common().

◆ grow_memtuples()

static

Definition at line 948 of file tuplesort.c.

References elog, ERROR, fb(), FREEMEM, GetMemoryChunkSpace(), LACKMEM, MaxAllocHugeSize, repalloc_huge(), and USEMEM.

Referenced by tuplesort_puttuple_common().

◆ init_slab_allocator()

static

Definition at line 1877 of file tuplesort.c.

References fb(), i, palloc(), SLAB_SLOT_SIZE, and USEMEM.

Referenced by mergeruns().
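init_slab_allocator() references palloc() and SLAB_SLOT_SIZE, which points at a pool of fixed-size slots. The sketch below shows one common way to build such a pool with an intrusive free list. It uses malloc() instead of palloc(), and every `Demo*`/`demo_*` name is hypothetical; the roles assigned to IS_SLAB_SLOT and RELEASE_SLAB_SLOT in the comments are my reading of the macro names, not their documented definitions.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define DEMO_SLOT_SIZE 1024     /* mirrors SLAB_SLOT_SIZE */

/* A slot is either a link in the free list or a raw buffer. */
typedef union DemoSlot
{
    union DemoSlot *nextfree;
    char        buffer[DEMO_SLOT_SIZE];
} DemoSlot;

typedef struct DemoSlab
{
    DemoSlot   *slots;          /* contiguous arena of nslots slots */
    DemoSlot   *freelist;       /* head of free list, NULL when exhausted */
    size_t      nslots;
} DemoSlab;

/* Allocate the arena and thread every slot onto the free list. */
static int
demo_slab_init(DemoSlab *slab, size_t nslots)
{
    slab->slots = nslots > 0 ? malloc(nslots * sizeof(DemoSlot)) : NULL;
    slab->freelist = NULL;
    slab->nslots = nslots;
    if (nslots > 0 && slab->slots == NULL)
        return -1;
    for (size_t i = 0; i < nslots; i++)
    {
        slab->slots[i].nextfree = slab->freelist;
        slab->freelist = &slab->slots[i];
    }
    return 0;
}

/* Pop a slot from the free list; returns NULL when none are free. */
static void *
demo_slab_alloc(DemoSlab *slab)
{
    DemoSlot   *slot = slab->freelist;

    if (slot != NULL)
        slab->freelist = slot->nextfree;
    return slot;
}

/* Range check: does p point into the arena? (assumed role of IS_SLAB_SLOT) */
static int
demo_is_slab_slot(const DemoSlab *slab, const void *p)
{
    uintptr_t   addr = (uintptr_t) p;
    uintptr_t   start = (uintptr_t) slab->slots;
    uintptr_t   end = start + slab->nslots * sizeof(DemoSlot);

    return addr >= start && addr < end;
}

/* Push a slot back onto the free list (assumed role of RELEASE_SLAB_SLOT). */
static void
demo_slab_release(DemoSlab *slab, void *p)
{
    DemoSlot   *slot = p;

    slot->nextfree = slab->freelist;
    slab->freelist = slot;
}
```

Because a free slot stores the list link inside its own buffer, the pool needs no bookkeeping memory beyond the arena itself.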

◆ inittapes()

static

Definition at line 1761 of file tuplesort.c.

References Assert, elog, fb(), inittapestate(), LEADER, LOG, LogicalTapeSetCreate(), mergeruns(), MINORDER, palloc0(), pg_rusage_show(), selectnewtape(), trace_sort, TSS_BUILDRUNS, tuplesort_merge_order(), and WORKER.

Referenced by tuplesort_performsort(), and tuplesort_puttuple_common().

◆ inittapestate()

static

Definition at line 1810 of file tuplesort.c.

References fb(), GetMemoryChunkSpace(), PrepareTempTablespaces(), TAPE_BUFFER_OVERHEAD, and USEMEM.

Referenced by inittapes(), and leader_takeover_tapes().

◆ leader_takeover_tapes()

static

Definition at line 3341 of file tuplesort.c.

References Assert, elog, ERROR, fb(), Sharedsort::fileset, inittapestate(), j, LEADER, LogicalTapeImport(), LogicalTapeSetCreate(), Sharedsort::mutex, palloc0(), SpinLockAcquire, SpinLockRelease, Sharedsort::tapes, TSS_BUILDRUNS, and Sharedsort::workersFinished.

Referenced by tuplesort_performsort().

◆ make_bounded_heap()

static

Definition at line 2483 of file tuplesort.c.

References Assert, CHECK_FOR_INTERRUPTS, COMPARETUP, fb(), free_sort_tuple(), i, reversedirection(), SERIAL, TSS_BOUNDED, TSS_INITIAL, tuplesort_heap_insert(), and tuplesort_heap_replace_top().

Referenced by tuplesort_puttuple_common().

◆ markrunend()

static

Definition at line 3141 of file tuplesort.c.

References fb(), len, and LogicalTapeWrite().

Referenced by dumptuples(), and mergeonerun().

◆ merge_read_buffer_size()

static

Definition at line 1729 of file tuplesort.c.

References fb(), Max, Min, and TAPE_BUFFER_OVERHEAD.

Referenced by mergeruns().

◆ mergeonerun()

static

Definition at line 2096 of file tuplesort.c.

References Assert, beginmerge(), fb(), markrunend(), mergereadnext(), RELEASE_SLAB_SLOT, tuplesort_heap_delete_top(), tuplesort_heap_replace_top(), and WRITETUP.

Referenced by mergeruns().

◆ mergereadnext()

static

Definition at line 2184 of file tuplesort.c.

References fb(), getlen(), and READTUP.

Referenced by beginmerge(), mergeonerun(), and tuplesort_gettuple_common().

◆ mergeruns()

static

Definition at line 1913 of file tuplesort.c.

References Assert, beginmerge(), elog, fb(), FREEMEM, GetMemoryChunkSpace(), init_slab_allocator(), INT64_FORMAT, LOG, LogicalTapeClose(), LogicalTapeFreeze(), LogicalTapeRewindForRead(), LogicalTapeSetForgetFreeSpace(), MemoryContextAlloc(), MemoryContextResetOnly(), merge_read_buffer_size(), mergeonerun(), palloc0(), pfree(), pg_rusage_show(), selectnewtape(), trace_sort, TSS_BUILDRUNS, TSS_FINALMERGE, TSS_SORTEDONTAPE, TUPLESORT_RANDOMACCESS, USEMEM, WORKER, and worker_freeze_result_tape().

Referenced by inittapes(), and tuplesort_performsort().

◆ normalize_datum()

inline static

Definition at line 2585 of file tuplesort.c.

References Assert, SortSupportData::comparator, DatumGetUInt32(), fb(), PG_INT32_MAX, PG_INT64_MAX, ssup_datum_int32_cmp(), ssup_datum_signed_cmp(), ssup_datum_unsigned_cmp(), SortSupportData::ssup_reverse, and UInt32GetDatum().

Referenced by radix_sort_recursive().
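normalize_datum() references the signed and unsigned comparators and SortSupportData::ssup_reverse, which suggests it maps keys to a form where plain unsigned byte order matches the requested sort order. One standard way to do that for signed 64-bit values is to flip the sign bit, and to invert all bits for a descending sort. The sketch below shows that technique only; `demo_normalize_signed` is a hypothetical name and the actual arithmetic in tuplesort.c may differ.

```c
#include <stdbool.h>
#include <stdint.h>

/*
 * Map a signed value to an unsigned key whose unsigned order matches the
 * signed order (or the reverse of it when "reverse" is true).
 */
static inline uint64_t
demo_normalize_signed(int64_t value, bool reverse)
{
    /* Flipping the sign bit moves negatives below non-negatives. */
    uint64_t    key = (uint64_t) value ^ (UINT64_C(1) << 63);

    /* Bitwise complement reverses the unsigned order. */
    return reverse ? ~key : key;
}
```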

◆ radix_sort_recursive()

static

Definition at line 2654 of file tuplesort.c.

References Assert, CHECK_FOR_INTERRUPTS, current_byte(), fb(), i, idx(), normalize_datum(), partitions, QSORT_THRESHOLD, and radix_sort_recursive().

Referenced by radix_sort_recursive(), and radix_sort_tuple().

◆ radix_sort_tuple()

static

Definition at line 2803 of file tuplesort.c.

References Assert, CHECK_FOR_INTERRUPTS, COMPARETUP, data, fb(), i, j, QSORT_THRESHOLD, and radix_sort_recursive().

Referenced by tuplesort_sort_memtuples().

◆ reversedirection()

static

Definition at line 3110 of file tuplesort.c.

References fb().

Referenced by make_bounded_heap(), and sort_bounded_heap().

◆ selectnewtape()

static

Definition at line 1844 of file tuplesort.c.

References Assert, fb(), and LogicalTapeCreate().

Referenced by dumptuples(), inittapes(), and mergeruns().

◆ sort_bounded_heap()

static

Definition at line 2532 of file tuplesort.c.

References Assert, fb(), reversedirection(), SERIAL, TSS_BOUNDED, TSS_SORTEDINMEM, and tuplesort_heap_delete_top().

Referenced by tuplesort_performsort().

◆ ssup_datum_int32_cmp()

int ssup_datum_int32_cmp(Datum x, Datum y, SortSupport ssup)

Definition at line 3436 of file tuplesort.c.

References DatumGetInt32(), fb(), x, and y.

Referenced by btint4sortsupport(), date_sortsupport(), normalize_datum(), and tuplesort_sort_memtuples().
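ssup_datum_int32_cmp() takes two Datum values and references DatumGetInt32(), so it presumably unpacks both as 32-bit integers and returns their ordering. A standalone sketch of such a three-way comparator follows; `DemoDatum` and the `demo_*` helpers are hypothetical stand-ins, and the unused SortSupport argument is omitted.

```c
#include <stdint.h>

/* Hypothetical stand-in for Datum: a pointer-sized unsigned integer. */
typedef uintptr_t DemoDatum;

/* Pack and unpack a 32-bit integer, in the spirit of Int32GetDatum/DatumGetInt32. */
static inline DemoDatum
demo_int32_get_datum(int32_t v)
{
    return (DemoDatum) (uint32_t) v;
}

static inline int32_t
demo_datum_get_int32(DemoDatum d)
{
    return (int32_t) (uint32_t) d;
}

/* Three-way comparison: -1 if x < y, 1 if x > y, 0 if equal. */
static int
demo_int32_cmp(DemoDatum x, DemoDatum y)
{
    int32_t     xx = demo_datum_get_int32(x);
    int32_t     yy = demo_datum_get_int32(y);

    if (xx < yy)
        return -1;
    if (xx > yy)
        return 1;
    return 0;
}
```

Explicit comparisons are used rather than returning `xx - yy`, because the subtraction can overflow for operands of opposite sign.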

◆ ssup_datum_signed_cmp()

int ssup_datum_signed_cmp(Datum x, Datum y, SortSupport ssup)

Definition at line 3422 of file tuplesort.c.

References DatumGetInt64(), fb(), x, and y.

Referenced by btint8sortsupport(), normalize_datum(), timestamp_sortsupport(), and tuplesort_sort_memtuples().

◆ ssup_datum_unsigned_cmp()

int ssup_datum_unsigned_cmp(Datum x, Datum y, SortSupport ssup)

Definition at line 3411 of file tuplesort.c.

Referenced by bytea_sortsupport(), gist_point_sortsupport(), macaddr_sortsupport(), network_sortsupport(), normalize_datum(), tuplesort_sort_memtuples(), uuid_sortsupport(), and varstr_sortsupport().

◆ tuplesort_attach_shared()

void tuplesort_attach_shared(Sharedsort *shared, dsm_segment *seg)

Definition at line 3233 of file tuplesort.c.

References Sharedsort::fileset, and SharedFileSetAttach().

Referenced by _brin_parallel_build_main(), _bt_parallel_build_main(), and _gin_parallel_build_main().

◆ tuplesort_begin_batch()

static

Definition at line 652 of file tuplesort.c.

References ALLOCSET_DEFAULT_SIZES, AllocSetContextCreate, BumpContextCreate(), elog, ERROR, fb(), GetMemoryChunkSpace(), INITIAL_MEMTUPSIZE, LACKMEM, MemoryContextSwitchTo(), palloc(), pfree(), TSS_INITIAL, TupleSortUseBumpTupleCxt, and USEMEM.

Referenced by tuplesort_begin_common(), and tuplesort_reset().

◆ tuplesort_begin_common()

Tuplesortstate *tuplesort_begin_common(int workMem, SortCoordinate coordinate, int sortopt)

Definition at line 546 of file tuplesort.c.

References ALLOCSET_DEFAULT_SIZES, AllocSetContextCreate, Assert, CurrentMemoryContext, elog, ERROR, fb(), INITIAL_MEMTUPSIZE, Max, MemoryContextSwitchTo(), palloc0_object, pg_rusage_init(), trace_sort, tuplesort_begin_batch(), TUPLESORT_RANDOMACCESS, and worker_get_identifier().

Referenced by tuplesort_begin_cluster(), tuplesort_begin_datum(), tuplesort_begin_heap(), tuplesort_begin_index_brin(), tuplesort_begin_index_btree(), tuplesort_begin_index_gin(), tuplesort_begin_index_gist(), and tuplesort_begin_index_hash().

◆ tuplesort_end()

void tuplesort_end(Tuplesortstate *state)

Definition at line 847 of file tuplesort.c.

References MemoryContextDelete(), and tuplesort_free().

Referenced by _brin_parallel_merge(), _brin_parallel_scan_and_build(), _bt_parallel_scan_and_sort(), _bt_spooldestroy(), _gin_parallel_merge(), _gin_parallel_scan_and_build(), _gin_process_worker_data(), _h_spooldestroy(), array_sort_internal(), ExecEndAgg(), ExecEndIncrementalSort(), ExecEndSort(), ExecReScanAgg(), ExecReScanSort(), gistbuild(), heapam_relation_copy_for_cluster(), initialize_aggregate(), initialize_phase(), ordered_set_shutdown(), process_ordered_aggregate_multi(), process_ordered_aggregate_single(), and validate_index().

◆ tuplesort_estimate_shared()

Definition at line 3189 of file tuplesort.c.

References add_size(), Assert, fb(), MAXALIGN, and mul_size().

Referenced by _brin_begin_parallel(), _bt_begin_parallel(), and _gin_begin_parallel().

◆ tuplesort_free()

static

Definition at line 793 of file tuplesort.c.

References elog, fb(), FREESTATE, LOG, LogicalTapeSetBlocks(), LogicalTapeSetClose(), MemoryContextReset(), MemoryContextSwitchTo(), pg_rusage_show(), SERIAL, and trace_sort.

Referenced by tuplesort_end(), and tuplesort_reset().

◆ tuplesort_get_stats()

void tuplesort_get_stats(Tuplesortstate *state, TuplesortInstrumentation *stats)

Definition at line 2395 of file tuplesort.c.

References SORT_SPACE_TYPE_DISK, SORT_SPACE_TYPE_MEMORY, SORT_TYPE_EXTERNAL_MERGE, SORT_TYPE_EXTERNAL_SORT, SORT_TYPE_QUICKSORT, SORT_TYPE_STILL_IN_PROGRESS, SORT_TYPE_TOP_N_HEAPSORT, TuplesortInstrumentation::sortMethod, TuplesortInstrumentation::spaceType, TuplesortInstrumentation::spaceUsed, TSS_FINALMERGE, TSS_SORTEDINMEM, TSS_SORTEDONTAPE, and tuplesort_updatemax().

Referenced by ExecSort(), instrumentSortedGroup(), and show_sort_info().

◆ tuplesort_gettuple_common()

bool tuplesort_gettuple_common(Tuplesortstate *state, bool forward, SortTuple *stup)

Definition at line 1366 of file tuplesort.c.

References Assert, elog, ERROR, fb(), getlen(), LogicalTapeBackspace(), LogicalTapeClose(), mergereadnext(), READTUP, RELEASE_SLAB_SLOT, TSS_FINALMERGE, TSS_SORTEDINMEM, TSS_SORTEDONTAPE, tuplesort_heap_delete_top(), tuplesort_heap_replace_top(), TUPLESORT_RANDOMACCESS, and WORKER.

Referenced by tuplesort_getbrintuple(), tuplesort_getdatum(), tuplesort_getgintuple(), tuplesort_getheaptuple(), tuplesort_getindextuple(), tuplesort_gettupleslot(), and tuplesort_skiptuples().

◆ tuplesort_heap_delete_top()

static

Definition at line 3046 of file tuplesort.c.

References tuplesort_heap_replace_top().

Referenced by mergeonerun(), sort_bounded_heap(), and tuplesort_gettuple_common().

◆ tuplesort_heap_insert()

static

Definition at line 3011 of file tuplesort.c.

References Assert, CHECK_FOR_INTERRUPTS, COMPARETUP, i, and j.

Referenced by beginmerge(), and make_bounded_heap().

◆ tuplesort_heap_replace_top()

static

Definition at line 3070 of file tuplesort.c.

References Assert, CHECK_FOR_INTERRUPTS, COMPARETUP, i, and j.

Referenced by make_bounded_heap(), mergeonerun(), tuplesort_gettuple_common(), tuplesort_heap_delete_top(), and tuplesort_puttuple_common().
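The three heap routines above form a binary-heap toolkit: insert, replace the top, and delete the top (which, per its References line, is built on replace-top). A minimal sketch of insert and replace-top on a plain int min-heap follows. It illustrates the general technique only; the `demo_*` names are hypothetical and the real functions operate on SortTuple entries via COMPARETUP.

```c
#include <stddef.h>

/*
 * Replace the root of a min-heap of n elements with "value" and sift it
 * down until the heap property holds again.
 */
static void
demo_heap_replace_top(int *heap, size_t n, int value)
{
    size_t      i = 0;

    if (n == 0)
        return;
    for (;;)
    {
        size_t      j = 2 * i + 1;  /* left child */

        if (j >= n)
            break;
        if (j + 1 < n && heap[j + 1] < heap[j])
            j++;                    /* pick the smaller child */
        if (value <= heap[j])
            break;
        heap[i] = heap[j];          /* move child up into the hole */
        i = j;
    }
    heap[i] = value;
}

/*
 * Append "value" and sift it up. The caller must ensure the array has
 * room for one more element.
 */
static void
demo_heap_insert(int *heap, size_t *n, int value)
{
    size_t      j = (*n)++;

    while (j > 0)
    {
        size_t      i = (j - 1) / 2;    /* parent */

        if (value >= heap[i])
            break;
        heap[j] = heap[i];              /* move parent down into the hole */
        j = i;
    }
    heap[j] = value;
}
```

Replacing the top in one pass does a single sift-down, which is cheaper than a delete followed by an insert when a merge step consumes one tuple and immediately supplies the next.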

◆ tuplesort_initialize_shared()

void tuplesort_initialize_shared(Sharedsort *shared, int nWorkers, dsm_segment *seg)

Definition at line 3210 of file tuplesort.c.

References Assert, Sharedsort::currentWorker, fb(), Sharedsort::fileset, TapeShare::firstblocknumber, i, Sharedsort::mutex, Sharedsort::nTapes, SharedFileSetInit(), SpinLockInit, Sharedsort::tapes, and Sharedsort::workersFinished.

Referenced by _brin_begin_parallel(), _bt_begin_parallel(), and _gin_begin_parallel().

◆ tuplesort_markpos()

void tuplesort_markpos(Tuplesortstate *state)

Definition at line 2331 of file tuplesort.c.

References Assert, elog, ERROR, LogicalTapeTell(), MemoryContextSwitchTo(), TSS_SORTEDINMEM, TSS_SORTEDONTAPE, and TUPLESORT_RANDOMACCESS.

Referenced by ExecSortMarkPos().

◆ tuplesort_merge_order()

Definition at line 1674 of file tuplesort.c.

References fb(), Max, MAXORDER, MERGE_BUFFER_SIZE, Min, MINORDER, and TAPE_BUFFER_OVERHEAD.

Referenced by cost_tuplesort(), and inittapes().
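tuplesort_merge_order() references Min, Max, MINORDER, MAXORDER, MERGE_BUFFER_SIZE, and TAPE_BUFFER_OVERHEAD, which suggests it derives a merge fan-in from available memory and clamps it to a fixed range. The sketch below shows that clamp pattern only. The function `demo_merge_order` and its parameters are hypothetical, and the per-tape cost is passed in because this page does not show how the real function combines the buffer macros.

```c
#include <stdint.h>

/*
 * Divide the memory budget by the assumed per-tape cost, then clamp the
 * result to [min_order, max_order]. per_tape_bytes must be positive.
 */
static int
demo_merge_order(int64_t allowed_mem, int64_t per_tape_bytes,
                 int min_order, int max_order)
{
    int64_t     order = allowed_mem / per_tape_bytes;

    if (order < min_order)
        order = min_order;      /* never merge fewer than min_order runs */
    if (order > max_order)
        order = max_order;      /* cap the fan-in regardless of memory */
    return (int) order;
}
```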

◆ tuplesort_method_name()

const char *tuplesort_method_name(TuplesortMethod m)

Definition at line 2439 of file tuplesort.c.

References SORT_TYPE_EXTERNAL_MERGE, SORT_TYPE_EXTERNAL_SORT, SORT_TYPE_QUICKSORT, SORT_TYPE_STILL_IN_PROGRESS, and SORT_TYPE_TOP_N_HEAPSORT.

Referenced by show_incremental_sort_group_info(), and show_sort_info().

◆ tuplesort_performsort()

void tuplesort_performsort(Tuplesortstate *state)

Definition at line 1259 of file tuplesort.c.

References dumptuples(), elog, ERROR, fb(), inittapes(), leader_takeover_tapes(), LOG, MemoryContextSwitchTo(), mergeruns(), pg_rusage_show(), SERIAL, sort_bounded_heap(), trace_sort, TSS_BOUNDED, TSS_BUILDRUNS, TSS_FINALMERGE, TSS_INITIAL, TSS_SORTEDINMEM, TSS_SORTEDONTAPE, tuplesort_sort_memtuples(), WORKER, and worker_nomergeruns().

Referenced by _brin_parallel_merge(), _brin_parallel_scan_and_build(), _bt_leafbuild(), _bt_parallel_scan_and_sort(), _gin_parallel_merge(), _gin_parallel_scan_and_build(), _gin_process_worker_data(), _h_indexbuild(), array_sort_internal(), ExecIncrementalSort(), ExecSort(), gistbuild(), heapam_relation_copy_for_cluster(), hypothetical_dense_rank_final(), hypothetical_rank_common(), initialize_phase(), mode_final(), percentile_cont_final_common(), percentile_cont_multi_final_common(), percentile_disc_final(), percentile_disc_multi_final(), process_ordered_aggregate_multi(), process_ordered_aggregate_single(), switchToPresortedPrefixMode(), and validate_index().

◆ tuplesort_puttuple_common()

void tuplesort_puttuple_common(Tuplesortstate *state, SortTuple *tuple, bool useAbbrev, Size tuplen)

Definition at line 1065 of file tuplesort.c.

References Assert, CHECK_FOR_INTERRUPTS, COMPARETUP, consider_abort_common(), SortTuple::datum1, dumptuples(), elog, ERROR, fb(), free_sort_tuple(), grow_memtuples(), inittapes(), LACKMEM, LEADER, LOG, make_bounded_heap(), MemoryContextSwitchTo(), pg_rusage_show(), REMOVEABBREV, trace_sort, TSS_BOUNDED, TSS_BUILDRUNS, TSS_INITIAL, tuplesort_heap_replace_top(), and USEMEM.

Referenced by tuplesort_putbrintuple(), tuplesort_putdatum(), tuplesort_putgintuple(), tuplesort_putheaptuple(), tuplesort_putindextuplevalues(), and tuplesort_puttupleslot().

◆ tuplesort_readtup_alloc()

void *tuplesort_readtup_alloc(Tuplesortstate *state, Size tuplen)

Definition at line 3155 of file tuplesort.c.

References Assert, buf, MemoryContextAlloc(), and SLAB_SLOT_SIZE.

Referenced by readtup_cluster(), readtup_datum(), readtup_heap(), readtup_index(), readtup_index_brin(), and readtup_index_gin().

◆ tuplesort_rescan()

void tuplesort_rescan(Tuplesortstate *state)

Definition at line 2298 of file tuplesort.c.

References Assert, elog, ERROR, fb(), LogicalTapeRewindForRead(), MemoryContextSwitchTo(), TSS_SORTEDINMEM, TSS_SORTEDONTAPE, and TUPLESORT_RANDOMACCESS.

Referenced by ExecReScanSort(), mode_final(), percentile_cont_final_common(), percentile_cont_multi_final_common(), percentile_disc_final(), and percentile_disc_multi_final().

◆ tuplesort_reset()

void tuplesort_reset(Tuplesortstate *state)

Definition at line 915 of file tuplesort.c.

References fb(), tuplesort_begin_batch(), tuplesort_free(), and tuplesort_updatemax().

Referenced by ExecIncrementalSort(), ExecReScanIncrementalSort(), and switchToPresortedPrefixMode().

◆ tuplesort_restorepos()

void tuplesort_restorepos(Tuplesortstate *state)

Definition at line 2362 of file tuplesort.c.

References Assert, elog, ERROR, LogicalTapeSeek(), MemoryContextSwitchTo(), TSS_SORTEDINMEM, TSS_SORTEDONTAPE, and TUPLESORT_RANDOMACCESS.

Referenced by ExecSortRestrPos().

◆ tuplesort_set_bound()

void tuplesort_set_bound(Tuplesortstate *state, int64 bound)

Definition at line 734 of file tuplesort.c.

References Assert, fb(), LEADER, TSS_INITIAL, TUPLESORT_ALLOWBOUNDED, and WORKER.

Referenced by ExecIncrementalSort(), ExecSort(), and switchToPresortedPrefixMode().

◆ tuplesort_skiptuples()

bool tuplesort_skiptuples(Tuplesortstate *state, int64 ntuples, bool forward)

Definition at line 1606 of file tuplesort.c.

References Assert, CHECK_FOR_INTERRUPTS, elog, ERROR, fb(), MemoryContextSwitchTo(), TSS_FINALMERGE, TSS_SORTEDINMEM, TSS_SORTEDONTAPE, tuplesort_gettuple_common(), and WORKER.

Referenced by percentile_cont_final_common(), percentile_cont_multi_final_common(), percentile_disc_final(), and percentile_disc_multi_final().

◆ tuplesort_sort_memtuples()

static

Definition at line 2958 of file tuplesort.c.

References Assert, SortSupportData::comparator, fb(), LEADER, QSORT_THRESHOLD, radix_sort_tuple(), ssup_datum_int32_cmp(), ssup_datum_signed_cmp(), ssup_datum_unsigned_cmp(), and verify_memtuples_sorted().

Referenced by dumptuples(), and tuplesort_performsort().

◆ tuplesort_space_type_name()

const char *tuplesort_space_type_name(TuplesortSpaceType t)

Definition at line 2462 of file tuplesort.c.

References Assert, SORT_SPACE_TYPE_DISK, and SORT_SPACE_TYPE_MEMORY.

Referenced by show_incremental_sort_group_info(), and show_sort_info().

◆ tuplesort_updatemax()

static

Definition at line 864 of file tuplesort.c.

References fb(), and LogicalTapeSetBlocks().

Referenced by tuplesort_get_stats(), and tuplesort_reset().

◆ tuplesort_used_bound()

bool tuplesort_used_bound(Tuplesortstate *state)

Definition at line 782 of file tuplesort.c.

Referenced by ExecIncrementalSort().

◆ verify_memtuples_sorted()

static

Definition at line 2941 of file tuplesort.c.

References Assert, and COMPARETUP.

Referenced by tuplesort_sort_memtuples().

◆ worker_freeze_result_tape()

static

Definition at line 3281 of file tuplesort.c.

References Assert, fb(), LogicalTapeFreeze(), Sharedsort::mutex, output, pfree(), SpinLockAcquire, SpinLockRelease, Sharedsort::tapes, WORKER, and Sharedsort::workersFinished.

Referenced by mergeruns(), and worker_nomergeruns().

◆ worker_get_identifier()

static

Definition at line 3253 of file tuplesort.c.

References Assert, Sharedsort::currentWorker, Sharedsort::mutex, SpinLockAcquire, SpinLockRelease, and WORKER.

Referenced by tuplesort_begin_common().
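worker_get_identifier() references Sharedsort::mutex, Sharedsort::currentWorker, SpinLockAcquire, and SpinLockRelease, which suggests each worker claims the next counter value under a spinlock. A standalone sketch of that pattern with a C11 `atomic_flag` spinlock follows. `DemoShared` and the `demo_*` functions are hypothetical, and PostgreSQL's own spinlock primitives are replaced here by `<stdatomic.h>`.

```c
#include <stdatomic.h>

/* Hypothetical stand-in for the shared state; not the Sharedsort struct. */
typedef struct DemoShared
{
    atomic_flag mutex;          /* spinlock protecting currentWorker */
    int         currentWorker;  /* next identifier to hand out */
} DemoShared;

static void
demo_shared_init(DemoShared *shared)
{
    atomic_flag_clear(&shared->mutex);
    shared->currentWorker = 0;
}

/* Hand out consecutive identifiers, one per caller. */
static int
demo_worker_get_identifier(DemoShared *shared)
{
    int         worker;

    /* Spin until the flag was previously clear, i.e. the lock is ours. */
    while (atomic_flag_test_and_set_explicit(&shared->mutex,
                                             memory_order_acquire))
        ;
    worker = shared->currentWorker++;
    atomic_flag_clear_explicit(&shared->mutex, memory_order_release);

    return worker;
}
```

The critical section is a single increment, which is the kind of very short operation a spinlock suits.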

◆ worker_nomergeruns()

static

Definition at line 3319 of file tuplesort.c.

References Assert, fb(), WORKER, and worker_freeze_result_tape().

Referenced by tuplesort_performsort().

Variable Documentation

◆ trace_sort

Definition at line 122 of file tuplesort.c.

Referenced by bytea_abbrev_abort(), dumptuples(), inittapes(), macaddr_abbrev_abort(), mergeruns(), network_abbrev_abort(), numeric_abbrev_abort(), tuplesort_begin_cluster(), tuplesort_begin_common(), tuplesort_begin_datum(), tuplesort_begin_heap(), tuplesort_begin_index_brin(), tuplesort_begin_index_btree(), tuplesort_begin_index_gin(), tuplesort_begin_index_gist(), tuplesort_begin_index_hash(), tuplesort_free(), tuplesort_performsort(), tuplesort_puttuple_common(), uuid_abbrev_abort(), and varstr_abbrev_abort().