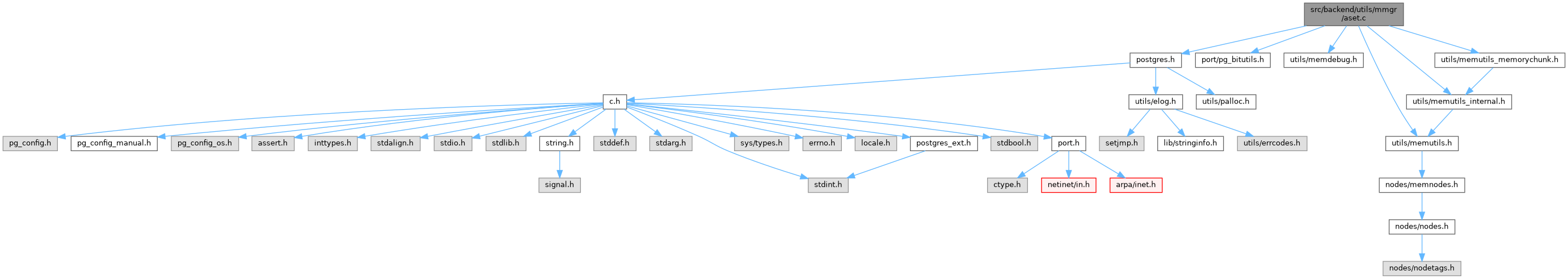

```c
#include "postgres.h"
#include "port/pg_bitutils.h"
#include "utils/memdebug.h"
#include "utils/memutils.h"
#include "utils/memutils_internal.h"
#include "utils/memutils_memorychunk.h"
```


Data Structures

| Kind | Name |
|---|---|
| struct | AllocFreeListLink |
| struct | AllocSetContext |
| struct | AllocBlockData |
| struct | AllocSetFreeList |

Variables

| Type | Name |
|---|---|
| static AllocSetFreeList | context_freelists[2] |

Macro Definition Documentation

◆ ALLOC_BLOCKHDRSZ

◆ ALLOC_CHUNK_FRACTION

◆ ALLOC_CHUNK_LIMIT

| #define ALLOC_CHUNK_LIMIT (1 << (ALLOCSET_NUM_FREELISTS-1+ALLOC_MINBITS)) |
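This page shows the expression for `ALLOC_CHUNK_LIMIT` but not the values of `ALLOC_MINBITS` or `ALLOCSET_NUM_FREELISTS`. A minimal sketch, assuming `ALLOC_MINBITS = 3` and `ALLOCSET_NUM_FREELISTS = 11` (values taken as assumptions, not shown on this page), evaluates the limit; the helper `alloc_chunk_limit()` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed values: this page lists these macros without their bodies. */
#define ALLOC_MINBITS 3            /* smallest chunk: 1 << 3 = 8 bytes */
#define ALLOCSET_NUM_FREELISTS 11  /* freelist indexes 0..10 */

/* Same expression as ALLOC_CHUNK_LIMIT on this page. */
#define ALLOC_CHUNK_LIMIT (1 << (ALLOCSET_NUM_FREELISTS-1+ALLOC_MINBITS))

/* Hypothetical helper: largest request served from a power-of-two bucket. */
static size_t alloc_chunk_limit(void)
{
    /* 1 << (11 - 1 + 3) == 1 << 13 == 8192 */
    return (size_t) ALLOC_CHUNK_LIMIT;
}
```

Under these assumptions the top freelist bucket, and therefore the largest freelist-managed request, is 8192 bytes.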

◆ ALLOC_CHUNKHDRSZ

| #define ALLOC_CHUNKHDRSZ sizeof(MemoryChunk) |

◆ ALLOC_MINBITS

◆ AllocBlockIsValid

| #define AllocBlockIsValid(block) ((block) && AllocSetIsValid((block)->aset)) |

Definition at line 203 of file aset.c.

◆ ALLOCSET_NUM_FREELISTS

◆ AllocSetIsValid

| #define AllocSetIsValid(set) ((set) && IsA(set, AllocSetContext)) |

◆ ExternalChunkGetBlock

| #define ExternalChunkGetBlock(chunk) (AllocBlock) ((char *) chunk - ALLOC_BLOCKHDRSZ) |
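`ExternalChunkGetBlock` subtracts `ALLOC_BLOCKHDRSZ` from the chunk pointer, which only works if an external (large) chunk's header sits immediately after its block header. The sketch below assumes that layout, `[block header][chunk header][payload]`, and uses hypothetical stand-in types (`DemoBlock`, `DemoChunk`) and a hypothetical `demo_alloc_large()` to show the round trip; it is not PostgreSQL's actual `AllocSetAllocLarge()`.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-ins for AllocBlockData and MemoryChunk. */
typedef struct DemoBlock
{
    void       *aset;           /* owning context */
    struct DemoBlock *prev;
    struct DemoBlock *next;
    char       *freeptr;        /* start of free space in this block */
    char       *endptr;         /* end of space in this block */
} DemoBlock;

typedef struct DemoChunk
{
    uint64_t    hdrmask;        /* packed header word */
} DemoChunk;

/* The real code MAXALIGNs this; the sketch assumes it is already aligned. */
#define DEMO_BLOCKHDRSZ sizeof(DemoBlock)

/* Same arithmetic as ExternalChunkGetBlock: step back over the block header. */
#define DemoExternalChunkGetBlock(chunk) \
    ((DemoBlock *) ((char *) (chunk) - DEMO_BLOCKHDRSZ))

/* Give one large request its own block: [block hdr][chunk hdr][payload]. */
static DemoChunk *demo_alloc_large(size_t size, DemoBlock **blockp)
{
    size_t      total = DEMO_BLOCKHDRSZ + sizeof(DemoChunk) + size;
    DemoBlock  *block = malloc(total);

    if (block == NULL)
        return NULL;
    block->aset = NULL;
    block->prev = block->next = NULL;
    block->freeptr = block->endptr = (char *) block + total;  /* block is full */
    *blockp = block;
    return (DemoChunk *) ((char *) block + DEMO_BLOCKHDRSZ);
}
```

Because the block holds exactly one chunk, recovering the block needs no lookup table, only a fixed negative offset.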

◆ FIRST_BLOCKHDRSZ

| #define FIRST_BLOCKHDRSZ |

◆ FreeListIdxIsValid

| #define FreeListIdxIsValid(fidx) ((fidx) >= 0 && (fidx) < ALLOCSET_NUM_FREELISTS) |

◆ GetChunkSizeFromFreeListIdx

| #define GetChunkSizeFromFreeListIdx(fidx) ((((Size) 1) << ALLOC_MINBITS) << (fidx)) |
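`FreeListIdxIsValid` and `GetChunkSizeFromFreeListIdx` together define the bucket sizes: index 0 holds the smallest chunks and each index doubles the size. A small sketch, assuming `ALLOC_MINBITS = 3` and `ALLOCSET_NUM_FREELISTS = 11` (not shown on this page) and using `size_t` as a stand-in for PostgreSQL's `Size`; `bucket_size()` is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>

typedef size_t Size;               /* stand-in for PostgreSQL's Size */

#define ALLOC_MINBITS 3            /* assumed value */
#define ALLOCSET_NUM_FREELISTS 11  /* assumed value */

/* Same expressions as the macros documented on this page. */
#define FreeListIdxIsValid(fidx) \
    ((fidx) >= 0 && (fidx) < ALLOCSET_NUM_FREELISTS)
#define GetChunkSizeFromFreeListIdx(fidx) \
    ((((Size) 1) << ALLOC_MINBITS) << (fidx))

/* Hypothetical helper: bucket size for freelist fidx, or 0 if out of range. */
static Size bucket_size(int fidx)
{
    if (!FreeListIdxIsValid(fidx))
        return 0;
    return GetChunkSizeFromFreeListIdx(fidx);   /* 8, 16, 32, ..., 8192 */
}
```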

◆ GetFreeListLink

| #define GetFreeListLink(chkptr) (AllocFreeListLink *) ((char *) (chkptr) + ALLOC_CHUNKHDRSZ) |
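`GetFreeListLink` places the freelist link in the chunk's payload, just past its header, so a freed chunk needs no extra memory to sit on a freelist. The sketch below illustrates that intrusive LIFO list under stated assumptions: `DemoChunk`, `DemoFreeListLink`, `demo_push()` and `demo_pop()` are hypothetical stand-ins, not PostgreSQL's own functions.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-ins for MemoryChunk and AllocFreeListLink. */
typedef struct DemoChunk
{
    uint64_t    hdrmask;        /* chunk header: stays intact while free */
} DemoChunk;

typedef struct DemoFreeListLink
{
    DemoChunk  *next;           /* next free chunk of the same size */
} DemoFreeListLink;

#define DEMO_CHUNKHDRSZ sizeof(DemoChunk)

/* Same arithmetic as GetFreeListLink: the link lives just past the header. */
#define DemoGetFreeListLink(chkptr) \
    ((DemoFreeListLink *) ((char *) (chkptr) + DEMO_CHUNKHDRSZ))

/* Freeing pushes the chunk; its payload now holds the previous head. */
static void demo_push(DemoChunk **head, DemoChunk *chunk)
{
    DemoGetFreeListLink(chunk)->next = *head;
    *head = chunk;
}

/* Allocating pops the most recently freed chunk, or returns NULL. */
static DemoChunk *demo_pop(DemoChunk **head)
{
    DemoChunk  *chunk = *head;

    if (chunk != NULL)
        *head = DemoGetFreeListLink(chunk)->next;
    return chunk;
}
```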

◆ IsKeeperBlock

| #define IsKeeperBlock(set, block) ((block) == (KeeperBlock(set))) |

◆ KeeperBlock

| #define KeeperBlock(set) ((AllocBlock) (((char *) set) + MAXALIGN(sizeof(AllocSetContext)))) |
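`KeeperBlock` computes the keeper block's address as a fixed offset from the context itself, which implies the context header and the keeper block share one allocation. A minimal sketch of that layout, assuming an 8-byte `MAXALIGN` and using hypothetical types and a hypothetical `demo_create()`; the real `AllocSetContextCreateInternal()` does considerably more.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Assumes an 8-byte MAXALIGN; PostgreSQL derives the real value at build time. */
#define DEMO_MAXALIGN(len) (((size_t) (len) + 7) & ~((size_t) 7))

/* Hypothetical stand-ins for AllocBlockData and AllocSetContext. */
typedef struct DemoBlock
{
    struct DemoBlock *next;
    char       *freeptr;        /* first unused byte */
    char       *endptr;         /* one past the last usable byte */
} DemoBlock;

typedef struct DemoSet
{
    const char *name;
    DemoBlock  *blocks;         /* head of the block list */
} DemoSet;

/* Same arithmetic as KeeperBlock and IsKeeperBlock. */
#define DemoKeeperBlock(set) \
    ((DemoBlock *) (((char *) (set)) + DEMO_MAXALIGN(sizeof(DemoSet))))
#define DemoIsKeeperBlock(set, block) ((block) == DemoKeeperBlock(set))

/* One malloc holds the context header, the keeper block header, and its space. */
static DemoSet *demo_create(size_t total)
{
    size_t      hdrsz = DEMO_MAXALIGN(sizeof(DemoSet)) +
                        DEMO_MAXALIGN(sizeof(DemoBlock));
    DemoSet    *set;
    DemoBlock  *keeper;

    if (total < hdrsz || (set = malloc(total)) == NULL)
        return NULL;
    keeper = DemoKeeperBlock(set);
    keeper->next = NULL;
    keeper->freeptr = (char *) set + hdrsz;
    keeper->endptr = (char *) set + total;
    set->name = "demo";
    set->blocks = keeper;       /* the keeper starts the block list */
    return set;
}
```

Since the keeper block lives inside the context's own allocation, a single `free()` of the context releases it too; it can never be freed on its own.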

◆ MAX_FREE_CONTEXTS

Typedef Documentation

◆ AllocBlock

◆ AllocBlockData

◆ AllocFreeListLink

◆ AllocPointer

◆ AllocSet

◆ AllocSetContext

◆ AllocSetFreeList

Function Documentation

◆ AllocSetAlloc()

| void * AllocSetAlloc(MemoryContext context, Size size, int flags) |

Definition at line 1014 of file aset.c.

References ALLOC_CHUNKHDRSZ, AllocSetContext::allocChunkLimit, AllocSetAllocChunkFromBlock(), AllocSetAllocFromNewBlock(), AllocSetAllocLarge(), AllocSetFreeIndex(), AllocSetIsValid, Assert, AllocSetContext::blocks, AllocBlockData::endptr, fb(), AllocSetContext::freelist, AllocBlockData::freeptr, GetChunkSizeFromFreeListIdx, GetFreeListLink, MemoryChunkGetPointer, MemoryChunkGetValue(), unlikely, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

Referenced by AllocSetRealloc().
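The References list for `AllocSetAlloc()` suggests four paths: a dedicated block for requests over `allocChunkLimit` (`AllocSetAllocLarge()`), reuse of a chunk from `freelist`, carving from the current block when `endptr - freeptr` leaves room (`AllocSetAllocChunkFromBlock()`), or starting a new block (`AllocSetAllocFromNewBlock()`). The sketch below encodes that decision order as our reading of the reference list, not as confirmed behavior; `DemoAllocPath` and `demo_choose_path()` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef enum DemoAllocPath
{
    PATH_LARGE,            /* dedicated block, cf. AllocSetAllocLarge() */
    PATH_FREELIST,         /* reuse a freed chunk of the right size */
    PATH_CURRENT_BLOCK,    /* carve from the active block */
    PATH_NEW_BLOCK         /* active block too full, cf. AllocSetAllocFromNewBlock() */
} DemoAllocPath;

/* Hypothetical: choose the path for one request of `size` bytes. */
static DemoAllocPath demo_choose_path(size_t size, size_t alloc_chunk_limit,
                                      bool freelist_nonempty,
                                      size_t chunk_size, size_t chunk_hdr_size,
                                      size_t avail_space)
{
    if (size > alloc_chunk_limit)
        return PATH_LARGE;
    if (freelist_nonempty)
        return PATH_FREELIST;
    /* the chunk needs room for its bucket size plus its header */
    if (avail_space < chunk_size + chunk_hdr_size)
        return PATH_NEW_BLOCK;
    return PATH_CURRENT_BLOCK;
}
```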

◆ AllocSetAllocChunkFromBlock()

inline static

Definition at line 818 of file aset.c.

References ALLOC_CHUNKHDRSZ, Assert, AllocBlockData::endptr, fb(), AllocBlockData::freeptr, MCTX_ASET_ID, MemoryChunkGetPointer, MemoryChunkSetHdrMask(), VALGRIND_MAKE_MEM_NOACCESS, and VALGRIND_MAKE_MEM_UNDEFINED.

Referenced by AllocSetAlloc(), and AllocSetAllocFromNewBlock().

◆ AllocSetAllocFromNewBlock()

static

Definition at line 863 of file aset.c.

References ALLOC_BLOCKHDRSZ, ALLOC_CHUNKHDRSZ, ALLOC_MINBITS, AllocSetAllocChunkFromBlock(), AllocSetFreeIndex(), AllocBlockData::aset, Assert, AllocSetContext::blocks, AllocBlockData::endptr, fb(), AllocSetContext::freelist, AllocBlockData::freeptr, GetChunkSizeFromFreeListIdx, GetFreeListLink, InvalidAllocSize, malloc, AllocSetContext::maxBlockSize, MCTX_ASET_ID, MemoryContextData::mem_allocated, MemoryChunkSetHdrMask(), MemoryContextAllocationFailure(), AllocBlockData::next, AllocSetContext::nextBlockSize, AllocBlockData::prev, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MAKE_MEM_NOACCESS, VALGRIND_MAKE_MEM_UNDEFINED, and VALGRIND_MEMPOOL_ALLOC.

Referenced by AllocSetAlloc().
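`AllocSetAllocFromNewBlock()` references `nextBlockSize` and `maxBlockSize`, which points to a growing block-size schedule. A minimal sketch, assuming sizes double on each new block, the stored next size is capped at `maxBlockSize`, and a block is enlarged further if the request would not fit; the helper `demo_next_block_size()` and the `required_size` parameter are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Hypothetical helper: return the size for the next block and advance the
 * schedule. Sizes double each time, but the stored next size is capped at
 * max_block_size.
 */
static size_t demo_next_block_size(size_t *next_block_size,
                                   size_t max_block_size,
                                   size_t required_size)
{
    size_t      blksize = *next_block_size;

    *next_block_size <<= 1;                   /* double for next time */
    if (*next_block_size > max_block_size)
        *next_block_size = max_block_size;    /* but never past the cap */

    while (blksize < required_size)           /* this block must fit the request */
        blksize <<= 1;
    return blksize;
}
```

Starting from 8192 with a 65536 cap, successive calls return 8192, 16384, 32768, 65536, 65536: early blocks stay small for contexts that never grow, while busy contexts quickly reach the cap.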

◆ AllocSetAllocLarge()

static

Definition at line 737 of file aset.c.

References ALLOC_BLOCKHDRSZ, ALLOC_CHUNKHDRSZ, AllocBlockData::aset, Assert, AllocSetContext::blocks, AllocBlockData::endptr, fb(), AllocBlockData::freeptr, malloc, MAXALIGN, MCTX_ASET_ID, MemoryContextData::mem_allocated, MemoryChunkGetPointer, MemoryChunkSetHdrMaskExternal(), MemoryContextAllocationFailure(), MemoryContextCheckSize(), AllocBlockData::next, AllocBlockData::prev, VALGRIND_MAKE_MEM_NOACCESS, and VALGRIND_MEMPOOL_ALLOC.

Referenced by AllocSetAlloc().

◆ AllocSetContextCreateInternal()

| MemoryContext AllocSetContextCreateInternal(MemoryContext parent, const char *name, Size minContextSize, Size initBlockSize, Size maxBlockSize) |

Definition at line 343 of file aset.c.

References ALLOC_BLOCKHDRSZ, ALLOC_CHUNK_FRACTION, ALLOC_CHUNK_LIMIT, ALLOC_CHUNKHDRSZ, ALLOC_MINBITS, AllocSetContext::allocChunkLimit, AllocHugeSizeIsValid, ALLOCSET_DEFAULT_INITSIZE, ALLOCSET_DEFAULT_MINSIZE, ALLOCSET_SEPARATE_THRESHOLD, ALLOCSET_SMALL_INITSIZE, ALLOCSET_SMALL_MINSIZE, AllocBlockData::aset, Assert, AllocSetContext::blocks, context_freelists, AllocBlockData::endptr, ereport, errcode(), errdetail(), errmsg(), ERROR, fb(), FIRST_BLOCKHDRSZ, AllocSetFreeList::first_free, AllocSetContext::freelist, AllocSetContext::freeListIndex, AllocBlockData::freeptr, AllocSetContext::header, AllocSetContext::initBlockSize, KeeperBlock, malloc, Max, MAXALIGN, AllocSetContext::maxBlockSize, MCTX_ASET_ID, MEMORYCHUNK_MAX_BLOCKOFFSET, MemoryContextCreate(), MemoryContextStats(), MemSetAligned, name, AllocBlockData::next, AllocSetContext::nextBlockSize, MemoryContextData::nextchild, AllocSetFreeList::num_free, AllocBlockData::prev, StaticAssertDecl, StaticAssertStmt, TopMemoryContext, VALGRIND_CREATE_MEMPOOL, VALGRIND_MAKE_MEM_NOACCESS, and VALGRIND_MEMPOOL_ALLOC.
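The references to `ALLOC_CHUNK_LIMIT`, `ALLOC_CHUNK_FRACTION`, `maxBlockSize` and `allocChunkLimit` suggest that the per-context chunk limit is derived from the maximum block size. A sketch, assuming the limit starts at `ALLOC_CHUNK_LIMIT` (8192 under assumed constants) and is halved until a chunk plus its header fits in `1/ALLOC_CHUNK_FRACTION` of a maximum-size block's payload, with `ALLOC_CHUNK_FRACTION = 4` assumed; `demo_alloc_chunk_limit()` and the header sizes passed to it are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define ALLOC_CHUNK_LIMIT 8192    /* assumed: 1 << (11 - 1 + 3) */
#define ALLOC_CHUNK_FRACTION 4    /* assumed value; its body is not shown here */

/*
 * Hypothetical helper: halve the per-chunk limit until a chunk plus its
 * header fits in 1/ALLOC_CHUNK_FRACTION of a maximum-size block's payload.
 */
static size_t demo_alloc_chunk_limit(size_t max_block_size,
                                     size_t block_hdr_size,
                                     size_t chunk_hdr_size)
{
    size_t      limit = ALLOC_CHUNK_LIMIT;

    assert(max_block_size > block_hdr_size);
    while (limit > 0 &&           /* guard added for the sketch */
           limit + chunk_hdr_size >
           (max_block_size - block_hdr_size) / ALLOC_CHUNK_FRACTION)
        limit >>= 1;
    return limit;
}
```

With a hypothetical 48-byte block header and 8-byte chunk header, an 8192-byte `maxBlockSize` yields a limit of 1024, while an 8 MB one keeps the full 8192. Requests above the limit go to dedicated blocks instead of wasting most of a small block.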

◆ AllocSetDelete()

| void AllocSetDelete(MemoryContext context) |

Definition at line 634 of file aset.c.

References AllocSetIsValid, Assert, AllocSetContext::blocks, context_freelists, AllocBlockData::endptr, fb(), AllocSetFreeList::first_free, free, AllocSetContext::freeListIndex, AllocBlockData::freeptr, AllocSetContext::header, IsKeeperBlock, MemoryContextData::isReset, KeeperBlock, MAX_FREE_CONTEXTS, MemoryContextData::mem_allocated, MemoryContextResetOnly(), next, AllocBlockData::next, MemoryContextData::nextchild, AllocSetFreeList::num_free, PG_USED_FOR_ASSERTS_ONLY, VALGRIND_DESTROY_MEMPOOL, and VALGRIND_MEMPOOL_FREE.

◆ AllocSetFree()

Definition at line 1109 of file aset.c.

References ALLOC_CHUNKHDRSZ, AllocBlockIsValid, AllocBlockData::aset, Assert, AllocSetContext::blocks, elog, AllocBlockData::endptr, ERROR, ExternalChunkGetBlock, fb(), free, AllocSetContext::freelist, FreeListIdxIsValid, AllocBlockData::freeptr, GetChunkSizeFromFreeListIdx, GetFreeListLink, AllocSetContext::header, InvalidAllocSize, MemoryContextData::mem_allocated, MemoryChunkGetBlock(), MemoryChunkGetValue(), MemoryChunkIsExternal(), MemoryContextData::name, AllocBlockData::next, PointerGetMemoryChunk, AllocBlockData::prev, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MAKE_MEM_NOACCESS, VALGRIND_MEMPOOL_FREE, and WARNING.

Referenced by AllocSetRealloc().

◆ AllocSetFreeIndex()

Definition at line 273 of file aset.c.

References ALLOC_CHUNK_LIMIT, ALLOC_MINBITS, ALLOCSET_NUM_FREELISTS, Assert, fb(), idx(), pg_leftmost_one_pos, pg_leftmost_one_pos32(), and StaticAssertDecl.

Referenced by AllocSetAlloc(), and AllocSetAllocFromNewBlock().
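`AllocSetFreeIndex()` references `pg_leftmost_one_pos32()` and `ALLOC_MINBITS`, which fits a mapping from request size to the smallest power-of-two bucket that holds it. The sketch below is our reconstruction under assumed constants (`ALLOC_MINBITS = 3`, `ALLOCSET_NUM_FREELISTS = 11`); `demo_leftmost_one_pos32()` is a hypothetical portable loop standing in for PostgreSQL's bit-scan helper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define ALLOC_MINBITS 3            /* assumed value */
#define ALLOCSET_NUM_FREELISTS 11  /* assumed value */

/* Portable stand-in for pg_leftmost_one_pos32(): index of the highest set bit. */
static int demo_leftmost_one_pos32(uint32_t word)
{
    int         pos = 0;

    assert(word != 0);
    while (word >>= 1)
        pos++;
    return pos;
}

/* Map a request size to the freelist of the next power-of-two chunk size. */
static int demo_free_index(size_t size)
{
    int         idx;

    if (size > ((size_t) 1 << ALLOC_MINBITS))
    {
        /* sizes 9..16 -> 1, 17..32 -> 2, ..., 4097..8192 -> 10 */
        idx = demo_leftmost_one_pos32((uint32_t) (size - 1)) - ALLOC_MINBITS + 1;
        assert(idx < ALLOCSET_NUM_FREELISTS);
    }
    else
        idx = 0;                   /* requests of up to 8 bytes share list 0 */
    return idx;
}
```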

◆ AllocSetGetChunkContext()

| MemoryContext AllocSetGetChunkContext(void *pointer) |

Definition at line 1492 of file aset.c.

References ALLOC_CHUNKHDRSZ, AllocBlockIsValid, AllocBlockData::aset, Assert, ExternalChunkGetBlock, fb(), AllocSetContext::header, MemoryChunkGetBlock(), MemoryChunkIsExternal(), PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ AllocSetGetChunkSpace()

Definition at line 1521 of file aset.c.

References ALLOC_CHUNKHDRSZ, AllocBlockIsValid, Assert, AllocBlockData::endptr, ExternalChunkGetBlock, fb(), FreeListIdxIsValid, GetChunkSizeFromFreeListIdx, MemoryChunkGetValue(), MemoryChunkIsExternal(), PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ AllocSetIsEmpty()

| bool AllocSetIsEmpty(MemoryContext context) |

Definition at line 1555 of file aset.c.

References AllocSetIsValid, Assert, and MemoryContextData::isReset.

◆ AllocSetRealloc()

Definition at line 1220 of file aset.c.

References ALLOC_BLOCKHDRSZ, ALLOC_CHUNKHDRSZ, AllocBlockIsValid, AllocSetAlloc(), AllocSetFree(), AllocBlockData::aset, Assert, elog, AllocBlockData::endptr, ERROR, ExternalChunkGetBlock, fb(), FreeListIdxIsValid, AllocBlockData::freeptr, GetChunkSizeFromFreeListIdx, AllocSetContext::header, MAXALIGN, MemoryContextData::mem_allocated, MemoryChunkGetBlock(), MemoryChunkGetPointer, MemoryChunkGetValue(), MemoryChunkIsExternal(), MemoryContextAllocationFailure(), MemoryContextCheckSize(), Min, MemoryContextData::name, AllocBlockData::next, PointerGetMemoryChunk, AllocBlockData::prev, realloc, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MAKE_MEM_NOACCESS, VALGRIND_MAKE_MEM_UNDEFINED, VALGRIND_MEMPOOL_CHANGE, and WARNING.
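For chunks that are not external, `AllocSetRealloc()` references `GetChunkSizeFromFreeListIdx`, `AllocSetAlloc()` and `AllocSetFree()`, which suggests a two-way choice: keep the same chunk if the new size still fits its bucket, otherwise allocate, copy, and free. A sketch of just the fit test, assuming `ALLOC_MINBITS = 3` and `ALLOCSET_NUM_FREELISTS = 11`; `demo_realloc_in_place()` is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define ALLOC_MINBITS 3            /* assumed value */
#define ALLOCSET_NUM_FREELISTS 11  /* assumed value */
#define FreeListIdxIsValid(fidx) ((fidx) >= 0 && (fidx) < ALLOCSET_NUM_FREELISTS)
#define GetChunkSizeFromFreeListIdx(fidx) ((((size_t) 1) << ALLOC_MINBITS) << (fidx))

/*
 * Hypothetical helper: a small chunk already owns its whole power-of-two
 * bucket, so a resize that still fits can keep the same pointer; otherwise
 * the data must move to a new chunk.
 */
static bool demo_realloc_in_place(int fidx, size_t new_size)
{
    assert(FreeListIdxIsValid(fidx));
    return GetChunkSizeFromFreeListIdx(fidx) >= new_size;
}
```

For a chunk on freelist 2 (32 bytes), growing to 20 or 32 bytes stays in place, while 33 bytes forces a move.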

◆ AllocSetReset()

| void AllocSetReset(MemoryContext context) |

Definition at line 548 of file aset.c.

References ALLOC_BLOCKHDRSZ, AllocSetIsValid, Assert, AllocSetContext::blocks, AllocBlockData::endptr, fb(), FIRST_BLOCKHDRSZ, free, AllocSetContext::freelist, AllocBlockData::freeptr, AllocSetContext::initBlockSize, IsKeeperBlock, KeeperBlock, MemoryContextData::mem_allocated, MemSetAligned, next, AllocBlockData::next, AllocSetContext::nextBlockSize, PG_USED_FOR_ASSERTS_ONLY, AllocBlockData::prev, VALGRIND_MAKE_MEM_NOACCESS, VALGRIND_MEMPOOL_FREE, and VALGRIND_MEMPOOL_TRIM.
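The references for `AllocSetReset()` (`IsKeeperBlock`, `free`, `freelist`, `MemSetAligned`, `initBlockSize`, `nextBlockSize`) point to a reset that keeps the keeper block, frees the others, clears the freelists, and restarts block sizing. The sketch below illustrates that under assumptions: the types, the `data_start` field and `demo_reset()` are hypothetical simplifications, and `DEMO_NUM_FREELISTS = 11` is assumed.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define DEMO_NUM_FREELISTS 11      /* assumed; cf. ALLOCSET_NUM_FREELISTS */

/* Hypothetical stand-ins for AllocBlockData and AllocSetContext. */
typedef struct DemoBlock
{
    struct DemoBlock *next;
    char       *freeptr;           /* first unused byte */
    char       *data_start;        /* first byte after the block header */
} DemoBlock;

typedef struct DemoSet
{
    DemoBlock  *blocks;
    DemoBlock  *keeper;            /* allocated together with the context */
    void       *freelist[DEMO_NUM_FREELISTS];
    size_t      init_block_size;
    size_t      next_block_size;
} DemoSet;

/* Free every block except the keeper, empty the keeper, and rewind sizing. */
static void demo_reset(DemoSet *set)
{
    DemoBlock  *block = set->blocks;

    for (int i = 0; i < DEMO_NUM_FREELISTS; i++)
        set->freelist[i] = NULL;   /* chunks on these lists are about to vanish */

    while (block != NULL)
    {
        DemoBlock  *next = block->next;

        if (block == set->keeper)
        {
            block->freeptr = block->data_start;  /* keep memory, drop contents */
            block->next = NULL;
        }
        else
            free(block);           /* other blocks were malloc'd on their own */
        block = next;
    }
    set->blocks = set->keeper;
    set->next_block_size = set->init_block_size;
}
```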

◆ AllocSetStats()

| void AllocSetStats(MemoryContext context, MemoryStatsPrintFunc printfunc, void *passthru, MemoryContextCounters *totals, bool print_to_stderr) |

Definition at line 1580 of file aset.c.

References ALLOC_CHUNKHDRSZ, ALLOCSET_NUM_FREELISTS, AllocSetIsValid, Assert, AllocSetContext::blocks, AllocBlockData::endptr, fb(), AllocSetContext::freelist, AllocBlockData::freeptr, GetChunkSizeFromFreeListIdx, GetFreeListLink, MAXALIGN, MemoryChunkGetValue(), AllocBlockData::next, snprintf, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

Variable Documentation

◆ context_freelists

static

Definition at line 253 of file aset.c.

Referenced by AllocSetContextCreateInternal(), and AllocSetDelete().
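`context_freelists` is used by both `AllocSetContextCreateInternal()` and `AllocSetDelete()`, and the references to `first_free`, `num_free`, `nextchild` and `MAX_FREE_CONTEXTS` suggest that deleted contexts are parked for reuse on a bounded list. The two entries presumably correspond to the default-size and small-size parameter sets, though this page does not say so. A sketch, assuming a full list is flushed before a new context is parked and `MAX_FREE_CONTEXTS = 100`; all `Demo*` names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define DEMO_MAX_FREE_CONTEXTS 100  /* assumed; cf. MAX_FREE_CONTEXTS */

/* Hypothetical stand-ins for a context header and AllocSetFreeList. */
typedef struct DemoCtx
{
    struct DemoCtx *nextchild;      /* reused as the freelist link */
} DemoCtx;

typedef struct DemoFreeList
{
    int         num_free;           /* current list length */
    DemoCtx    *first_free;         /* list of parked contexts */
} DemoFreeList;

/* "Delete": park the context for reuse, flushing the list first if full. */
static void demo_delete(DemoFreeList *fl, DemoCtx *ctx)
{
    if (fl->num_free >= DEMO_MAX_FREE_CONTEXTS)
    {
        while (fl->first_free != NULL)
        {
            DemoCtx    *old = fl->first_free;

            fl->first_free = old->nextchild;
            free(old);
        }
        fl->num_free = 0;
    }
    ctx->nextchild = fl->first_free;
    fl->first_free = ctx;
    fl->num_free++;
}

/* "Create": reuse a parked context if there is one, else malloc a new one. */
static DemoCtx *demo_create(DemoFreeList *fl)
{
    DemoCtx    *ctx = fl->first_free;

    if (ctx != NULL)
    {
        fl->first_free = ctx->nextchild;
        fl->num_free--;
        return ctx;
    }
    return malloc(sizeof(DemoCtx));
}
```

Reusing a parked context skips the `malloc()`/`free()` pair for workloads that repeatedly create and delete short-lived contexts, while the cap bounds how much idle memory the lists can hold.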