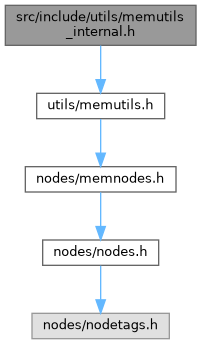

#include "utils/memutils.h"


Macros

| Kind | Definition |
| --- | --- |
| #define | PallocAlignedExtraBytes(alignto) ((alignto) + (sizeof(MemoryChunk) - MAXIMUM_ALIGNOF)) |
| #define | MEMORY_CONTEXT_METHODID_BITS 4 |
| #define | MEMORY_CONTEXT_METHODID_MASK ((((uint64) 1) << MEMORY_CONTEXT_METHODID_BITS) - 1) |

Typedefs

| Kind | Name |
| --- | --- |
| typedef enum MemoryContextMethodID | MemoryContextMethodID |

Macro Definition Documentation

◆ MEMORY_CONTEXT_METHODID_BITS

| #define MEMORY_CONTEXT_METHODID_BITS 4 |

Definition at line 145 of file memutils_internal.h.

◆ MEMORY_CONTEXT_METHODID_MASK

| #define MEMORY_CONTEXT_METHODID_MASK ((((uint64) 1) << MEMORY_CONTEXT_METHODID_BITS) - 1) |

Definition at line 146 of file memutils_internal.h.
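With MEMORY_CONTEXT_METHODID_BITS set to 4, the mask evaluates to 0xF, so it selects the lowest four bits of a 64-bit word. The sketch below illustrates that arithmetic only. The helpers `pack_method_id` and `unpack_method_id` are hypothetical names, the header layout (payload shifted above the ID bits) is an assumption made for the example, and `uint64_t` stands in for the `uint64` type used in the macro.

```c
#include <assert.h>
#include <stdint.h>

/* Values copied from the macro definitions on this page. */
#define MEMORY_CONTEXT_METHODID_BITS 4
#define MEMORY_CONTEXT_METHODID_MASK \
	((((uint64_t) 1) << MEMORY_CONTEXT_METHODID_BITS) - 1)

/*
 * Hypothetical helper: place a method ID in the low bits of a header word.
 * The layout is an assumption for illustration, not the real chunk header.
 */
static uint64_t
pack_method_id(uint64_t payload, unsigned method_id)
{
	/* The ID must fit in MEMORY_CONTEXT_METHODID_BITS bits. */
	assert(method_id <= MEMORY_CONTEXT_METHODID_MASK);
	return (payload << MEMORY_CONTEXT_METHODID_BITS) | method_id;
}

/* Hypothetical helper: recover the method ID by masking the low bits. */
static unsigned
unpack_method_id(uint64_t header)
{
	return (unsigned) (header & MEMORY_CONTEXT_METHODID_MASK);
}
```

Four bits leave room for at most 16 distinct method IDs, which is why the packing helper asserts that the ID does not exceed the mask.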

◆ PallocAlignedExtraBytes

| #define PallocAlignedExtraBytes(alignto) ((alignto) + (sizeof(MemoryChunk) - MAXIMUM_ALIGNOF)) |

Definition at line 104 of file memutils_internal.h.
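The macro can be read as "the requested alignment, plus however much the chunk header exceeds the platform's maximum alignment". The sketch below evaluates it under stated assumptions: `MAXIMUM_ALIGNOF` is set to 8 and `MemoryChunk` is a one-field stand-in struct, both chosen for illustration and not taken from this page. The helper `aligned_request_size` is hypothetical; it shows one plausible way the extra bytes could be added to a request size.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* ASSUMPTION: stand-in value; the real one is platform dependent. */
#define MAXIMUM_ALIGNOF 8

/* ASSUMPTION: stand-in struct; the real MemoryChunk may differ. */
typedef struct MemoryChunk
{
	uint64_t	hdrmask;
} MemoryChunk;

/* Macro body copied from the definition on this page. */
#define PallocAlignedExtraBytes(alignto) \
	((alignto) + (sizeof(MemoryChunk) - MAXIMUM_ALIGNOF))

/*
 * Hypothetical helper: total bytes to request so that an aligned pointer
 * of 'size' bytes can be carved out of the allocation.
 */
static size_t
aligned_request_size(size_t size, size_t alignto)
{
	/* Alignment is expected to be a nonzero power of two. */
	assert(alignto != 0 && (alignto & (alignto - 1)) == 0);
	return size + PallocAlignedExtraBytes(alignto);
}
```

Under these stand-in values the header is exactly MAXIMUM_ALIGNOF bytes, so the extra space equals the requested alignment. A larger header would add the difference on top.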

Typedef Documentation

◆ MemoryContextMethodID

Enumeration Type Documentation

◆ MemoryContextMethodID

Definition at line 121 of file memutils_internal.h.

Function Documentation

◆ AlignedAllocFree()

Definition at line 29 of file alignedalloc.c.

References Assert, elog, fb(), GetMemoryChunkContext(), MemoryChunkGetBlock(), MemoryChunkIsExternal(), name, pfree(), PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MEMPOOL_ALLOC, and WARNING.

◆ AlignedAllocGetChunkContext()

Declared extern.

Definition at line 154 of file alignedalloc.c.

References Assert, fb(), GetMemoryChunkContext(), MemoryChunkGetBlock(), MemoryChunkIsExternal(), PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ AlignedAllocGetChunkSpace()

Definition at line 176 of file alignedalloc.c.

References fb(), GetMemoryChunkSpace(), MemoryChunkGetBlock(), PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ AlignedAllocRealloc()

Definition at line 70 of file alignedalloc.c.

References Assert, fb(), GetMemoryChunkContext(), GetMemoryChunkSpace(), MemoryChunkGetBlock(), MemoryChunkGetValue(), MemoryContextAllocAligned(), MemoryContextAllocationFailure(), Min, PallocAlignedExtraBytes, pfree(), PointerGetMemoryChunk, unlikely, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MAKE_MEM_NOACCESS, and VALGRIND_MEMPOOL_ALLOC.

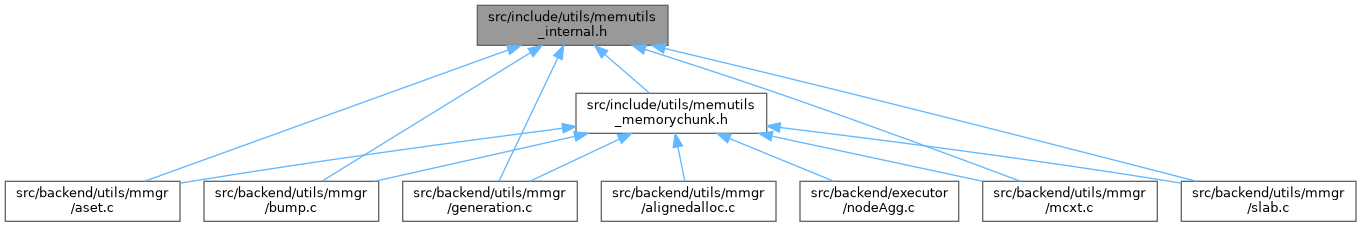

◆ AllocSetAlloc()

Declared extern.

Definition at line 1012 of file aset.c.

References ALLOC_CHUNKHDRSZ, AllocSetContext::allocChunkLimit, AllocSetAllocChunkFromBlock(), AllocSetAllocFromNewBlock(), AllocSetAllocLarge(), AllocSetFreeIndex(), AllocSetIsValid, Assert, AllocSetContext::blocks, AllocBlockData::endptr, fb(), AllocSetContext::freelist, AllocBlockData::freeptr, GetChunkSizeFromFreeListIdx, GetFreeListLink, MemoryChunkGetPointer, MemoryChunkGetValue(), unlikely, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

Referenced by AllocSetRealloc().

◆ AllocSetDelete()

Declared extern.

Definition at line 632 of file aset.c.

References AllocSetIsValid, Assert, AllocSetContext::blocks, context_freelists, AllocBlockData::endptr, fb(), AllocSetFreeList::first_free, free, AllocSetContext::freeListIndex, AllocBlockData::freeptr, AllocSetContext::header, IsKeeperBlock, MemoryContextData::isReset, KeeperBlock, MAX_FREE_CONTEXTS, MemoryContextData::mem_allocated, MemoryContextResetOnly(), next, AllocBlockData::next, MemoryContextData::nextchild, AllocSetFreeList::num_free, PG_USED_FOR_ASSERTS_ONLY, VALGRIND_DESTROY_MEMPOOL, and VALGRIND_MEMPOOL_FREE.

◆ AllocSetFree()

Definition at line 1107 of file aset.c.

References ALLOC_CHUNKHDRSZ, AllocBlockIsValid, AllocBlockData::aset, Assert, AllocSetContext::blocks, elog, AllocBlockData::endptr, ERROR, ExternalChunkGetBlock, fb(), free, AllocSetContext::freelist, FreeListIdxIsValid, AllocBlockData::freeptr, GetChunkSizeFromFreeListIdx, GetFreeListLink, AllocSetContext::header, InvalidAllocSize, MemoryContextData::mem_allocated, MemoryChunkGetBlock(), MemoryChunkGetValue(), MemoryChunkIsExternal(), MemoryContextData::name, AllocBlockData::next, PointerGetMemoryChunk, AllocBlockData::prev, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MAKE_MEM_NOACCESS, VALGRIND_MEMPOOL_FREE, and WARNING.

Referenced by AllocSetRealloc().

◆ AllocSetGetChunkContext()

Declared extern.

Definition at line 1490 of file aset.c.

References ALLOC_CHUNKHDRSZ, AllocBlockIsValid, AllocBlockData::aset, Assert, ExternalChunkGetBlock, fb(), AllocSetContext::header, MemoryChunkGetBlock(), MemoryChunkIsExternal(), PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ AllocSetGetChunkSpace()

Definition at line 1519 of file aset.c.

References ALLOC_CHUNKHDRSZ, AllocBlockIsValid, Assert, AllocBlockData::endptr, ExternalChunkGetBlock, fb(), FreeListIdxIsValid, GetChunkSizeFromFreeListIdx, MemoryChunkGetValue(), MemoryChunkIsExternal(), PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ AllocSetIsEmpty()

Declared extern.

Definition at line 1553 of file aset.c.

References AllocSetIsValid, Assert, and MemoryContextData::isReset.

◆ AllocSetRealloc()

Definition at line 1218 of file aset.c.

References ALLOC_BLOCKHDRSZ, ALLOC_CHUNKHDRSZ, AllocBlockIsValid, AllocSetAlloc(), AllocSetFree(), AllocBlockData::aset, Assert, elog, AllocBlockData::endptr, ERROR, ExternalChunkGetBlock, fb(), FreeListIdxIsValid, AllocBlockData::freeptr, GetChunkSizeFromFreeListIdx, AllocSetContext::header, MAXALIGN, MemoryContextData::mem_allocated, MemoryChunkGetBlock(), MemoryChunkGetPointer, MemoryChunkGetValue(), MemoryChunkIsExternal(), MemoryContextAllocationFailure(), MemoryContextCheckSize(), Min, MemoryContextData::name, AllocBlockData::next, PointerGetMemoryChunk, AllocBlockData::prev, realloc, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MAKE_MEM_NOACCESS, VALGRIND_MAKE_MEM_UNDEFINED, VALGRIND_MEMPOOL_CHANGE, and WARNING.

◆ AllocSetReset()

Declared extern.

Definition at line 546 of file aset.c.

References ALLOC_BLOCKHDRSZ, AllocSetIsValid, Assert, AllocSetContext::blocks, AllocBlockData::endptr, fb(), FIRST_BLOCKHDRSZ, free, AllocSetContext::freelist, AllocBlockData::freeptr, AllocSetContext::initBlockSize, IsKeeperBlock, KeeperBlock, MemoryContextData::mem_allocated, MemSetAligned, next, AllocBlockData::next, AllocSetContext::nextBlockSize, PG_USED_FOR_ASSERTS_ONLY, AllocBlockData::prev, VALGRIND_MAKE_MEM_NOACCESS, VALGRIND_MEMPOOL_FREE, and VALGRIND_MEMPOOL_TRIM.

◆ AllocSetStats()

Declared extern.

Definition at line 1578 of file aset.c.

References ALLOC_CHUNKHDRSZ, ALLOCSET_NUM_FREELISTS, AllocSetIsValid, Assert, AllocSetContext::blocks, AllocBlockData::endptr, fb(), AllocSetContext::freelist, AllocBlockData::freeptr, GetChunkSizeFromFreeListIdx, GetFreeListLink, MAXALIGN, MemoryChunkGetValue(), AllocBlockData::next, snprintf, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ BumpAlloc()

Declared extern.

Definition at line 517 of file bump.c.

References BumpContext::allocChunkLimit, Assert, BumpContext::blocks, Bump_CHUNKHDRSZ, BumpAllocChunkFromBlock(), BumpAllocFromNewBlock(), BumpAllocLarge(), BumpBlockFreeBytes(), BumpIsValid, dlist_container, dlist_head_node(), fb(), and MAXALIGN.

◆ BumpDelete()

Declared extern.

Definition at line 294 of file bump.c.

References BumpReset(), free, and VALGRIND_DESTROY_MEMPOOL.

◆ BumpFree()

◆ BumpGetChunkContext()

Declared extern.

◆ BumpGetChunkSpace()

◆ BumpIsEmpty()

Declared extern.

Definition at line 689 of file bump.c.

References Assert, BumpContext::blocks, BumpBlockIsEmpty(), BumpIsValid, dlist_iter::cur, dlist_container, and dlist_foreach.

◆ BumpRealloc()

◆ BumpReset()

Declared extern.

Definition at line 251 of file bump.c.

References Assert, BumpContext::blocks, BumpBlockFree(), BumpBlockMarkEmpty(), BumpIsValid, dlist_container, dlist_foreach_modify, dlist_has_next(), dlist_head_node(), dlist_is_empty(), fb(), FIRST_BLOCKHDRSZ, BumpContext::initBlockSize, IsKeeperBlock, BumpContext::nextBlockSize, and VALGRIND_MEMPOOL_TRIM.

Referenced by BumpDelete().

◆ BumpStats()

Declared extern.

Definition at line 717 of file bump.c.

References Assert, BumpContext::blocks, BumpIsValid, dlist_iter::cur, dlist_container, dlist_foreach, BumpBlock::endptr, fb(), BumpBlock::freeptr, and snprintf.

◆ GenerationAlloc()

Declared extern.

Definition at line 553 of file generation.c.

References GenerationContext::allocChunkLimit, Assert, GenerationContext::block, fb(), GenerationContext::freeblock, Generation_CHUNKHDRSZ, GenerationAllocChunkFromBlock(), GenerationAllocFromNewBlock(), GenerationAllocLarge(), GenerationBlockFreeBytes(), GenerationBlockIsEmpty, GenerationIsValid, MAXALIGN, and unlikely.

Referenced by GenerationRealloc().

◆ GenerationDelete()

Declared extern.

Definition at line 344 of file generation.c.

References free, GenerationReset(), and VALGRIND_DESTROY_MEMPOOL.

◆ GenerationFree()

Definition at line 718 of file generation.c.

References Assert, GenerationContext::block, GenerationBlock::context, elog, GenerationBlock::endptr, ERROR, ExternalChunkGetBlock, fb(), GenerationContext::freeblock, Generation_CHUNKHDRSZ, GenerationBlockFree(), GenerationBlockIsValid, GenerationBlockMarkEmpty(), InvalidAllocSize, IsKeeperBlock, likely, MemoryChunkGetBlock(), MemoryChunkGetValue(), MemoryChunkIsExternal(), GenerationBlock::nchunks, GenerationBlock::nfree, PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, and WARNING.

Referenced by GenerationRealloc().

◆ GenerationGetChunkContext()

Declared extern.

Definition at line 976 of file generation.c.

References Assert, GenerationBlock::context, ExternalChunkGetBlock, fb(), Generation_CHUNKHDRSZ, GenerationBlockIsValid, GenerationContext::header, MemoryChunkGetBlock(), MemoryChunkIsExternal(), PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ GenerationGetChunkSpace()

Definition at line 1002 of file generation.c.

References Assert, GenerationBlock::endptr, ExternalChunkGetBlock, fb(), Generation_CHUNKHDRSZ, GenerationBlockIsValid, MemoryChunkGetValue(), MemoryChunkIsExternal(), PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ GenerationIsEmpty()

Declared extern.

Definition at line 1031 of file generation.c.

References Assert, GenerationContext::blocks, dlist_iter::cur, dlist_container, dlist_foreach, GenerationIsValid, and GenerationBlock::nchunks.

◆ GenerationRealloc()

Definition at line 829 of file generation.c.

References Assert, GenerationBlock::context, elog, GenerationBlock::endptr, ERROR, ExternalChunkGetBlock, fb(), Generation_CHUNKHDRSZ, GenerationAlloc(), GenerationBlockIsValid, GenerationFree(), MemoryChunkGetBlock(), MemoryChunkGetValue(), MemoryChunkIsExternal(), MemoryContextAllocationFailure(), name, PointerGetMemoryChunk, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MAKE_MEM_NOACCESS, VALGRIND_MAKE_MEM_UNDEFINED, and WARNING.

◆ GenerationReset()

Declared extern.

Definition at line 291 of file generation.c.

References Assert, GenerationContext::block, GenerationContext::blocks, dlist_container, dlist_foreach_modify, dlist_has_next(), dlist_head_node(), dlist_is_empty(), fb(), FIRST_BLOCKHDRSZ, GenerationContext::freeblock, GenerationBlockFree(), GenerationBlockMarkEmpty(), GenerationIsValid, GenerationContext::initBlockSize, IsKeeperBlock, KeeperBlock, GenerationContext::nextBlockSize, and VALGRIND_MEMPOOL_TRIM.

Referenced by GenerationDelete().

◆ GenerationStats()

Declared extern.

Definition at line 1062 of file generation.c.

References Assert, GenerationBlock::blksize, GenerationContext::blocks, dlist_iter::cur, dlist_container, dlist_foreach, GenerationBlock::endptr, fb(), GenerationBlock::freeptr, GenerationIsValid, MAXALIGN, GenerationBlock::nchunks, GenerationBlock::nfree, and snprintf.

◆ MemoryContextAllocationFailure()

Declared extern.

Definition at line 1198 of file mcxt.c.

References ereport, errcode(), errdetail(), errmsg(), ERROR, fb(), MCXT_ALLOC_NO_OOM, MemoryContextStats(), MemoryContextData::name, and TopMemoryContext.

Referenced by AlignedAllocRealloc(), AllocSetAllocFromNewBlock(), AllocSetAllocLarge(), AllocSetRealloc(), BumpAllocFromNewBlock(), BumpAllocLarge(), GenerationAllocFromNewBlock(), GenerationAllocLarge(), GenerationRealloc(), and SlabAllocFromNewBlock().

◆ MemoryContextCheckSize()

Declared static inline.

Definition at line 167 of file memutils_internal.h.

References AllocHugeSizeIsValid, AllocSizeIsValid, MCXT_ALLOC_HUGE, MemoryContextSizeFailure(), and unlikely.

Referenced by AllocSetAllocLarge(), AllocSetRealloc(), BumpAllocLarge(), and GenerationAllocLarge().

◆ MemoryContextCreate()

Declared extern.

Definition at line 1149 of file mcxt.c.

References MemoryContextData::allowInCritSection, Assert, CritSectionCount, fb(), MemoryContextData::firstchild, MemoryContextData::ident, MemoryContextData::isReset, mcxt_methods, MemoryContextData::mem_allocated, MemoryContextIsValid, MemoryContextData::methods, name, MemoryContextData::name, MemoryContextData::nextchild, MemoryContextData::parent, MemoryContextData::prevchild, and MemoryContextData::reset_cbs.

Referenced by AllocSetContextCreateInternal(), BumpContextCreate(), GenerationContextCreate(), and SlabContextCreate().
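MemoryContextCreate() references both mcxt_methods and MemoryContextData::methods, and each allocator on this page exposes the same family of callbacks (Alloc, Free, Realloc, Reset, Delete, IsEmpty, Stats, and so on). That pattern is consistent with a table of per-allocator methods selected by method ID. The sketch below shows the general technique only; every `Demo*` and `demo_*` name is hypothetical, the enum has far fewer members than the real one, and the callback signature is simplified for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified method IDs (not the real enum members). */
typedef enum DemoMethodID
{
	DEMO_ASET_ID,
	DEMO_SLAB_ID,
	DEMO_NUM_METHODS
} DemoMethodID;

/* Hypothetical callback table with a single simplified callback. */
typedef struct DemoMethods
{
	const char *name;
	int			(*is_empty) (size_t mem_allocated);
} DemoMethods;

/* A context counts as empty here when nothing has been allocated. */
static int
demo_is_empty(size_t mem_allocated)
{
	return mem_allocated == 0;
}

/* One entry per method ID, indexed directly by the enum value. */
static const DemoMethods demo_methods[DEMO_NUM_METHODS] = {
	[DEMO_ASET_ID] = {"aset", demo_is_empty},
	[DEMO_SLAB_ID] = {"slab", demo_is_empty},
};

/* Look up the callbacks for a given method ID. */
static const DemoMethods *
demo_lookup(DemoMethodID id)
{
	assert((unsigned) id < DEMO_NUM_METHODS);
	return &demo_methods[id];
}
```

Indexing an array by a small integer ID keeps dispatch to one load and one indirect call, which may be one reason a compact ID is attractive for this kind of design.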

◆ MemoryContextSizeFailure()

Declared extern.

◆ SlabAlloc()

Declared extern.

Definition at line 658 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::chunkSize, SlabContext::chunksPerBlock, SlabContext::curBlocklistIndex, dlist_delete_from(), dlist_head_element, dlist_is_empty(), dlist_push_head(), fb(), SlabBlock::nfree, SlabBlock::node, SlabAllocFromNewBlock(), SlabAllocInvalidSize(), SlabAllocSetupNewChunk(), SlabBlocklistIndex(), SlabFindNextBlockListIndex(), SlabGetNextFreeChunk(), SlabIsValid, and unlikely.

◆ SlabDelete()

Declared extern.

Definition at line 506 of file slab.c.

References free, SlabReset(), and VALGRIND_DESTROY_MEMPOOL.

◆ SlabFree()

Definition at line 729 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::blockSize, SlabContext::chunkSize, SlabContext::chunksPerBlock, SlabContext::curBlocklistIndex, dclist_count(), dclist_push_head(), dlist_delete_from(), dlist_is_empty(), dlist_push_head(), elog, SlabContext::emptyblocks, fb(), free, SlabBlock::freehead, SlabContext::fullChunkSize, SlabContext::header, MemoryContextData::mem_allocated, MemoryChunkGetBlock(), MemoryContextData::name, SlabBlock::nfree, SlabBlock::node, PointerGetMemoryChunk, SlabBlock::slab, Slab_CHUNKHDRSZ, SLAB_MAXIMUM_EMPTY_BLOCKS, SlabBlockIsValid, SlabBlocklistIndex(), SlabFindNextBlockListIndex(), unlikely, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MEMPOOL_FREE, and WARNING.

◆ SlabGetChunkContext()

Declared extern.

Definition at line 895 of file slab.c.

References Assert, fb(), SlabContext::header, MemoryChunkGetBlock(), PointerGetMemoryChunk, SlabBlock::slab, Slab_CHUNKHDRSZ, SlabBlockIsValid, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ SlabGetChunkSpace()

Definition at line 919 of file slab.c.

References Assert, fb(), SlabContext::fullChunkSize, MemoryChunkGetBlock(), PointerGetMemoryChunk, SlabBlock::slab, Slab_CHUNKHDRSZ, SlabBlockIsValid, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ SlabIsEmpty()

Declared extern.

Definition at line 944 of file slab.c.

References Assert, MemoryContextData::mem_allocated, and SlabIsValid.

◆ SlabRealloc()

Definition at line 858 of file slab.c.

References SlabContext::chunkSize, elog, ERROR, fb(), MemoryChunkGetBlock(), PointerGetMemoryChunk, SlabBlock::slab, Slab_CHUNKHDRSZ, SlabBlockIsValid, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ SlabReset()

Declared extern.

Definition at line 436 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::blockSize, SlabContext::curBlocklistIndex, dclist_delete_from(), dclist_foreach_modify, dlist_container, dlist_delete(), dlist_foreach_modify, SlabContext::emptyblocks, fb(), free, i, MemoryContextData::mem_allocated, SLAB_BLOCKLIST_COUNT, SlabIsValid, VALGRIND_MEMPOOL_FREE, and VALGRIND_MEMPOOL_TRIM.

Referenced by SlabDelete().

◆ SlabStats()

Declared extern.

Definition at line 961 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::blockSize, SlabContext::chunksPerBlock, dlist_iter::cur, dclist_count(), dlist_container, dlist_foreach, SlabContext::emptyblocks, fb(), SlabContext::fullChunkSize, i, SlabBlock::nfree, SLAB_BLOCKLIST_COUNT, Slab_CONTEXT_HDRSZ, SlabIsValid, and snprintf.