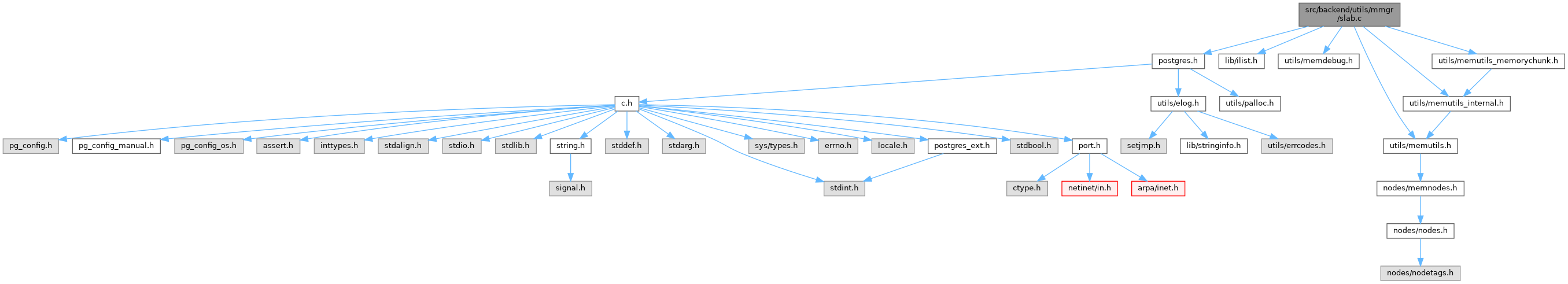

#include "postgres.h"

#include "lib/ilist.h"

#include "utils/memdebug.h"

#include "utils/memutils.h"

#include "utils/memutils_internal.h"

#include "utils/memutils_memorychunk.h"


Data Structures

| struct | SlabContext |

| struct | SlabBlock |

Macros

| #define | Slab_BLOCKHDRSZ MAXALIGN(sizeof(SlabBlock)) |

| #define | Slab_CONTEXT_HDRSZ(chunksPerBlock) sizeof(SlabContext) |

| #define | SLAB_BLOCKLIST_COUNT 3 |

| #define | SLAB_MAXIMUM_EMPTY_BLOCKS 10 |

| #define | Slab_CHUNKHDRSZ sizeof(MemoryChunk) |

| #define | SlabChunkGetPointer(chk) ((void *) (((char *) (chk)) + sizeof(MemoryChunk))) |

| #define | SlabBlockGetChunk(slab, block, n) |

| #define | SlabIsValid(set) ((set) && IsA(set, SlabContext)) |

| #define | SlabBlockIsValid(block) ((block) && SlabIsValid((block)->slab)) |

Typedefs

| typedef struct SlabContext | SlabContext |

| typedef struct SlabBlock | SlabBlock |

Macro Definition Documentation

◆ Slab_BLOCKHDRSZ

| #define Slab_BLOCKHDRSZ MAXALIGN(sizeof(SlabBlock)) |

◆ SLAB_BLOCKLIST_COUNT

| #define SLAB_BLOCKLIST_COUNT 3 |

◆ Slab_CHUNKHDRSZ

| #define Slab_CHUNKHDRSZ sizeof(MemoryChunk) |

◆ Slab_CONTEXT_HDRSZ

| #define Slab_CONTEXT_HDRSZ | ( | chunksPerBlock | ) | sizeof(SlabContext) |

◆ SLAB_MAXIMUM_EMPTY_BLOCKS

| #define SLAB_MAXIMUM_EMPTY_BLOCKS 10 |

◆ SlabBlockGetChunk

| #define SlabBlockGetChunk | ( | slab, block, n | ) |

Definition at line 165 of file slab.c.
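The macro body is not shown in this listing. As an illustration only, the sketch below shows the kind of address arithmetic an "n-th chunk of a block" accessor usually performs, assuming chunks sit back to back after a fixed-size block header and share one stride. `DemoSlab` and `demo_block_get_chunk` are hypothetical names, not part of slab.c.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical layout parameters; these are NOT the real SlabContext fields. */
typedef struct DemoSlab
{
	size_t		block_header_size;	/* bytes reserved at the start of a block */
	size_t		full_chunk_size;	/* chunk header plus aligned payload */
	int			chunks_per_block;	/* number of chunks that fit in a block */
} DemoSlab;

/* Return the address of the n-th chunk (0-based) inside the given block. */
static void *
demo_block_get_chunk(const DemoSlab *slab, void *block, int n)
{
	assert(n >= 0 && n < slab->chunks_per_block);

	/* skip the block header, then step over n fixed-size chunks */
	return (char *) block + slab->block_header_size
		+ (size_t) n * slab->full_chunk_size;
}
```

Because every chunk has the same stride, locating a chunk is a single multiply and add, with no per-chunk bookkeeping.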

◆ SlabBlockIsValid

| #define SlabBlockIsValid | ( | block | ) | ((block) && SlabIsValid((block)->slab)) |

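◆ SlabChunkGetPointer

| #define SlabChunkGetPointer | ( | chk | ) | ((void *) (((char *) (chk)) + sizeof(MemoryChunk))) |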

◆ SlabIsValid

| #define SlabIsValid | ( | set | ) | ((set) && IsA(set, SlabContext)) |

Typedef Documentation

◆ SlabBlock

| typedef struct SlabBlock SlabBlock |

◆ SlabContext

| typedef struct SlabContext SlabContext |

Function Documentation

◆ SlabAlloc()

| void * SlabAlloc | ( | MemoryContext context, Size size, int flags | ) |

Definition at line 658 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::chunkSize, SlabContext::chunksPerBlock, SlabContext::curBlocklistIndex, dlist_delete_from(), dlist_head_element, dlist_is_empty(), dlist_push_head(), fb(), SlabBlock::nfree, SlabBlock::node, SlabAllocFromNewBlock(), SlabAllocInvalidSize(), SlabAllocSetupNewChunk(), SlabBlocklistIndex(), SlabFindNextBlockListIndex(), SlabGetNextFreeChunk(), SlabIsValid, and unlikely.

◆ SlabAllocFromNewBlock()

static

Definition at line 564 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::blockSize, SlabContext::chunksPerBlock, SlabContext::curBlocklistIndex, dclist_count(), dclist_pop_head_node(), dlist_container, dlist_is_empty(), dlist_push_head(), SlabContext::emptyblocks, fb(), SlabBlock::freehead, malloc, MemoryContextData::mem_allocated, MemoryContextAllocationFailure(), SlabBlock::nfree, SlabBlock::node, SlabBlock::nunused, SlabBlock::slab, Slab_BLOCKHDRSZ, SlabAllocSetupNewChunk(), SlabBlockGetChunk, SlabBlocklistIndex(), SlabGetNextFreeChunk(), unlikely, SlabBlock::unused, and VALGRIND_MEMPOOL_ALLOC.

Referenced by SlabAlloc().

◆ SlabAllocInvalidSize()

static

Definition at line 634 of file slab.c.

References SlabContext::chunkSize, elog, and ERROR.

Referenced by SlabAlloc().

◆ SlabAllocSetupNewChunk()

inline static

Definition at line 523 of file slab.c.

References Assert, SlabContext::chunkSize, SlabContext::chunksPerBlock, fb(), SlabContext::fullChunkSize, MAXALIGN, MCTX_SLAB_ID, MemoryChunkGetPointer, MemoryChunkSetHdrMask(), Slab_CHUNKHDRSZ, SlabBlockGetChunk, VALGRIND_MAKE_MEM_NOACCESS, and VALGRIND_MAKE_MEM_UNDEFINED.

Referenced by SlabAlloc(), and SlabAllocFromNewBlock().

◆ SlabBlocklistIndex()

inline static

Definition at line 211 of file slab.c.

References Assert, SlabContext::blocklist_shift, fb(), and SLAB_BLOCKLIST_COUNT.

Referenced by SlabAlloc(), SlabAllocFromNewBlock(), and SlabFree().
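The references above suggest that a block's free-chunk count is reduced to a small list index using `blocklist_shift` and bounded by `SLAB_BLOCKLIST_COUNT`. A minimal sketch of one way to do that follows, assuming index 0 is reserved for blocks with no free chunks and that the shift was chosen so the largest count still maps below the list count. The function name and the ceiling-division formula are illustrative assumptions, not the code in slab.c.

```c
#include <assert.h>

#define DEMO_BLOCKLIST_COUNT 3	/* mirrors SLAB_BLOCKLIST_COUNT in this file */

/*
 * Map a free-chunk count to a list index (hypothetical helper).
 * nfree == 0 yields index 0; any positive nfree yields an index >= 1.
 */
static int
demo_blocklist_index(int nfree, int chunks_per_block, int blocklist_shift)
{
	int			index;

	assert(nfree >= 0 && nfree <= chunks_per_block);

	/* ceiling division of nfree by 2^blocklist_shift */
	index = (nfree + (1 << blocklist_shift) - 1) >> blocklist_shift;

	assert(index >= 0 && index < DEMO_BLOCKLIST_COUNT);
	return index;
}
```

Rounding up rather than down is what keeps a block with a single free chunk out of the list meant for full blocks.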

◆ SlabContextCreate()

| MemoryContext SlabContextCreate | ( | MemoryContext parent, const char * name, Size blockSize, Size chunkSize | ) |

Definition at line 322 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::blocklist_shift, SlabContext::blockSize, SlabContext::chunkSize, SlabContext::chunksPerBlock, SlabContext::curBlocklistIndex, dclist_init(), dlist_init(), elog, SlabContext::emptyblocks, ereport, errcode(), errdetail(), errmsg(), ERROR, fb(), SlabContext::fullChunkSize, i, malloc, MAXALIGN, MCTX_SLAB_ID, MEMORYCHUNK_MAX_BLOCKOFFSET, MEMORYCHUNK_MAX_VALUE, MemoryContextCreate(), MemoryContextStats(), name, Slab_BLOCKHDRSZ, SLAB_BLOCKLIST_COUNT, Slab_CHUNKHDRSZ, Slab_CONTEXT_HDRSZ, StaticAssertDecl, TopMemoryContext, VALGRIND_CREATE_MEMPOOL, and VALGRIND_MEMPOOL_ALLOC.

Referenced by for(), ReorderBufferAllocate(), and test_random().
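The references to `MAXALIGN`, `Slab_CHUNKHDRSZ`, `Slab_BLOCKHDRSZ`, `fullChunkSize`, and `chunksPerBlock` hint at the sizing work done when a context is created. The sketch below shows how such a calculation could look, assuming 8-byte maximum alignment and that a block holds as many whole chunks as fit after its header. All `demo_` names and the alignment value are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_ALIGNOF 8			/* assumed maximum alignment of the platform */

/* Round len up to the next multiple of DEMO_ALIGNOF. */
#define DEMO_MAXALIGN(len) \
	(((size_t) (len) + (DEMO_ALIGNOF - 1)) & ~((size_t) (DEMO_ALIGNOF - 1)))

/* Space consumed by one chunk: its header plus the aligned payload. */
static size_t
demo_full_chunk_size(size_t chunk_header_size, size_t chunk_size)
{
	return chunk_header_size + DEMO_MAXALIGN(chunk_size);
}

/* Number of whole chunks that fit in a block after the block header. */
static int
demo_chunks_per_block(size_t block_size, size_t block_header_size,
					  size_t full_chunk_size)
{
	assert(block_size > block_header_size);
	assert(full_chunk_size > 0);

	return (int) ((block_size - block_header_size) / full_chunk_size);
}
```

For example, with an 8-byte chunk header and a 100-byte payload, each chunk occupies 112 bytes, so an 8192-byte block with a 32-byte header holds 72 chunks and leaves 96 bytes unused.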

◆ SlabDelete()

| void SlabDelete | ( | MemoryContext | context | ) |

Definition at line 506 of file slab.c.

References free, SlabReset(), and VALGRIND_DESTROY_MEMPOOL.

◆ SlabFindNextBlockListIndex()

static

Definition at line 251 of file slab.c.

References SlabContext::blocklist, dlist_is_empty(), i, and SLAB_BLOCKLIST_COUNT.

Referenced by SlabAlloc(), and SlabFree().
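Judging by its references (`blocklist`, `dlist_is_empty()`, `i`, `SLAB_BLOCKLIST_COUNT`), this function scans the block lists for one that is not empty. The sketch below models each list by its length instead of a `dlist_head`, and assumes list 0 holds full blocks and is therefore skipped. The function name and the return value of 0 for "nothing found" are assumptions for this illustration.

```c
#define DEMO_BLOCKLIST_COUNT 3	/* mirrors SLAB_BLOCKLIST_COUNT in this file */

/*
 * Return the lowest index >= 1 whose list is non-empty, or 0 when every
 * list that could hold blocks with free chunks is empty.
 */
static int
demo_find_next_blocklist_index(const int list_lengths[DEMO_BLOCKLIST_COUNT])
{
	/* start at 1: index 0 is assumed to hold only full blocks */
	for (int i = 1; i < DEMO_BLOCKLIST_COUNT; i++)
	{
		if (list_lengths[i] > 0)
			return i;
	}

	return 0;
}
```

Preferring the lowest non-empty index means allocation favors the fullest partially used blocks, which gives emptier blocks a chance to drain completely.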

◆ SlabFree()

Definition at line 729 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::blockSize, SlabContext::chunkSize, SlabContext::chunksPerBlock, SlabContext::curBlocklistIndex, dclist_count(), dclist_push_head(), dlist_delete_from(), dlist_is_empty(), dlist_push_head(), elog, SlabContext::emptyblocks, fb(), free, SlabBlock::freehead, SlabContext::fullChunkSize, SlabContext::header, MemoryContextData::mem_allocated, MemoryChunkGetBlock(), MemoryContextData::name, SlabBlock::nfree, SlabBlock::node, PointerGetMemoryChunk, SlabBlock::slab, Slab_CHUNKHDRSZ, SLAB_MAXIMUM_EMPTY_BLOCKS, SlabBlockIsValid, SlabBlocklistIndex(), SlabFindNextBlockListIndex(), unlikely, VALGRIND_MAKE_MEM_DEFINED, VALGRIND_MEMPOOL_FREE, and WARNING.
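The references to `emptyblocks`, `dclist_count()`, `dclist_push_head()`, `free`, `mem_allocated`, and `SLAB_MAXIMUM_EMPTY_BLOCKS` suggest that a block which becomes completely free is either cached for reuse or returned to the system. A minimal sketch of that decision follows, assuming the cache is capped at the macro's value. `DemoSlabState` and `demo_cache_or_release_block` are hypothetical, and the actual release of memory is reduced to an accounting update.

```c
#include <stdbool.h>
#include <stddef.h>

#define DEMO_MAXIMUM_EMPTY_BLOCKS 10	/* mirrors SLAB_MAXIMUM_EMPTY_BLOCKS */

/* Hypothetical bookkeeping; not the real SlabContext. */
typedef struct DemoSlabState
{
	int			empty_blocks;	/* blocks currently cached for reuse */
	size_t		mem_allocated;	/* bytes currently held by the context */
	size_t		block_size;		/* size of every block */
} DemoSlabState;

/*
 * Called when a block has just become completely free.
 * Returns true if the block was cached, false if it was released.
 */
static bool
demo_cache_or_release_block(DemoSlabState *slab)
{
	if (slab->empty_blocks >= DEMO_MAXIMUM_EMPTY_BLOCKS)
	{
		/* cache is full: give the block back and update the accounting */
		slab->mem_allocated -= slab->block_size;
		return false;
	}

	/* keep the block around so a later allocation can skip malloc */
	slab->empty_blocks++;
	return true;
}
```

Capping the cache bounds the memory held by idle blocks while still avoiding a malloc and free cycle for workloads that hover around a block boundary.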

◆ SlabGetChunkContext()

| MemoryContext SlabGetChunkContext | ( | void * | pointer | ) |

Definition at line 895 of file slab.c.

References Assert, fb(), SlabContext::header, MemoryChunkGetBlock(), PointerGetMemoryChunk, SlabBlock::slab, Slab_CHUNKHDRSZ, SlabBlockIsValid, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ SlabGetChunkSpace()

Definition at line 919 of file slab.c.

References Assert, fb(), SlabContext::fullChunkSize, MemoryChunkGetBlock(), PointerGetMemoryChunk, SlabBlock::slab, Slab_CHUNKHDRSZ, SlabBlockIsValid, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ SlabGetNextFreeChunk()

inline static

Definition at line 271 of file slab.c.

References Assert, SlabContext::chunksPerBlock, fb(), SlabBlock::freehead, SlabContext::fullChunkSize, SlabBlock::nfree, SlabBlock::nunused, SlabBlockGetChunk, SlabChunkGetPointer, SlabBlock::unused, and VALGRIND_MAKE_MEM_DEFINED.

Referenced by SlabAlloc(), and SlabAllocFromNewBlock().
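The fields referenced here (`freehead`, `unused`, `nfree`, `nunused`) point to a two-source scheme: previously freed chunks on a linked list, plus a region of chunks that have never been handed out. The sketch below illustrates that idea under the assumption that a freed chunk stores the next-pointer in its own first bytes. `DemoBlock` and both functions are hypothetical simplifications, and chunk headers are omitted.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical block bookkeeping; not the real SlabBlock. */
typedef struct DemoBlock
{
	void	   *freehead;		/* head of the list of freed chunks */
	char	   *unused;			/* next chunk that was never handed out */
	int			nfree;			/* freed chunks plus never-used chunks */
	int			nunused;		/* never-used chunks only */
} DemoBlock;

/* Take one chunk, preferring the free list over the never-used region. */
static void *
demo_get_next_free_chunk(DemoBlock *block, size_t chunk_stride)
{
	void	   *chunk;

	assert(block->nfree > 0);

	if (block->freehead != NULL)
	{
		/* pop the list head; the link lives inside the chunk itself */
		chunk = block->freehead;
		block->freehead = *(void **) chunk;
	}
	else
	{
		/* nothing was freed yet: carve the next never-used chunk */
		assert(block->nunused > 0);
		chunk = block->unused;
		block->unused += chunk_stride;
		block->nunused--;
	}

	block->nfree--;
	return chunk;
}

/* Return a chunk to the block by pushing it onto the free list. */
static void
demo_free_chunk(DemoBlock *block, void *chunk)
{
	*(void **) chunk = block->freehead;
	block->freehead = chunk;
	block->nfree++;
}
```

Tracking the never-used region separately means a fresh block does not need its free list built up front; chunks join the list only once they have been freed.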

◆ SlabIsEmpty()

| bool SlabIsEmpty | ( | MemoryContext | context | ) |

Definition at line 944 of file slab.c.

References Assert, MemoryContextData::mem_allocated, and SlabIsValid.

◆ SlabRealloc()

Definition at line 858 of file slab.c.

References SlabContext::chunkSize, elog, ERROR, fb(), MemoryChunkGetBlock(), PointerGetMemoryChunk, SlabBlock::slab, Slab_CHUNKHDRSZ, SlabBlockIsValid, VALGRIND_MAKE_MEM_DEFINED, and VALGRIND_MAKE_MEM_NOACCESS.

◆ SlabReset()

| void SlabReset | ( | MemoryContext | context | ) |

Definition at line 436 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::blockSize, SlabContext::curBlocklistIndex, dclist_delete_from(), dclist_foreach_modify, dlist_container, dlist_delete(), dlist_foreach_modify, SlabContext::emptyblocks, fb(), free, i, MemoryContextData::mem_allocated, SLAB_BLOCKLIST_COUNT, SlabIsValid, VALGRIND_MEMPOOL_FREE, and VALGRIND_MEMPOOL_TRIM.

Referenced by SlabDelete().

◆ SlabStats()

| void SlabStats | ( | MemoryContext context, MemoryStatsPrintFunc printfunc, void * passthru, MemoryContextCounters * totals, bool print_to_stderr | ) |

Definition at line 961 of file slab.c.

References Assert, SlabContext::blocklist, SlabContext::blockSize, SlabContext::chunksPerBlock, dlist_iter::cur, dclist_count(), dlist_container, dlist_foreach, SlabContext::emptyblocks, fb(), SlabContext::fullChunkSize, i, SlabBlock::nfree, SLAB_BLOCKLIST_COUNT, Slab_CONTEXT_HDRSZ, SlabIsValid, and snprintf.