Loading...

Searching...

No Matches

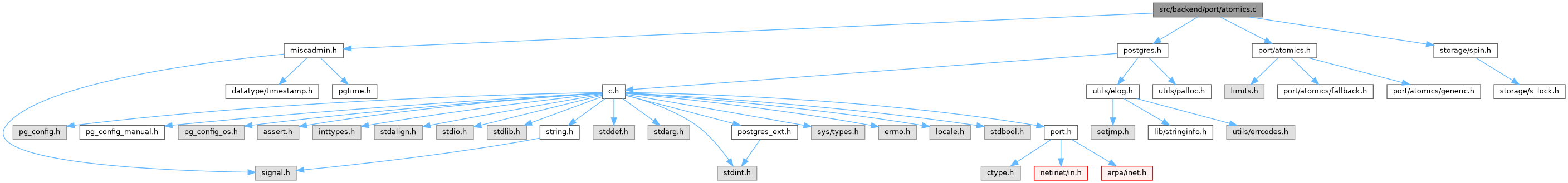

atomics.c File Reference

Functions

| Return type | Function |
| --- | --- |
| void | pg_atomic_init_u64_impl (volatile pg_atomic_uint64 *ptr, uint64 val_) |
| bool | pg_atomic_compare_exchange_u64_impl (volatile pg_atomic_uint64 *ptr, uint64 *expected, uint64 newval) |
| uint64 | pg_atomic_fetch_add_u64_impl (volatile pg_atomic_uint64 *ptr, int64 add_) |

Function Documentation

◆ pg_atomic_compare_exchange_u64_impl()

`bool pg_atomic_compare_exchange_u64_impl(volatile pg_atomic_uint64 *ptr, uint64 *expected, uint64 newval)`

Definition at line 34 of file atomics.c.

    {
        bool        ret;

        /*
         * Do atomic op under a spinlock. It might look like we could just skip
         * the cmpxchg if the lock isn't available, but that'd just emulate a
         * 'weak' compare and swap. I.e. one that allows spurious failures. Since
         * several algorithms rely on a strong variant and that is efficiently
         * implementable on most major architectures let's emulate it here as
         * well.
         */
        SpinLockAcquire((slock_t *) &ptr->sema);

        /* perform compare/exchange logic */
        ret = ptr->value == *expected;
        *expected = ptr->value;
        if (ret)
            ptr->value = newval;

        /* and release lock */
        SpinLockRelease((slock_t *) &ptr->sema);

        return ret;
    }

References fb(), newval, pg_atomic_uint64::sema, SpinLockAcquire, SpinLockRelease, and pg_atomic_uint64::value.

Referenced by pg_atomic_compare_exchange_u64() and pg_atomic_read_u64_impl().
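The strong compare-and-exchange semantics described in the source comment can be tried outside PostgreSQL with a minimal sketch. Everything named `emu_*` below is hypothetical and not part of atomics.c; a C11 `atomic_flag` stands in for the `sema` spinlock. The sketch also shows one way a read and a retry loop can be layered on the primitive: because a failed exchange always writes the observed value back into `*expected`, exchanging 0 for 0 returns the current value without changing it.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for a spinlock-protected 64-bit value. */
typedef struct
{
    atomic_flag lock;           /* plays the role of the sema spinlock */
    uint64_t    value;
} emu_atomic_u64;

static void
emu_init(emu_atomic_u64 *ptr, uint64_t val)
{
    atomic_flag_clear(&ptr->lock);
    ptr->value = val;
}

/* Strong compare-and-exchange: fails only if the value really differs. */
static bool
emu_compare_exchange(emu_atomic_u64 *ptr, uint64_t *expected, uint64_t newval)
{
    bool        ret;

    /* Spin until the lock is held; bailing out here would make this "weak". */
    while (atomic_flag_test_and_set_explicit(&ptr->lock, memory_order_acquire))
        ;

    ret = (ptr->value == *expected);
    *expected = ptr->value;     /* always report the value actually seen */
    if (ret)
        ptr->value = newval;

    atomic_flag_clear_explicit(&ptr->lock, memory_order_release);
    return ret;
}

/* Read by exchanging 0 for 0: the value is unchanged in either outcome. */
static uint64_t
emu_read(emu_atomic_u64 *ptr)
{
    uint64_t    old = 0;

    emu_compare_exchange(ptr, &old, 0);
    return old;
}

/* Retry loop: raise the stored value to at least `candidate`. */
static uint64_t
emu_fetch_max(emu_atomic_u64 *ptr, uint64_t candidate)
{
    uint64_t    old = emu_read(ptr);

    /* Each failed exchange refreshes `old`, so the loop re-tests fresh data. */
    while (old < candidate && !emu_compare_exchange(ptr, &old, candidate))
        ;
    return old;
}
```

With a strong variant, a failed call in `emu_fetch_max` means another writer really changed the value, so every retry makes progress on updated data rather than repeating after a spurious failure.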

◆ pg_atomic_fetch_add_u64_impl()

`uint64 pg_atomic_fetch_add_u64_impl(volatile pg_atomic_uint64 *ptr, int64 add_)`

Definition at line 62 of file atomics.c.

References fb(), pg_atomic_uint64::sema, SpinLockAcquire, SpinLockRelease, and pg_atomic_uint64::value.

Referenced by pg_atomic_fetch_add_u64().
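The body of this function is not reproduced on this page. Judging only from the references listed above (a spinlock acquire and release around an access to `value`), a lock-protected fetch-and-add might look like the following sketch. The `emu_*` names are hypothetical, and a C11 `atomic_flag` is assumed in place of the real spinlock. Note that the addend is signed while the stored value is unsigned, so a negative addend subtracts through unsigned wraparound.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

/* Hypothetical stand-in for a spinlock-protected 64-bit value. */
typedef struct
{
    atomic_flag lock;           /* plays the role of the sema spinlock */
    uint64_t    value;
} emu_atomic_u64;

static void
emu_init(emu_atomic_u64 *ptr, uint64_t val)
{
    atomic_flag_clear(&ptr->lock);
    ptr->value = val;
}

/* Add `add` to the value under the lock and return the previous value. */
static uint64_t
emu_fetch_add(emu_atomic_u64 *ptr, int64_t add)
{
    uint64_t    oldval;

    while (atomic_flag_test_and_set_explicit(&ptr->lock, memory_order_acquire))
        ;

    oldval = ptr->value;
    /* Conversion to uint64_t is modular, so negative addends subtract. */
    ptr->value += (uint64_t) add;

    atomic_flag_clear_explicit(&ptr->lock, memory_order_release);
    return oldval;
}
```

For example, starting from 10, `emu_fetch_add(&c, 5)` returns 10 and leaves 15 stored; a following `emu_fetch_add(&c, -3)` returns 15 and leaves 12.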

◆ pg_atomic_init_u64_impl()

`void pg_atomic_init_u64_impl(volatile pg_atomic_uint64 *ptr, uint64 val_)`

Definition at line 24 of file atomics.c.

References fb(), pg_atomic_uint64::sema, SpinLockInit, StaticAssertDecl, and pg_atomic_uint64::value.

Referenced by pg_atomic_init_u64().