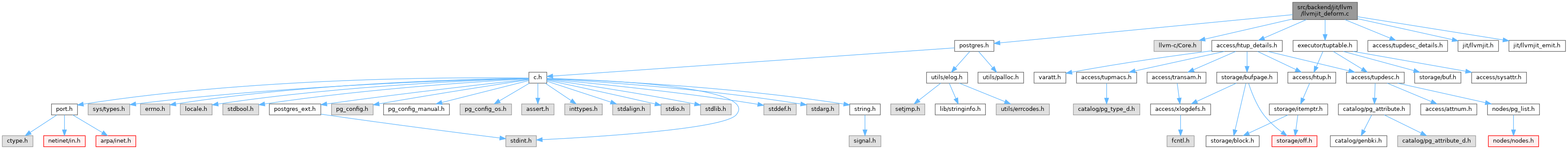

Definition at line 34 of file llvmjit_deform.c.

[Source listing, lines 36 to 764 of llvmjit_deform.c: body not preserved. Surviving fragments: LLVM value names "tts_values", "tts_ISNULL", "heapslot", "minimalslot", "tupleheader", "tuple", "t_bits", "infomask1", "infomask2", "hasnulls", "maxatt", "v_tupdata_base", "heap_natts", "attisnull", "ispadbyte", "attr_ptr", "varsize_any"; branch conditions on att->atthasmissing, att->attlen == -1, and att->attlen == -2.]


References Assert, ATTNULLABLE_VALID, attnum, AttributeTemplate, b, fb(), FIELDNO_HEAPTUPLEDATA_DATA, FIELDNO_HEAPTUPLEHEADERDATA_BITS, FIELDNO_HEAPTUPLEHEADERDATA_HOFF, FIELDNO_HEAPTUPLEHEADERDATA_INFOMASK, FIELDNO_HEAPTUPLEHEADERDATA_INFOMASK2, FIELDNO_HEAPTUPLETABLESLOT_OFF, FIELDNO_HEAPTUPLETABLESLOT_TUPLE, FIELDNO_MINIMALTUPLETABLESLOT_OFF, FIELDNO_MINIMALTUPLETABLESLOT_TUPLE, FIELDNO_TUPLETABLESLOT_FLAGS, FIELDNO_TUPLETABLESLOT_ISNULL, FIELDNO_TUPLETABLESLOT_NVALID, FIELDNO_TUPLETABLESLOT_VALUES, funcname, HEAP_HASNULL, HEAP_NATTS_MASK, lengthof, llvm_copy_attributes(), llvm_expand_funcname(), llvm_mutable_module(), llvm_pg_func(), llvm_pg_var_func_type(), LLVMGetFunctionType(), TupleDescData::natts, palloc_array, pg_unreachable, StructHeapTupleData, StructHeapTupleHeaderData, StructHeapTupleTableSlot, StructMinimalTupleTableSlot, StructTupleTableSlot, TTS_FLAG_SLOW, TTSOpsBufferHeapTuple, TTSOpsHeapTuple, TTSOpsMinimalTuple, TTSOpsVirtual, TupleDescCompactAttr(), TYPEALIGN, TypeDatum, TypeSizeT, and TypeStorageBool.

Referenced by llvm_compile_expr().
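
The references above include TYPEALIGN, and the surviving fragments of the listing mention an "ispadbyte" check for attributes with att->attlen == -1. As a rough illustration of the alignment arithmetic involved, the following is a minimal sketch, not code from llvmjit_deform.c. The helper names `align_up` and `padding_before` are hypothetical, and the sketch assumes the alignment is a power of two.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical helper (not a PostgreSQL function): round len up to the
 * next multiple of alignval. Assumes alignval is a nonzero power of two,
 * which is what makes the mask trick below valid.
 */
static inline uintptr_t
align_up(uintptr_t alignval, uintptr_t len)
{
    assert(alignval != 0 && (alignval & (alignval - 1)) == 0);
    return (len + (alignval - 1)) & ~(alignval - 1);
}

/*
 * Hypothetical helper: number of padding bytes that would have to be
 * skipped at offset off before a field with the given alignment.
 */
static inline uintptr_t
padding_before(uintptr_t alignval, uintptr_t off)
{
    return align_up(alignval, off) - off;
}
```

For instance, `align_up(4, 5)` evaluates to 8, while `align_up(8, 16)` stays at 16 because the offset is already aligned. The mask form avoids a division, which is why it only works for power-of-two alignments.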