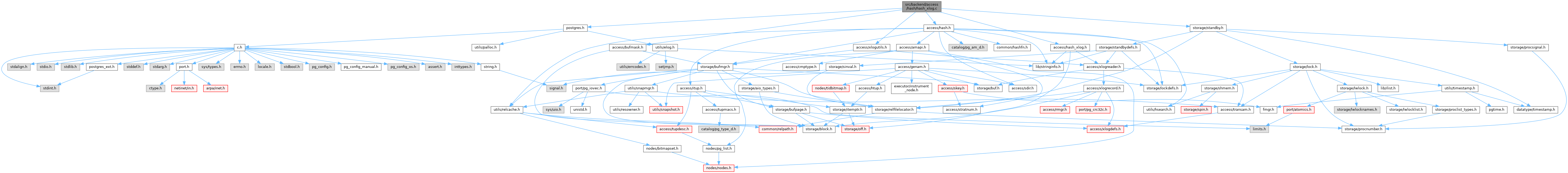

#include "postgres.h"

#include "access/bufmask.h"

#include "access/hash.h"

#include "access/hash_xlog.h"

#include "access/xlogutils.h"

#include "storage/standby.h"


Functions

| Return type | Function |
|---|---|
| static void | hash_xlog_init_meta_page (XLogReaderState *record) |
| static void | hash_xlog_init_bitmap_page (XLogReaderState *record) |
| static void | hash_xlog_insert (XLogReaderState *record) |
| static void | hash_xlog_add_ovfl_page (XLogReaderState *record) |
| static void | hash_xlog_split_allocate_page (XLogReaderState *record) |
| static void | hash_xlog_split_page (XLogReaderState *record) |
| static void | hash_xlog_split_complete (XLogReaderState *record) |
| static void | hash_xlog_move_page_contents (XLogReaderState *record) |
| static void | hash_xlog_squeeze_page (XLogReaderState *record) |
| static void | hash_xlog_delete (XLogReaderState *record) |
| static void | hash_xlog_split_cleanup (XLogReaderState *record) |
| static void | hash_xlog_update_meta_page (XLogReaderState *record) |
| static void | hash_xlog_vacuum_one_page (XLogReaderState *record) |
| void | hash_redo (XLogReaderState *record) |
| void | hash_mask (char *pagedata, BlockNumber blkno) |

Function Documentation

◆ hash_mask()

void hash_mask (char *pagedata, BlockNumber blkno)

Definition at line 1117 of file hash_xlog.c.

References fb(), HashPageOpaqueData::hasho_flag, HashPageGetOpaque, LH_BUCKET_PAGE, LH_OVERFLOW_PAGE, LH_PAGE_TYPE, LH_UNUSED_PAGE, mask_lp_flags(), mask_page_content(), mask_page_hint_bits(), mask_page_lsn_and_checksum(), and mask_unused_space().
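hash_mask() is the masking callback used when WAL consistency checking compares a replayed page with the full-page image taken on the primary. Judging from the References above, it masks the LSN/checksum, hint bits and unused space, then picks an extra masking step from the page type in hasho_flag & LH_PAGE_TYPE. The standalone C sketch below models only that page-type decision. The flag values are assumed to mirror access/hash.h, and MaskAction and choose_hash_mask_action() are hypothetical names, not PostgreSQL API.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed to mirror the page-type bits in access/hash.h; verify against the header. */
#define LH_UNUSED_PAGE   0
#define LH_OVERFLOW_PAGE (1 << 0)
#define LH_BUCKET_PAGE   (1 << 1)
#define LH_BITMAP_PAGE   (1 << 2)
#define LH_META_PAGE     (1 << 3)
#define LH_PAGE_TYPE \
    (LH_OVERFLOW_PAGE | LH_BUCKET_PAGE | LH_BITMAP_PAGE | LH_META_PAGE)

/* Hypothetical: the extra masking step a hash page would receive. */
typedef enum
{
    MASK_NOTHING_EXTRA,  /* bitmap and meta pages */
    MASK_ALL_CONTENT,    /* unused pages: contents are irrelevant */
    MASK_LP_FLAGS        /* bucket/overflow pages: line-pointer flags */
} MaskAction;

static MaskAction
choose_hash_mask_action(uint16_t hasho_flag)
{
    /* Keep only the page-type bits; other state bits are ignored here. */
    int pagetype = hasho_flag & LH_PAGE_TYPE;

    /* A well-formed page has at most one page-type bit set. */
    assert((pagetype & (pagetype - 1)) == 0);

    if (pagetype == LH_UNUSED_PAGE)
        return MASK_ALL_CONTENT;
    if (pagetype == LH_BUCKET_PAGE || pagetype == LH_OVERFLOW_PAGE)
        return MASK_LP_FLAGS;
    return MASK_NOTHING_EXTRA;
}
```

In this model an unused page has its whole content masked, while bucket and overflow pages only have line-pointer flags masked, presumably because dead-item hints can be set on those pages without emitting WAL.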

◆ hash_redo()

void hash_redo (XLogReaderState *record)

Definition at line 1063 of file hash_xlog.c.

References elog, fb(), hash_xlog_add_ovfl_page(), hash_xlog_delete(), hash_xlog_init_bitmap_page(), hash_xlog_init_meta_page(), hash_xlog_insert(), hash_xlog_move_page_contents(), hash_xlog_split_allocate_page(), hash_xlog_split_cleanup(), hash_xlog_split_complete(), hash_xlog_split_page(), hash_xlog_squeeze_page(), hash_xlog_update_meta_page(), hash_xlog_vacuum_one_page(), PANIC, XLOG_HASH_ADD_OVFL_PAGE, XLOG_HASH_DELETE, XLOG_HASH_INIT_BITMAP_PAGE, XLOG_HASH_INIT_META_PAGE, XLOG_HASH_INSERT, XLOG_HASH_MOVE_PAGE_CONTENTS, XLOG_HASH_SPLIT_ALLOCATE_PAGE, XLOG_HASH_SPLIT_CLEANUP, XLOG_HASH_SPLIT_COMPLETE, XLOG_HASH_SPLIT_PAGE, XLOG_HASH_SQUEEZE_PAGE, XLOG_HASH_UPDATE_META_PAGE, XLOG_HASH_VACUUM_ONE_PAGE, and XLogRecGetInfo.
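hash_redo() is the hash resource manager's redo entry point: it strips the generic flag bits from XLogRecGetInfo() and dispatches to one of the static hash_xlog_* routines, raising PANIC for an unknown op code. The sketch below reproduces that dispatch shape in standalone C. ToyRecord and toy_hash_redo_target() are hypothetical stand-ins, XLR_INFO_MASK is taken to be the low four bits, and the op-code values are assumed to match access/hash_xlog.h. Instead of calling a routine, the sketch returns its name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The low 4 info bits belong to the generic WAL code, not the resource manager. */
#define XLR_INFO_MASK 0x0F

/* Op codes assumed to match access/hash_xlog.h; verify before relying on them. */
#define XLOG_HASH_INIT_META_PAGE      0x00
#define XLOG_HASH_INIT_BITMAP_PAGE    0x10
#define XLOG_HASH_INSERT              0x20
#define XLOG_HASH_ADD_OVFL_PAGE       0x30
#define XLOG_HASH_SPLIT_ALLOCATE_PAGE 0x40
#define XLOG_HASH_SPLIT_PAGE          0x50
#define XLOG_HASH_SPLIT_COMPLETE      0x60
#define XLOG_HASH_MOVE_PAGE_CONTENTS  0x70
#define XLOG_HASH_SQUEEZE_PAGE        0x80
#define XLOG_HASH_DELETE              0x90
#define XLOG_HASH_SPLIT_CLEANUP       0xA0
#define XLOG_HASH_UPDATE_META_PAGE    0xB0
#define XLOG_HASH_VACUUM_ONE_PAGE     0xC0

/* Hypothetical stand-in for XLogReaderState: only the record's info byte. */
typedef struct
{
    uint8_t info;
} ToyRecord;

/*
 * Returns the name of the redo routine hash_redo() would call, or NULL
 * where the real code raises elog(PANIC, ...) for an unknown op code.
 */
static const char *
toy_hash_redo_target(const ToyRecord *record)
{
    uint8_t info;

    assert(record != NULL);
    info = record->info & ~XLR_INFO_MASK;  /* like XLogRecGetInfo(record) & ~XLR_INFO_MASK */

    switch (info)
    {
        case XLOG_HASH_INIT_META_PAGE:      return "hash_xlog_init_meta_page";
        case XLOG_HASH_INIT_BITMAP_PAGE:    return "hash_xlog_init_bitmap_page";
        case XLOG_HASH_INSERT:              return "hash_xlog_insert";
        case XLOG_HASH_ADD_OVFL_PAGE:       return "hash_xlog_add_ovfl_page";
        case XLOG_HASH_SPLIT_ALLOCATE_PAGE: return "hash_xlog_split_allocate_page";
        case XLOG_HASH_SPLIT_PAGE:          return "hash_xlog_split_page";
        case XLOG_HASH_SPLIT_COMPLETE:      return "hash_xlog_split_complete";
        case XLOG_HASH_MOVE_PAGE_CONTENTS:  return "hash_xlog_move_page_contents";
        case XLOG_HASH_SQUEEZE_PAGE:        return "hash_xlog_squeeze_page";
        case XLOG_HASH_DELETE:              return "hash_xlog_delete";
        case XLOG_HASH_SPLIT_CLEANUP:       return "hash_xlog_split_cleanup";
        case XLOG_HASH_UPDATE_META_PAGE:    return "hash_xlog_update_meta_page";
        case XLOG_HASH_VACUUM_ONE_PAGE:     return "hash_xlog_vacuum_one_page";
        default:                            return NULL;  /* real code: PANIC */
    }
}
```

Masking first means that flag bits set by the generic WAL machinery in the low nibble never affect which routine is chosen.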

◆ hash_xlog_add_ovfl_page()

static void hash_xlog_add_ovfl_page (XLogReaderState *record)

Definition at line 172 of file hash_xlog.c.

References _hash_initbitmapbuffer(), _hash_initbuf(), Assert, BLK_NEEDS_REDO, BlockNumberIsValid(), BufferGetBlockNumber(), BufferGetPage(), BufferIsValid(), data, XLogReaderState::EndRecPtr, fb(), HashPageGetBitmap, HashPageGetMeta, HashPageGetOpaque, InvalidBlockNumber, LH_OVERFLOW_PAGE, MarkBufferDirty(), PageSetLSN(), PG_USED_FOR_ASSERTS_ONLY, SETBIT, UnlockReleaseBuffer(), XLogInitBufferForRedo(), XLogReadBufferForRedo(), XLogRecGetBlockData(), XLogRecGetBlockTag(), XLogRecGetData, and XLogRecHasBlockRef.

Referenced by hash_redo().
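hash_xlog_add_ovfl_page() replays the allocation of an overflow page. Its References include SETBIT and HashPageGetBitmap (alongside _hash_initbitmapbuffer() for the case where a new bitmap page is created too), which suggests the redo marks the new page's bit as in use in a bitmap page. The sketch below shows that bit addressing. The macros are local copies written to mirror hash.h with an assumed BITS_PER_MAP of 32, and mark_ovfl_page_used() is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed to mirror access/hash.h: each bitmap word covers 32 overflow pages. */
#define BITS_PER_MAP 32
#define SETBIT(A, N) ((A)[(N) / BITS_PER_MAP] |= (1U << ((N) % BITS_PER_MAP)))
#define ISSET(A, N)  ((A)[(N) / BITS_PER_MAP] & (1U << ((N) % BITS_PER_MAP)))

/* Hypothetical helper: mark overflow page number `bitno` as allocated. */
static void
mark_ovfl_page_used(uint32_t *freep, uint32_t nwords, uint32_t bitno)
{
    /* The bit must fall inside this bitmap page's words. */
    assert(bitno / BITS_PER_MAP < nwords);
    SETBIT(freep, bitno);
}
```

Bit 33, for example, lands in word 1 at position 1, so that word becomes 2.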

◆ hash_xlog_delete()

static void hash_xlog_delete (XLogReaderState *record)

Definition at line 857 of file hash_xlog.c.

References BLK_NEEDS_REDO, BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), HashPageGetOpaque, InvalidBuffer, len, MarkBufferDirty(), PageIndexMultiDelete(), PageSetLSN(), RBM_NORMAL, UnlockReleaseBuffer(), XLogReadBufferForRedo(), XLogReadBufferForRedoExtended(), XLogRecGetBlockData(), and XLogRecGetData.

Referenced by hash_redo().
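hash_xlog_delete() removes index tuples from a bucket or overflow page with PageIndexMultiDelete(), using an array of offset numbers carried in the record. The sketch below is a toy version of that compaction over a plain int array. It assumes, as we understand the real routine to require, that the 1-based offsets are sorted ascending; toy_multi_delete() is hypothetical, and its -1 result stands in for the real routine's error on inconsistent offsets.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint16_t OffsetNumber;  /* 1-based item position, as in PostgreSQL */

/*
 * Hypothetical toy: delete items[] entries at the 1-based positions in
 * itemnos[] (sorted ascending) and compact the survivors in place.
 * Returns the new item count, or -1 if some offset was never matched.
 */
static int
toy_multi_delete(int *items, size_t nline,
                 const OffsetNumber *itemnos, size_t nitems)
{
    size_t nextitm = 0;
    size_t kept = 0;

    assert(items != NULL || nline == 0);
    for (size_t off = 1; off <= nline; off++)
    {
        if (nextitm < nitems && off == itemnos[nextitm])
            nextitm++;                        /* this item is being deleted */
        else
            items[kept++] = items[off - 1];   /* slide the survivor down */
    }
    if (nextitm != nitems)
        return -1;                            /* unsorted or out-of-range offsets */
    return (int) kept;
}
```

A single pass suffices because the offsets are sorted, which is why the ordering requirement matters.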

◆ hash_xlog_init_bitmap_page()

static void hash_xlog_init_bitmap_page (XLogReaderState *record)

Definition at line 63 of file hash_xlog.c.

References _hash_initbitmapbuffer(), BLK_NEEDS_REDO, BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), FlushOneBuffer(), HashPageGetMeta, INIT_FORKNUM, MarkBufferDirty(), PageSetLSN(), UnlockReleaseBuffer(), XLogInitBufferForRedo(), XLogReadBufferForRedo(), XLogRecGetBlockTag(), and XLogRecGetData.

Referenced by hash_redo().

◆ hash_xlog_init_meta_page()

static void hash_xlog_init_meta_page (XLogReaderState *record)

Definition at line 27 of file hash_xlog.c.

References _hash_init_metabuffer(), Assert, BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), FlushOneBuffer(), INIT_FORKNUM, MarkBufferDirty(), PageSetLSN(), UnlockReleaseBuffer(), XLogInitBufferForRedo(), XLogRecGetBlockTag(), and XLogRecGetData.

Referenced by hash_redo().

◆ hash_xlog_insert()

static void hash_xlog_insert (XLogReaderState *record)

Definition at line 125 of file hash_xlog.c.

References BLK_NEEDS_REDO, BufferGetPage(), BufferIsValid(), elog, XLogReaderState::EndRecPtr, fb(), HashPageGetMeta, InvalidOffsetNumber, MarkBufferDirty(), PageAddItem, PageSetLSN(), PANIC, UnlockReleaseBuffer(), XLogReadBufferForRedo(), XLogRecGetBlockData(), and XLogRecGetData.

Referenced by hash_redo().

◆ hash_xlog_move_page_contents()

static void hash_xlog_move_page_contents (XLogReaderState *record)

Definition at line 499 of file hash_xlog.c.

References Assert, BLK_NEEDS_REDO, BufferGetPage(), BufferIsValid(), data, elog, XLogReaderState::EndRecPtr, ERROR, fb(), IndexTupleSize(), InvalidBuffer, InvalidOffsetNumber, len, MarkBufferDirty(), MAXALIGN, PageAddItem, PageIndexMultiDelete(), PageSetLSN(), RBM_NORMAL, UnlockReleaseBuffer(), XLogReadBufferForRedo(), XLogReadBufferForRedoExtended(), XLogRecGetBlockData(), and XLogRecGetData.

Referenced by hash_redo().
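hash_xlog_move_page_contents() re-adds tuples that the record carries packed back to back in a block's data. The references to IndexTupleSize() and MAXALIGN suggest the loop reads each tuple's size and advances by its MAXALIGN'ed length before calling PageAddItem(). The sketch below walks such a buffer and counts the tuples. The tuple format (a uint16_t size header) is hypothetical, and MAXALIGN is assumed to round up to 8 bytes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Assumption: MAXIMUM_ALIGNOF is 8, as on common 64-bit platforms. */
#define TOY_MAXALIGN(LEN) (((size_t) (LEN) + 7) & ~(size_t) 7)

/*
 * Hypothetical tuple format: a uint16_t total-size header, then payload.
 * Walks `len` bytes of packed tuples and returns how many were found,
 * or -1 if a tuple is malformed or would run past the end.
 */
static int
toy_count_packed_tuples(const char *data, size_t len)
{
    const char *end;
    int ntuples = 0;

    assert(data != NULL || len == 0);
    end = data + len;
    while (data < end)
    {
        uint16_t itemsz;

        memcpy(&itemsz, data, sizeof(itemsz));  /* stands in for IndexTupleSize() */
        if (itemsz < sizeof(itemsz) ||
            TOY_MAXALIGN(itemsz) > (size_t) (end - data))
            return -1;
        data += TOY_MAXALIGN(itemsz);           /* next tuple starts MAXALIGN'ed */
        ntuples++;
    }
    return ntuples;
}
```

Advancing by the aligned size rather than the raw size is what keeps each following tuple header correctly positioned.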

◆ hash_xlog_split_allocate_page()

static void hash_xlog_split_allocate_page (XLogReaderState *record)

Definition at line 310 of file hash_xlog.c.

References _hash_initbuf(), BLK_NEEDS_REDO, BLK_RESTORED, BufferGetPage(), BufferIsValid(), data, XLogReaderState::EndRecPtr, fb(), HashPageGetMeta, HashPageGetOpaque, MarkBufferDirty(), PageSetLSN(), RBM_NORMAL, RBM_ZERO_AND_CLEANUP_LOCK, UnlockReleaseBuffer(), XLH_SPLIT_META_UPDATE_MASKS, XLH_SPLIT_META_UPDATE_SPLITPOINT, XLogReadBufferForRedo(), XLogReadBufferForRedoExtended(), XLogRecGetBlockData(), and XLogRecGetData.

Referenced by hash_redo().
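During a split, hash_xlog_split_allocate_page() also updates the metapage. When the record's flags include XLH_SPLIT_META_UPDATE_MASKS, it appears to install new lowmask and highmask values carried in the record rather than recomputing them. The sketch below shows, under assumptions, where those values come from in linear hashing: adding a bucket number above highmask starts a new doubling, and keys are then mapped through highmask, falling back to lowmask for buckets that do not exist yet. ToyHashMeta and both functions are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical subset of the metapage fields involved in a split. */
typedef struct
{
    uint32_t maxbucket;  /* highest bucket number in use */
    uint32_t highmask;   /* mask for the current doubling */
    uint32_t lowmask;    /* mask for the previous doubling */
} ToyHashMeta;

/* Primary-side step: account for new_bucket, starting a new doubling if needed. */
static void
toy_add_bucket(ToyHashMeta *meta, uint32_t new_bucket)
{
    assert(new_bucket == meta->maxbucket + 1);
    if (new_bucket > meta->highmask)
    {
        meta->lowmask = meta->highmask;
        meta->highmask = new_bucket | meta->lowmask;
    }
    meta->maxbucket = new_bucket;
}

/* Map a hash key to a bucket: use highmask unless that bucket doesn't exist yet. */
static uint32_t
toy_key_to_bucket(const ToyHashMeta *meta, uint32_t hashkey)
{
    uint32_t bucket = hashkey & meta->highmask;

    if (bucket > meta->maxbucket)
        bucket &= meta->lowmask;
    return bucket;
}
```

For example, with buckets 0..3 (highmask 3, lowmask 1), adding bucket 4 changes the masks to lowmask 3 and highmask 7; key 5 then maps to bucket 1, because bucket 5 does not exist yet.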

◆ hash_xlog_split_cleanup()

static void hash_xlog_split_cleanup (XLogReaderState *record)

Definition at line 935 of file hash_xlog.c.

References BLK_NEEDS_REDO, BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), HashPageGetOpaque, MarkBufferDirty(), PageSetLSN(), UnlockReleaseBuffer(), and XLogReadBufferForRedo().

Referenced by hash_redo().

◆ hash_xlog_split_complete()

static void hash_xlog_split_complete (XLogReaderState *record)

Definition at line 440 of file hash_xlog.c.

References BLK_NEEDS_REDO, BLK_RESTORED, BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), HashPageGetOpaque, MarkBufferDirty(), PageSetLSN(), UnlockReleaseBuffer(), XLogReadBufferForRedo(), and XLogRecGetData.

Referenced by hash_redo().

◆ hash_xlog_split_page()

static void hash_xlog_split_page (XLogReaderState *record)

Definition at line 426 of file hash_xlog.c.

References BLK_RESTORED, buf, elog, ERROR, UnlockReleaseBuffer(), and XLogReadBufferForRedo().

Referenced by hash_redo().
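hash_xlog_split_page() references only XLogReadBufferForRedo(), BLK_RESTORED, elog(ERROR) and UnlockReleaseBuffer(), which indicates that this record is expected to carry a full-page image: any outcome other than BLK_RESTORED is treated as an error. A toy version of that check follows. The enum copies the names of PostgreSQL's redo actions with a TOY_ prefix and is not the real type; toy_replay_split_page() is hypothetical and reports the error on stderr instead of calling elog().

```c
#include <assert.h>
#include <stdio.h>

/* Toy copy of the redo-action names from access/xlogutils.h. */
typedef enum
{
    TOY_BLK_NEEDS_REDO,
    TOY_BLK_DONE,
    TOY_BLK_RESTORED,
    TOY_BLK_NOTFOUND
} ToyRedoAction;

/* Returns 0 if the page was restored from a full-page image, else -1. */
static int
toy_replay_split_page(ToyRedoAction action)
{
    assert((int) action >= 0 && (int) action <= (int) TOY_BLK_NOTFOUND);

    if (action != TOY_BLK_RESTORED)
    {
        /* The real routine raises elog(ERROR, ...) here. */
        fprintf(stderr, "split record did not contain a full-page image\n");
        return -1;
    }
    return 0;
}
```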

◆ hash_xlog_squeeze_page()

static void hash_xlog_squeeze_page (XLogReaderState *record)

Definition at line 624 of file hash_xlog.c.

References _hash_pageinit(), Assert, BLK_NEEDS_REDO, BLK_NOTFOUND, BufferGetPage(), BufferGetPageSize(), BufferIsValid(), CLRBIT, data, elog, XLogReaderState::EndRecPtr, ERROR, fb(), HASHO_PAGE_ID, HashPageGetBitmap, HashPageGetMeta, HashPageGetOpaque, IndexTupleSize(), InvalidBlockNumber, InvalidBucket, InvalidBuffer, InvalidOffsetNumber, LH_UNUSED_PAGE, MarkBufferDirty(), MAXALIGN, PageAddItem, PageSetLSN(), RBM_NORMAL, UnlockReleaseBuffer(), XLogReadBufferForRedo(), XLogReadBufferForRedoExtended(), XLogRecGetBlockData(), XLogRecGetData, and XLogRecHasBlockRef.

Referenced by hash_redo().
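hash_xlog_squeeze_page() replays the freeing of an overflow page. The References to CLRBIT and HashPageGetBitmap suggest the freed page's bit is cleared in its bitmap page, _hash_pageinit() and LH_UNUSED_PAGE suggest the page itself is reinitialized as unused, and HashPageGetMeta points at a metapage update. The sketch below models the bitmap side only. toy_free_ovfl_bit() is hypothetical, the macros mirror hash.h under an assumed BITS_PER_MAP of 32, and lowering a first-free hint when the freed bit is lower is our assumption about what the metapage update records.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed to mirror access/hash.h. */
#define BITS_PER_MAP 32
#define CLRBIT(A, N) ((A)[(N) / BITS_PER_MAP] &= ~(1U << ((N) % BITS_PER_MAP)))
#define ISSET(A, N)  ((A)[(N) / BITS_PER_MAP] & (1U << ((N) % BITS_PER_MAP)))

/*
 * Hypothetical helper: free overflow bit `bitno` and lower the
 * first-free hint if the freed bit precedes it (assumed behaviour).
 */
static void
toy_free_ovfl_bit(uint32_t *freep, uint32_t nwords, uint32_t bitno,
                  uint32_t *firstfree)
{
    assert(bitno / BITS_PER_MAP < nwords);
    assert(ISSET(freep, bitno));  /* freeing a page that is not in use is a bug */

    CLRBIT(freep, bitno);
    if (bitno < *firstfree)
        *firstfree = bitno;
}
```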

◆ hash_xlog_update_meta_page()

static void hash_xlog_update_meta_page (XLogReaderState *record)

Definition at line 960 of file hash_xlog.c.

References BLK_NEEDS_REDO, BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), HashPageGetMeta, MarkBufferDirty(), PageSetLSN(), UnlockReleaseBuffer(), XLogReadBufferForRedo(), and XLogRecGetData.

Referenced by hash_redo().

◆ hash_xlog_vacuum_one_page()

static void hash_xlog_vacuum_one_page (XLogReaderState *record)

Definition at line 987 of file hash_xlog.c.

References BLK_NEEDS_REDO, BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), HashPageGetMeta, HashPageGetOpaque, InHotStandby, MarkBufferDirty(), PageIndexMultiDelete(), PageSetLSN(), RBM_NORMAL, ResolveRecoveryConflictWithSnapshot(), UnlockReleaseBuffer(), XLogReadBufferForRedo(), XLogReadBufferForRedoExtended(), XLogRecGetBlockTag(), and XLogRecGetData.

Referenced by hash_redo().
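hash_xlog_vacuum_one_page() replays the removal of dead tuples from a single page. Because InHotStandby and ResolveRecoveryConflictWithSnapshot() appear in its References, on a hot standby it first resolves conflicts with queries whose snapshots might still need the removed tuples. The sketch below models only the conflict test: a snapshot conflicts when its xmin precedes or equals the record's conflict horizon, snapshots without an xmin are ignored, and an invalid horizon means no conflict. toy_count_conflicts() is hypothetical and uses a plain <= comparison, ignoring the XID wraparound handling of the real code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint32_t TransactionId;
#define InvalidTransactionId ((TransactionId) 0)

/*
 * Hypothetical toy: count standby snapshots that would conflict with a
 * removal whose conflict horizon is `horizon`. Plain `<=` ignores XID
 * wraparound, which the real TransactionId comparisons handle.
 */
static size_t
toy_count_conflicts(const TransactionId *snapshot_xmins, size_t n,
                    TransactionId horizon)
{
    size_t conflicts = 0;

    assert(snapshot_xmins != NULL || n == 0);
    if (horizon == InvalidTransactionId)
        return 0;                     /* invalid horizon: no conflict to resolve */

    for (size_t i = 0; i < n; i++)
    {
        TransactionId xmin = snapshot_xmins[i];

        /* A backend without a snapshot (invalid xmin) holds nothing back. */
        if (xmin != InvalidTransactionId && xmin <= horizon)
            conflicts++;
    }
    return conflicts;
}
```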