#include "postgres.h"
#include "access/hash.h"
#include "access/hash_xlog.h"
#include "access/xloginsert.h"
#include "miscadmin.h"
#include "utils/rel.h"


Functions

| Return type | Function |
| --- | --- |
| static uint32 | _hash_firstfreebit (uint32 map) |
| static BlockNumber | bitno_to_blkno (HashMetaPage metap, uint32 ovflbitnum) |
| uint32 | _hash_ovflblkno_to_bitno (HashMetaPage metap, BlockNumber ovflblkno) |
| Buffer | _hash_addovflpage (Relation rel, Buffer metabuf, Buffer buf, bool retain_pin) |
| BlockNumber | _hash_freeovflpage (Relation rel, Buffer bucketbuf, Buffer ovflbuf, Buffer wbuf, IndexTuple *itups, OffsetNumber *itup_offsets, Size *tups_size, uint16 nitups, BufferAccessStrategy bstrategy) |
| void | _hash_initbitmapbuffer (Buffer buf, uint16 bmsize, bool initpage) |
| void | _hash_squeezebucket (Relation rel, Bucket bucket, BlockNumber bucket_blkno, Buffer bucket_buf, BufferAccessStrategy bstrategy) |

Function Documentation

◆ _hash_addovflpage()

Buffer _hash_addovflpage (Relation rel, Buffer metabuf, Buffer buf, bool retain_pin)

Definition at line 112 of file hashovfl.c.

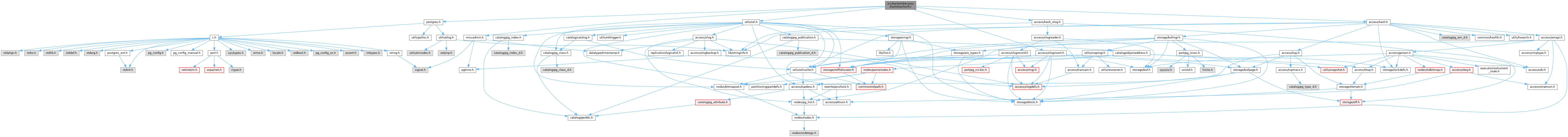

References _hash_checkpage(), _hash_firstfreebit(), _hash_getbuf(), _hash_getinitbuf(), _hash_getnewbuf(), _hash_initbitmapbuffer(), _hash_relbuf(), ALL_SET, Assert, bit(), bitno_to_blkno(), BITS_PER_MAP, BlockNumberIsValid(), xl_hash_add_ovfl_page::bmpage_found, BMPG_MASK, BMPG_SHIFT, BMPGSZ_BIT, buf, BUFFER_LOCK_EXCLUSIVE, BUFFER_LOCK_UNLOCK, BufferGetBlockNumber(), BufferGetPage(), BufferIsValid(), END_CRIT_SECTION, ereport, errcode(), errmsg(), ERROR, fb(), HASH_MAX_BITMAPS, HASH_WRITE, HASHO_PAGE_ID, HashPageGetBitmap, HashPageGetMeta, HashPageGetOpaque, i, InvalidBlockNumber, InvalidBuffer, j, LH_BITMAP_PAGE, LH_BUCKET_PAGE, LH_META_PAGE, LH_OVERFLOW_PAGE, LH_PAGE_TYPE, LockBuffer(), MAIN_FORKNUM, MarkBufferDirty(), PageSetLSN(), REGBUF_STANDARD, REGBUF_WILL_INIT, RelationGetRelationName, RelationNeedsWAL, SETBIT, SizeOfHashAddOvflPage, START_CRIT_SECTION, XLOG_HASH_ADD_OVFL_PAGE, XLogBeginInsert(), XLogInsert(), XLogRegisterBufData(), XLogRegisterBuffer(), and XLogRegisterData().

Referenced by _hash_doinsert() and _hash_splitbucket().

◆ _hash_firstfreebit()

static uint32 _hash_firstfreebit (uint32 map)

Definition at line 448 of file hashovfl.c.

References BITS_PER_MAP, elog, ERROR, and i.

Referenced by _hash_addovflpage().
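The name and signature of _hash_firstfreebit() suggest that it scans one 32-bit bitmap word for the lowest bit that is not yet set. The following is a minimal standalone sketch of that idea, not the PostgreSQL source. The names `sketch_first_free_bit`, `sketch_set_bit`, and `SKETCH_BITS_PER_MAP` are hypothetical, and the `-1` return value is an assumption made so the sketch compiles without the PostgreSQL headers. Judging by its references to elog and ERROR, the real function reports an error instead.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: one bitmap word holds 32 bits, mirroring the uint32 argument. */
#define SKETCH_BITS_PER_MAP 32

/*
 * Return the index of the lowest clear (0) bit in map, or -1 if every bit
 * is set. The -1 sentinel is a stand-in for error reporting in this
 * standalone sketch.
 */
static int
sketch_first_free_bit(uint32_t map)
{
    uint32_t mask = 1u;

    for (int i = 0; i < SKETCH_BITS_PER_MAP; i++)
    {
        if ((map & mask) == 0)
            return i;
        mask <<= 1;
    }
    return -1;
}

/* Mark bit bitno as used; hypothetical helper for trying out the scan. */
static uint32_t
sketch_set_bit(uint32_t map, int bitno)
{
    assert(bitno >= 0 && bitno < SKETCH_BITS_PER_MAP);
    return map | (UINT32_C(1) << bitno);
}
```

Under these assumptions, a word with its three low bits set yields index 3, and setting that bit with `sketch_set_bit` moves the next result to 4.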

◆ _hash_freeovflpage()

BlockNumber _hash_freeovflpage (Relation rel, Buffer bucketbuf, Buffer ovflbuf, Buffer wbuf, IndexTuple *itups, OffsetNumber *itup_offsets, Size *tups_size, uint16 nitups, BufferAccessStrategy bstrategy)

Definition at line 490 of file hashovfl.c.

References _hash_checkpage(), _hash_getbuf(), _hash_getbuf_with_strategy(), _hash_ovflblkno_to_bitno(), _hash_pageinit(), _hash_pgaddmultitup(), _hash_relbuf(), Assert, BlockNumberIsValid(), BMPG_MASK, BMPG_SHIFT, BUFFER_LOCK_EXCLUSIVE, BUFFER_LOCK_UNLOCK, BufferGetBlockNumber(), BufferGetPage(), BufferGetPageSize(), BufferIsValid(), CLRBIT, elog, END_CRIT_SECTION, ERROR, fb(), HASH_METAPAGE, HASH_READ, HASH_WRITE, HASH_XLOG_FREE_OVFL_BUFS, HASHO_PAGE_ID, HashPageGetBitmap, HashPageGetMeta, HashPageGetOpaque, i, InvalidBlockNumber, InvalidBucket, InvalidBuffer, ISSET, LH_BITMAP_PAGE, LH_BUCKET_PAGE, LH_META_PAGE, LH_OVERFLOW_PAGE, LH_UNUSED_PAGE, LockBuffer(), MarkBufferDirty(), PageSetLSN(), PG_USED_FOR_ASSERTS_ONLY, xl_hash_squeeze_page::prevblkno, REGBUF_NO_CHANGE, REGBUF_NO_IMAGE, REGBUF_STANDARD, RelationNeedsWAL, SizeOfHashSqueezePage, START_CRIT_SECTION, XLOG_HASH_SQUEEZE_PAGE, XLogBeginInsert(), XLogEnsureRecordSpace(), XLogInsert(), XLogRegisterBufData(), XLogRegisterBuffer(), and XLogRegisterData().

Referenced by _hash_squeezebucket().

◆ _hash_initbitmapbuffer()

void _hash_initbitmapbuffer (Buffer buf, uint16 bmsize, bool initpage)

Definition at line 777 of file hashovfl.c.

References _hash_pageinit(), buf, BufferGetPage(), BufferGetPageSize(), fb(), HashPageOpaqueData::hasho_bucket, HashPageOpaqueData::hasho_flag, HashPageOpaqueData::hasho_nextblkno, HashPageOpaqueData::hasho_page_id, HASHO_PAGE_ID, HashPageOpaqueData::hasho_prevblkno, HashPageGetBitmap, HashPageGetOpaque, InvalidBlockNumber, InvalidBucket, and LH_BITMAP_PAGE.

Referenced by _hash_addovflpage(), _hash_init(), hash_xlog_add_ovfl_page(), and hash_xlog_init_bitmap_page().

◆ _hash_ovflblkno_to_bitno()

uint32 _hash_ovflblkno_to_bitno (HashMetaPage metap, BlockNumber ovflblkno)

Definition at line 62 of file hashovfl.c.

References _hash_get_totalbuckets(), ereport, errcode(), errmsg(), ERROR, fb(), and i.

Referenced by _hash_freeovflpage() and hash_bitmap_info().

◆ _hash_squeezebucket()

void _hash_squeezebucket (Relation rel, Bucket bucket, BlockNumber bucket_blkno, Buffer bucket_buf, BufferAccessStrategy bstrategy)

Definition at line 842 of file hashovfl.c.

References _hash_freeovflpage(), _hash_getbuf_with_strategy(), _hash_pgaddmultitup(), _hash_relbuf(), Assert, BlockNumberIsValid(), BUFFER_LOCK_UNLOCK, BufferGetPage(), CopyIndexTuple(), END_CRIT_SECTION, fb(), FirstOffsetNumber, HASH_WRITE, HashPageGetOpaque, i, IndexTupleSize(), InvalidBuffer, ItemIdIsDead, LH_OVERFLOW_PAGE, LockBuffer(), MarkBufferDirty(), MAXALIGN, MaxIndexTuplesPerPage, MaxOffsetNumber, xl_hash_move_page_contents::ntups, OffsetNumberNext, PageGetFreeSpaceForMultipleTuples(), PageGetItem(), PageGetItemId(), PageGetMaxOffsetNumber(), PageIndexMultiDelete(), PageIsEmpty(), PageSetLSN(), pfree(), REGBUF_NO_CHANGE, REGBUF_NO_IMAGE, REGBUF_STANDARD, RelationNeedsWAL, SizeOfHashMovePageContents, START_CRIT_SECTION, XLOG_HASH_MOVE_PAGE_CONTENTS, XLogBeginInsert(), XLogEnsureRecordSpace(), XLogInsert(), XLogRegisterBufData(), XLogRegisterBuffer(), and XLogRegisterData().

Referenced by hashbucketcleanup().

◆ bitno_to_blkno()

static BlockNumber bitno_to_blkno (HashMetaPage metap, uint32 ovflbitnum)

Definition at line 35 of file hashovfl.c.

References _hash_get_totalbuckets(), fb(), and i.

Referenced by _hash_addovflpage().