hio.h File Reference

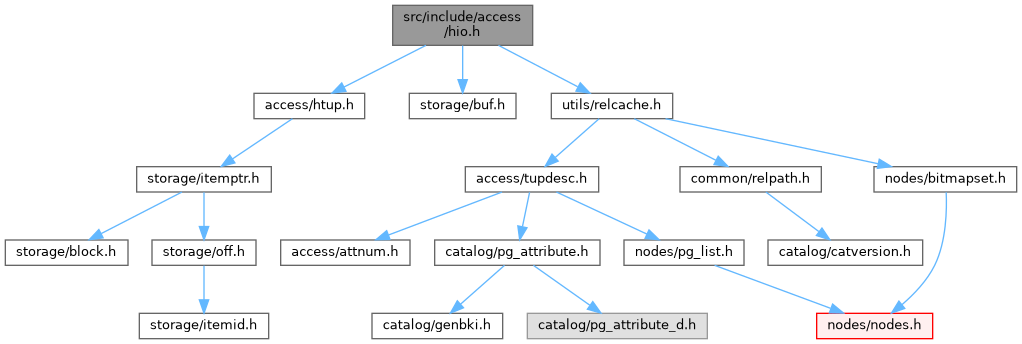

Include dependency graph for hio.h:

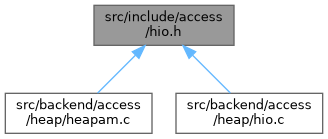

This graph shows which files directly or indirectly include this file:


Data Structures

| struct | BulkInsertStateData |

Typedefs

| typedef struct BulkInsertStateData | BulkInsertStateData |

Functions

| void | RelationPutHeapTuple (Relation relation, Buffer buffer, HeapTuple tuple, bool token) |

| Buffer | RelationGetBufferForTuple (Relation relation, Size len, Buffer otherBuffer, int options, BulkInsertStateData *bistate, Buffer *vmbuffer, Buffer *vmbuffer_other, int num_pages) |

Typedef Documentation

◆ BulkInsertStateData

Function Documentation

◆ RelationGetBufferForTuple()

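extern Buffer RelationGetBufferForTuple(Relation relation, Size len, Buffer otherBuffer, int options, BulkInsertStateData *bistate, Buffer *vmbuffer, Buffer *vmbuffer_other, int num_pages)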

◆ RelationPutHeapTuple()

Definition at line 35 of file hio.c.

{
    OffsetNumber offnum;

    /*
     * A tuple that's being inserted speculatively should already have its
     * token set.
     */

    /*
     * Do not allow tuples with invalid combinations of hint bits to be placed
     * on a page. This combination is detected as corruption by the
     * contrib/amcheck logic, so if you disable this assertion, make
     * corresponding changes there.
     */

    /* Add the tuple to the page */

    /* Update tuple->t_self to the actual position where it was stored */

    /*
     * Insert the correct position into CTID of the stored tuple, too (unless
     * this is a speculative insertion, in which case the token is held in
     * CTID field instead)
     */
    {

    }
}
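
The comments in the listing describe a sequence of steps: check the speculative-token precondition, reject an invalid hint-bit combination, add the tuple to the page, record the resulting position in `t_self`, and copy that position into the stored tuple's CTID unless the insertion is speculative. As a rough illustration only, the following self-contained C sketch models that sequence with simplified stand-in types. Every `Sk*`, `sk_*`, and `SK_*` name is hypothetical and not part of PostgreSQL, the constant values are placeholders chosen for the sketch, and the page is a fixed-size array rather than a real buffer page. Where the References list below suggests the real function reports failure through `elog` with `PANIC`, the sketch simply returns `false`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-ins for illustration; not PostgreSQL's definitions. */
#define SK_XMAX_COMMITTED 0x0400u  /* placeholder hint bit */
#define SK_XMAX_IS_MULTI  0x1000u  /* placeholder hint bit */
#define SK_INVALID_OFFSET 0u       /* offsets are 1-based in this sketch */
#define SK_SPEC_OFFSET    0xfffeu  /* marks a CTID that carries a token */
#define SK_PAGE_SLOTS     4

typedef struct { uint32_t block; uint16_t offset; } SkItemPointer;

typedef struct {
    SkItemPointer ctid;      /* stored position, or a speculative token */
    uint16_t      infomask;  /* hint bits */
} SkTupleHeader;

typedef struct {
    SkItemPointer self;      /* caller-visible position (models t_self) */
    SkTupleHeader data;      /* header that is copied onto the page */
} SkTuple;

typedef struct {
    uint32_t      block;     /* block number of this page */
    uint16_t      used;      /* number of occupied slots */
    SkTupleHeader items[SK_PAGE_SLOTS];
} SkPage;

static bool sk_is_speculative(const SkTupleHeader *h)
{
    return h->ctid.offset == SK_SPEC_OFFSET;
}

/* Append a header to the page; returns SK_INVALID_OFFSET when it is full. */
static uint16_t sk_page_add_item(SkPage *page, const SkTupleHeader *h)
{
    if (page->used >= SK_PAGE_SLOTS)
        return SK_INVALID_OFFSET;
    page->items[page->used] = *h;
    return ++page->used;
}

/* Models the documented steps; returns false if the page has no room. */
static bool sk_put_tuple(SkPage *page, SkTuple *tuple, bool token)
{
    uint16_t offnum;

    /* A speculatively inserted tuple should already have its token set. */
    assert(!token || sk_is_speculative(&tuple->data));

    /* Reject the invalid combination of hint bits. */
    assert(!((tuple->data.infomask & SK_XMAX_COMMITTED) &&
             (tuple->data.infomask & SK_XMAX_IS_MULTI)));

    /* Add the tuple to the page. */
    offnum = sk_page_add_item(page, &tuple->data);
    if (offnum == SK_INVALID_OFFSET)
        return false;

    /* Update the caller's tuple to the position where it was stored. */
    tuple->self.block = page->block;
    tuple->self.offset = offnum;

    /* Store the position in the on-page CTID, unless it holds a token. */
    if (!token)
        page->items[offnum - 1].ctid = tuple->self;

    return true;
}
```

In this model, a caller that passes `token = false` finds the stored copy's `ctid` equal to the tuple's new `self` position, while a caller that passes `token = true` finds the stored `ctid` left untouched so the token survives.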

static ItemId PageGetItemId(Page page, OffsetNumber offsetNumber)

Definition bufpage.h:243

static void * PageGetItem(PageData *page, const ItemIdData *itemId)

Definition bufpage.h:353

#define PageAddItem(page, item, size, offsetNumber, overwrite, is_heap)

Definition bufpage.h:471

static bool HeapTupleHeaderIsSpeculative(const HeapTupleHeaderData *tup)

Definition htup_details.h:461

static void ItemPointerSet(ItemPointerData *pointer, BlockNumber blockNumber, OffsetNumber offNum)

Definition itemptr.h:135


References Assert, BufferGetBlockNumber(), BufferGetPage(), elog, fb(), HEAP_XMAX_COMMITTED, HEAP_XMAX_IS_MULTI, HeapTupleHeaderIsSpeculative(), InvalidOffsetNumber, ItemPointerSet(), PageAddItem, PageGetItem(), PageGetItemId(), PANIC, HeapTupleHeaderData::t_ctid, HeapTupleData::t_data, HeapTupleHeaderData::t_infomask, HeapTupleData::t_len, and HeapTupleData::t_self.

Referenced by heap_insert(), heap_multi_insert(), and heap_update().