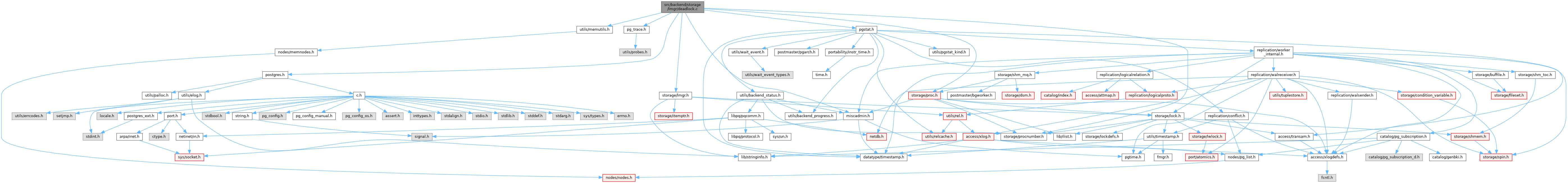

#include "postgres.h"
#include "miscadmin.h"
#include "pg_trace.h"
#include "pgstat.h"
#include "storage/lmgr.h"
#include "storage/proc.h"
#include "storage/procnumber.h"
#include "utils/memutils.h"


| Data Structures | |
|---|---|
| struct | EDGE |
| struct | WAIT_ORDER |
| struct | DEADLOCK_INFO |

| Functions | |
|---|---|
| static bool | `DeadLockCheckRecurse(PGPROC *proc)` |
| static int | `TestConfiguration(PGPROC *startProc)` |
| static bool | `FindLockCycle(PGPROC *checkProc, EDGE *softEdges, int *nSoftEdges)` |
| static bool | `FindLockCycleRecurse(PGPROC *checkProc, int depth, EDGE *softEdges, int *nSoftEdges)` |
| static bool | `FindLockCycleRecurseMember(PGPROC *checkProc, PGPROC *checkProcLeader, int depth, EDGE *softEdges, int *nSoftEdges)` |
| static bool | `ExpandConstraints(EDGE *constraints, int nConstraints)` |
| static bool | `TopoSort(LOCK *lock, EDGE *constraints, int nConstraints, PGPROC **ordering)` |
| void | `InitDeadLockChecking(void)` |
| DeadLockState | `DeadLockCheck(PGPROC *proc)` |
| PGPROC * | `GetBlockingAutoVacuumPgproc(void)` |
| void | `DeadLockReport(void)` |
| void | `RememberSimpleDeadLock(PGPROC *proc1, LOCKMODE lockmode, LOCK *lock, PGPROC *proc2)` |

Function Documentation

◆ DeadLockCheck()

`DeadLockState DeadLockCheck(PGPROC *proc)`

Definition at line 220 of file deadlock.c.

References Assert, blocking_autovacuum_proc, dclist_count(), dclist_init(), dclist_push_tail(), DeadLockCheckRecurse(), DS_BLOCKED_BY_AUTOVACUUM, DS_HARD_DEADLOCK, DS_NO_DEADLOCK, DS_SOFT_DEADLOCK, elog, FATAL, fb(), FindLockCycle(), GetLocksMethodTable(), i, j, links, WAIT_ORDER::lock, nCurConstraints, nPossibleConstraints, WAIT_ORDER::nProcs, nWaitOrders, possibleConstraints, ProcLockWakeup(), WAIT_ORDER::procs, waitOrders, and LOCK::waitProcs.

Referenced by CheckDeadLock().

◆ DeadLockCheckRecurse()

Definition at line 312 of file deadlock.c.

References curConstraints, DeadLockCheckRecurse(), elog, FATAL, fb(), i, MaxBackends, maxCurConstraints, maxPossibleConstraints, nCurConstraints, nPossibleConstraints, possibleConstraints, and TestConfiguration().

Referenced by DeadLockCheck() and DeadLockCheckRecurse().

◆ DeadLockReport()

Definition at line 1075 of file deadlock.c.

References _, appendBinaryStringInfo(), appendStringInfo(), appendStringInfoChar(), deadlockDetails, DescribeLockTag(), ereport, errcode(), ERRCODE_T_R_DEADLOCK_DETECTED, errdetail_internal(), errdetail_log(), errhint(), errmsg(), ERROR, fb(), GetLockmodeName(), i, initStringInfo(), DEADLOCK_INFO::lockmode, DEADLOCK_INFO::locktag, LOCKTAG::locktag_lockmethodid, nDeadlockDetails, pgstat_get_backend_current_activity(), pgstat_report_deadlock(), DEADLOCK_INFO::pid, and resetStringInfo().

Referenced by LockAcquireExtended().
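DeadLockReport() turns the recorded cycle into the error report; per the references above it reads DEADLOCK_INFO::pid, DEADLOCK_INFO::lockmode and DEADLOCK_INFO::locktag and renders them with GetLockmodeName() and DescribeLockTag(). The sketch below assumes a simplified, hypothetical SketchDeadlockInfo whose lock mode and lock description are already strings, and assumes that entry i is blocked by entry i + 1, with the last entry wrapping around to the first. The line wording follows the familiar "Process N waits for MODE on LOCK; blocked by process M." detail text, but treat it as illustrative, not as a copy of the server code.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical, simplified stand-in for DEADLOCK_INFO. */
typedef struct SketchDeadlockInfo
{
    int         pid;        /* process that is waiting */
    const char *lockmode;   /* requested lock mode, already rendered */
    const char *locktag;    /* human-readable description of the lock */
} SketchDeadlockInfo;

/*
 * Write one line per cycle member into buf. Entry i is assumed to be
 * blocked by entry i + 1; the last entry is blocked by the first, which
 * closes the cycle. Stops early (leaving buf NUL-terminated) if the next
 * line would not fit. Returns strlen(buf).
 */
size_t
sketch_format_deadlock(const SketchDeadlockInfo *info, int n,
                       char *buf, size_t bufsize)
{
    size_t len = 0;

    assert(n > 0 && bufsize > 0);
    buf[0] = '\0';
    for (int i = 0; i < n; i++)
    {
        int nextpid = info[(i + 1) % n].pid;
        int written = snprintf(buf + len, bufsize - len,
                               "%sProcess %d waits for %s on %s; blocked by process %d.",
                               i > 0 ? "\n" : "",
                               info[i].pid, info[i].lockmode, info[i].locktag,
                               nextpid);

        if (written < 0 || (size_t) written >= bufsize - len)
            break;              /* output error or truncation */
        len += (size_t) written;
    }
    return strlen(buf);
}
```

The `(i + 1) % n` step is what lets a two-entry array describe the simple case of A waiting for B while B waits for A. The pids and lock descriptions in any test data are made-up sample values.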

◆ ExpandConstraints()

Definition at line 790 of file deadlock.c.

References Assert, dclist_count(), fb(), i, j, EDGE::lock, WAIT_ORDER::lock, MaxBackends, WAIT_ORDER::nProcs, nWaitOrders, WAIT_ORDER::procs, TopoSort(), waitOrderProcs, waitOrders, and LOCK::waitProcs.

Referenced by TestConfiguration().

◆ FindLockCycle()

Definition at line 446 of file deadlock.c.

References fb(), FindLockCycleRecurse(), nDeadlockDetails, and nVisitedProcs.

Referenced by DeadLockCheck() and TestConfiguration().
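These entries list only cross-references, not the search itself. As a rough illustration of the idea behind FindLockCycle() and FindLockCycleRecurse(), here is a depth-first search over a wait-for graph stored as an adjacency matrix. This is a simplification under stated assumptions: the real functions walk lock queues, track visitedProcs, collect soft edges through their softEdges/nSoftEdges parameters, and handle lock groups via FindLockCycleRecurseMember(), none of which is modeled here. The sketch_ functions and SKETCH_MAX_PROCS are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical bound for the sketch; not a PostgreSQL constant. */
#define SKETCH_MAX_PROCS 16

/*
 * Depth-first step: explore every process that cur waits for.
 * waits_for[i][j] is true when process i waits for process j.
 */
static bool
sketch_cycle_from(bool waits_for[][SKETCH_MAX_PROCS], int nprocs,
                  int start, int cur, bool *visited)
{
    for (int next = 0; next < nprocs; next++)
    {
        if (!waits_for[cur][next])
            continue;
        if (next == start)
            return true;        /* an edge leads back to the start */
        if (visited[next])
            continue;           /* already explored, cannot reach start */
        visited[next] = true;
        if (sketch_cycle_from(waits_for, nprocs, start, next, visited))
            return true;
    }
    return false;
}

/*
 * Returns true if process start transitively waits for itself.
 * Cycles that do not pass through start are not reported.
 */
bool
sketch_find_lock_cycle(bool waits_for[][SKETCH_MAX_PROCS], int nprocs,
                       int start)
{
    bool visited[SKETCH_MAX_PROCS] = {false};

    assert(nprocs <= SKETCH_MAX_PROCS && start >= 0 && start < nprocs);
    visited[start] = true;
    return sketch_cycle_from(waits_for, nprocs, start, start, visited);
}
```

Because each process is marked visited at most once, each matrix row is scanned at most once, so the sketch runs in O(n²) time for n processes.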

◆ FindLockCycleRecurse()

static

Definition at line 457 of file deadlock.c.

References Assert, dlist_iter::cur, dlist_container, dlist_foreach, fb(), FindLockCycleRecurseMember(), i, MaxBackends, nDeadlockDetails, nVisitedProcs, and visitedProcs.

Referenced by FindLockCycle() and FindLockCycleRecurseMember().

◆ FindLockCycleRecurseMember()

static

Definition at line 536 of file deadlock.c.

References Assert, blocking_autovacuum_proc, dclist_foreach, deadlockDetails, dlist_container, dlist_foreach, fb(), FindLockCycleRecurse(), GetLocksMethodTable(), PROCLOCK::holdMask, i, links, LOCK_LOCKTAG, LOCKBIT_ON, PGPROC::lockGroupLeader, DEADLOCK_INFO::lockmode, DEADLOCK_INFO::locktag, LOCKTAG_RELATION_EXTEND, MaxBackends, MyProc, PROCLOCKTAG::myProc, WAIT_ORDER::nProcs, nWaitOrders, DEADLOCK_INFO::pid, PROC_IS_AUTOVACUUM, LOCK::procLocks, WAIT_ORDER::procs, PGPROC::statusFlags, LOCK::tag, PROCLOCK::tag, PGPROC::waitLockMode, waitOrders, and LOCK::waitProcs.

Referenced by FindLockCycleRecurse().

◆ GetBlockingAutoVacuumPgproc()

Definition at line 290 of file deadlock.c.

References blocking_autovacuum_proc and fb().

Referenced by ProcSleep().

◆ InitDeadLockChecking()

Definition at line 144 of file deadlock.c.

References afterConstraints, beforeConstraints, curConstraints, deadlockDetails, fb(), MAX_BACKENDS_BITS, MaxBackends, maxCurConstraints, maxPossibleConstraints, MemoryContextSwitchTo(), palloc(), possibleConstraints, StaticAssertStmt, TopMemoryContext, topoProcs, visitedProcs, waitOrderProcs, and waitOrders.

Referenced by InitProcess().
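According to the references above, InitDeadLockChecking() allocates its workspace (visitedProcs, topoProcs, curConstraints, possibleConstraints and others) with palloc() in TopMemoryContext, sizes it from MaxBackends, and is called from InitProcess(). A plausible reading is that the arrays are sized once per backend so that the deadlock check itself need not allocate. The plain-C sketch below shows only that allocate-once pattern, using calloc(); the struct, its int element type, and the multiplier SKETCH_POSSIBLE_FACTOR are hypothetical stand-ins, not the real types or sizes.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical multiplier for the larger constraint pool (an assumption). */
#define SKETCH_POSSIBLE_FACTOR 4

typedef struct SketchDeadlockWorkspace
{
    int  max_procs;             /* plays the role of MaxBackends */
    int  max_possible;          /* capacity of possible_constraints */
    int *visited_procs;         /* one slot per process */
    int *topo_procs;            /* scratch space for reordering a queue */
    int *cur_constraints;       /* constraints currently being tested */
    int *possible_constraints;  /* larger pool of candidate constraints */
} SketchDeadlockWorkspace;

/* Release every array; safe on a partially initialized workspace. */
void
sketch_free_workspace(SketchDeadlockWorkspace *ws)
{
    free(ws->visited_procs);
    free(ws->topo_procs);
    free(ws->cur_constraints);
    free(ws->possible_constraints);
    ws->visited_procs = ws->topo_procs = NULL;
    ws->cur_constraints = ws->possible_constraints = NULL;
}

/*
 * Allocate all arrays once, up front, so later checks can run without
 * allocating. Returns false, with nothing left allocated, on failure.
 */
bool
sketch_init_workspace(SketchDeadlockWorkspace *ws, int max_procs)
{
    assert(max_procs > 0);
    ws->max_procs = max_procs;
    ws->max_possible = max_procs * SKETCH_POSSIBLE_FACTOR;
    ws->visited_procs = calloc((size_t) max_procs, sizeof(int));
    ws->topo_procs = calloc((size_t) max_procs, sizeof(int));
    ws->cur_constraints = calloc((size_t) max_procs, sizeof(int));
    ws->possible_constraints = calloc((size_t) ws->max_possible, sizeof(int));
    if (ws->visited_procs == NULL || ws->topo_procs == NULL ||
        ws->cur_constraints == NULL || ws->possible_constraints == NULL)
    {
        sketch_free_workspace(ws);
        return false;
    }
    return true;
}
```

In this pattern sketch_init_workspace() is called once at process start, and every later check works only inside the preallocated arrays.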

◆ RememberSimpleDeadLock()

Definition at line 1147 of file deadlock.c.

References deadlockDetails, fb(), DEADLOCK_INFO::lockmode, DEADLOCK_INFO::locktag, nDeadlockDetails, DEADLOCK_INFO::pid, and LOCK::tag.

Referenced by JoinWaitQueue().

◆ TestConfiguration()

Definition at line 378 of file deadlock.c.

References curConstraints, ExpandConstraints(), fb(), FindLockCycle(), i, MaxBackends, maxPossibleConstraints, nCurConstraints, nPossibleConstraints, and possibleConstraints.

Referenced by DeadLockCheckRecurse().

◆ TopoSort()

static

Definition at line 862 of file deadlock.c.

References afterConstraints, Assert, beforeConstraints, EDGE::blocker, dclist_count(), dclist_foreach, dlist_container, fb(), i, j, EDGE::link, links, PGPROC::lockGroupLeader, MemSet, EDGE::pred, topoProcs, EDGE::waiter, PGPROC::waitLock, and LOCK::waitProcs.

Referenced by ExpandConstraints().
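TopoSort() takes a lock, an array of EDGE constraints and an output ordering array, and is called from ExpandConstraints(), which suggests it rebuilds a lock's wait queue so that the constraints hold. The sketch below shows one way to do that, Kahn's algorithm with ties broken by original queue position, assuming each hypothetical SketchConstraint means queue entry `before` must precede entry `after`. It is not a transcription of the PostgreSQL routine, which works on PGPROC pointers and also considers lock-group leaders.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical bound for the sketch; not a PostgreSQL constant. */
#define SKETCH_MAX_QUEUE 16

typedef struct SketchConstraint
{
    int before;     /* queue index that must come first */
    int after;      /* queue index that must come later */
} SketchConstraint;

/*
 * Reorder a wait queue of n entries (indexes 0..n-1 in original order)
 * so every constraint is honored, always choosing the lowest-indexed
 * entry whose predecessors are already placed. Writes the new order to
 * ordering[] and returns false if the constraints are cyclic, in which
 * case ordering[] is only partially filled.
 */
bool
sketch_topo_sort(int n, const SketchConstraint *cons, int ncons,
                 int *ordering)
{
    int  indegree[SKETCH_MAX_QUEUE] = {0};
    bool placed[SKETCH_MAX_QUEUE] = {false};

    assert(n >= 0 && n <= SKETCH_MAX_QUEUE);
    for (int c = 0; c < ncons; c++)
    {
        assert(cons[c].before >= 0 && cons[c].before < n);
        assert(cons[c].after >= 0 && cons[c].after < n);
        indegree[cons[c].after]++;
    }

    for (int out = 0; out < n; out++)
    {
        int pick = -1;

        /* Earliest unplaced entry with no unmet predecessors. */
        for (int i = 0; i < n; i++)
        {
            if (!placed[i] && indegree[i] == 0)
            {
                pick = i;
                break;
            }
        }
        if (pick < 0)
            return false;       /* the remaining entries form a cycle */

        placed[pick] = true;
        ordering[out] = pick;
        for (int c = 0; c < ncons; c++)
            if (cons[c].before == pick)
                indegree[cons[c].after]--;
    }
    return true;
}
```

Choosing the lowest-indexed ready entry makes the result deterministic and, when there are no constraints, returns the queue unchanged.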

Variable Documentation

◆ afterConstraints

static

Definition at line 109 of file deadlock.c.

Referenced by InitDeadLockChecking() and TopoSort().

◆ beforeConstraints

static

Definition at line 108 of file deadlock.c.

Referenced by InitDeadLockChecking() and TopoSort().

◆ blocking_autovacuum_proc

Definition at line 129 of file deadlock.c.

Referenced by DeadLockCheck(), FindLockCycleRecurseMember(), and GetBlockingAutoVacuumPgproc().

◆ curConstraints

static

Definition at line 117 of file deadlock.c.

Referenced by DeadLockCheckRecurse(), InitDeadLockChecking(), and TestConfiguration().

◆ deadlockDetails

static

Definition at line 125 of file deadlock.c.

Referenced by DeadLockReport(), FindLockCycleRecurseMember(), InitDeadLockChecking(), and RememberSimpleDeadLock().

◆ maxCurConstraints

static

Definition at line 119 of file deadlock.c.

Referenced by DeadLockCheckRecurse() and InitDeadLockChecking().

◆ maxPossibleConstraints

static

Definition at line 124 of file deadlock.c.

Referenced by DeadLockCheckRecurse(), InitDeadLockChecking(), and TestConfiguration().

◆ nCurConstraints

static

Definition at line 118 of file deadlock.c.

Referenced by DeadLockCheck(), DeadLockCheckRecurse(), and TestConfiguration().

◆ nDeadlockDetails

static

Definition at line 126 of file deadlock.c.

Referenced by DeadLockReport(), FindLockCycle(), FindLockCycleRecurse(), and RememberSimpleDeadLock().

◆ nPossibleConstraints

static

Definition at line 123 of file deadlock.c.

Referenced by DeadLockCheck(), DeadLockCheckRecurse(), and TestConfiguration().

◆ nVisitedProcs

static

Definition at line 104 of file deadlock.c.

Referenced by FindLockCycle() and FindLockCycleRecurse().

◆ nWaitOrders

static

Definition at line 113 of file deadlock.c.

Referenced by DeadLockCheck(), ExpandConstraints(), and FindLockCycleRecurseMember().

◆ possibleConstraints

static

Definition at line 122 of file deadlock.c.

Referenced by DeadLockCheck(), DeadLockCheckRecurse(), InitDeadLockChecking(), and TestConfiguration().

◆ topoProcs

static

Definition at line 107 of file deadlock.c.

Referenced by InitDeadLockChecking() and TopoSort().

◆ visitedProcs

static

Definition at line 103 of file deadlock.c.

Referenced by FindLockCycleRecurse() and InitDeadLockChecking().

◆ waitOrderProcs

static

Definition at line 114 of file deadlock.c.

Referenced by ExpandConstraints() and InitDeadLockChecking().

◆ waitOrders

static

Definition at line 112 of file deadlock.c.

Referenced by DeadLockCheck(), ExpandConstraints(), FindLockCycleRecurseMember(), and InitDeadLockChecking().