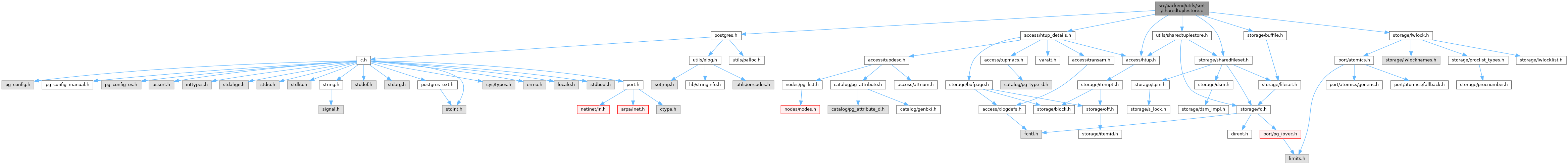

#include "postgres.h"

#include "access/htup.h"

#include "access/htup_details.h"

#include "storage/buffile.h"

#include "storage/lwlock.h"

#include "storage/sharedfileset.h"

#include "utils/sharedtuplestore.h"

Data Structures

| struct | SharedTuplestoreChunk |

| struct | SharedTuplestoreParticipant |

| struct | SharedTuplestore |

| struct | SharedTuplestoreAccessor |

Macros

| #define | STS_CHUNK_PAGES 4 |

| #define | STS_CHUNK_HEADER_SIZE offsetof(SharedTuplestoreChunk, data) |

| #define | STS_CHUNK_DATA_SIZE (STS_CHUNK_PAGES * BLCKSZ - STS_CHUNK_HEADER_SIZE) |

Typedefs

| typedef struct SharedTuplestoreChunk | SharedTuplestoreChunk |

| typedef struct SharedTuplestoreParticipant | SharedTuplestoreParticipant |

Macro Definition Documentation

◆ STS_CHUNK_DATA_SIZE

| #define STS_CHUNK_DATA_SIZE (STS_CHUNK_PAGES * BLCKSZ - STS_CHUNK_HEADER_SIZE) |

Definition at line 39 of file sharedtuplestore.c.

◆ STS_CHUNK_HEADER_SIZE

| #define STS_CHUNK_HEADER_SIZE offsetof(SharedTuplestoreChunk, data) |

Definition at line 38 of file sharedtuplestore.c.

◆ STS_CHUNK_PAGES

| #define STS_CHUNK_PAGES 4 |

Definition at line 37 of file sharedtuplestore.c.
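
As a rough illustration of how the three macros fit together, the sketch below mirrors them in standalone C. It assumes a block size of 8192 bytes (PostgreSQL's default `BLCKSZ`; other builds may differ) and uses a hypothetical `SketchChunk` layout with two `int` header fields followed by a flexible `data` array. The real `SharedTuplestoreChunk` layout is not shown on this page, so the `SKETCH_` names and the resulting sizes are illustrative only.

```c
#include <assert.h>   /* static_assert (C11) */
#include <stddef.h>   /* offsetof, size_t */

/* Assumption: default PostgreSQL block size; a given build may differ. */
#define SKETCH_BLCKSZ 8192

/* Hypothetical stand-in for SharedTuplestoreChunk; real fields may differ. */
typedef struct SketchChunk
{
    int  ntuples;   /* assumed header field */
    int  overflow;  /* assumed header field */
    char data[];    /* tuple bytes would start here */
} SketchChunk;

/* Same shape as the STS_CHUNK_* macros documented above. */
#define SKETCH_CHUNK_PAGES 4
#define SKETCH_CHUNK_HEADER_SIZE offsetof(SketchChunk, data)
#define SKETCH_CHUNK_DATA_SIZE \
    (SKETCH_CHUNK_PAGES * SKETCH_BLCKSZ - SKETCH_CHUNK_HEADER_SIZE)

/* The header must leave room for data inside the fixed-size chunk. */
static_assert(SKETCH_CHUNK_HEADER_SIZE < SKETCH_CHUNK_PAGES * SKETCH_BLCKSZ,
              "chunk header must be smaller than the chunk");

/* Total bytes occupied by one chunk: header plus data area. */
static size_t
sketch_chunk_total_size(void)
{
    return SKETCH_CHUNK_HEADER_SIZE + SKETCH_CHUNK_DATA_SIZE;
}

/* Bytes available for tuple data in one chunk. */
static size_t
sketch_chunk_data_size(void)
{
    return SKETCH_CHUNK_DATA_SIZE;
}
```

Under these assumptions a chunk always spans exactly `SKETCH_CHUNK_PAGES` whole blocks, and the data area is whatever remains after the header.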

Typedef Documentation

◆ SharedTuplestoreChunk

◆ SharedTuplestoreParticipant

Function Documentation

◆ sts_attach()

| SharedTuplestoreAccessor * sts_attach(SharedTuplestore *sts, int my_participant_number, SharedFileSet *fileset) |

Definition at line 177 of file sharedtuplestore.c.

References Assert, CurrentMemoryContext, fb(), and palloc0_object.

Referenced by ExecParallelHashEnsureBatchAccessors(), and ExecParallelHashRepartitionRest().

◆ sts_begin_parallel_scan()

| void sts_begin_parallel_scan(SharedTuplestoreAccessor *accessor) |

Definition at line 252 of file sharedtuplestore.c.

References Assert, fb(), i, PG_USED_FOR_ASSERTS_ONLY, and sts_end_parallel_scan().

Referenced by ExecParallelHashJoinNewBatch(), and ExecParallelHashRepartitionRest().

◆ sts_end_parallel_scan()

| void sts_end_parallel_scan(SharedTuplestoreAccessor *accessor) |

Definition at line 280 of file sharedtuplestore.c.

References BufFileClose(), and fb().

Referenced by ExecHashTableDetach(), ExecHashTableDetachBatch(), ExecParallelHashCloseBatchAccessors(), ExecParallelHashJoinNewBatch(), ExecParallelHashRepartitionRest(), ExecParallelPrepHashTableForUnmatched(), and sts_begin_parallel_scan().

◆ sts_end_write()

| void sts_end_write(SharedTuplestoreAccessor *accessor) |

Definition at line 212 of file sharedtuplestore.c.

References BufFileClose(), fb(), pfree(), and sts_flush_chunk().

Referenced by ExecHashTableDetach(), ExecParallelHashCloseBatchAccessors(), ExecParallelHashJoinPartitionOuter(), and MultiExecParallelHash().

◆ sts_estimate()

◆ sts_filename()

| static |

Definition at line 598 of file sharedtuplestore.c.

References fb(), MAXPGPATH, name, and snprintf.

Referenced by sts_parallel_scan_next(), and sts_puttuple().
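
The references above suggest that `sts_filename()` formats a file name into a `MAXPGPATH`-sized buffer with `snprintf`. A minimal standalone sketch of that pattern follows. The helper `sketch_sts_filename`, the `"%s.p%d"` format, and the buffer size of 1024 are assumptions made for illustration; none of them is taken from this page.

```c
#include <assert.h>
#include <stdio.h>    /* snprintf */

/* Assumption: stand-in for MAXPGPATH; the real value is not shown here. */
#define SKETCH_MAXPGPATH 1024

/*
 * Hypothetical helper: write "<store_name>.p<participant>" into name,
 * which must point to at least SKETCH_MAXPGPATH bytes.
 * Returns snprintf's result, i.e. the length the full name would need.
 */
static int
sketch_sts_filename(char *name, const char *store_name, int participant)
{
    assert(name != NULL && store_name != NULL);
    assert(participant >= 0);
    return snprintf(name, SKETCH_MAXPGPATH, "%s.p%d", store_name, participant);
}
```

Because `snprintf` is given the buffer size, an overly long store name is truncated rather than overflowing the buffer; a caller could compare the return value against `SKETCH_MAXPGPATH` to detect that case.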

◆ sts_flush_chunk()

| static |

Definition at line 195 of file sharedtuplestore.c.

References BufFileWrite(), fb(), and STS_CHUNK_PAGES.

Referenced by sts_end_write(), and sts_puttuple().

◆ sts_initialize()

| SharedTuplestoreAccessor * sts_initialize(SharedTuplestore *sts, int participants, int my_participant_number, size_t meta_data_size, int flags, SharedFileSet *fileset, const char *name) |

Definition at line 125 of file sharedtuplestore.c.

References Assert, CurrentMemoryContext, elog, ERROR, fb(), SharedTuplestore::flags, i, SharedTuplestoreParticipant::lock, LWLockInitialize(), SharedTuplestore::meta_data_size, name, SharedTuplestore::name, SharedTuplestoreParticipant::npages, SharedTuplestore::nparticipants, palloc0_object, SharedTuplestore::participants, SharedTuplestoreParticipant::read_page, STS_CHUNK_DATA_SIZE, and SharedTuplestoreParticipant::writing.

Referenced by ExecParallelHashJoinSetUpBatches().

◆ sts_parallel_scan_next()

| MinimalTuple sts_parallel_scan_next(SharedTuplestoreAccessor *accessor, void *meta_data) |

Definition at line 495 of file sharedtuplestore.c.

References BufFileClose(), BufFileOpenFileSet(), BufFileReadExact(), BufFileSeekBlock(), ereport, errcode_for_file_access(), errmsg(), ERROR, fb(), SharedTuplestoreParticipant::lock, LW_EXCLUSIVE, LWLockAcquire(), LWLockRelease(), MAXPGPATH, MemoryContextSwitchTo(), name, SharedTuplestoreParticipant::npages, SharedTuplestoreParticipant::read_page, STS_CHUNK_HEADER_SIZE, STS_CHUNK_PAGES, sts_filename(), and sts_read_tuple().

Referenced by ExecParallelHashJoinNewBatch(), ExecParallelHashJoinOuterGetTuple(), and ExecParallelHashRepartitionRest().

◆ sts_puttuple()

| void sts_puttuple(SharedTuplestoreAccessor *accessor, void *meta_data, MinimalTuple tuple) |

Definition at line 299 of file sharedtuplestore.c.

References Assert, BufFileCreateFileSet(), fb(), MAXPGPATH, MemoryContextAllocZero(), MemoryContextSwitchTo(), Min, name, STS_CHUNK_DATA_SIZE, STS_CHUNK_PAGES, sts_filename(), sts_flush_chunk(), MinimalTupleData::t_len, and SharedTuplestoreParticipant::writing.

Referenced by ExecParallelHashJoinPartitionOuter(), ExecParallelHashRepartitionFirst(), ExecParallelHashRepartitionRest(), and ExecParallelHashTableInsert().

◆ sts_read_tuple()

| static |

Definition at line 415 of file sharedtuplestore.c.

References BufFileReadExact(), ereport, errcode_for_file_access(), errdetail_internal(), errmsg(), ERROR, fb(), Max, MemoryContextAlloc(), Min, pfree(), STS_CHUNK_HEADER_SIZE, STS_CHUNK_PAGES, and MinimalTupleData::t_len.

Referenced by sts_parallel_scan_next().

◆ sts_reinitialize()

| void sts_reinitialize(SharedTuplestoreAccessor *accessor) |

Definition at line 233 of file sharedtuplestore.c.