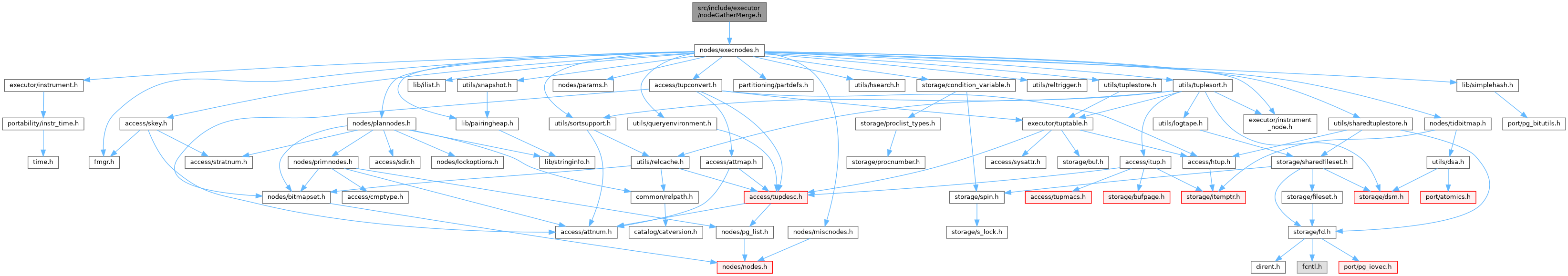

#include "nodes/execnodes.h"

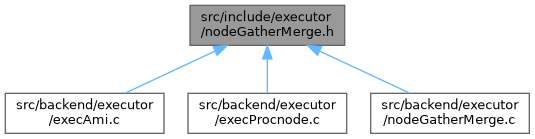


Functions

| Return type | Function |
| --- | --- |
| `GatherMergeState *` | `ExecInitGatherMerge (GatherMerge *node, EState *estate, int eflags)` |
| `void` | `ExecEndGatherMerge (GatherMergeState *node)` |
| `void` | `ExecReScanGatherMerge (GatherMergeState *node)` |
| `void` | `ExecShutdownGatherMerge (GatherMergeState *node)` |

Function Documentation

◆ ExecEndGatherMerge()

`extern void ExecEndGatherMerge (GatherMergeState *node)`

Definition at line 291 of file nodeGatherMerge.c.

References ExecEndNode(), ExecShutdownGatherMerge(), and outerPlanState.

Referenced by ExecEndNode().
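According to the reference list, ExecEndGatherMerge() ends its outer child (ExecEndNode() on outerPlanState) and also calls ExecShutdownGatherMerge(). The standalone C sketch below models that recursive teardown using a hypothetical ToyNode type and an event log. None of these names are PostgreSQL APIs. The sketch also assumes that the child is ended before the node releases its own parallel state. Check nodeGatherMerge.c for the actual order.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical stand-in for a plan-state node; not a PostgreSQL type. */
typedef struct ToyNode
{
    const char     *name;
    struct ToyNode *outer;        /* plays the role of outerPlanState() */
    int             has_parallel; /* nonzero while the node owns parallel state */
} ToyNode;

/* Records teardown events so the order can be inspected afterwards. */
static char teardown_log[256];

static void log_event(const char *what, const char *name)
{
    size_t used = strlen(teardown_log);

    assert(used < sizeof(teardown_log));
    snprintf(teardown_log + used, sizeof(teardown_log) - used, "%s:%s;", what, name);
}

/* Stand-in for the shutdown routine: releases parallel state at most once. */
static void toy_shutdown(ToyNode *node)
{
    if (node->has_parallel)
    {
        log_event("shutdown", node->name);
        node->has_parallel = 0;
    }
}

/* Recursive end in the spirit of ExecEndNode(): child first (assumed), then self. */
static void toy_end_node(ToyNode *node)
{
    if (node == NULL)
        return;
    toy_end_node(node->outer);
    toy_shutdown(node);
    log_event("end", node->name);
}
```

For a two-node tree (a Gather Merge over a scan), the log reads `end:scan;shutdown:gm;end:gm;`.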

◆ ExecInitGatherMerge()

`extern GatherMergeState *ExecInitGatherMerge (GatherMerge *node, EState *estate, int eflags)`

Definition at line 68 of file nodeGatherMerge.c.

References Assert, CurrentMemoryContext, ExecAssignExprContext(), ExecConditionalAssignProjectionInfo(), ExecGatherMerge(), ExecGetResultType(), ExecInitNode(), ExecInitResultTypeTL(), fb(), gather_merge_setup(), i, innerPlan, makeNode, GatherMerge::numCols, OUTER_VAR, outerPlan, outerPlanState, palloc0_array, GatherMerge::plan, PrepareSortSupportFromOrderingOp(), Plan::qual, and SortSupportData::ssup_cxt.

Referenced by ExecInitNode().
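ExecInitGatherMerge() prepares one sort-support entry per sort column (GatherMerge::numCols, PrepareSortSupportFromOrderingOp()) and installs ExecGatherMerge() as the node's execution routine. At run time, Gather Merge combines the already-sorted outputs of the workers (and optionally the leader) into a single sorted stream, and PostgreSQL uses a binary heap for this. The sketch below is a simplified, self-contained model of that idea with the following hypothetical pieces:

- ToySortKey, cmp_rows, merge_init and merge_next are invented names.
- Rows are fixed-width integer arrays.
- The heap is hand-rolled rather than PostgreSQL's binaryheap.

```c
#include <assert.h>
#include <stddef.h>

#define NCOLS 2
#define MAX_STREAMS 16

/* Hypothetical sort key, loosely analogous to one SortSupportData entry. */
typedef struct ToySortKey { int col; int reverse; } ToySortKey;

typedef struct ToyRow { int v[NCOLS]; } ToyRow;

/* One sorted input, standing in for a worker's (or the leader's) output. */
typedef struct ToyStream { const ToyRow *rows; size_t n; size_t pos; } ToyStream;

/* Compare two rows key by key; 'reverse' flips the order for DESC keys. */
static int cmp_rows(const ToyRow *a, const ToyRow *b, const ToySortKey *keys, int nkeys)
{
    for (int i = 0; i < nkeys; i++)
    {
        int x = a->v[keys[i].col], y = b->v[keys[i].col];
        int c = (x > y) - (x < y);

        if (c != 0)
            return keys[i].reverse ? -c : c;
    }
    return 0;
}

typedef struct Merge
{
    ToyStream        *streams;
    const ToySortKey *keys;
    int               nkeys;
    int               heap[MAX_STREAMS]; /* stream indices; heap[0] has the smallest head */
    int               size;
} Merge;

static const ToyRow *head(const Merge *m, int s)
{
    return &m->streams[s].rows[m->streams[s].pos];
}

static int less(const Merge *m, int a, int b)
{
    return cmp_rows(head(m, a), head(m, b), m->keys, m->nkeys) < 0;
}

/* Restore the min-heap property below position i. */
static void sift_down(Merge *m, int i)
{
    for (;;)
    {
        int l = 2 * i + 1, r = l + 1, best = i;

        if (l < m->size && less(m, m->heap[l], m->heap[best])) best = l;
        if (r < m->size && less(m, m->heap[r], m->heap[best])) best = r;
        if (best == i) return;
        int t = m->heap[i]; m->heap[i] = m->heap[best]; m->heap[best] = t;
        i = best;
    }
}

/* Build the heap from every non-empty stream. */
static void merge_init(Merge *m, ToyStream *streams, int nstreams,
                       const ToySortKey *keys, int nkeys)
{
    assert(nstreams <= MAX_STREAMS);
    m->streams = streams; m->keys = keys; m->nkeys = nkeys; m->size = 0;
    for (int s = 0; s < nstreams; s++)
        if (streams[s].pos < streams[s].n)
            m->heap[m->size++] = s;
    for (int i = m->size / 2 - 1; i >= 0; i--)
        sift_down(m, i);
}

/* Return the next row in merged order, or NULL once every stream is exhausted. */
static const ToyRow *merge_next(Merge *m)
{
    if (m->size == 0)
        return NULL;
    int s = m->heap[0];
    const ToyRow *row = head(m, s);

    if (++m->streams[s].pos == m->streams[s].n)
        m->heap[0] = m->heap[--m->size]; /* stream exhausted: drop it */
    sift_down(m, 0);
    return row;
}
```

Consider sort keys of column 0 ascending and column 1 descending, with inputs {(1,9),(3,1)}, {(1,5),(2,7)} and {(0,0)}. The merged order is (0,0), (1,9), (1,5), (2,7), (3,1).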

◆ ExecReScanGatherMerge()

`extern void ExecReScanGatherMerge (GatherMergeState *node)`

Definition at line 341 of file nodeGatherMerge.c.

References bms_add_member(), ExecReScan(), ExecShutdownGatherMergeWorkers(), fb(), gather_merge_clear_tuples(), GatherMergeState::gm_initialized, GatherMergeState::initialized, outerPlan, outerPlanState, PlanState::plan, and GatherMergeState::ps.

Referenced by ExecReScan().
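The references suggest what a rescan does. It stops any running workers (ExecShutdownGatherMergeWorkers()), discards buffered tuples (gather_merge_clear_tuples()) and clears the initialized and gm_initialized flags so that state is rebuilt on the next fetch. It then uses bms_add_member() and ExecReScan() to tell the outer child to restart. The sketch below models that sequence with hypothetical types, and a 64-bit mask stands in for a Bitmapset. It also assumes two conventions:

- A negative rescan_param means "none".
- A child with a non-empty changed-parameter set is rescanned lazily, while one with no changed parameters is rescanned immediately.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical child node; chg_params stands in for a Bitmapset of changed params. */
typedef struct ToyChild
{
    uint64_t chg_params;
    int      rescans;            /* number of immediate rescans performed */
} ToyChild;

/* Hypothetical Gather Merge state, loosely mirroring the flags named above. */
typedef struct ToyGatherMerge
{
    int       initialized;       /* cf. GatherMergeState::initialized */
    int       gm_initialized;    /* cf. GatherMergeState::gm_initialized */
    int       workers_running;
    int       buffered_tuples;
    int       rescan_param;      /* negative if there is none (assumption) */
    ToyChild *outer;
} ToyGatherMerge;

static void toy_rescan(ToyGatherMerge *node)
{
    assert(node->rescan_param < 64);

    node->workers_running = 0;   /* like ExecShutdownGatherMergeWorkers() */
    node->buffered_tuples = 0;   /* like gather_merge_clear_tuples() */
    node->initialized = 0;       /* shared state is rebuilt on the next fetch */
    node->gm_initialized = 0;    /* merge state is rebuilt on the next fetch */

    /* Like bms_add_member() on the child's changed-parameter set. */
    if (node->rescan_param >= 0)
        node->outer->chg_params |= UINT64_C(1) << node->rescan_param;

    /* No changed params: rescan the child now; otherwise it restarts lazily. */
    if (node->outer->chg_params == 0)
        node->outer->rescans++;
}
```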

◆ ExecShutdownGatherMerge()

`extern void ExecShutdownGatherMerge (GatherMergeState *node)`

Definition at line 304 of file nodeGatherMerge.c.

References ExecParallelCleanup(), ExecShutdownGatherMergeWorkers(), fb(), and GatherMergeState::pei.

Referenced by ExecEndGatherMerge(), and ExecShutdownNode_walker().
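ExecShutdownGatherMerge() is reached from two call sites, ExecEndGatherMerge() and ExecShutdownNode_walker(), so it must be safe to call more than once. It stops the workers and then, only if pei is still set, calls ExecParallelCleanup() and clears the pointer. The sketch below models that guard. ToyParallelInfo, ToyGM and cleanup_calls are hypothetical names, not PostgreSQL APIs.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for the parallel executor info (pei). */
typedef struct ToyParallelInfo { int nworkers; } ToyParallelInfo;

typedef struct ToyGM
{
    int              workers_running;
    ToyParallelInfo *pei;
} ToyGM;

static int cleanup_calls = 0;

/* Stand-in for ExecParallelCleanup(): releases the parallel state. */
static void toy_parallel_cleanup(ToyParallelInfo *pei)
{
    assert(pei != NULL);
    cleanup_calls++;
    free(pei);
}

/* Safe to call repeatedly: the NULL check prevents a double cleanup and free. */
static void toy_shutdown_gm(ToyGM *node)
{
    node->workers_running = 0;   /* like ExecShutdownGatherMergeWorkers() */
    if (node->pei != NULL)
    {
        toy_parallel_cleanup(node->pei);
        node->pei = NULL;
    }
}
```

If the shutdown walker runs first and the end routine calls the function again, the cleanup runs only once.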