
Data Structures

| struct | test_shm_mq_header |

Macros

| #define | PG_TEST_SHM_MQ_MAGIC 0x79fb2447 |

Functions

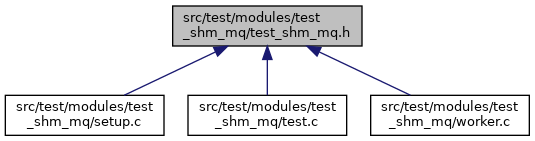

| void | test_shm_mq_setup (int64 queue_size, int32 nworkers, dsm_segment **segp, shm_mq_handle **output, shm_mq_handle **input) |

| pg_noreturn PGDLLEXPORT void | test_shm_mq_main (Datum) |

Macro Definition Documentation

◆ PG_TEST_SHM_MQ_MAGIC

| #define PG_TEST_SHM_MQ_MAGIC 0x79fb2447 |

Definition at line 22 of file test_shm_mq.h.

Function Documentation

◆ test_shm_mq_main()

| pg_noreturn PGDLLEXPORT void test_shm_mq_main (Datum) | extern |

Definition at line 49 of file worker.c.

References attach_to_queues(), BackendPidGetProc(), BackgroundWorkerUnblockSignals(), BackgroundWorker::bgw_notify_pid, copy_messages(), DatumGetUInt32(), DEBUG1, dsm_attach(), dsm_detach(), dsm_segment_address(), elog, ereport, errcode(), errmsg(), ERROR, fb(), test_shm_mq_header::mutex, MyBgworkerEntry, PG_TEST_SHM_MQ_MAGIC, proc_exit(), SetLatch(), shm_toc_attach(), shm_toc_lookup(), SpinLockAcquire(), SpinLockRelease(), test_shm_mq_header::workers_attached, test_shm_mq_header::workers_ready, and test_shm_mq_header::workers_total.

◆ test_shm_mq_setup()

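| void test_shm_mq_setup (int64 queue_size, int32 nworkers, dsm_segment **segp, shm_mq_handle **output, shm_mq_handle **input) | extern |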

Definition at line 51 of file setup.c.

References cancel_on_dsm_detach(), cleanup_background_workers(), fb(), dsm_segment::handle, input, output, pfree(), PointerGetDatum(), setup_background_workers(), setup_dynamic_shared_memory(), shm_mq_attach(), and wait_for_workers_to_become_ready().

Referenced by test_shm_mq(), and test_shm_mq_pipelined().