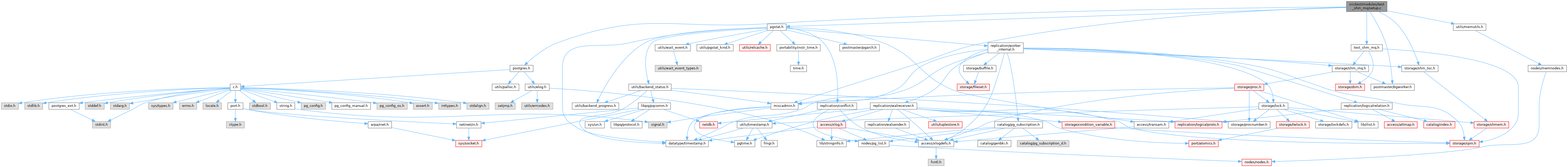

#include "postgres.h"
#include "miscadmin.h"
#include "pgstat.h"
#include "postmaster/bgworker.h"
#include "storage/proc.h"
#include "storage/shm_toc.h"
#include "test_shm_mq.h"
#include "utils/memutils.h"


Data Structures

| struct | worker_state |

Functions

| static void | setup_dynamic_shared_memory (int64 queue_size, int nworkers, dsm_segment **segp, test_shm_mq_header **hdrp, shm_mq **outp, shm_mq **inp) |

| static worker_state * | setup_background_workers (int nworkers, dsm_segment *seg) |

| static void | cleanup_background_workers (dsm_segment *seg, Datum arg) |

| static void | wait_for_workers_to_become_ready (worker_state *wstate, volatile test_shm_mq_header *hdr) |

| static bool | check_worker_status (worker_state *wstate) |

| void | test_shm_mq_setup (int64 queue_size, int32 nworkers, dsm_segment **segp, shm_mq_handle **output, shm_mq_handle **input) |

Variables

| static uint32 | we_bgworker_startup = 0 |

Function Documentation

◆ check_worker_status()

static bool check_worker_status (worker_state *wstate)

Definition at line 307 of file setup.c.

References BGWH_POSTMASTER_DIED, BGWH_STOPPED, fb(), and GetBackgroundWorkerPid().

Referenced by wait_for_workers_to_become_ready().
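The reference list above suggests that this function asks for each worker's status and reports failure if any worker has stopped or the postmaster has died. The following is a minimal, self-contained sketch of that pattern, not the PostgreSQL source. Every `sketch_` name is hypothetical: the enum only borrows the `BGWH_*` names, and `sketch_get_worker_status()` stands in for `GetBackgroundWorkerPid()`, whose real signature takes a worker handle and a PID output argument.

```c
#include <stdbool.h>

/* Stand-in for PostgreSQL's BgwHandleStatus; only the names are borrowed. */
typedef enum
{
    SKETCH_BGWH_STARTED,
    SKETCH_BGWH_NOT_YET_STARTED,
    SKETCH_BGWH_STOPPED,
    SKETCH_BGWH_POSTMASTER_DIED
} sketch_status;

/* Hypothetical stand-in for worker_state: one status slot per worker. */
typedef struct
{
    int                  nworkers;
    const sketch_status *status;
} sketch_worker_state;

/* Hypothetical stand-in for GetBackgroundWorkerPid(): status of worker n. */
static sketch_status
sketch_get_worker_status(const sketch_worker_state *ws, int n)
{
    return ws->status[n];
}

/* Returns false as soon as any worker has stopped or the postmaster died. */
static bool
sketch_check_worker_status(const sketch_worker_state *ws)
{
    for (int n = 0; n < ws->nworkers; n++)
    {
        sketch_status s = sketch_get_worker_status(ws, n);

        if (s == SKETCH_BGWH_STOPPED || s == SKETCH_BGWH_POSTMASTER_DIED)
            return false;
    }

    /* No failed worker was seen; things still look OK. */
    return true;
}
```

In this sketch a worker that has not started yet does not count as a failure, so a caller such as a readiness wait loop can keep polling until every worker reports ready or one of them fails.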

◆ cleanup_background_workers()

static void cleanup_background_workers (dsm_segment *seg, Datum arg)

Definition at line 247 of file setup.c.

References arg, DatumGetPointer(), fb(), worker_state::nworkers, and TerminateBackgroundWorker().

Referenced by setup_background_workers(), and test_shm_mq_setup().

◆ setup_background_workers()

static worker_state * setup_background_workers (int nworkers, dsm_segment *seg)

Definition at line 175 of file setup.c.

References BackgroundWorker::bgw_flags, BackgroundWorker::bgw_function_name, BackgroundWorker::bgw_library_name, BackgroundWorker::bgw_main_arg, BGW_MAXLEN, BackgroundWorker::bgw_name, BGW_NEVER_RESTART, BackgroundWorker::bgw_notify_pid, BackgroundWorker::bgw_restart_time, BackgroundWorker::bgw_start_time, BackgroundWorker::bgw_type, BGWORKER_SHMEM_ACCESS, BgWorkerStart_ConsistentState, cleanup_background_workers(), CurTransactionContext, dsm_segment_handle(), ereport, errcode(), errhint(), errmsg(), ERROR, fb(), i, MemoryContextAlloc(), MemoryContextSwitchTo(), MyProcPid, on_dsm_detach(), PointerGetDatum(), RegisterDynamicBackgroundWorker(), snprintf, sprintf, TopTransactionContext, and UInt32GetDatum().

Referenced by test_shm_mq_setup().

◆ setup_dynamic_shared_memory()

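static void setup_dynamic_shared_memory (int64 queue_size, int nworkers, dsm_segment **segp, test_shm_mq_header **hdrp, shm_mq **outp, shm_mq **inp)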

Definition at line 92 of file setup.c.

References dsm_create(), dsm_segment_address(), ereport, errcode(), errmsg(), ERROR, fb(), i, test_shm_mq_header::mutex, MyProc, PG_TEST_SHM_MQ_MAGIC, shm_mq_create(), shm_mq_minimum_size, shm_mq_set_receiver(), shm_mq_set_sender(), shm_toc_allocate(), shm_toc_create(), shm_toc_estimate(), shm_toc_estimate_chunk, shm_toc_estimate_keys, shm_toc_initialize_estimator, shm_toc_insert(), SpinLockInit(), test_shm_mq_header::workers_attached, test_shm_mq_header::workers_ready, and test_shm_mq_header::workers_total.

Referenced by test_shm_mq_setup().

◆ test_shm_mq_setup()

void test_shm_mq_setup (int64 queue_size, int32 nworkers, dsm_segment **segp, shm_mq_handle **output, shm_mq_handle **input)

Definition at line 51 of file setup.c.

References cancel_on_dsm_detach(), cleanup_background_workers(), fb(), dsm_segment::handle, input, output, pfree(), PointerGetDatum(), setup_background_workers(), setup_dynamic_shared_memory(), shm_mq_attach(), and wait_for_workers_to_become_ready().

Referenced by test_shm_mq(), and test_shm_mq_pipelined().

◆ wait_for_workers_to_become_ready()

static void wait_for_workers_to_become_ready (worker_state *wstate, volatile test_shm_mq_header *hdr)

Definition at line 259 of file setup.c.

References CHECK_FOR_INTERRUPTS, check_worker_status(), ereport, errcode(), errmsg(), ERROR, fb(), test_shm_mq_header::mutex, MyLatch, ResetLatch(), SpinLockAcquire(), SpinLockRelease(), WaitEventExtensionNew(), WaitLatch(), we_bgworker_startup, WL_EXIT_ON_PM_DEATH, WL_LATCH_SET, and test_shm_mq_header::workers_ready.

Referenced by test_shm_mq_setup().

Variable Documentation

◆ we_bgworker_startup

static uint32 we_bgworker_startup = 0

Definition at line 44 of file setup.c.

Referenced by wait_for_workers_to_become_ready().