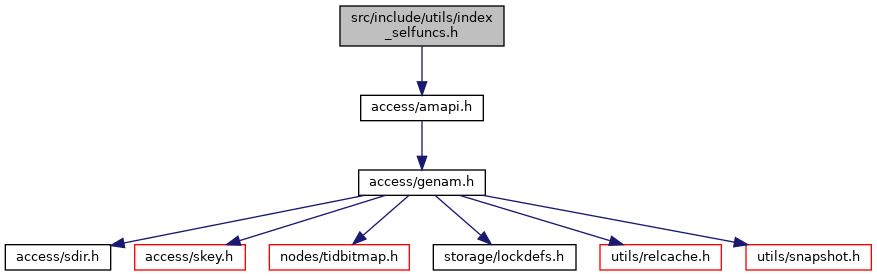

#include "access/amapi.h"

Functions

| Return type | Function |
| --- | --- |
| void | brincostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages) |
| void | btcostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages) |
| void | hashcostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages) |
| void | gistcostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages) |
| void | spgcostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages) |
| void | gincostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages) |
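
All six functions take the same parameter list and return their results through pointer arguments, and each is referenced by its access method's handler function. The sketch below illustrates that calling convention with stand-in types only. `cost_estimate_fn`, `DemoAmRoutine`, `demo_costestimate` and `demo_handler` are hypothetical names invented for this example, the returned numbers are arbitrary, and nothing here reproduces the planner's real logic.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-ins for the PostgreSQL typedefs; assumed here to be plain doubles. */
typedef double Cost;
typedef double Selectivity;

/* Left opaque on purpose: the sketch never looks inside these structs. */
struct PlannerInfo;
struct IndexPath;

/* Hypothetical pointer type with the parameter list shared by the functions above. */
typedef void (*cost_estimate_fn)(struct PlannerInfo *root,
                                 struct IndexPath *path,
                                 double loop_count,
                                 Cost *indexStartupCost,
                                 Cost *indexTotalCost,
                                 Selectivity *indexSelectivity,
                                 double *indexCorrelation,
                                 double *indexPages);

/* Hypothetical estimator: writes fixed, arbitrary values to the out-parameters. */
void
demo_costestimate(struct PlannerInfo *root, struct IndexPath *path,
                  double loop_count,
                  Cost *indexStartupCost, Cost *indexTotalCost,
                  Selectivity *indexSelectivity,
                  double *indexCorrelation, double *indexPages)
{
    (void) root;                /* unused in this sketch */
    (void) path;                /* unused in this sketch */
    assert(loop_count >= 1.0);  /* assumption: at least one scan is planned */

    *indexStartupCost = 1.0;
    *indexTotalCost = 5.0;
    *indexSelectivity = 0.5;
    *indexCorrelation = 0.0;
    *indexPages = 8.0;
}

/* Hypothetical, heavily reduced stand-in for an access method's routine table. */
struct DemoAmRoutine
{
    cost_estimate_fn amcostestimate;
};

/* Hypothetical handler: registers the estimator, as a *handler() function might. */
struct DemoAmRoutine
demo_handler(void)
{
    struct DemoAmRoutine routine;

    routine.amcostestimate = demo_costestimate;
    return routine;
}
```

A caller would obtain the routine table from the handler and invoke the estimator through the pointer, passing the addresses of five local variables to receive the results.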

Function Documentation

◆ brincostestimate()

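extern void brincostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages)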

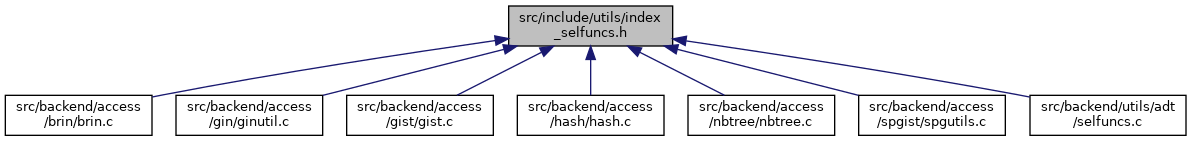

Definition at line 8992 of file selfuncs.c.

References Assert, attnum, ATTSTATSSLOT_NUMBERS, BoolGetDatum(), BRIN_DEFAULT_PAGES_PER_RANGE, brinGetStats(), CLAMP_PROBABILITY, clauselist_selectivity(), cpu_operator_cost, elog, ERROR, fb(), free_attstatsslot(), get_attstatsslot(), get_index_stats_hook, get_quals_from_indexclauses(), get_relation_stats_hook, get_tablespace_page_costs(), HeapTupleIsValid, index_close(), index_open(), index_other_operands_eval_cost(), IndexPath::indexclauses, IndexPath::indexinfo, Int16GetDatum(), InvalidOid, JOIN_INNER, lfirst_node, Max, Min, NoLock, ObjectIdGetDatum(), planner_rt_fetch, ReleaseSysCache(), ReleaseVariableStats, REVMAP_PAGE_MAXITEMS, root, RTE_RELATION, and SearchSysCache3().

Referenced by brinhandler().

◆ btcostestimate()

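extern void btcostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages)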

Definition at line 7687 of file selfuncs.c.

References add_predicate_to_index_quals(), ScalarArrayOpExpr::args, Assert, btcost_correlation(), BTEqualStrategyNumber, RestrictInfo::clause, clauselist_selectivity(), cpu_operator_cost, DEFAULT_PAGE_CPU_MULTIPLIER, DEFAULT_RANGE_INEQ_SEL, elog, ERROR, estimate_array_length(), examine_indexcol_variable(), fb(), genericcostestimate(), get_op_opfamily_strategy(), get_variable_numdistinct(), HeapTupleIsValid, IndexPath::indexclauses, GenericCosts::indexCorrelation, IndexPath::indexinfo, GenericCosts::indexSelectivity, GenericCosts::indexStartupCost, GenericCosts::indexTotalCost, InvalidOid, IS_NULL, IsA, JOIN_INNER, lappend(), lfirst_node, linitial_oid, lsecond, Max, Min, NIL, nodeTag, GenericCosts::num_sa_scans, GenericCosts::numIndexPages, GenericCosts::numIndexTuples, OidIsValid, OpExpr::opno, ScalarArrayOpExpr::opno, ReleaseVariableStats, and root.

Referenced by bthandler().

◆ gincostestimate()

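extern void gincostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages)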

Definition at line 8602 of file selfuncs.c.

References add_predicate_to_index_quals(), GinQualCounts::arrayScans, GinQualCounts::attHasFullScan, GinQualCounts::attHasNormalScan, RestrictInfo::clause, clauselist_selectivity(), cpu_index_tuple_cost, cpu_operator_cost, DEFAULT_PAGE_CPU_MULTIPLIER, elog, ERROR, GinQualCounts::exactEntries, fb(), get_quals_from_indexclauses(), get_tablespace_page_costs(), gincost_opexpr(), gincost_scalararrayopexpr(), ginGetStats(), i, index_close(), index_open(), index_other_operands_eval_cost(), index_pages_fetched(), IndexPath::indexclauses, IndexPath::indexinfo, IsA, JOIN_INNER, lfirst_node, list_length(), Max, Min, NIL, nodeTag, NoLock, GinQualCounts::partialEntries, root, scale, and GinQualCounts::searchEntries.

Referenced by ginhandler().

◆ gistcostestimate()

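extern void gistcostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages)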

Definition at line 8192 of file selfuncs.c.

References cpu_operator_cost, DEFAULT_PAGE_CPU_MULTIPLIER, fb(), genericcostestimate(), GenericCosts::indexCorrelation, IndexPath::indexinfo, GenericCosts::indexSelectivity, GenericCosts::indexStartupCost, GenericCosts::indexTotalCost, GenericCosts::num_sa_scans, GenericCosts::numIndexPages, and root.

Referenced by gisthandler().

◆ hashcostestimate()

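extern void hashcostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages)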

Definition at line 8150 of file selfuncs.c.

References fb(), genericcostestimate(), GenericCosts::indexCorrelation, GenericCosts::indexSelectivity, GenericCosts::indexStartupCost, GenericCosts::indexTotalCost, GenericCosts::numIndexPages, and root.

Referenced by hashhandler().
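
Judging only from the reference list above, hashcostestimate() appears to delegate to genericcostestimate() and then copy fields of a GenericCosts struct into its out-parameters. The sketch below illustrates that delegate-and-copy pattern under stated assumptions: `DemoGenericCosts`, `demo_generic_estimate` and `demo_wrapper_costestimate` are hypothetical stand-ins, the planner arguments are omitted, and the numbers are arbitrary rather than anything the real estimator would compute.

```c
#include <assert.h>

/* Stand-ins for the PostgreSQL typedefs; assumed here to be plain doubles. */
typedef double Cost;
typedef double Selectivity;

/* Hypothetical reduced struct holding only the fields named in the list above. */
typedef struct DemoGenericCosts
{
    Cost        indexStartupCost;
    Cost        indexTotalCost;
    Selectivity indexSelectivity;
    double      indexCorrelation;
    double      numIndexPages;
} DemoGenericCosts;

/* Hypothetical stand-in for the generic estimator: fills arbitrary values. */
void
demo_generic_estimate(double loop_count, DemoGenericCosts *costs)
{
    assert(loop_count >= 1.0);  /* assumption: at least one scan is planned */

    costs->indexStartupCost = 0.5;
    costs->indexTotalCost = 0.5 + 8.0 / loop_count;
    costs->indexSelectivity = 0.25;
    costs->indexCorrelation = 0.0;
    costs->numIndexPages = 8.0;
}

/* Thin wrapper: run the generic estimate, then copy each field out. */
void
demo_wrapper_costestimate(double loop_count,
                          Cost *indexStartupCost, Cost *indexTotalCost,
                          Selectivity *indexSelectivity,
                          double *indexCorrelation, double *indexPages)
{
    DemoGenericCosts costs = {0};   /* zero-initialize before filling */

    demo_generic_estimate(loop_count, &costs);

    *indexStartupCost = costs.indexStartupCost;
    *indexTotalCost = costs.indexTotalCost;
    *indexSelectivity = costs.indexSelectivity;
    *indexCorrelation = costs.indexCorrelation;
    *indexPages = costs.numIndexPages;
}
```

Keeping the intermediate results in one struct lets several wrappers share a single generic routine and adjust individual fields before copying them out, which may be why gistcostestimate() and spgcostestimate() list the same GenericCosts fields among their references.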

◆ spgcostestimate()

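extern void spgcostestimate (struct PlannerInfo *root, struct IndexPath *path, double loop_count, Cost *indexStartupCost, Cost *indexTotalCost, Selectivity *indexSelectivity, double *indexCorrelation, double *indexPages)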

Definition at line 8247 of file selfuncs.c.

References cpu_operator_cost, DEFAULT_PAGE_CPU_MULTIPLIER, fb(), genericcostestimate(), GenericCosts::indexCorrelation, IndexPath::indexinfo, GenericCosts::indexSelectivity, GenericCosts::indexStartupCost, GenericCosts::indexTotalCost, GenericCosts::num_sa_scans, GenericCosts::numIndexPages, and root.

Referenced by spghandler().