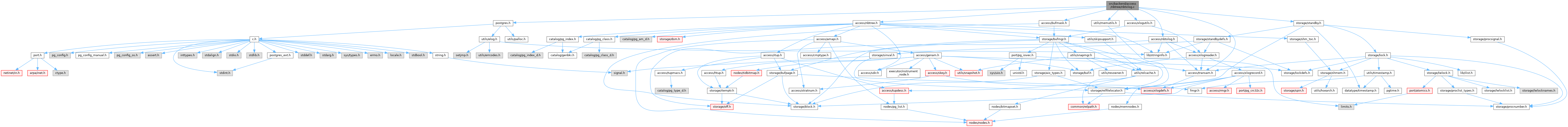

#include "postgres.h"
#include "access/bufmask.h"
#include "access/nbtree.h"
#include "access/nbtxlog.h"
#include "access/transam.h"
#include "access/xlogutils.h"
#include "storage/standby.h"
#include "utils/memutils.h"


Variables

static MemoryContext opCtx

Function Documentation

◆ _bt_clear_incomplete_split()

static

Definition at line 137 of file nbtxlog.c.

References Assert, BLK_NEEDS_REDO, BTPageGetOpaque, buf, BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), MarkBufferDirty(), P_INCOMPLETE_SPLIT, PageSetLSN(), UnlockReleaseBuffer(), and XLogReadBufferForRedo().

Referenced by btree_xlog_insert(), btree_xlog_newroot(), and btree_xlog_split().
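The references (BLK_NEEDS_REDO, P_INCOMPLETE_SPLIT, PageSetLSN()) point to the usual redo pattern: the left half of an earlier split has its incomplete-split flag cleared only if the page has not already seen this record. The sketch below models that LSN gate with a hypothetical SketchPage struct and an illustrative flag bit; neither is the real PostgreSQL page layout.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-ins, not the real PostgreSQL types or flag values. */
typedef uint64_t SketchLSN;
#define SKETCH_INCOMPLETE_SPLIT 0x0100u

typedef struct SketchPage
{
    SketchLSN lsn;   /* LSN of the last WAL record applied to this page */
    uint16_t flags;  /* models BTPageOpaqueData.btpo_flags */
} SketchPage;

/*
 * Replays "clear the incomplete-split flag" only when the page is older
 * than the record (the BLK_NEEDS_REDO case), then stamps the record's LSN
 * so that replaying the same record again is a no-op. Returns true if the
 * page was modified.
 */
static bool
sketch_clear_incomplete_split(SketchPage *page, SketchLSN end_rec_ptr)
{
    if (page->lsn >= end_rec_ptr)
        return false;               /* already up to date (BLK_DONE) */

    assert(page->flags & SKETCH_INCOMPLETE_SPLIT);
    page->flags &= (uint16_t) ~SKETCH_INCOMPLETE_SPLIT;
    page->lsn = end_rec_ptr;        /* analogous to PageSetLSN() */
    return true;
}
```

Stamping the LSN is what makes redo idempotent: a second call with the same end_rec_ptr returns false and leaves the page untouched.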

◆ _bt_restore_meta()

static

Definition at line 80 of file nbtxlog.c.

References _bt_pageinit(), Assert, BTMetaPageData::btm_allequalimage, BTMetaPageData::btm_fastlevel, BTMetaPageData::btm_fastroot, BTMetaPageData::btm_last_cleanup_num_delpages, BTMetaPageData::btm_last_cleanup_num_heap_tuples, BTMetaPageData::btm_level, BTMetaPageData::btm_magic, BTMetaPageData::btm_root, BTMetaPageData::btm_version, BTP_META, BTPageGetMeta, BTPageGetOpaque, BTREE_MAGIC, BTREE_METAPAGE, BTREE_NOVAC_VERSION, BufferGetBlockNumber(), BufferGetPage(), BufferGetPageSize(), XLogReaderState::EndRecPtr, fb(), len, MarkBufferDirty(), PageSetLSN(), UnlockReleaseBuffer(), XLogInitBufferForRedo(), and XLogRecGetBlockData().

Referenced by btree_redo(), btree_xlog_insert(), btree_xlog_newroot(), and btree_xlog_unlink_page().

◆ _bt_restore_page()

Definition at line 36 of file nbtxlog.c.

References elog, fb(), i, IndexTupleSize(), InvalidOffsetNumber, items, len, MAXALIGN, MaxIndexTuplesPerPage, nitems, PageAddItem, and PANIC.

Referenced by btree_xlog_newroot(), and btree_xlog_split().
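_bt_restore_page() rebuilds a page from a run of tuples carried in the WAL record. As we read the PostgreSQL source, the tuples are logged in the reverse of their final item-number order, so the function scans forward once to find tuple boundaries (IndexTupleSize() plus MAXALIGN) and then adds the tuples back last-to-first. The sketch assumes a hypothetical item format with a 2-byte length header in place of a real IndexTupleData, and fills an array of slots instead of calling PageAddItem().

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SKETCH_MAXALIGN(len) (((size_t) (len) + 7) & ~(size_t) 7)
#define SKETCH_MAX_ITEMS 64

/*
 * Hypothetical item format: a 2-byte length header (covering the whole item,
 * header included) followed by payload, padded to an 8-byte boundary.
 * Fills 'slots' in page order and returns the item count, or -1 on bad input.
 */
static int
sketch_restore_page(const unsigned char *from, size_t len,
                    const unsigned char *slots[SKETCH_MAX_ITEMS])
{
    const unsigned char *items[SKETCH_MAX_ITEMS];
    const unsigned char *end = from + len;
    int nitems = 0;

    /* Pass 1: scan forward, since item boundaries are only known this way. */
    while (from < end)
    {
        uint16_t sz;

        if (nitems >= SKETCH_MAX_ITEMS || (size_t) (end - from) < sizeof(sz))
            return -1;
        memcpy(&sz, from, sizeof(sz));   /* 'from' may be unaligned */
        if (sz < sizeof(sz) || SKETCH_MAXALIGN(sz) > (size_t) (end - from))
            return -1;
        items[nitems++] = from;
        from += SKETCH_MAXALIGN(sz);
    }

    /* Pass 2: the last item in the buffer becomes the first slot. */
    for (int i = nitems - 1; i >= 0; i--)
        slots[nitems - 1 - i] = items[i];
    return nitems;
}
```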

◆ btree_mask()

void btree_mask(char *pagedata, BlockNumber blkno)

Definition at line 1081 of file nbtxlog.c.

References BTPageGetOpaque, fb(), mask_lp_flags(), mask_page_hint_bits(), mask_page_lsn_and_checksum(), mask_unused_space(), and P_ISLEAF.
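btree_mask() normalizes the parts of a page that may legitimately differ between the primary and a replayed copy, so the two images can be compared during WAL consistency checking. A minimal sketch, assuming a hypothetical SketchPage with one field per masked region; each comment names the real mask_*() helper the step stands in for, and the leaf-only step mirrors the P_ISLEAF reference. Masked regions are set to 0 here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical page model: one field per region that masking touches. */
typedef struct SketchPage
{
    uint64_t lsn;
    uint16_t checksum;
    uint16_t hint_flags;     /* header hint bits */
    bool     is_leaf;
    uint8_t  lp_dead[8];     /* per-item LP_DEAD hints (set on leaf pages) */
    uint8_t  free_space[16]; /* the unused hole in the middle of the page */
    int      payload;        /* real content that must match after replay */
} SketchPage;

static void
sketch_btree_mask(SketchPage *p)
{
    p->lsn = 0;                                     /* mask_page_lsn_and_checksum() */
    p->checksum = 0;
    p->hint_flags = 0;                              /* mask_page_hint_bits() */
    memset(p->free_space, 0, sizeof p->free_space); /* mask_unused_space() */
    if (p->is_leaf)
        memset(p->lp_dead, 0, sizeof p->lp_dead);   /* mask_lp_flags() */
}

/* Field-wise comparison, avoiding memcmp() over struct padding. */
static bool
sketch_pages_equal(const SketchPage *a, const SketchPage *b)
{
    return a->lsn == b->lsn && a->checksum == b->checksum &&
           a->hint_flags == b->hint_flags && a->is_leaf == b->is_leaf &&
           memcmp(a->lp_dead, b->lp_dead, sizeof a->lp_dead) == 0 &&
           memcmp(a->free_space, b->free_space, sizeof a->free_space) == 0 &&
           a->payload == b->payload;
}
```

Masking both sides and then comparing means only genuine content differences (payload here) are reported.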

◆ btree_redo()

void btree_redo(XLogReaderState *record)

Definition at line 1004 of file nbtxlog.c.

References _bt_restore_meta(), btree_xlog_dedup(), btree_xlog_delete(), btree_xlog_insert(), btree_xlog_mark_page_halfdead(), btree_xlog_newroot(), btree_xlog_reuse_page(), btree_xlog_split(), btree_xlog_unlink_page(), btree_xlog_vacuum(), elog, fb(), MemoryContextReset(), MemoryContextSwitchTo(), opCtx, PANIC, XLOG_BTREE_DEDUP, XLOG_BTREE_DELETE, XLOG_BTREE_INSERT_LEAF, XLOG_BTREE_INSERT_META, XLOG_BTREE_INSERT_POST, XLOG_BTREE_INSERT_UPPER, XLOG_BTREE_MARK_PAGE_HALFDEAD, XLOG_BTREE_META_CLEANUP, XLOG_BTREE_NEWROOT, XLOG_BTREE_REUSE_PAGE, XLOG_BTREE_SPLIT_L, XLOG_BTREE_SPLIT_R, XLOG_BTREE_UNLINK_PAGE, XLOG_BTREE_UNLINK_PAGE_META, XLOG_BTREE_VACUUM, and XLogRecGetInfo.
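btree_redo() is the B-tree resource manager's redo entry point: judging by its references, it switches into opCtx, dispatches on XLogRecGetInfo() to one replay routine, then resets opCtx. The dispatch can be sketched as a switch. The opcode enum below is hypothetical (the real XLOG_BTREE_* values live in nbtxlog.h), and the grouping follows the reference lists on this page; for example, XLOG_BTREE_UNLINK_PAGE_META is also referenced by btree_xlog_unlink_page(). Mapping XLOG_BTREE_META_CLEANUP to _bt_restore_meta() is an assumption, since that is the only remaining callee.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical opcodes; the real XLOG_BTREE_* constants are in nbtxlog.h. */
typedef enum SketchBtreeOp
{
    OP_INSERT_LEAF, OP_INSERT_UPPER, OP_INSERT_META, OP_INSERT_POST,
    OP_SPLIT_L, OP_SPLIT_R, OP_DEDUP, OP_VACUUM, OP_DELETE,
    OP_MARK_PAGE_HALFDEAD, OP_UNLINK_PAGE, OP_UNLINK_PAGE_META,
    OP_NEWROOT, OP_REUSE_PAGE, OP_META_CLEANUP
} SketchBtreeOp;

/*
 * Names the replay routine for an opcode. NULL marks the case where the
 * real btree_redo() reports elog(PANIC, ...) for an unknown op code.
 */
static const char *
sketch_redo_target(int op)
{
    switch (op)
    {
        case OP_INSERT_LEAF:
        case OP_INSERT_UPPER:
        case OP_INSERT_META:
        case OP_INSERT_POST:
            return "btree_xlog_insert";
        case OP_SPLIT_L:
        case OP_SPLIT_R:
            return "btree_xlog_split";
        case OP_DEDUP:
            return "btree_xlog_dedup";
        case OP_VACUUM:
            return "btree_xlog_vacuum";
        case OP_DELETE:
            return "btree_xlog_delete";
        case OP_MARK_PAGE_HALFDEAD:
            return "btree_xlog_mark_page_halfdead";
        case OP_UNLINK_PAGE:
        case OP_UNLINK_PAGE_META:
            return "btree_xlog_unlink_page";
        case OP_NEWROOT:
            return "btree_xlog_newroot";
        case OP_REUSE_PAGE:
            return "btree_xlog_reuse_page";
        case OP_META_CLEANUP:
            return "_bt_restore_meta";   /* assumed mapping */
        default:
            return NULL;
    }
}
```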

◆ btree_xlog_cleanup()

Definition at line 1071 of file nbtxlog.c.

References fb(), MemoryContextDelete(), and opCtx.

◆ btree_xlog_dedup()

static

Definition at line 454 of file nbtxlog.c.

References _bt_dedup_finish_pending(), _bt_dedup_save_htid(), _bt_dedup_start_pending(), Assert, BLK_NEEDS_REDO, BTMaxItemSize, BTPageGetOpaque, buf, BufferGetPage(), BufferIsValid(), elog, XLogReaderState::EndRecPtr, ERROR, fb(), intervals, InvalidOffsetNumber, ItemIdGetLength, MarkBufferDirty(), OffsetNumberNext, P_FIRSTDATAKEY, P_HAS_GARBAGE, P_HIKEY, P_RIGHTMOST, PageAddItem, PageGetItem(), PageGetItemId(), PageGetMaxOffsetNumber(), PageGetTempPageCopySpecial(), PageRestoreTempPage(), PageSetLSN(), palloc(), palloc_object, UnlockReleaseBuffer(), XLogReadBufferForRedo(), XLogRecGetBlockData(), and XLogRecGetData.

Referenced by btree_redo().
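btree_xlog_dedup() replays a deduplication pass. Its references (_bt_dedup_start_pending(), _bt_dedup_save_htid(), _bt_dedup_finish_pending(), intervals) suggest that the record lists intervals of consecutive items to merge into posting-list tuples, and that the page is rebuilt on a temporary copy. The sketch models each item as a key plus heap TIDs (plain ints) and uses hypothetical SketchItem/SketchInterval types with 0-based positions, whereas the real code uses 1-based OffsetNumbers.

```c
#include <assert.h>

#define SKETCH_MAX_TIDS 8

typedef struct SketchItem
{
    int key;
    int ntids;
    int tids[SKETCH_MAX_TIDS];   /* stands in for heap TIDs */
} SketchItem;

typedef struct SketchInterval
{
    int baseoff;   /* 0-based position of the first item to merge */
    int nitems;    /* number of consecutive items merged into one */
} SketchInterval;

/*
 * Copies 'in' to 'out', collapsing each interval into a single posting item.
 * Intervals must be sorted by baseoff and must not overlap.
 * Returns the number of items written to 'out'.
 */
static int
sketch_apply_dedup(const SketchItem *in, int nin,
                   const SketchInterval *iv, int niv, SketchItem *out)
{
    int nout = 0;
    int next = 0;

    for (int off = 0; off < nin;)
    {
        if (next < niv && iv[next].baseoff == off)
        {
            SketchItem merged = in[off];        /* "start pending" */

            for (int k = 1; k < iv[next].nitems; k++)
            {
                const SketchItem *it = &in[off + k];

                assert(it->key == merged.key);  /* only equal keys merge */
                for (int t = 0; t < it->ntids; t++)
                {
                    assert(merged.ntids < SKETCH_MAX_TIDS);
                    merged.tids[merged.ntids++] = it->tids[t]; /* "save htid" */
                }
            }
            out[nout++] = merged;               /* "finish pending" */
            off += iv[next].nitems;
            next++;
        }
        else
            out[nout++] = in[off++];            /* untouched item */
    }
    return nout;
}
```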

◆ btree_xlog_delete()

static

Definition at line 640 of file nbtxlog.c.

References BLK_NEEDS_REDO, BTPageGetOpaque, BTPageOpaqueData::btpo_flags, btree_xlog_updates(), BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), InHotStandby, MarkBufferDirty(), PageIndexMultiDelete(), PageSetLSN(), ResolveRecoveryConflictWithSnapshot(), UnlockReleaseBuffer(), XLogReadBufferForRedo(), XLogRecGetBlockData(), XLogRecGetBlockTag(), and XLogRecGetData.

Referenced by btree_redo().
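btree_xlog_delete() removes tuples with PageIndexMultiDelete(), after resolving snapshot conflicts when InHotStandby holds. The sketch shows the single-pass compaction idea behind a multi-delete, assuming an int array in place of line pointers and ascending, duplicate-free 0-based positions; sketch_multi_delete is a hypothetical helper.

```c
#include <assert.h>

/*
 * Removes the items at the given positions in one pass, shifting the
 * survivors left. 'delpos' must be ascending with no duplicates.
 * Returns the new item count.
 */
static int
sketch_multi_delete(int *items, int nitems, const int *delpos, int ndel)
{
    int keep = 0;
    int d = 0;

    for (int i = 0; i < nitems; i++)
    {
        if (d < ndel && delpos[d] == i)
        {
            d++;                 /* drop this item */
            continue;
        }
        items[keep++] = items[i];
    }
    assert(d == ndel);           /* every requested position was consumed */
    return keep;
}
```

One pass over the page costs O(n), whereas deleting the same k items one at a time would shift the tail k times.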

◆ btree_xlog_insert()

static

Definition at line 158 of file nbtxlog.c.

References _bt_clear_incomplete_split(), _bt_restore_meta(), _bt_swap_posting(), Assert, BLK_NEEDS_REDO, BufferGetPage(), BufferIsValid(), CopyIndexTuple(), elog, XLogReaderState::EndRecPtr, fb(), IndexTupleSize(), InvalidOffsetNumber, MarkBufferDirty(), MAXALIGN, OffsetNumberPrev, PageAddItem, PageGetItem(), PageGetItemId(), PageSetLSN(), PANIC, UnlockReleaseBuffer(), XLogReadBufferForRedo(), XLogRecGetBlockData(), and XLogRecGetData.

Referenced by btree_redo().
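btree_xlog_insert() places one tuple at the offset recorded in WAL with PageAddItem(), and its references (InvalidOffsetNumber, PANIC) indicate that a failed add is fatal. The sketch models the non-overwrite case on an int array: items at and after the target offset shift up by one. The posting-list path through _bt_swap_posting() is not modeled, and sketch_add_item is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/*
 * Inserts 'value' so it lands at 1-based offset 'offnum', shifting later
 * items up by one. Returns 0 on success, or -1 if the page is full or the
 * offset is out of range (the real redo code would PANIC).
 */
static int
sketch_add_item(int *items, int *nitems, int capacity, int value, int offnum)
{
    if (*nitems >= capacity || offnum < 1 || offnum > *nitems + 1)
        return -1;
    memmove(&items[offnum], &items[offnum - 1],
            (size_t) (*nitems - (offnum - 1)) * sizeof(int));
    items[offnum - 1] = value;
    (*nitems)++;
    return 0;
}
```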

◆ btree_xlog_mark_page_halfdead()

static

Definition at line 705 of file nbtxlog.c.

References _bt_pageinit(), BLK_NEEDS_REDO, BTP_HALF_DEAD, BTP_LEAF, BTPageGetOpaque, BTreeTupleGetDownLink(), BTreeTupleSetDownLink(), BTreeTupleSetTopParent(), BufferGetPage(), BufferGetPageSize(), BufferIsValid(), elog, XLogReaderState::EndRecPtr, ERROR, fb(), InvalidOffsetNumber, MarkBufferDirty(), MemSet, OffsetNumberNext, P_HIKEY, PageAddItem, PageGetItem(), PageGetItemId(), PageIndexTupleDelete(), PageSetLSN(), UnlockReleaseBuffer(), XLogInitBufferForRedo(), XLogReadBufferForRedo(), and XLogRecGetData.

Referenced by btree_redo().
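In the parent page, btree_xlog_mark_page_halfdead() removes the downlink to the subtree being deleted. Based on its references (BTreeTupleGetDownLink(), BTreeTupleSetDownLink(), OffsetNumberNext, PageIndexTupleDelete()), the pivot at the recorded offset takes over the next pivot's downlink and the next pivot is deleted, so the right sibling inherits the deleted subtree's key space. A sketch with the parent modeled as a hypothetical array of child block numbers and 0-based positions:

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/*
 * 'poff' is the position of the pivot pointing at the subtree being
 * removed; the following pivot points at its right sibling.
 */
static void
sketch_remove_downlink(unsigned *downlinks, int *n, int poff)
{
    assert(poff + 1 < *n);
    unsigned rightsib = downlinks[poff + 1];

    downlinks[poff] = rightsib;     /* BTreeTupleSetDownLink() analogue */
    memmove(&downlinks[poff + 1], &downlinks[poff + 2],
            (size_t) (*n - poff - 2) * sizeof(unsigned)); /* delete next pivot */
    (*n)--;
}
```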

◆ btree_xlog_newroot()

static

Definition at line 927 of file nbtxlog.c.

References _bt_clear_incomplete_split(), _bt_pageinit(), _bt_restore_meta(), _bt_restore_page(), BTP_LEAF, BTP_ROOT, BTPageGetOpaque, BufferGetPage(), BufferGetPageSize(), XLogReaderState::EndRecPtr, fb(), len, MarkBufferDirty(), P_NONE, PageSetLSN(), UnlockReleaseBuffer(), XLogInitBufferForRedo(), XLogRecGetBlockData(), and XLogRecGetData.

Referenced by btree_redo().

◆ btree_xlog_reuse_page()

static

Definition at line 993 of file nbtxlog.c.

References fb(), InHotStandby, ResolveRecoveryConflictWithSnapshotFullXid(), and XLogRecGetData.

Referenced by btree_redo().
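btree_xlog_reuse_page() touches no buffers. Its only work, when InHotStandby holds, is to call ResolveRecoveryConflictWithSnapshotFullXid() so that queries which might still need a recycled page are cancelled. The conflict test can be sketched as follows, assuming 64-bit transaction IDs that never wrap, a conflict whenever a backend's xmin is at or before the record's horizon, and 0 meaning "no snapshot"; the real function's bookkeeping and cancellation steps are omitted.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/*
 * Marks which standby backends conflict with a recycled page, given each
 * backend's snapshot xmin (0 = none) and the horizon from the WAL record.
 * Returns the number of conflicting backends.
 */
static int
sketch_conflicting_backends(const uint64_t *xmins, int n, uint64_t horizon,
                            bool *conflict)
{
    int count = 0;

    for (int i = 0; i < n; i++)
    {
        conflict[i] = xmins[i] != 0 && xmins[i] <= horizon;
        count += conflict[i];
    }
    return count;
}
```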

◆ btree_xlog_split()

static

Definition at line 247 of file nbtxlog.c.

References _bt_clear_incomplete_split(), _bt_pageinit(), _bt_restore_page(), _bt_swap_posting(), Assert, BLK_NEEDS_REDO, BTP_INCOMPLETE_SPLIT, BTP_LEAF, BTPageGetOpaque, buf, BufferGetPage(), BufferGetPageSize(), BufferIsValid(), CopyIndexTuple(), elog, XLogReaderState::EndRecPtr, ERROR, fb(), IndexTupleSize(), InvalidOffsetNumber, ItemIdGetLength, MarkBufferDirty(), MAXALIGN, OffsetNumberNext, OffsetNumberPrev, P_FIRSTDATAKEY, P_HIKEY, P_NONE, PageAddItem, PageGetItem(), PageGetItemId(), PageGetTempPageCopySpecial(), PageRestoreTempPage(), PageSetLSN(), UnlockReleaseBuffer(), XLogInitBufferForRedo(), XLogReadBufferForRedo(), XLogRecGetBlockData(), XLogRecGetBlockTag(), XLogRecGetBlockTagExtended(), and XLogRecGetData.

Referenced by btree_redo().
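btree_xlog_split() rebuilds the left half on a temporary page (PageGetTempPageCopySpecial(), then PageRestoreTempPage()) and restores the right half from the record with _bt_restore_page(). The sketch covers the left half only: the original items before the first right-half item, with the new item slotted in when it went left, including the case where it lands at the very end. It uses 0-based positions on an int array and omits the high key, the posting-list swap, and setting BTP_INCOMPLETE_SPLIT; sketch_rebuild_left is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/*
 * Rebuilds the left half in item order: original items [0, firstright)
 * plus, if newitemonleft, 'newitem' placed before the item currently at
 * 'newitemoff'. Returns the number of items written to 'left'.
 */
static int
sketch_rebuild_left(const int *orig, int firstright, bool newitemonleft,
                    int newitemoff, int newitem, int *left)
{
    int n = 0;
    int off;

    for (off = 0; off < firstright; off++)
    {
        if (newitemonleft && off == newitemoff)
            left[n++] = newitem;
        left[n++] = orig[off];
    }
    /* cope with the new item going at the end of the left half */
    if (newitemonleft && off == newitemoff)
        left[n++] = newitem;
    return n;
}
```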

◆ btree_xlog_startup()

Definition at line 1063 of file nbtxlog.c.

References ALLOCSET_DEFAULT_SIZES, AllocSetContextCreate, CurrentMemoryContext, and opCtx.

◆ btree_xlog_unlink_page()

static

Definition at line 789 of file nbtxlog.c.

References _bt_pageinit(), _bt_restore_meta(), Assert, BLK_NEEDS_REDO, BlockNumberIsValid(), BTP_HALF_DEAD, BTP_LEAF, BTPageGetOpaque, BTPageSetDeleted(), BTreeTupleSetTopParent(), BufferGetPage(), BufferGetPageSize(), BufferIsValid(), elog, XLogReaderState::EndRecPtr, ERROR, fb(), InvalidBuffer, InvalidOffsetNumber, MarkBufferDirty(), MemSet, P_HIKEY, P_NONE, PageAddItem, PageSetLSN(), UnlockReleaseBuffer(), XLOG_BTREE_UNLINK_PAGE_META, XLogInitBufferForRedo(), XLogReadBufferForRedo(), XLogRecGetData, and XLogRecHasBlockRef.

Referenced by btree_redo().
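btree_xlog_unlink_page() splices the target page out of its level: the left sibling's right-link and the right sibling's left-link are redirected around it, and the target is marked deleted with BTPageSetDeleted(). A sketch over a hypothetical array of pages indexed by block number, with 0 standing in for P_NONE; the metapage update and the leaf top-parent bookkeeping are omitted.

```c
#include <assert.h>
#include <stdbool.h>

#define SKETCH_P_NONE 0u   /* "no sibling", like P_NONE */

typedef struct SketchBtPage
{
    unsigned prev;   /* models btpo_prev */
    unsigned next;   /* models btpo_next */
    bool deleted;
} SketchBtPage;

/* pages[] is indexed by block number; block 0 is unused. */
static void
sketch_unlink(SketchBtPage *pages, unsigned target)
{
    unsigned left = pages[target].prev;
    unsigned right = pages[target].next;

    if (left != SKETCH_P_NONE)
        pages[left].next = right;
    /* nbtree never deletes the rightmost page of a level */
    assert(right != SKETCH_P_NONE);
    pages[right].prev = left;
    pages[target].deleted = true;     /* BTPageSetDeleted() analogue */
}
```

The target keeps its own sibling links, so a scan that is already positioned on it can still step right.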

◆ btree_xlog_updates()

static

Definition at line 546 of file nbtxlog.c.

References _bt_update_posting(), elog, fb(), i, IndexTupleSize(), MAXALIGN, PageGetItem(), PageGetItemId(), PageIndexTupleOverwrite(), palloc(), PANIC, pfree(), and SizeOfBtreeUpdate.

Referenced by btree_xlog_delete(), and btree_xlog_vacuum().
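btree_xlog_updates() overwrites posting-list tuples in place (PageIndexTupleOverwrite()) after dropping some of their TIDs with _bt_update_posting(), so the offsets of other items do not move. Each per-tuple update entry is variable length, which is why its references include SizeOfBtreeUpdate. The sketch assumes a hypothetical packed format of one uint16 count followed by that many ascending uint16 TID indexes per updated item, and steps through it the same way.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SKETCH_MAX_TIDS 8

typedef struct SketchPosting
{
    int ntids;
    int tids[SKETCH_MAX_TIDS];
} SketchPosting;

static void
sketch_apply_updates(SketchPosting *items, const int *updatedoffs,
                     int nupdated, const unsigned char *updates)
{
    for (int i = 0; i < nupdated; i++)
    {
        SketchPosting *p = &items[updatedoffs[i]];
        uint16_t ndel;
        int keep = 0;
        int d = 0;

        memcpy(&ndel, updates, sizeof(ndel));        /* entry header */
        const unsigned char *idx = updates + sizeof(ndel);

        for (int t = 0; t < p->ntids; t++)
        {
            uint16_t victim = 0;

            if (d < ndel)
                memcpy(&victim, idx + (size_t) d * sizeof(uint16_t),
                       sizeof(victim));
            if (d < ndel && victim == t)
            {
                d++;                                 /* drop this TID */
                continue;
            }
            p->tids[keep++] = p->tids[t];
        }
        assert(d == ndel && keep > 0);  /* an update never empties a tuple */
        p->ntids = keep;                /* overwrite in place */

        /* advance past this variable-length entry */
        updates += sizeof(ndel) + (size_t) ndel * sizeof(uint16_t);
    }
}
```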

◆ btree_xlog_vacuum()

static

Definition at line 586 of file nbtxlog.c.

References BLK_NEEDS_REDO, BTPageGetOpaque, BTPageOpaqueData::btpo_cycleid, BTPageOpaqueData::btpo_flags, btree_xlog_updates(), BufferGetPage(), BufferIsValid(), XLogReaderState::EndRecPtr, fb(), MarkBufferDirty(), PageIndexMultiDelete(), PageSetLSN(), RBM_NORMAL, UnlockReleaseBuffer(), XLogReadBufferForRedoExtended(), XLogRecGetBlockData(), and XLogRecGetData.

Referenced by btree_redo().

Variable Documentation

◆ opCtx

static

Definition at line 25 of file nbtxlog.c.

Referenced by btree_redo(), btree_xlog_cleanup(), and btree_xlog_startup().
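opCtx ties the three non-static entry points together. Going by the reference lists, btree_xlog_startup() creates it (AllocSetContextCreate()), btree_redo() switches into it and resets it after every record, and btree_xlog_cleanup() deletes it. The lifecycle can be sketched with a hypothetical bump arena standing in for a MemoryContext; only the create/reset/delete pattern is meant to carry over.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical bump arena standing in for a MemoryContext. */
typedef struct SketchArena
{
    char  *base;
    size_t used;
    size_t size;
} SketchArena;

static SketchArena *sketch_op_ctx;   /* plays the role of the static opCtx */

static void
sketch_startup(size_t size)          /* cf. btree_xlog_startup() */
{
    sketch_op_ctx = malloc(sizeof *sketch_op_ctx);
    assert(sketch_op_ctx != NULL);
    sketch_op_ctx->base = malloc(size);
    assert(sketch_op_ctx->base != NULL);
    sketch_op_ctx->used = 0;
    sketch_op_ctx->size = size;
}

static void *
sketch_alloc(size_t n)
{
    n = (n + 7) & ~(size_t) 7;       /* keep allocations 8-byte aligned */
    if (n > sketch_op_ctx->size - sketch_op_ctx->used)
        return NULL;                 /* palloc() would raise an ERROR instead */
    void *p = sketch_op_ctx->base + sketch_op_ctx->used;
    sketch_op_ctx->used += n;
    return p;
}

/* One record's worth of scratch allocations, then a bulk reset. */
static void
sketch_redo_one(size_t scratch)      /* cf. btree_redo() */
{
    void *tmp = sketch_alloc(scratch);

    assert(tmp != NULL);
    (void) tmp;
    sketch_op_ctx->used = 0;         /* cf. MemoryContextReset(opCtx) */
}

static void
sketch_cleanup(void)                 /* cf. btree_xlog_cleanup() */
{
    free(sketch_op_ctx->base);
    free(sketch_op_ctx);
    sketch_op_ctx = NULL;
}
```

Resetting once per record lets the replay routines allocate freely without tracking individual frees, which matches the references to palloc() without a matching pfree() in functions such as btree_xlog_dedup().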