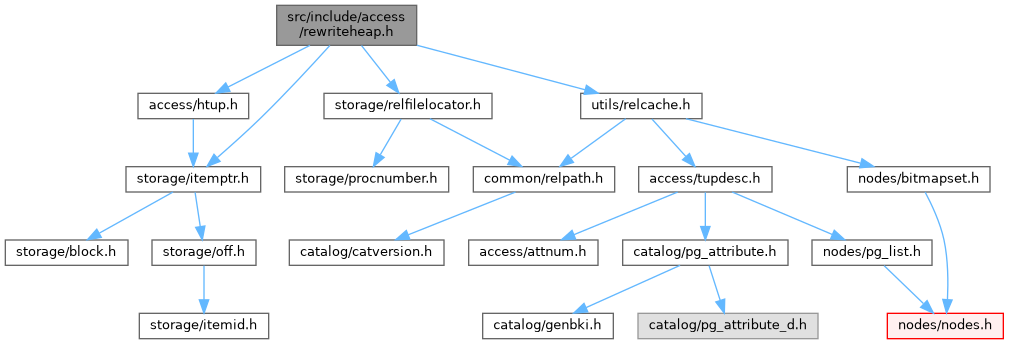

#include "access/htup.h"
#include "storage/itemptr.h"
#include "storage/relfilelocator.h"
#include "utils/relcache.h"

Data Structures

| struct | LogicalRewriteMappingData |

Macros

| #define | LOGICAL_REWRITE_FORMAT "map-%x-%x-%X_%X-%x-%x" |

Typedefs

| typedef struct RewriteStateData * | RewriteState |

| typedef struct LogicalRewriteMappingData | LogicalRewriteMappingData |

Macro Definition Documentation

◆ LOGICAL_REWRITE_FORMAT

| #define LOGICAL_REWRITE_FORMAT "map-%x-%x-%X_%X-%x-%x" |
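
The macro is a printf-style pattern with six hexadecimal fields, and CheckPointLogicalRewriteHeap() lists it among its references. The sketch below shows how such a pattern can be used both to build and to parse a file name with the standard `snprintf` and `sscanf` calls. The `MappingFileName` struct, its field names, and the two helper functions are hypothetical and exist only for illustration. This page does not say what each field means; the names here assume database OID, relation OID, the two halves of an LSN, and two transaction IDs.

```c
#include <stdio.h>

/* Pattern copied from rewriteheap.h. */
#define LOGICAL_REWRITE_FORMAT "map-%x-%x-%X_%X-%x-%x"

/*
 * Hypothetical container for the six fields of the pattern.
 * This is not a PostgreSQL type; the field meanings are assumptions.
 */
typedef struct MappingFileName
{
    unsigned int dboid;       /* assumed: database OID */
    unsigned int relid;       /* assumed: relation OID */
    unsigned int lsn_hi;      /* assumed: upper 32 bits of an LSN */
    unsigned int lsn_lo;      /* assumed: lower 32 bits of an LSN */
    unsigned int mapped_xid;  /* assumed: transaction ID being mapped */
    unsigned int create_xid;  /* assumed: transaction ID that wrote the file */
} MappingFileName;

/* Format the fields into buf; returns the snprintf() result. */
static int
format_mapping_name(char *buf, size_t buflen, const MappingFileName *f)
{
    return snprintf(buf, buflen, LOGICAL_REWRITE_FORMAT,
                    f->dboid, f->relid, f->lsn_hi, f->lsn_lo,
                    f->mapped_xid, f->create_xid);
}

/* Parse a file name; returns 1 if all six fields matched, else 0. */
static int
parse_mapping_name(const char *name, MappingFileName *f)
{
    return sscanf(name, LOGICAL_REWRITE_FORMAT,
                  &f->dboid, &f->relid, &f->lsn_hi, &f->lsn_lo,
                  &f->mapped_xid, &f->create_xid) == 6;
}
```

With the illustrative values `{5, 16384, 0, 0x16B3748, 742, 743}`, `format_mapping_name()` writes `map-5-4000-0_16B3748-2e6-2e7`, and `parse_mapping_name()` recovers the same six values from that string. A name that does not start with `map-` makes `sscanf` match no fields, so the parser returns 0.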

Typedef Documentation

◆ LogicalRewriteMappingData

| typedef struct LogicalRewriteMappingData LogicalRewriteMappingData |

◆ RewriteState

| typedef struct RewriteStateData* RewriteState |

Definition at line 22 of file rewriteheap.h.

Function Documentation

◆ begin_heap_rewrite()

Definition at line 234 of file rewriteheap.c.

References ALLOCSET_DEFAULT_SIZES, AllocSetContextCreate, CurrentMemoryContext, fb(), HASH_BLOBS, HASH_CONTEXT, hash_create(), HASH_ELEM, logical_begin_heap_rewrite(), MAIN_FORKNUM, MemoryContextSwitchTo(), palloc0_object, RelationGetNumberOfBlocks, and smgr_bulk_start_rel().

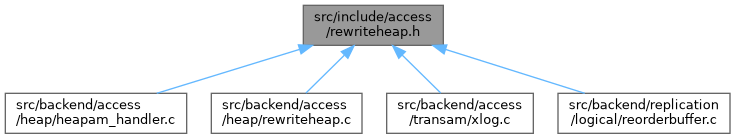

Referenced by heapam_relation_copy_for_cluster().

◆ CheckPointLogicalRewriteHeap()

Definition at line 1157 of file rewriteheap.c.

References AllocateDir(), CloseTransientFile(), data_sync_elevel(), DEBUG1, elog, ereport, errcode_for_file_access(), errmsg(), ERROR, fb(), fd(), FreeDir(), fsync_fname(), get_dirent_type(), GetRedoRecPtr(), LOGICAL_REWRITE_FORMAT, MAXPGPATH, OpenTransientFile(), PG_BINARY, pg_fsync(), PG_LOGICAL_MAPPINGS_DIR, PGFILETYPE_ERROR, PGFILETYPE_REG, pgstat_report_wait_end(), pgstat_report_wait_start(), ReadDir(), ReplicationSlotsComputeLogicalRestartLSN(), snprintf, and XLogRecPtrIsValid.

Referenced by CheckPointGuts().

◆ end_heap_rewrite()

Definition at line 297 of file rewriteheap.c.

References fb(), hash_seq_init(), hash_seq_search(), ItemPointerSetInvalid(), logical_end_heap_rewrite(), MemoryContextDelete(), raw_heap_insert(), smgr_bulk_finish(), and smgr_bulk_write().

Referenced by heapam_relation_copy_for_cluster().

◆ rewrite_heap_dead_tuple()

Definition at line 546 of file rewriteheap.c.

References Assert, fb(), HASH_FIND, HASH_REMOVE, hash_search(), heap_freetuple(), and HeapTupleHeaderGetXmin().

Referenced by heapam_relation_copy_for_cluster().

◆ rewrite_heap_tuple()

Definition at line 341 of file rewriteheap.c.

References Assert, fb(), HASH_ENTER, HASH_FIND, HASH_REMOVE, hash_search(), heap_copytuple(), heap_freetuple(), heap_freeze_tuple(), HEAP_UPDATED, HEAP_XACT_MASK, HEAP_XMAX_INVALID, HeapTupleHeaderGetUpdateXid(), HeapTupleHeaderGetXmin(), HeapTupleHeaderIndicatesMovedPartitions(), HeapTupleHeaderIsOnlyLocked(), ItemPointerEquals(), ItemPointerSetInvalid(), logical_rewrite_heap_tuple(), MemoryContextSwitchTo(), raw_heap_insert(), and TransactionIdPrecedes().

Referenced by reform_and_rewrite_tuple().