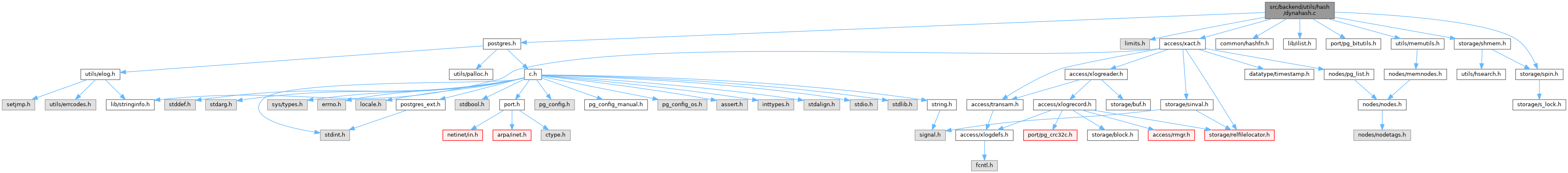

#include "postgres.h"

#include <limits.h>

#include "access/xact.h"

#include "common/hashfn.h"

#include "lib/ilist.h"

#include "port/pg_bitutils.h"

#include "storage/shmem.h"

#include "storage/spin.h"

#include "utils/memutils.h"

Data Structures

| struct | FreeListData |

| struct | HASHHDR |

| struct | HTAB |

Macros

| #define | DEF_SEGSIZE 256 |

| #define | DEF_SEGSIZE_SHIFT 8 /* must be log2(DEF_SEGSIZE) */ |

| #define | DEF_DIRSIZE 256 |

| #define | NUM_FREELISTS 32 |

| #define | IS_PARTITIONED(hctl) ((hctl)->num_partitions != 0) |

| #define | FREELIST_IDX(hctl, hashcode) (IS_PARTITIONED(hctl) ? (hashcode) % NUM_FREELISTS : 0) |

| #define | ELEMENTKEY(helem) (((char *)(helem)) + MAXALIGN(sizeof(HASHELEMENT))) |

| #define | ELEMENT_FROM_KEY(key) ((HASHELEMENT *) (((char *) (key)) - MAXALIGN(sizeof(HASHELEMENT)))) |

| #define | MOD(x, y) ((x) & ((y)-1)) |

| #define | MAX_SEQ_SCANS 100 |

Typedefs

| typedef HASHELEMENT * | HASHBUCKET |

| typedef HASHBUCKET * | HASHSEGMENT |

Variables

| static MemoryContext | CurrentDynaHashCxt = NULL |

| static HTAB * | seq_scan_tables [MAX_SEQ_SCANS] |

| static int | seq_scan_level [MAX_SEQ_SCANS] |

| static int | num_seq_scans = 0 |

Macro Definition Documentation

◆ DEF_DIRSIZE

| #define DEF_DIRSIZE 256 |

Definition at line 125 of file dynahash.c.

◆ DEF_SEGSIZE

| #define DEF_SEGSIZE 256 |

Definition at line 123 of file dynahash.c.

◆ DEF_SEGSIZE_SHIFT

| #define DEF_SEGSIZE_SHIFT 8 /* must be log2(DEF_SEGSIZE) */ |

Definition at line 124 of file dynahash.c.
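Because `DEF_SEGSIZE_SHIFT` must equal log2(`DEF_SEGSIZE`), a bucket number can be split into a segment number and an offset within that segment using a shift and a mask. The following is a minimal sketch of that arithmetic, not code taken from dynahash.c; the helper names `segment_number` and `segment_index` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define DEF_SEGSIZE        256
#define DEF_SEGSIZE_SHIFT  8    /* must be log2(DEF_SEGSIZE) */
#define MOD(x, y)          ((x) & ((y)-1))

/* Hypothetical helper: index of the directory segment holding this bucket. */
static uint32_t
segment_number(uint32_t bucket)
{
    /* The shift is only valid if it matches the segment size. */
    assert((1u << DEF_SEGSIZE_SHIFT) == DEF_SEGSIZE);
    return bucket >> DEF_SEGSIZE_SHIFT;
}

/* Hypothetical helper: position of the bucket inside its segment. */
static uint32_t
segment_index(uint32_t bucket)
{
    return MOD(bucket, DEF_SEGSIZE);
}
```

With these defaults, bucket 1000 would land in segment 3 at offset 232, since 3 * 256 + 232 = 1000.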

◆ ELEMENT_FROM_KEY

| #define ELEMENT_FROM_KEY | ( | key | ) | ((HASHELEMENT *) (((char *) (key)) - MAXALIGN(sizeof(HASHELEMENT)))) |

Definition at line 260 of file dynahash.c.

◆ ELEMENTKEY

| #define ELEMENTKEY | ( | helem | ) | (((char *)(helem)) + MAXALIGN(sizeof(HASHELEMENT))) |

Definition at line 255 of file dynahash.c.
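`ELEMENTKEY` and `ELEMENT_FROM_KEY` are inverses: one steps forward from an element header to the key stored after it, the other steps back. The sketch below illustrates the round trip under stated assumptions: the `HASHELEMENT` struct and the 8-byte `MAXALIGN` are stand-ins written for this example, not the definitions PostgreSQL uses on every platform, and `key_round_trip_ok` is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Stand-in for the element header (assumption: a link pointer and a hash value). */
typedef struct HASHELEMENT
{
    struct HASHELEMENT *link;
    uint32_t    hashvalue;
} HASHELEMENT;

/* Stand-in for MAXALIGN: round up to a multiple of 8 bytes (assumed alignment). */
#define MAXALIGN(len) (((size_t) (len) + 7) & ~((size_t) 7))

#define ELEMENTKEY(helem) (((char *)(helem)) + MAXALIGN(sizeof(HASHELEMENT)))
#define ELEMENT_FROM_KEY(key) ((HASHELEMENT *) (((char *) (key)) - MAXALIGN(sizeof(HASHELEMENT))))

/* Hypothetical helper: returns 1 if key -> element recovers the original pointer. */
static int
key_round_trip_ok(size_t entry_size)
{
    HASHELEMENT *elem = malloc(MAXALIGN(sizeof(HASHELEMENT)) + entry_size);
    char       *key;
    int         ok;

    assert(elem != NULL);
    key = ELEMENTKEY(elem);     /* key starts right after the aligned header */
    ok = (ELEMENT_FROM_KEY(key) == elem) &&
        ((size_t) (key - (char *) elem) == MAXALIGN(sizeof(HASHELEMENT)));
    free(elem);
    return ok;
}
```

The point of the layout is that callers only ever see the key pointer, while the table can still reach the header with constant-offset arithmetic.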

◆ FREELIST_IDX

| #define FREELIST_IDX | ( | hctl, hashcode | ) | (IS_PARTITIONED(hctl) ? (hashcode) % NUM_FREELISTS : 0) |

Definition at line 214 of file dynahash.c.
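`FREELIST_IDX` picks one of `NUM_FREELISTS` freelists from the hash code when the table is partitioned, and always picks freelist 0 otherwise. A small sketch of that behavior follows; `FreelistDemoHeader` and `demo_freelist_idx` are hypothetical names, and the struct carries only the one field the macros read.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_FREELISTS 32

/* Hypothetical stand-in for the table header; only num_partitions is modeled. */
typedef struct
{
    long        num_partitions;
} FreelistDemoHeader;

#define IS_PARTITIONED(hctl) ((hctl)->num_partitions != 0)
#define FREELIST_IDX(hctl, hashcode) (IS_PARTITIONED(hctl) ? (hashcode) % NUM_FREELISTS : 0)

/* Hypothetical helper: evaluate FREELIST_IDX for a given partition count. */
static uint32_t
demo_freelist_idx(long num_partitions, uint32_t hashcode)
{
    FreelistDemoHeader h = {num_partitions};

    assert(num_partitions >= 0);
    return FREELIST_IDX(&h, hashcode);
}
```

For hash code 12345, an unpartitioned table yields index 0, while a partitioned one yields 12345 % 32 = 25.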

◆ IS_PARTITIONED

| #define IS_PARTITIONED | ( | hctl | ) | ((hctl)->num_partitions != 0) |

Definition at line 212 of file dynahash.c.

◆ MAX_SEQ_SCANS

| #define MAX_SEQ_SCANS 100 |

Definition at line 1875 of file dynahash.c.

◆ MOD

| #define MOD | ( | x, y | ) | ((x) & ((y)-1)) |
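`MOD(x, y)` is defined as `((x) & ((y)-1))`, which equals `x % y` only when `y` is a power of two. The sketch below makes that precondition explicit; `is_power_of_two` and `checked_mod` are hypothetical helpers added for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define MOD(x, y) ((x) & ((y)-1))

/* Hypothetical helper: true when y has exactly one bit set. */
static int
is_power_of_two(uint32_t y)
{
    return y != 0 && (y & (y - 1)) == 0;
}

/* Hypothetical helper: MOD guarded by its power-of-two precondition. */
static uint32_t
checked_mod(uint32_t x, uint32_t y)
{
    assert(is_power_of_two(y));
    return MOD(x, y);
}
```

With a divisor that is not a power of two the mask gives a different answer: `MOD(10, 6)` evaluates to `10 & 5`, which is 0, whereas `10 % 6` is 4.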

◆ NUM_FREELISTS

| #define NUM_FREELISTS 32 |

Definition at line 128 of file dynahash.c.

Typedef Documentation

◆ HASHBUCKET

| typedef HASHELEMENT * HASHBUCKET |

Definition at line 131 of file dynahash.c.

◆ HASHSEGMENT

| typedef HASHBUCKET * HASHSEGMENT |

Definition at line 134 of file dynahash.c.

Function Documentation

◆ AtEOSubXact_HashTables()

Definition at line 1957 of file dynahash.c.

References elog, fb(), i, num_seq_scans, seq_scan_level, seq_scan_tables, and WARNING.

Referenced by AbortSubTransaction(), and CommitSubTransaction().

◆ AtEOXact_HashTables()

Definition at line 1931 of file dynahash.c.

References elog, fb(), i, num_seq_scans, seq_scan_tables, and WARNING.

Referenced by AbortTransaction(), AutoVacLauncherMain(), BackgroundWriterMain(), CheckpointerMain(), CommitTransaction(), pgarch_archiveXlog(), PrepareTransaction(), WalSummarizerMain(), and WalWriterMain().

◆ calc_bucket()

Definition at line 915 of file dynahash.c.

References fb(), HASHHDR::high_mask, HASHHDR::low_mask, and HASHHDR::max_bucket.

Referenced by expand_table(), and hash_initial_lookup().
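The fields referenced by `calc_bucket()` (`high_mask`, `low_mask`, `max_bucket`) are the usual ingredients of a linear-hashing bucket computation. The following is a sketch of that general technique under the assumption that it matches what the function does; it is not copied from dynahash.c, and `DemoMasks` and `demo_calc_bucket` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in holding only the three fields the function reads. */
typedef struct
{
    uint32_t    max_bucket;     /* highest bucket number currently in use */
    uint32_t    high_mask;      /* mask for the table size after the next doubling */
    uint32_t    low_mask;       /* mask for the current lower half */
} DemoMasks;

/* Sketch of linear hashing: try the wider mask, fall back to the narrower one. */
static uint32_t
demo_calc_bucket(const DemoMasks *m, uint32_t hash_val)
{
    uint32_t    bucket;

    assert(m->low_mask <= m->high_mask);
    bucket = hash_val & m->high_mask;
    if (bucket > m->max_bucket)
        bucket = bucket & m->low_mask;  /* that bucket has not been split off yet */
    return bucket;
}
```

For example, with `max_bucket = 5`, `high_mask = 7` and `low_mask = 3`, hash value 6 maps first to bucket 6, which does not exist yet, and so falls back to bucket 2.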

◆ choose_nelem_alloc()

Definition at line 667 of file dynahash.c.

References fb(), and MAXALIGN.

Referenced by hash_estimate_size(), and init_htab().

◆ deregister_seq_scan()

Definition at line 1896 of file dynahash.c.

References elog, ERROR, i, num_seq_scans, seq_scan_level, seq_scan_tables, and HTAB::tabname.

Referenced by hash_seq_term().

◆ dir_realloc()

Definition at line 1643 of file dynahash.c.

References HTAB::alloc, Assert, CurrentDynaHashCxt, HTAB::dir, HASHHDR::dsize, DynaHashAlloc(), fb(), HTAB::hctl, HTAB::hcxt, HASHHDR::max_dsize, MemSet, NO_MAX_DSIZE, and pfree().

Referenced by expand_table().

◆ DynaHashAlloc()

Definition at line 297 of file dynahash.c.

References Assert, CurrentDynaHashCxt, MCXT_ALLOC_NO_OOM, MemoryContextAllocExtended(), and MemoryContextIsValid.

Referenced by dir_realloc(), hash_create(), and hash_destroy().

◆ element_alloc()

Definition at line 1701 of file dynahash.c.

References HTAB::alloc, CurrentDynaHashCxt, HASHHDR::entrysize, fb(), FreeListData::freeList, HASHHDR::freeList, HTAB::hctl, HTAB::hcxt, i, IS_PARTITIONED, HASHHDR::isfixed, HTAB::isshared, MAXALIGN, FreeListData::mutex, slist_push_head(), SpinLockAcquire(), and SpinLockRelease().

Referenced by get_hash_entry(), and hash_create().

◆ expand_table()

Definition at line 1546 of file dynahash.c.

References Assert, calc_bucket(), HTAB::dir, dir_realloc(), HASHHDR::dsize, fb(), HTAB::hctl, HASHHDR::high_mask, IS_PARTITIONED, HASHELEMENT::link, HASHHDR::low_mask, HASHHDR::max_bucket, MOD, HASHHDR::nsegs, seg_alloc(), HTAB::sshift, and HTAB::ssize.

Referenced by hash_search_with_hash_value().

◆ get_hash_entry()

static

Definition at line 1251 of file dynahash.c.

References element_alloc(), fb(), FreeListData::freeList, HASHHDR::freeList, HTAB::hctl, IS_PARTITIONED, HASHELEMENT::link, FreeListData::mutex, HASHHDR::nelem_alloc, FreeListData::nentries, NUM_FREELISTS, SpinLockAcquire(), and SpinLockRelease().

Referenced by hash_search_with_hash_value().

◆ get_hash_value()

Definition at line 908 of file dynahash.c.

References fb(), HTAB::hash, and HTAB::keysize.

Referenced by BufTableHashCode(), LockTagHashCode(), and lookup_type_cache().

◆ has_seq_scans()

Definition at line 1917 of file dynahash.c.

References i, num_seq_scans, and seq_scan_tables.

Referenced by hash_freeze(), and hash_search_with_hash_value().

◆ hash_corrupted()

Definition at line 1803 of file dynahash.c.

References elog, FATAL, HTAB::isshared, PANIC, and HTAB::tabname.

Referenced by hash_initial_lookup().

◆ hash_create()

Definition at line 358 of file dynahash.c.

References HTAB::alloc, HASHCTL::alloc, ALLOCSET_DEFAULT_SIZES, AllocSetContextCreate, Assert, CurrentDynaHashCxt, HTAB::dir, HASHHDR::dsize, HASHCTL::dsize, DynaHashAlloc(), element_alloc(), elog, HASHHDR::entrysize, HASHCTL::entrysize, ereport, errcode(), errmsg(), ERROR, fb(), HTAB::frozen, HTAB::hash, HASHCTL::hash, HASH_ALLOC, HASH_ATTACH, HASH_BLOBS, HASH_COMPARE, HASH_CONTEXT, HASH_DIRSIZE, HASH_ELEM, HASH_FIXED_SIZE, HASH_FUNCTION, HASH_KEYCOPY, HASH_PARTITION, HASH_SEGMENT, HASH_SHARED_MEM, HASH_STRINGS, HTAB::hctl, HASHCTL::hctl, HTAB::hcxt, HASHCTL::hcxt, hdefault(), i, init_htab(), IS_PARTITIONED, HASHHDR::isfixed, HTAB::isshared, HTAB::keycopy, HASHCTL::keycopy, HASHHDR::keysize, HTAB::keysize, HASHCTL::keysize, HTAB::match, HASHCTL::match, HASHHDR::max_dsize, HASHCTL::max_dsize, MemoryContextAlloc(), MemoryContextSetIdentifier(), MemSet, my_log2(), next_pow2_int(), NUM_FREELISTS, HASHHDR::num_partitions, HASHCTL::num_partitions, HASHHDR::sshift, HTAB::sshift, HASHHDR::ssize, HTAB::ssize, HASHCTL::ssize, string_compare(), string_hash(), strlcpy(), HTAB::tabname, tag_hash(), TopMemoryContext, and uint32_hash().

Referenced by _hash_finish_split(), _PG_init(), AddEventToPendingNotifies(), AddPendingSync(), assign_record_type_typmod(), begin_heap_rewrite(), build_colinfo_names_hash(), build_guc_variables(), build_join_rel_hash(), BuildEventTriggerCache(), cfunc_hashtable_init(), CheckForSessionAndXactLocks(), CompactCheckpointerRequestQueue(), compute_array_stats(), compute_tsvector_stats(), create_seq_hashtable(), createConnHash(), CreateLocalPredicateLockHash(), CreatePartitionDirectory(), do_autovacuum(), EnablePortalManager(), ExecInitModifyTable(), ExecuteTruncateGuts(), find_all_inheritors(), find_oper_cache_entry(), find_rendezvous_variable(), get_json_object_as_hash(), get_relation_notnullatts(), GetComboCommandId(), GetConnection(), gistInitBuildBuffers(), gistInitParentMap(), init_missing_cache(), init_procedure_caches(), init_rel_sync_cache(), init_timezone_hashtable(), init_ts_config_cache(), init_uncommitted_enum_types(), init_uncommitted_enum_values(), InitBufferManagerAccess(), InitializeAttoptCache(), InitializeRelfilenumberMap(), InitializeShippableCache(), InitializeTableSpaceCache(), InitLocalBuffers(), initLocalChannelTable(), InitLockManagerAccess(), initPendingListenActions(), InitQueryHashTable(), InitRecoveryTransactionEnvironment(), InitSync(), json_unique_check_init(), load_categories_hash(), log_invalid_page(), logical_begin_heap_rewrite(), logicalrep_partmap_init(), logicalrep_relmap_init(), lookup_proof_cache(), lookup_ts_dictionary_cache(), lookup_ts_parser_cache(), lookup_type_cache(), LookupOpclassInfo(), pa_allocate_worker(), pg_get_backend_memory_contexts(), plpgsql_estate_setup(), PLy_add_exceptions(), populate_recordset_object_start(), ProcessSyncingTablesForApply(), rebuild_database_list(), record_C_func(), RegisterExtensibleNodeEntry(), RelationCacheInitialize(), ReorderBufferAllocate(), ReorderBufferBuildTupleCidHash(), ReorderBufferToastInitHash(), ResetUnloggedRelationsInDbspaceDir(), ri_InitHashTables(), select_perl_context(), 
SerializePendingSyncs(), set_rtable_names(), ShmemInitHash(), smgropen(), transformGraph(), and XLogPrefetcherAllocate().

◆ hash_destroy()

Definition at line 865 of file dynahash.c.

References HTAB::alloc, Assert, DynaHashAlloc(), fb(), hash_stats(), HTAB::hcxt, and MemoryContextDelete().

Referenced by _hash_finish_split(), CheckForSessionAndXactLocks(), CleanupListenersOnExit(), CompactCheckpointerRequestQueue(), destroy_colinfo_names_hash(), do_autovacuum(), ExecuteTruncateGuts(), find_all_inheritors(), get_json_object_as_hash(), pg_get_backend_memory_contexts(), pgoutput_memory_context_reset(), populate_recordset_object_end(), PostPrepare_PredicateLocks(), ProcessSyncingTablesForApply(), ReleasePredicateLocksLocal(), ReorderBufferFreeTXN(), ReorderBufferToastReset(), ReorderBufferTruncateTXN(), ResetSequenceCaches(), ResetUnloggedRelationsInDbspaceDir(), SerializePendingSyncs(), set_rtable_names(), ShutdownRecoveryTransactionEnvironment(), XLogCheckInvalidPages(), and XLogPrefetcherFree().

◆ hash_estimate_size()

Definition at line 783 of file dynahash.c.

References add_size(), choose_nelem_alloc(), DEF_DIRSIZE, DEF_SEGSIZE, fb(), MAXALIGN, mul_size(), and next_pow2_int64().

Referenced by BufTableShmemSize(), CalculateShmemSize(), LockManagerShmemSize(), pgss_memsize(), PredicateLockShmemSize(), and WaitEventCustomShmemSize().

◆ hash_freeze()

Definition at line 1529 of file dynahash.c.

References elog, ERROR, HTAB::frozen, has_seq_scans(), HTAB::isshared, and HTAB::tabname.

◆ hash_get_num_entries()

Definition at line 1336 of file dynahash.c.

References HASHHDR::freeList, HTAB::hctl, i, IS_PARTITIONED, FreeListData::nentries, and NUM_FREELISTS.

Referenced by build_guc_variables(), compute_array_stats(), compute_tsvector_stats(), entry_alloc(), entry_dealloc(), entry_reset(), EstimatePendingSyncsSpace(), EstimateUncommittedEnumsSpace(), get_crosstab_tuplestore(), get_explain_guc_options(), get_guc_variables(), GetLockStatusData(), GetPredicateLockStatusData(), GetRunningTransactionLocks(), GetWaitEventCustomNames(), hash_stats(), pgss_shmem_shutdown(), ResetUnloggedRelationsInDbspaceDir(), SerializePendingSyncs(), transformGraph(), and XLogHaveInvalidPages().
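The references above (`freeList`, `nentries`, `IS_PARTITIONED`, `NUM_FREELISTS`) suggest that the entry count is kept per freelist and summed on demand. The sketch below shows one way such a count could be computed, assuming an unpartitioned table uses only freelist 0; `CountDemoFreeList`, `CountDemoHeader` and `demo_num_entries` are hypothetical, and no locking is modeled.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_FREELISTS 32

/* Hypothetical stand-ins; only the entry counters and partition count are modeled. */
typedef struct
{
    long        nentries;
} CountDemoFreeList;

typedef struct
{
    CountDemoFreeList freeList[NUM_FREELISTS];
    long        num_partitions;
} CountDemoHeader;

#define IS_PARTITIONED(hctl) ((hctl)->num_partitions != 0)

/* Sketch: sum the per-freelist counters, using only slot 0 if unpartitioned. */
static long
demo_num_entries(const CountDemoHeader *hctl)
{
    long        sum;
    int         i;

    assert(hctl != NULL);
    sum = hctl->freeList[0].nentries;
    if (IS_PARTITIONED(hctl))
    {
        for (i = 1; i < NUM_FREELISTS; i++)
            sum += hctl->freeList[i].nentries;
    }
    return sum;
}
```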

◆ hash_get_shared_size()

Definition at line 854 of file dynahash.c.

References Assert, HASHCTL::dsize, HASH_DIRSIZE, and HASHCTL::max_dsize.

Referenced by ShmemInitHash().

◆ hash_initial_lookup()

inline static

Definition at line 1779 of file dynahash.c.

References calc_bucket(), HTAB::dir, fb(), hash_corrupted(), HTAB::hctl, MOD, HTAB::sshift, and HTAB::ssize.

Referenced by hash_search_with_hash_value(), hash_seq_init_with_hash_value(), and hash_update_hash_key().

◆ hash_search()

Definition at line 952 of file dynahash.c.

References fb(), HTAB::hash, hash_search_with_hash_value(), and HTAB::keysize.

Referenced by _hash_finish_split(), _hash_splitbucket(), add_guc_variable(), add_join_rel(), add_to_names_hash(), AddEnumLabel(), AddEventToPendingNotifies(), AddPendingSync(), ApplyLogicalMappingFile(), ApplyPendingListenActions(), assign_record_type_typmod(), AsyncExistsPendingNotify(), AtEOSubXact_RelationCache(), AtEOXact_RelationCache(), build_guc_variables(), build_join_rel_hash(), BuildEventTriggerCache(), cfunc_hashtable_delete(), cfunc_hashtable_insert(), cfunc_hashtable_lookup(), CheckAndPromotePredicateLockRequest(), CheckForSerializableConflictOut(), CheckForSessionAndXactLocks(), colname_is_unique(), CompactCheckpointerRequestQueue(), compile_plperl_function(), compile_pltcl_function(), compute_array_stats(), compute_tsvector_stats(), createNewConnection(), define_custom_variable(), delete_rel_type_cache_if_needed(), deleteConnection(), do_autovacuum(), DropAllPredicateLocksFromTable(), DropAllPreparedStatements(), DropPreparedStatement(), entry_alloc(), entry_dealloc(), entry_reset(), EnumTypeUncommitted(), EnumUncommitted(), EnumValuesCreate(), EventCacheLookup(), ExecInitModifyTable(), ExecLookupResultRelByOid(), ExecuteTruncateGuts(), ExtendBufferedRelLocal(), FetchPreparedStatement(), finalize_in_progress_typentries(), find_all_inheritors(), find_join_rel(), find_oper_cache_entry(), find_option(), find_relation_notnullatts(), find_rendezvous_variable(), forget_invalid_pages(), forget_invalid_pages_db(), ForgetPrivateRefCountEntry(), get_attribute_options(), get_cast_hashentry(), get_rel_sync_entry(), get_relation_notnullatts(), get_tablespace(), GetComboCommandId(), GetConnection(), getConnectionByName(), GetExtensibleNodeEntry(), getmissingattr(), GetPrivateRefCountEntrySlow(), getState(), GetWaitEventCustomIdentifier(), gistGetNodeBuffer(), gistGetParent(), gistMemorizeParent(), gistRelocateBuildBuffersOnSplit(), hash_object_field_end(), init_sequence(), insert_rel_type_cache_if_needed(), InvalidateAttoptCacheCallback(), InvalidateLocalBuffer(), 
InvalidateOprCacheCallBack(), InvalidateShippableCacheCallback(), InvalidateTableSpaceCacheCallback(), is_shippable(), IsListeningOn(), JsObjectGetField(), json_unique_check_key(), LocalBufferAlloc(), LockAcquireExtended(), LockHasWaiters(), LockHeldByMe(), LockRelease(), log_invalid_page(), logical_rewrite_log_mapping(), logicalrep_partition_open(), logicalrep_rel_open(), logicalrep_relmap_update(), lookup_C_func(), lookup_proof_cache(), lookup_ts_config_cache(), lookup_ts_dictionary_cache(), lookup_ts_parser_cache(), lookup_type_cache(), LookupOpclassInfo(), make_oper_cache_entry(), MarkGUCPrefixReserved(), pa_allocate_worker(), pa_find_worker(), pa_free_worker(), PartitionDirectoryLookup(), pg_get_backend_memory_contexts(), pg_tzset(), pgss_store(), plperl_spi_exec_prepared(), plperl_spi_freeplan(), plperl_spi_prepare(), plperl_spi_query_prepared(), pltcl_fetch_interp(), PLy_commit(), PLy_generate_spi_exceptions(), PLy_procedure_get(), PLy_rollback(), PLy_spi_subtransaction_abort(), populate_recordset_object_field_end(), predicatelock_twophase_recover(), PredicateLockExists(), PredicateLockShmemInit(), PredicateLockTwoPhaseFinish(), PrefetchLocalBuffer(), PrepareTableEntriesForListen(), PrepareTableEntriesForUnlisten(), PrepareTableEntriesForUnlistenAll(), ProcessSyncingTablesForApply(), ProcessSyncRequests(), prune_element_hashtable(), prune_lexemes_hashtable(), PutMemoryContextsStatsTupleStore(), rebuild_database_list(), record_C_func(), RegisterExtensibleNodeEntry(), RegisterPredicateLockingXid(), rel_sync_cache_relation_cb(), RelationPreTruncate(), ReleaseOneSerializableXact(), RelFileLocatorSkippingWAL(), RelfilenumberMapInvalidateCallback(), RelidByRelfilenumber(), RememberSyncRequest(), RemoveLocalLock(), ReorderBufferBuildTupleCidHash(), ReorderBufferCleanupTXN(), ReorderBufferToastAppendChunk(), ReorderBufferToastReplace(), ReorderBufferTXNByXid(), ReservePrivateRefCountEntry(), ResetUnloggedRelationsInDbspaceDir(), ResolveCminCmaxDuringDecoding(), 
RestoreUncommittedEnums(), rewrite_heap_dead_tuple(), rewrite_heap_tuple(), ri_FetchPreparedPlan(), ri_HashCompareOp(), ri_HashPreparedPlan(), ri_LoadConstraintInfo(), select_perl_context(), SerializePendingSyncs(), set_rtable_names(), ShmemInitStruct(), smgrdestroy(), smgrDoPendingSyncs(), smgropen(), smgrreleaserellocator(), StandbyAcquireAccessExclusiveLock(), StandbyReleaseAllLocks(), StandbyReleaseLocks(), StandbyReleaseOldLocks(), StandbyReleaseXidEntryLocks(), StorePreparedStatement(), table_recheck_autovac(), TypeCacheRelCallback(), WaitEventCustomNew(), XLogPrefetcherAddFilter(), XLogPrefetcherCompleteFilters(), and XLogPrefetcherIsFiltered().

◆ hash_search_with_hash_value()

| void * hash_search_with_hash_value | ( | HTAB * | hashp, |

| const void * | keyPtr, | ||

| uint32 | hashvalue, | ||

| HASHACTION | action, | ||

| bool * | foundPtr | ||

| ) |

Definition at line 965 of file dynahash.c.

References Assert, ELEMENTKEY, elog, ereport, errcode(), errmsg(), ERROR, expand_table(), fb(), FreeListData::freeList, HASHHDR::freeList, FREELIST_IDX, HTAB::frozen, get_hash_entry(), has_seq_scans(), HASH_ENTER, HASH_ENTER_NULL, HASH_FIND, hash_initial_lookup(), HASH_REMOVE, HTAB::hctl, IS_PARTITIONED, HTAB::isshared, HTAB::keycopy, HTAB::keysize, HTAB::match, HASHHDR::max_bucket, FreeListData::mutex, FreeListData::nentries, SpinLockAcquire(), SpinLockRelease(), and HTAB::tabname.

Referenced by BufTableDelete(), BufTableInsert(), BufTableLookup(), CheckTargetForConflictsIn(), CleanUpLock(), ClearOldPredicateLocks(), CreatePredicateLock(), DecrementParentLocks(), DeleteChildTargetLocks(), DeleteLockTarget(), DropAllPredicateLocksFromTable(), FastPathGetRelationLockEntry(), GetLockConflicts(), hash_search(), lock_twophase_recover(), LockAcquireExtended(), LockRefindAndRelease(), LockRelease(), LockWaiterCount(), PageIsPredicateLocked(), PredicateLockAcquire(), ReleaseOneSerializableXact(), RemoveScratchTarget(), RemoveTargetIfNoLongerUsed(), RestoreScratchTarget(), SetupLockInTable(), and TransferPredicateLocksToNewTarget().

◆ hash_select_dirsize()

Definition at line 830 of file dynahash.c.

References DEF_DIRSIZE, DEF_SEGSIZE, fb(), and next_pow2_int64().

Referenced by ShmemInitHash().

◆ hash_seq_init()

| void hash_seq_init | ( | HASH_SEQ_STATUS * | status, |

| HTAB * | hashp | ||

| ) |

Definition at line 1380 of file dynahash.c.

References HASH_SEQ_STATUS::curBucket, HASH_SEQ_STATUS::curEntry, fb(), HTAB::frozen, HASH_SEQ_STATUS::hasHashvalue, HASH_SEQ_STATUS::hashp, and register_seq_scan().

Referenced by ApplyPendingListenActions(), AtAbort_Portals(), AtCleanup_Portals(), AtEOSubXact_RelationCache(), AtEOXact_RelationCache(), AtPrepare_Locks(), AtSubAbort_Portals(), AtSubCleanup_Portals(), AtSubCommit_Portals(), BeginReportingGUCOptions(), CheckForBufferLeaks(), CheckForSessionAndXactLocks(), CheckTableForSerializableConflictIn(), cleanup_rel_sync_cache(), compute_array_stats(), compute_tsvector_stats(), dblink_get_connections(), DestroyPartitionDirectory(), disconnect_cached_connections(), DropAllPredicateLocksFromTable(), DropAllPreparedStatements(), end_heap_rewrite(), entry_dealloc(), entry_reset(), ExecuteTruncateGuts(), forget_invalid_pages(), forget_invalid_pages_db(), ForgetPortalSnapshots(), gc_qtexts(), get_guc_variables(), GetLockStatusData(), GetPredicateLockStatusData(), GetRunningTransactionLocks(), GetWaitEventCustomNames(), hash_seq_init_with_hash_value(), HoldPinnedPortals(), InitializeGUCOptions(), InvalidateAttoptCacheCallback(), InvalidateOprCacheCallBack(), InvalidateOprProofCacheCallBack(), InvalidateShippableCacheCallback(), InvalidateTableSpaceCacheCallback(), InvalidateTSCacheCallBack(), LockReassignCurrentOwner(), LockReleaseAll(), LockReleaseCurrentOwner(), LockReleaseSession(), logical_end_heap_rewrite(), logical_heap_rewrite_flush_mappings(), logicalrep_partmap_invalidate_cb(), logicalrep_partmap_reset_relmap(), logicalrep_relmap_invalidate_cb(), MarkGUCPrefixReserved(), packGraph(), pg_cursor(), pg_get_shmem_allocations(), pg_get_shmem_allocations_numa(), pg_listening_channels(), pg_prepared_statement(), pg_stat_statements_internal(), pgfdw_inval_callback(), pgfdw_subxact_callback(), pgfdw_xact_callback(), pgss_shmem_shutdown(), plperl_fini(), PortalErrorCleanup(), PortalHashTableDeleteAll(), postgres_fdw_get_connections_internal(), PostPrepare_Locks(), PreCommit_Notify(), PreCommit_Portals(), PrepareTableEntriesForUnlistenAll(), ProcessConfigFileInternal(), ProcessSyncRequests(), prune_element_hashtable(), 
prune_lexemes_hashtable(), rebuild_database_list(), rel_sync_cache_publication_cb(), rel_sync_cache_relation_cb(), RelationCacheInitializePhase3(), RelationCacheInvalidate(), RelfilenumberMapInvalidateCallback(), RememberSyncRequest(), ReorderBufferToastReset(), selectColorTrigrams(), SerializePendingSyncs(), SerializeUncommittedEnums(), smgrDoPendingSyncs(), smgrreleaseall(), StandbyReleaseAllLocks(), StandbyReleaseOldLocks(), ThereAreNoReadyPortals(), TypeCacheOpcCallback(), TypeCacheRelCallback(), TypeCacheTypCallback(), write_relcache_init_file(), and XLogCheckInvalidPages().

◆ hash_seq_init_with_hash_value()

| void hash_seq_init_with_hash_value | ( | HASH_SEQ_STATUS * | status, |

| HTAB * | hashp, | ||

| uint32 | hashvalue | ||

| ) |

Definition at line 1400 of file dynahash.c.

References HASH_SEQ_STATUS::curBucket, HASH_SEQ_STATUS::curEntry, fb(), hash_initial_lookup(), hash_seq_init(), HASH_SEQ_STATUS::hasHashvalue, and HASH_SEQ_STATUS::hashvalue.

Referenced by InvalidateAttoptCacheCallback(), and TypeCacheTypCallback().

◆ hash_seq_search()

| void * hash_seq_search | ( | HASH_SEQ_STATUS * | status | ) |

Definition at line 1415 of file dynahash.c.

References HASH_SEQ_STATUS::curBucket, HASH_SEQ_STATUS::curEntry, HTAB::dir, ELEMENTKEY, fb(), hash_seq_term(), HASH_SEQ_STATUS::hasHashvalue, HASH_SEQ_STATUS::hashp, HASH_SEQ_STATUS::hashvalue, HTAB::hctl, HASHELEMENT::link, HASHHDR::max_bucket, MOD, HTAB::sshift, and HTAB::ssize.

Referenced by ApplyPendingListenActions(), AtAbort_Portals(), AtCleanup_Portals(), AtEOSubXact_RelationCache(), AtEOXact_RelationCache(), AtPrepare_Locks(), AtSubAbort_Portals(), AtSubCleanup_Portals(), AtSubCommit_Portals(), BeginReportingGUCOptions(), CheckForBufferLeaks(), CheckForSessionAndXactLocks(), CheckTableForSerializableConflictIn(), cleanup_rel_sync_cache(), compute_array_stats(), compute_tsvector_stats(), dblink_get_connections(), DestroyPartitionDirectory(), disconnect_cached_connections(), DropAllPredicateLocksFromTable(), DropAllPreparedStatements(), end_heap_rewrite(), entry_dealloc(), entry_reset(), ExecuteTruncateGuts(), forget_invalid_pages(), forget_invalid_pages_db(), ForgetPortalSnapshots(), gc_qtexts(), get_guc_variables(), GetLockStatusData(), GetPredicateLockStatusData(), GetRunningTransactionLocks(), GetWaitEventCustomNames(), HoldPinnedPortals(), InitializeGUCOptions(), InvalidateAttoptCacheCallback(), InvalidateOprCacheCallBack(), InvalidateOprProofCacheCallBack(), InvalidateShippableCacheCallback(), InvalidateTableSpaceCacheCallback(), InvalidateTSCacheCallBack(), LockReassignCurrentOwner(), LockReleaseAll(), LockReleaseCurrentOwner(), LockReleaseSession(), logical_end_heap_rewrite(), logical_heap_rewrite_flush_mappings(), logicalrep_partmap_invalidate_cb(), logicalrep_partmap_reset_relmap(), logicalrep_relmap_invalidate_cb(), MarkGUCPrefixReserved(), packGraph(), pg_cursor(), pg_get_shmem_allocations(), pg_get_shmem_allocations_numa(), pg_listening_channels(), pg_prepared_statement(), pg_stat_statements_internal(), pgfdw_inval_callback(), pgfdw_subxact_callback(), pgfdw_xact_callback(), pgss_shmem_shutdown(), plperl_fini(), PortalErrorCleanup(), PortalHashTableDeleteAll(), postgres_fdw_get_connections_internal(), PostPrepare_Locks(), PreCommit_Notify(), PreCommit_Portals(), PrepareTableEntriesForUnlistenAll(), ProcessConfigFileInternal(), ProcessSyncRequests(), prune_element_hashtable(), prune_lexemes_hashtable(), 
rebuild_database_list(), rel_sync_cache_publication_cb(), rel_sync_cache_relation_cb(), RelationCacheInitializePhase3(), RelationCacheInvalidate(), RelfilenumberMapInvalidateCallback(), RememberSyncRequest(), ReorderBufferToastReset(), selectColorTrigrams(), SerializePendingSyncs(), SerializeUncommittedEnums(), smgrDoPendingSyncs(), smgrreleaseall(), StandbyReleaseAllLocks(), StandbyReleaseOldLocks(), ThereAreNoReadyPortals(), TypeCacheOpcCallback(), TypeCacheRelCallback(), TypeCacheTypCallback(), write_relcache_init_file(), and XLogCheckInvalidPages().

◆ hash_seq_term()

| void hash_seq_term | ( | HASH_SEQ_STATUS * | status | ) |

Definition at line 1509 of file dynahash.c.

References deregister_seq_scan(), HTAB::frozen, and HASH_SEQ_STATUS::hashp.

Referenced by gc_qtexts(), hash_seq_search(), logicalrep_partmap_invalidate_cb(), logicalrep_relmap_invalidate_cb(), pgss_shmem_shutdown(), PortalHashTableDeleteAll(), PreCommit_Portals(), and RelationCacheInitializePhase3().

◆ hash_stats()

Definition at line 884 of file dynahash.c.

References DEBUG4, elog, fb(), hash_get_num_entries(), HTAB::hctl, INT64_FORMAT, HASHHDR::keysize, HASHHDR::max_bucket, HASHHDR::nsegs, HTAB::tabname, and UINT64_FORMAT.

Referenced by hash_destroy().

◆ hash_update_hash_key()

Definition at line 1140 of file dynahash.c.

References ELEMENT_FROM_KEY, ELEMENTKEY, elog, ERROR, fb(), HTAB::frozen, HTAB::hash, hash_initial_lookup(), HTAB::hctl, HTAB::keycopy, HTAB::keysize, HTAB::match, and HTAB::tabname.

Referenced by PostPrepare_Locks().

◆ hdefault()

Definition at line 638 of file dynahash.c.

References DEF_DIRSIZE, DEF_SEGSIZE, DEF_SEGSIZE_SHIFT, HASHHDR::dsize, HTAB::hctl, HASHHDR::isfixed, HASHHDR::max_dsize, MemSet, NO_MAX_DSIZE, HASHHDR::nsegs, HASHHDR::num_partitions, HASHHDR::sshift, and HASHHDR::ssize.

Referenced by hash_create().

◆ init_htab()

Definition at line 700 of file dynahash.c.

References HTAB::alloc, choose_nelem_alloc(), CurrentDynaHashCxt, HTAB::dir, HASHHDR::dsize, HASHHDR::entrysize, fb(), HASHHDR::freeList, HTAB::hctl, HTAB::hcxt, HASHHDR::high_mask, i, IS_PARTITIONED, HASHHDR::low_mask, HASHHDR::max_bucket, FreeListData::mutex, HASHHDR::nelem_alloc, next_pow2_int(), HASHHDR::nsegs, NUM_FREELISTS, seg_alloc(), SpinLockInit(), and HASHHDR::ssize.

Referenced by hash_create().

◆ my_log2()

Definition at line 1817 of file dynahash.c.

References pg_ceil_log2_64(), and PG_INT64_MAX.

Referenced by hash_create(), next_pow2_int(), and next_pow2_int64().
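`my_log2()` is referenced by both `next_pow2` helpers, which points to a ceiling-log2 computation followed by a shift. The sketch below shows that general idea with a plain loop rather than `pg_ceil_log2_64()`; `demo_ceil_log2` and `demo_next_pow2` are hypothetical names, and the overflow clamping the real functions may perform is not modeled.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in: smallest i such that 2^i >= num, for num >= 1. */
static int
demo_ceil_log2(uint64_t num)
{
    int         i = 0;
    uint64_t    limit = 1;

    assert(num >= 1);
    while (limit < num && i < 63)
    {
        limit <<= 1;
        i++;
    }
    return i;
}

/* Hypothetical stand-in: round num up to the next power of two. */
static uint64_t
demo_next_pow2(uint64_t num)
{
    return (uint64_t) 1 << demo_ceil_log2(num);
}
```

Rounding sizes up to a power of two is what lets the table use mask-based arithmetic such as `MOD` instead of division.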

◆ next_pow2_int()

Definition at line 1839 of file dynahash.c.

References fb(), and my_log2().

Referenced by hash_create(), and init_htab().

◆ next_pow2_int64()

Definition at line 1831 of file dynahash.c.

References my_log2().

Referenced by hash_estimate_size(), and hash_select_dirsize().

◆ register_seq_scan()

Definition at line 1884 of file dynahash.c.

References elog, ERROR, GetCurrentTransactionNestLevel(), MAX_SEQ_SCANS, num_seq_scans, seq_scan_level, seq_scan_tables, and HTAB::tabname.

Referenced by hash_seq_init().

◆ seg_alloc()

static

Definition at line 1682 of file dynahash.c.

References HTAB::alloc, CurrentDynaHashCxt, fb(), HTAB::hcxt, MemSet, and HTAB::ssize.

Referenced by expand_table(), and init_htab().

◆ string_compare()

Variable Documentation

◆ CurrentDynaHashCxt

| static MemoryContext CurrentDynaHashCxt = NULL |

Definition at line 294 of file dynahash.c.

Referenced by dir_realloc(), DynaHashAlloc(), element_alloc(), hash_create(), init_htab(), and seg_alloc().

◆ num_seq_scans

| static int num_seq_scans = 0 |

Definition at line 1879 of file dynahash.c.

Referenced by AtEOSubXact_HashTables(), AtEOXact_HashTables(), deregister_seq_scan(), has_seq_scans(), and register_seq_scan().

◆ seq_scan_level

| static int seq_scan_level [MAX_SEQ_SCANS] |

Definition at line 1878 of file dynahash.c.

Referenced by AtEOSubXact_HashTables(), deregister_seq_scan(), and register_seq_scan().

◆ seq_scan_tables

| static HTAB * seq_scan_tables [MAX_SEQ_SCANS] |

Definition at line 1877 of file dynahash.c.

Referenced by AtEOSubXact_HashTables(), AtEOXact_HashTables(), deregister_seq_scan(), has_seq_scans(), and register_seq_scan().