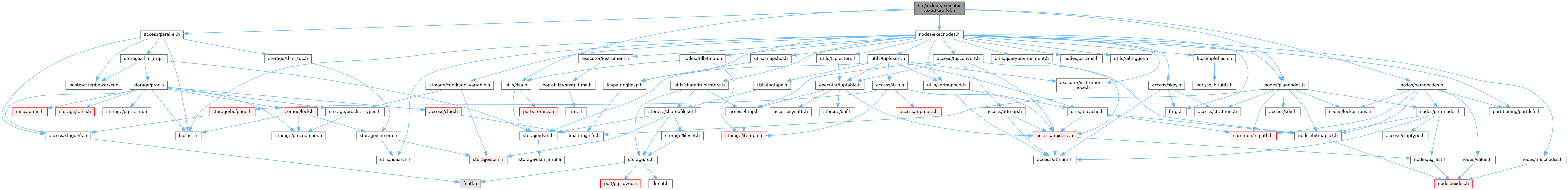

#include "access/parallel.h"
#include "nodes/execnodes.h"
#include "nodes/parsenodes.h"
#include "nodes/plannodes.h"
#include "utils/dsa.h"


Data Structures

| Kind | Name |
| --- | --- |
| struct | ParallelExecutorInfo |

Typedefs

| Declaration | Name |
| --- | --- |
| typedef struct SharedExecutorInstrumentation | SharedExecutorInstrumentation |
| typedef struct ParallelExecutorInfo | ParallelExecutorInfo |

Functions

| Return type | Function |
| --- | --- |
| ParallelExecutorInfo * | ExecInitParallelPlan (PlanState *planstate, EState *estate, Bitmapset *sendParams, int nworkers, int64 tuples_needed) |
| void | ExecParallelCreateReaders (ParallelExecutorInfo *pei) |
| void | ExecParallelFinish (ParallelExecutorInfo *pei) |
| void | ExecParallelCleanup (ParallelExecutorInfo *pei) |
| void | ExecParallelReinitialize (PlanState *planstate, ParallelExecutorInfo *pei, Bitmapset *sendParams) |
| void | ParallelQueryMain (dsm_segment *seg, shm_toc *toc) |
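The "Referenced by" entries on this page suggest the order in which a Gather or Gather Merge node drives these functions. The following is a minimal toy model of that order, not PostgreSQL code. `ToyPei`, `toy_record`, `toy_gather_start`, `toy_shutdown_workers`, `toy_shutdown`, and `toy_run_scenario` are hypothetical names introduced for illustration. It also assumes that a rescan shuts down the workers before the next scan starts, which this page does not state. The model only records which of the real functions would be called.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Toy stand-in for ParallelExecutorInfo; NOT the real PostgreSQL struct. */
typedef struct ToyPei
{
    bool initialized;   /* ExecInitParallelPlan would have run */
    bool finished;      /* workers have already been waited for */
    int  nreaders;      /* tuple queue readers currently open */
} ToyPei;

static char toy_log[256];

/* Append a function name to the comma-separated call log. */
static void
toy_record(const char *name)
{
    size_t used = strlen(toy_log);

    snprintf(toy_log + used, sizeof(toy_log) - used, "%s%s",
             used > 0 ? "," : "", name);
}

/* Models the start of a scan in ExecGather() / ExecGatherMerge(). */
static void
toy_gather_start(ToyPei *pei, int nworkers_launched)
{
    if (!pei->initialized)
    {
        toy_record("ExecInitParallelPlan");
        pei->initialized = true;
    }
    else
        toy_record("ExecParallelReinitialize");
    pei->finished = false;

    if (nworkers_launched > 0)
    {
        assert(pei->nreaders == 0);     /* readers must not exist yet */
        toy_record("ExecParallelCreateReaders");
        pei->nreaders = nworkers_launched;
    }
}

/* Models ExecShutdownGatherWorkers() / ExecShutdownGatherMergeWorkers(). */
static void
toy_shutdown_workers(ToyPei *pei)
{
    if (!pei->initialized || pei->finished)
        return;                         /* nothing left to wait for */
    toy_record("ExecParallelFinish");
    pei->nreaders = 0;
    pei->finished = true;
}

/* Models ExecShutdownGather() / ExecShutdownGatherMerge(). */
static void
toy_shutdown(ToyPei *pei)
{
    toy_shutdown_workers(pei);
    if (pei->initialized)
    {
        toy_record("ExecParallelCleanup");
        pei->initialized = false;
    }
}

/* One scan, an assumed rescan, a second scan, then final shutdown. */
const char *
toy_run_scenario(void)
{
    ToyPei pei = {false, false, 0};

    toy_log[0] = '\0';
    toy_gather_start(&pei, 2);
    toy_shutdown_workers(&pei);         /* assumed rescan behaviour */
    toy_gather_start(&pei, 2);
    toy_shutdown(&pei);
    return toy_log;
}

#ifdef TOY_DEMO_MAIN
int
main(void)
{
    puts(toy_run_scenario());
    return 0;
}
#endif
```

Compiling with `-DTOY_DEMO_MAIN` prints the recorded call order. The point of the sketch is that the second scan reuses the existing setup through ExecParallelReinitialize() rather than calling ExecInitParallelPlan() again, and that ExecParallelCleanup() runs only once, after the final ExecParallelFinish().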

Typedef Documentation

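◆ ParallelExecutorInfo

typedef struct ParallelExecutorInfo ParallelExecutorInfo

◆ SharedExecutorInstrumentation

typedef struct SharedExecutorInstrumentation SharedExecutorInstrumentation

Definition at line 22 of file execParallel.h.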

Function Documentation

◆ ExecInitParallelPlan()

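extern ParallelExecutorInfo * ExecInitParallelPlan (PlanState *planstate, EState *estate, Bitmapset *sendParams, int nworkers, int64 tuples_needed)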

Definition at line 612 of file execParallel.c.

References ParallelExecutorInfo::area, Assert, bms_is_empty, ParallelExecutorInfo::buffer_usage, CreateParallelContext(), dsa_create_in_place, dsa_minimum_size(), elog, ERROR, EState::es_instrument, EState::es_jit_flags, EState::es_param_list_info, EState::es_query_dsa, EState::es_snapshot, EState::es_sourceText, EState::es_top_eflags, EstimateParamListSpace(), ParallelContext::estimator, ExecParallelEstimate(), ExecParallelInitializeDSM(), ExecParallelSetupTupleQueues(), ExecSerializePlan(), ExecSetParamPlanMulti(), fb(), ParallelExecutorInfo::finished, GetActiveSnapshot(), GetInstrumentationArray, GetPerTupleExprContext, i, InitializeParallelDSM(), InstrInit(), SharedExecutorInstrumentation::instrument_offset, SharedExecutorInstrumentation::instrument_options, ExecParallelInitializeDSMContext::instrumentation, ParallelExecutorInfo::instrumentation, InvalidDsaPointer, SharedJitInstrumentation::jit_instr, ParallelExecutorInfo::jit_instrumentation, MAXALIGN, mul_size(), ExecParallelInitializeDSMContext::nnodes, SharedExecutorInstrumentation::num_plan_nodes, SharedExecutorInstrumentation::num_workers, SharedJitInstrumentation::num_workers, ParallelContext::nworkers, palloc0_object, PARALLEL_KEY_BUFFER_USAGE, PARALLEL_KEY_DSA, PARALLEL_KEY_EXECUTOR_FIXED, PARALLEL_KEY_INSTRUMENTATION, PARALLEL_KEY_JIT_INSTRUMENTATION, PARALLEL_KEY_PARAMLISTINFO, PARALLEL_KEY_PLANNEDSTMT, PARALLEL_KEY_QUERY_TEXT, PARALLEL_KEY_WAL_USAGE, PARALLEL_TUPLE_QUEUE_SIZE, ParallelExecutorInfo::param_exec, ExecParallelInitializeDSMContext::pcxt, ParallelExecutorInfo::pcxt, PGJIT_NONE, PlanState::plan, ParallelExecutorInfo::planstate, ParallelExecutorInfo::reader, ParallelContext::seg, SerializeParamExecParams(), SerializeParamList(), shm_toc_allocate(), shm_toc_estimate_chunk, shm_toc_estimate_keys, shm_toc_insert(), ParallelContext::toc, ParallelExecutorInfo::tqueue, and ParallelExecutorInfo::wal_usage.

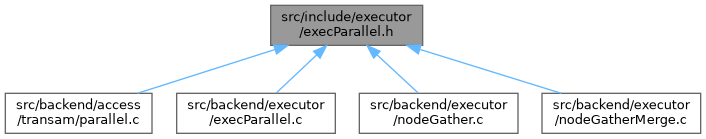

Referenced by ExecGather(), and ExecGatherMerge().

◆ ExecParallelCleanup()

extern void ExecParallelCleanup (ParallelExecutorInfo *pei)

Definition at line 1227 of file execParallel.c.

References ParallelExecutorInfo::area, DestroyParallelContext(), dsa_detach(), dsa_free(), DsaPointerIsValid, ExecParallelRetrieveInstrumentation(), ExecParallelRetrieveJitInstrumentation(), fb(), ParallelExecutorInfo::instrumentation, InvalidDsaPointer, ParallelExecutorInfo::jit_instrumentation, ParallelExecutorInfo::param_exec, ParallelExecutorInfo::pcxt, pfree(), and ParallelExecutorInfo::planstate.

Referenced by ExecShutdownGather(), and ExecShutdownGatherMerge().

◆ ExecParallelCreateReaders()

extern void ExecParallelCreateReaders (ParallelExecutorInfo *pei)

Definition at line 903 of file execParallel.c.

References Assert, ParallelWorkerInfo::bgwhandle, CreateTupleQueueReader(), fb(), i, ParallelContext::nworkers_launched, palloc(), ParallelExecutorInfo::pcxt, ParallelExecutorInfo::reader, shm_mq_set_handle(), ParallelExecutorInfo::tqueue, and ParallelContext::worker.

Referenced by ExecGather(), and ExecGatherMerge().

◆ ExecParallelFinish()

extern void ExecParallelFinish (ParallelExecutorInfo *pei)

Definition at line 1174 of file execParallel.c.

References ParallelExecutorInfo::buffer_usage, DestroyTupleQueueReader(), fb(), ParallelExecutorInfo::finished, i, InstrAccumParallelQuery(), ParallelContext::nworkers_launched, ParallelExecutorInfo::pcxt, pfree(), ParallelExecutorInfo::reader, shm_mq_detach(), ParallelExecutorInfo::tqueue, WaitForParallelWorkersToFinish(), and ParallelExecutorInfo::wal_usage.

Referenced by ExecShutdownGatherMergeWorkers(), and ExecShutdownGatherWorkers().

◆ ExecParallelReinitialize()

extern void ExecParallelReinitialize (PlanState *planstate, ParallelExecutorInfo *pei, Bitmapset *sendParams)

Definition at line 929 of file execParallel.c.

References ParallelExecutorInfo::area, Assert, bms_is_empty, dsa_free(), DsaPointerIsValid, EState::es_query_dsa, ExecParallelReInitializeDSM(), ExecParallelSetupTupleQueues(), ExecSetParamPlanMulti(), fb(), ParallelExecutorInfo::finished, GetPerTupleExprContext, InvalidDsaPointer, PARALLEL_KEY_EXECUTOR_FIXED, ParallelExecutorInfo::param_exec, ParallelExecutorInfo::pcxt, ParallelExecutorInfo::reader, ReinitializeParallelDSM(), SerializeParamExecParams(), shm_toc_lookup(), PlanState::state, ParallelContext::toc, and ParallelExecutorInfo::tqueue.

Referenced by ExecGather(), and ExecGatherMerge().

◆ ParallelQueryMain()

extern void ParallelQueryMain (dsm_segment *seg, shm_toc *toc)

Definition at line 1452 of file execParallel.c.

References Assert, debug_query_string, dsa_attach_in_place(), dsa_detach(), dsa_get_address(), DsaPointerIsValid, EState::es_jit, EState::es_query_dsa, QueryDesc::estate, ExecParallelGetQueryDesc(), ExecParallelGetReceiver(), ExecParallelInitializeWorker(), ExecParallelReportInstrumentation(), ExecSetTupleBound(), ExecutorEnd(), ExecutorFinish(), ExecutorRun(), ExecutorStart(), fb(), ForwardScanDirection, FreeQueryDesc(), JitContext::instr, InstrEndParallelQuery(), InstrStartParallelQuery(), SharedExecutorInstrumentation::instrument_options, SharedJitInstrumentation::jit_instr, PlannedStmt::jitFlags, PARALLEL_KEY_BUFFER_USAGE, PARALLEL_KEY_DSA, PARALLEL_KEY_EXECUTOR_FIXED, PARALLEL_KEY_INSTRUMENTATION, PARALLEL_KEY_JIT_INSTRUMENTATION, PARALLEL_KEY_WAL_USAGE, ParallelWorkerNumber, pgstat_report_activity(), QueryDesc::plannedstmt, QueryDesc::planstate, RestoreParamExecParams(), shm_toc_lookup(), QueryDesc::sourceText, PlanState::state, and STATE_RUNNING.