#include "postgres.h"
#include <math.h>
#include "access/htup_details.h"
#include "catalog/pg_type.h"
#include "miscadmin.h"
#include "nodes/makefuncs.h"
#include "nodes/nodeFuncs.h"
#include "optimizer/cost.h"
#include "optimizer/pathnode.h"
#include "optimizer/paths.h"
#include "optimizer/planner.h"
#include "optimizer/prep.h"
#include "optimizer/tlist.h"
#include "parser/parse_coerce.h"
#include "utils/selfuncs.h"

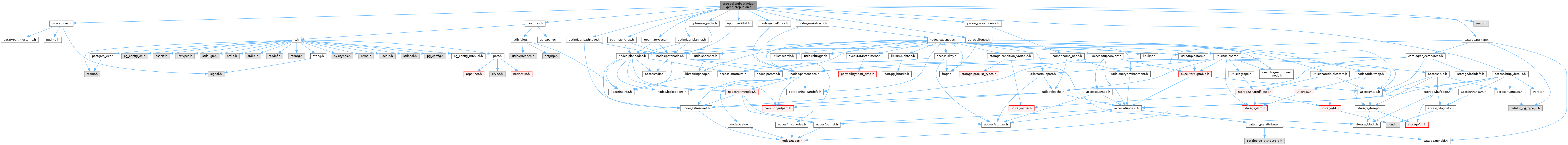


Function Documentation

◆ build_setop_child_paths()

static

Definition at line 488 of file prepunion.c.

References add_partial_path(), add_path(), add_setop_child_rel_equivalences(), Assert, bms_is_empty, RelOptInfo::cheapest_total_path, RelOptInfo::consider_parallel, convert_subquery_pathkeys(), create_incremental_sort_path(), create_sort_path(), create_subqueryscan_path(), Query::distinctClause, enable_incremental_sort, estimate_num_groups(), fb(), fetch_upper_rel(), get_tlist_exprs(), Query::groupClause, Query::groupingSets, PlannerInfo::hasHavingQual, is_dummy_rel(), RelOptInfo::lateral_relids, lfirst, PlannerInfo::limit_tuples, linitial, make_tlist_from_pathtarget(), mark_dummy_rel(), NIL, PlannerInfo::parse, pathkeys_count_contained_in(), postprocess_setop_rel(), root, Path::rows, RTE_SUBQUERY, RelOptInfo::rtekind, set_subquery_size_estimates(), PlannerInfo::setop_pathkeys, subpath(), RelOptInfo::subroot, Query::targetList, and UPPERREL_FINAL.

Referenced by generate_nonunion_paths(), generate_recursion_path(), and generate_union_paths().

◆ create_setop_pathtarget()

static

Definition at line 1765 of file prepunion.c.

References create_pathtarget, fb(), lfirst, root, Path::rows, and PathTarget::width.

Referenced by generate_nonunion_paths() and generate_union_paths().

◆ generate_append_tlist()

static

Definition at line 1616 of file prepunion.c.

References Assert, exprType(), exprTypmod(), fb(), forthree, lappend(), lfirst, lfirst_oid, list_head(), list_length(), lnext(), makeTargetEntry(), makeVar(), NIL, palloc_array, pfree(), and pstrdup().

Referenced by generate_recursion_path() and generate_union_paths().

◆ generate_nonunion_paths()

static

Definition at line 1051 of file prepunion.c.

References add_path(), SetOperationStmt::all, Assert, bms_union(), build_setop_child_paths(), create_append_path(), create_setop_path(), create_setop_pathtarget(), create_sort_path(), elog, ereport, errcode(), errdetail(), errmsg(), ERROR, fb(), fetch_upper_rel(), generate_setop_grouplist(), generate_setop_tlist(), get_cheapest_path_for_pathkeys(), grouping_is_hashable(), grouping_is_sortable(), is_dummy_rel(), SetOperationStmt::larg, list_make1, list_make2, make_pathkeys_for_sortclauses(), mark_dummy_rel(), Min, NIL, SetOperationStmt::op, Path::pathkeys, pathkeys_contained_in(), SetOperationStmt::rarg, recurse_set_operations(), root, Path::rows, RTE_SUBQUERY, SETOP_EXCEPT, SETOP_HASHED, SETOP_INTERSECT, SETOP_SORTED, SETOPCMD_EXCEPT, SETOPCMD_EXCEPT_ALL, SETOPCMD_INTERSECT, SETOPCMD_INTERSECT_ALL, AppendPathInput::subpaths, TOTAL_COST, and UPPERREL_SETOP.

Referenced by recurse_set_operations().

◆ generate_recursion_path()

static

Definition at line 364 of file prepunion.c.

References add_path(), Assert, bms_union(), build_setop_child_paths(), create_pathtarget, create_recursiveunion_path(), elog, ereport, errcode(), errdetail(), errmsg(), ERROR, fb(), fetch_upper_rel(), generate_append_tlist(), generate_setop_grouplist(), grouping_is_hashable(), list_make2, NIL, postprocess_setop_rel(), recurse_set_operations(), root, Path::rows, RTE_SUBQUERY, SETOP_UNION, and UPPERREL_SETOP.

Referenced by plan_set_operations().

◆ generate_setop_grouplist()

static

Definition at line 1725 of file prepunion.c.

References Assert, copyObject, fb(), lfirst, list_head(), and lnext().

Referenced by generate_nonunion_paths(), generate_recursion_path(), and generate_union_paths().

◆ generate_setop_tlist()

static

Definition at line 1488 of file prepunion.c.

References applyRelabelType(), Assert, COERCE_IMPLICIT_CAST, coerce_to_common_type(), exprCollation(), exprType(), exprTypmod(), fb(), forfour, IsA, lappend(), lfirst, lfirst_oid, makeTargetEntry(), makeVar(), NIL, and pstrdup().

Referenced by generate_nonunion_paths() and recurse_set_operations().

◆ generate_union_paths()

static

Definition at line 690 of file prepunion.c.

References add_path(), AGG_HASHED, AGGSPLIT_SIMPLE, SetOperationStmt::all, Assert, bms_add_members(), build_setop_child_paths(), RelOptInfo::cheapest_total_path, RelOptInfo::consider_parallel, create_agg_path(), create_append_path(), create_gather_path(), create_merge_append_path(), create_setop_pathtarget(), create_sort_path(), create_unique_path(), enable_parallel_append, estimate_num_groups(), fb(), fetch_upper_rel(), forthree, generate_append_tlist(), generate_setop_grouplist(), get_cheapest_path_for_pathkeys(), grouping_is_hashable(), grouping_is_sortable(), is_dummy_rel(), lappend(), lfirst, lfirst_int, lfirst_node, linitial, list_length(), make_pathkeys_for_sortclauses(), mark_dummy_rel(), Max, max_parallel_workers_per_gather, Min, NIL, RelOptInfo::partial_pathlist, AppendPathInput::partial_subpaths, Path::pathkeys, RelOptInfo::pathlist, pg_leftmost_one_pos32(), plan_union_children(), RelOptInfo::relids, RELOPT_UPPER_REL, root, RTE_SUBQUERY, RelOptInfo::rtekind, subpath(), AppendPathInput::subpaths, TOTAL_COST, and UPPERREL_SETOP.

Referenced by recurse_set_operations().

◆ plan_set_operations()

RelOptInfo * plan_set_operations(PlannerInfo *root)

Definition at line 97 of file prepunion.c.

References Assert, castNode, fb(), generate_recursion_path(), IsA, NIL, parse(), recurse_set_operations(), root, and setup_simple_rel_arrays().

Referenced by grouping_planner().

◆ plan_union_children()

static

Definition at line 1400 of file prepunion.c.

References SetOperationStmt::all, equal(), fb(), IsA, lappend(), lappend_int(), SetOperationStmt::larg, lcons(), linitial, list_delete_first(), list_make1, NIL, SetOperationStmt::op, SetOperationStmt::rarg, recurse_set_operations(), and root.

Referenced by generate_union_paths().

◆ postprocess_setop_rel()

static

Definition at line 1462 of file prepunion.c.

References create_upper_paths_hook, fb(), root, set_cheapest(), and UPPERREL_SETOP.

Referenced by build_setop_child_paths(), generate_recursion_path(), and recurse_set_operations().

◆ recurse_set_operations()

static

Definition at line 213 of file prepunion.c.

References apply_projection_to_path(), Assert, build_simple_rel(), check_stack_depth(), choose_plan_name(), create_pathtarget, create_projection_path(), elog, ERROR, fb(), generate_nonunion_paths(), generate_setop_tlist(), generate_union_paths(), IsA, lfirst, NIL, nodeTag, SetOperationStmt::op, RelOptInfo::partial_pathlist, RelOptInfo::pathlist, postprocess_setop_rel(), PlannerInfo::processed_tlist, RelOptInfo::reltarget, root, SETOP_UNION, subpath(), subquery_planner(), RelOptInfo::subroot, tlist_same_collations(), and tlist_same_datatypes().

Referenced by generate_nonunion_paths(), generate_recursion_path(), plan_set_operations(), and plan_union_children().
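
The "Referenced by" lists above describe a call graph rooted at plan_set_operations(). The sketch below transcribes those lists into a small standalone edge table so that callers can be looked up programmatically. It is an illustration only and is not part of prepunion.c: the names CallEdge, setop_call_edges, count_callers(), and calls_directly() are hypothetical helpers invented here, and the table records only the caller/callee pairs listed on this page.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* One caller -> callee edge, transcribed from the "Referenced by" lists. */
typedef struct CallEdge
{
    const char *caller;
    const char *callee;
} CallEdge;

/* Hypothetical table; it mirrors this page, not the compiler's view. */
static const CallEdge setop_call_edges[] = {
    {"grouping_planner", "plan_set_operations"},
    {"plan_set_operations", "generate_recursion_path"},
    {"plan_set_operations", "recurse_set_operations"},
    {"recurse_set_operations", "generate_union_paths"},
    {"recurse_set_operations", "generate_nonunion_paths"},
    {"recurse_set_operations", "postprocess_setop_rel"},
    {"recurse_set_operations", "generate_setop_tlist"},
    {"generate_union_paths", "plan_union_children"},
    {"generate_union_paths", "build_setop_child_paths"},
    {"generate_union_paths", "create_setop_pathtarget"},
    {"generate_union_paths", "generate_append_tlist"},
    {"generate_union_paths", "generate_setop_grouplist"},
    {"generate_nonunion_paths", "recurse_set_operations"},
    {"generate_nonunion_paths", "build_setop_child_paths"},
    {"generate_nonunion_paths", "create_setop_pathtarget"},
    {"generate_nonunion_paths", "generate_setop_grouplist"},
    {"generate_nonunion_paths", "generate_setop_tlist"},
    {"generate_recursion_path", "recurse_set_operations"},
    {"generate_recursion_path", "build_setop_child_paths"},
    {"generate_recursion_path", "generate_append_tlist"},
    {"generate_recursion_path", "generate_setop_grouplist"},
    {"generate_recursion_path", "postprocess_setop_rel"},
    {"plan_union_children", "recurse_set_operations"},
    {"build_setop_child_paths", "postprocess_setop_rel"},
};

#define N_EDGES (sizeof(setop_call_edges) / sizeof(setop_call_edges[0]))

/* Count how many functions in the table call `callee` directly. */
static int
count_callers(const char *callee)
{
    int         n = 0;

    assert(callee != NULL);
    for (size_t i = 0; i < N_EDGES; i++)
    {
        if (strcmp(setop_call_edges[i].callee, callee) == 0)
            n++;
    }
    return n;
}

/* Return 1 if the table records a direct call caller -> callee, else 0. */
static int
calls_directly(const char *caller, const char *callee)
{
    assert(caller != NULL && callee != NULL);
    for (size_t i = 0; i < N_EDGES; i++)
    {
        if (strcmp(setop_call_edges[i].caller, caller) == 0 &&
            strcmp(setop_call_edges[i].callee, callee) == 0)
            return 1;
    }
    return 0;
}
```

For example, count_callers("recurse_set_operations") counts the four callers listed for that function, and calls_directly("plan_set_operations", "generate_union_paths") is false because union planning is reached only through recurse_set_operations().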