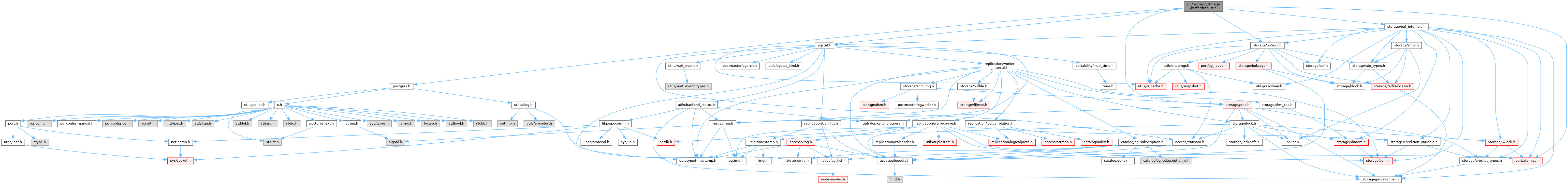

`#include "postgres.h"`

`#include "pgstat.h"`

`#include "port/atomics.h"`

`#include "storage/buf_internals.h"`

`#include "storage/bufmgr.h"`

`#include "storage/proc.h"`


Data Structures

| Kind | Name |
|---|---|
| struct | BufferStrategyControl |
| struct | BufferAccessStrategyData |

Macros

| Kind | Definition |
|---|---|
| #define | `INT_ACCESS_ONCE(var) ((int)(*((volatile int *)&(var))))` |

Typedefs

| Type | Name |
|---|---|
| `typedef struct BufferAccessStrategyData` | `BufferAccessStrategyData` |

Functions

| Return type | Function |
|---|---|
| `static BufferDesc *` | `GetBufferFromRing(BufferAccessStrategy strategy, uint64 *buf_state)` |
| `static void` | `AddBufferToRing(BufferAccessStrategy strategy, BufferDesc *buf)` |
| `static uint32` | `ClockSweepTick(void)` |
| `BufferDesc *` | `StrategyGetBuffer(BufferAccessStrategy strategy, uint64 *buf_state, bool *from_ring)` |
| `int` | `StrategySyncStart(uint32 *complete_passes, uint32 *num_buf_alloc)` |
| `void` | `StrategyNotifyBgWriter(int bgwprocno)` |
| `Size` | `StrategyShmemSize(void)` |
| `void` | `StrategyInitialize(bool init)` |
| `BufferAccessStrategy` | `GetAccessStrategy(BufferAccessStrategyType btype)` |
| `BufferAccessStrategy` | `GetAccessStrategyWithSize(BufferAccessStrategyType btype, int ring_size_kb)` |
| `int` | `GetAccessStrategyBufferCount(BufferAccessStrategy strategy)` |
| `int` | `GetAccessStrategyPinLimit(BufferAccessStrategy strategy)` |
| `void` | `FreeAccessStrategy(BufferAccessStrategy strategy)` |
| `IOContext` | `IOContextForStrategy(BufferAccessStrategy strategy)` |
| `bool` | `StrategyRejectBuffer(BufferAccessStrategy strategy, BufferDesc *buf, bool from_ring)` |

Variables

| Type | Name |
|---|---|
| `static BufferStrategyControl *` | `StrategyControl = NULL` |

Macro Definition Documentation

◆ INT_ACCESS_ONCE

`#define INT_ACCESS_ONCE(var) ((int)(*((volatile int *)&(var))))`

Definition at line 24 of file freelist.c.
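`INT_ACCESS_ONCE` reads its argument through a `volatile int` lvalue, which forces the compiler to perform exactly one load at that point rather than caching the value or silently re-reading the shared variable later. It is not a memory barrier. The sketch below only copies the macro from the summary above; `shared_value` and `snapshot_nonnegative()` are hypothetical names standing in for a field that another process may change concurrently.

```c
#include <assert.h>

/* Macro copied from the summary table above. */
#define INT_ACCESS_ONCE(var) ((int)(*((volatile int *)&(var))))

/* Hypothetical shared field that another process could update at any time. */
static int shared_value = 0;

/*
 * Take a single snapshot of shared_value.  The test and the return both use
 * the local copy, so they cannot observe two different values.
 */
static int
snapshot_nonnegative(void)
{
	int v = INT_ACCESS_ONCE(shared_value);

	return (v >= 0) ? v : 0;
}
```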

Typedef Documentation

◆ BufferAccessStrategyData

`typedef struct BufferAccessStrategyData BufferAccessStrategyData`

Function Documentation

◆ AddBufferToRing()

`static void AddBufferToRing(BufferAccessStrategy strategy, BufferDesc *buf)`

Definition at line 737 of file freelist.c.

References buf, BufferDescriptorGetBuffer(), BufferAccessStrategyData::buffers, and BufferAccessStrategyData::current.

Referenced by StrategyGetBuffer().

◆ ClockSweepTick()

`static uint32 ClockSweepTick(void)`

Definition at line 100 of file freelist.c.

References BufferStrategyControl::buffer_strategy_lock, BufferStrategyControl::completePasses, fb(), NBuffers, BufferStrategyControl::nextVictimBuffer, pg_atomic_compare_exchange_u32(), pg_atomic_fetch_add_u32(), SpinLockAcquire, SpinLockRelease, StrategyControl, and success.

Referenced by StrategyGetBuffer().
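Judging by its references, `ClockSweepTick()` advances the shared `nextVictimBuffer` counter with an atomic fetch-and-add and reduces the result modulo `NBuffers`; when a tick lands on a wraparound, a compare-and-exchange performed under `buffer_strategy_lock` folds the counter back into range and increments `completePasses`. The sketch below reproduces that shape with C11 `<stdatomic.h>`. The `clock_state` struct, its fields, and the `atomic_flag` spinlock are hypothetical stand-ins, not PostgreSQL types.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for the relevant BufferStrategyControl fields. */
struct clock_state
{
	atomic_flag lock;				/* plays buffer_strategy_lock */
	_Atomic uint32_t next_victim;	/* plays nextVictimBuffer */
	uint32_t complete_passes;		/* plays completePasses */
	uint32_t nbuffers;				/* plays NBuffers */
};

static uint32_t
clock_sweep_tick(struct clock_state *cs)
{
	/* Claim a slot; the raw counter may temporarily run past nbuffers. */
	uint32_t victim = atomic_fetch_add(&cs->next_victim, 1);

	if (victim >= cs->nbuffers)
	{
		uint32_t original = victim;

		victim %= cs->nbuffers;

		/* Only the caller whose tick wrapped to slot 0 folds the counter. */
		if (victim == 0)
		{
			uint32_t expected = original + 1;
			bool done = false;

			while (!done)
			{
				while (atomic_flag_test_and_set(&cs->lock))
					;			/* spin until the lock is free */
				uint32_t wrapped = expected % cs->nbuffers;

				/* On failure, expected is reloaded with the current value. */
				done = atomic_compare_exchange_strong(&cs->next_victim,
													  &expected, wrapped);
				if (done)
					cs->complete_passes++;
				atomic_flag_clear(&cs->lock);
			}
		}
	}
	return victim;
}
```

With four buffers, ten ticks visit slots 0, 1, 2, 3, 0, 1, 2, 3, 0, 1 and record two completed passes.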

◆ FreeAccessStrategy()

`void FreeAccessStrategy(BufferAccessStrategy strategy)`

Definition at line 643 of file freelist.c.

Referenced by blgetbitmap(), FreeBulkInsertState(), heap_endscan(), initscan(), parallel_vacuum_main(), and RelationCopyStorageUsingBuffer().

◆ GetAccessStrategy()

`BufferAccessStrategy GetAccessStrategy(BufferAccessStrategyType btype)`

Definition at line 461 of file freelist.c.

References BAS_BULKREAD, BAS_BULKWRITE, BAS_NORMAL, BAS_VACUUM, effective_io_concurrency, elog, ERROR, fb(), GetAccessStrategyWithSize(), GetPinLimit(), io_combine_limit, and Max.

Referenced by blgetbitmap(), bt_check_every_level(), collect_corrupt_items(), collect_visibility_data(), GetBulkInsertState(), gin_check_parent_keys_consistency(), gin_check_posting_tree_parent_keys_consistency(), initscan(), pgstat_index(), pgstathashindex(), pgstatindex_impl(), RelationCopyStorageUsingBuffer(), ScanSourceDatabasePgClass(), statapprox_heap(), and verify_heapam().
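The references to `GetPinLimit()`, `effective_io_concurrency`, `io_combine_limit` and `Max` suggest that, for `BAS_BULKREAD`, the ring size is derived from I/O settings before `GetAccessStrategyWithSize()` is called. The sketch below is our reading of that calculation, not a copy of the source: the 256 kB minimum, the 8 kB block size, and the hypothetical helper `bulkread_ring_kb()` are assumptions, and the three settings are passed in as plain integers.

```c
#include <assert.h>

#define BLCKSZ_ASSUMED 8192		/* assumption: default 8 kB block size */
#define MAX(a, b) ((a) > (b) ? (a) : (b))

/*
 * Hypothetical helper: ring size in kB for a bulk-read strategy.
 * pin_limit plays the role of GetPinLimit().
 */
static int
bulkread_ring_kb(int pin_limit, int effective_io_concurrency,
				 int io_combine_limit)
{
	int block_kb = BLCKSZ_ASSUMED / 1024;
	int ring_kb = 256;			/* assumed minimum ring size */

	/* No point exceeding what we may pin, but never go below the minimum. */
	int ring_max_kb = MAX(ring_kb, pin_limit * block_kb);

	/* Room for in-flight reads: each IO spans up to io_combine_limit blocks. */
	ring_kb += block_kb * effective_io_concurrency * io_combine_limit;

	if (ring_kb > ring_max_kb)
		ring_kb = ring_max_kb;
	return ring_kb;
}
```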

◆ GetAccessStrategyBufferCount()

`int GetAccessStrategyBufferCount(BufferAccessStrategy strategy)`

Definition at line 586 of file freelist.c.

References fb(), and BufferAccessStrategyData::nbuffers.

Referenced by parallel_vacuum_init().

◆ GetAccessStrategyPinLimit()

`int GetAccessStrategyPinLimit(BufferAccessStrategy strategy)`

Definition at line 609 of file freelist.c.

References BAS_BULKREAD, BufferAccessStrategyData::btype, fb(), BufferAccessStrategyData::nbuffers, and NBuffers.

Referenced by read_stream_begin_impl().
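`GetAccessStrategyBufferCount()` and `GetAccessStrategyPinLimit()` both read the ring length `nbuffers`; the pin limit additionally distinguishes `BAS_BULKREAD` and falls back to `NBuffers`. A sketch of that policy, assuming (from our reading of the source) that a NULL strategy has a buffer count of 0 and is limited only by the whole pool, that a bulk-read ring may be pinned in full, and that other rings allow only half their buffers to be pinned at once. The enum and struct are hypothetical stand-ins.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for BufferAccessStrategyType and the strategy object. */
enum strategy_type { STRAT_NORMAL, STRAT_BULKREAD, STRAT_BULKWRITE, STRAT_VACUUM };

struct strategy
{
	enum strategy_type btype;
	int nbuffers;				/* ring size in buffers */
};

/* Like GetAccessStrategyBufferCount(): 0 when there is no ring. */
static int
strategy_buffer_count(const struct strategy *s)
{
	return (s == NULL) ? 0 : s->nbuffers;
}

/* Like GetAccessStrategyPinLimit(): how many buffers a reader may pin ahead. */
static int
strategy_pin_limit(const struct strategy *s, int shared_nbuffers)
{
	if (s == NULL)
		return shared_nbuffers;	/* no ring: limited only by the pool */

	switch (s->btype)
	{
		case STRAT_BULKREAD:
			/* Dirty buffers get rejected, so the whole ring may be pinned. */
			return s->nbuffers;
		default:
			/* Keep half the ring unpinned, deferring writeback. */
			return s->nbuffers / 2;
	}
}
```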

◆ GetAccessStrategyWithSize()

`BufferAccessStrategy GetAccessStrategyWithSize(BufferAccessStrategyType btype, int ring_size_kb)`

Definition at line 546 of file freelist.c.

References Assert, BufferAccessStrategyData::btype, fb(), Min, BufferAccessStrategyData::nbuffers, NBuffers, and palloc0().

Referenced by do_autovacuum(), ExecVacuum(), GetAccessStrategy(), and parallel_vacuum_main().
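The references to `Min`, `NBuffers`, `palloc0()` and the `nbuffers` field indicate that `GetAccessStrategyWithSize()` converts the requested size from kilobytes to a buffer count, clamps it against shared buffers, and allocates a zeroed object whose `buffers` array holds the ring; `FreeAccessStrategy()` releases it. A sketch under stated assumptions: an 8 kB block size, a size that rounds to 0 buffers meaning "no ring", a cap of one eighth of the pool (our recollection, not confirmed by this page), and `calloc`/`free` standing in for `palloc0`/`pfree`. The `ring_strategy` type and functions are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

#define BLCKSZ_ASSUMED 8192		/* assumption: default 8 kB block size */

/* Hypothetical stand-in for BufferAccessStrategyData. */
struct ring_strategy
{
	int nbuffers;				/* ring length */
	int current;				/* index of the most recently used slot */
	int buffers[];				/* flexible array member; 0 = empty slot */
};

static struct ring_strategy *
ring_strategy_create(int ring_size_kb, int shared_nbuffers)
{
	int ring_buffers;
	struct ring_strategy *s;

	assert(ring_size_kb >= 0);

	/* Convert kilobytes to buffers. */
	ring_buffers = ring_size_kb / (BLCKSZ_ASSUMED / 1024);
	if (ring_buffers == 0)
		return NULL;			/* no ring: use the default strategy */

	/* Assumed cap: at most 1/8 of the pool. */
	if (ring_buffers > shared_nbuffers / 8)
		ring_buffers = shared_nbuffers / 8;
	assert(ring_buffers > 0);	/* assumes a pool of at least 8 buffers */

	/* Zeroed allocation, so every slot starts empty. */
	s = calloc(1, sizeof(*s) + (size_t) ring_buffers * sizeof(int));
	if (s == NULL)
		return NULL;
	s->nbuffers = ring_buffers;
	return s;
}

static void
ring_strategy_free(struct ring_strategy *s)
{
	free(s);					/* free(NULL) is a no-op */
}
```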

◆ GetBufferFromRing()

`static BufferDesc * GetBufferFromRing(BufferAccessStrategy strategy, uint64 *buf_state)`

Definition at line 658 of file freelist.c.

References BM_LOCKED, buf, BUF_REFCOUNT_ONE, BUF_STATE_GET_REFCOUNT, BUF_STATE_GET_USAGECOUNT, BufferDescriptorGetBuffer(), BufferAccessStrategyData::buffers, BufferAccessStrategyData::current, fb(), GetBufferDescriptor(), InvalidBuffer, BufferAccessStrategyData::nbuffers, pg_atomic_compare_exchange_u64(), pg_atomic_read_u64(), TrackNewBufferPin(), unlikely, and WaitBufHdrUnlocked().

Referenced by StrategyGetBuffer().
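The references of `GetBufferFromRing()` (`current`, `nbuffers`, `InvalidBuffer`, and the refcount and usage-count macros) describe a ring walk: advance `current` with wraparound, give up if the slot is empty, and reuse the buffer only if nobody has it pinned and its usage count is low; otherwise the caller falls back to the clock sweep, and `AddBufferToRing()` stores the sweep's victim in the current slot. This is a single-threaded sketch: the real code updates the buffer state word with `pg_atomic_compare_exchange_u64()` and waits out `BM_LOCKED`, both omitted here. The usage-count threshold of 1 is our reading of the source, and all names are hypothetical.

```c
#include <assert.h>

#define INVALID_BUFFER 0		/* like InvalidBuffer; buffer ids start at 1 */
#define RING_SIZE 4

/* Hypothetical single-threaded stand-in for a buffer header. */
struct buf_hdr
{
	int refcount;
	int usage_count;
};

struct ring
{
	int current;
	int buffers[RING_SIZE];		/* 1-based buffer ids, 0 = empty slot */
};

/* Returns the id of a reusable ring buffer (now pinned), or INVALID_BUFFER. */
static int
ring_get(struct ring *r, struct buf_hdr *pool)
{
	int id;

	/* Advance to the next slot, wrapping around. */
	if (++r->current >= RING_SIZE)
		r->current = 0;

	id = r->buffers[r->current];
	if (id == INVALID_BUFFER)
		return INVALID_BUFFER;	/* empty slot: caller runs the clock sweep */

	struct buf_hdr *b = &pool[id - 1];

	if (b->refcount == 0 && b->usage_count <= 1)
	{
		b->refcount++;			/* pin it on behalf of the caller */
		return id;
	}
	return INVALID_BUFFER;		/* busy elsewhere: caller falls back */
}

/* Like AddBufferToRing(): remember the sweep's victim in the current slot. */
static void
ring_add(struct ring *r, int id)
{
	r->buffers[r->current] = id;
}
```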

◆ IOContextForStrategy()

`IOContext IOContextForStrategy(BufferAccessStrategy strategy)`

Definition at line 747 of file freelist.c.

References BAS_BULKREAD, BAS_BULKWRITE, BAS_NORMAL, BAS_VACUUM, BufferAccessStrategyData::btype, elog, ERROR, IOCONTEXT_BULKREAD, IOCONTEXT_BULKWRITE, IOCONTEXT_NORMAL, IOCONTEXT_VACUUM, and pg_unreachable.

Referenced by AsyncReadBuffers(), ExtendBufferedRelShared(), PinBufferForBlock(), and WaitReadBuffers().
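The reference list of `IOContextForStrategy()` pairs each `BufferAccessStrategyType` with an `IOContext` value used for I/O statistics. A sketch with hypothetical stand-in enums, assuming (as we recall from the source) that a NULL strategy maps to the normal context and that a strategy object of type `BAS_NORMAL` should never exist; where the original raises `elog(ERROR)`, this sketch prints a message and aborts.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical stand-ins for BufferAccessStrategyType and IOContext. */
enum strategy_type { STRAT_NORMAL, STRAT_BULKREAD, STRAT_BULKWRITE, STRAT_VACUUM };
enum io_context { IOCTX_BULKREAD, IOCTX_BULKWRITE, IOCTX_NORMAL, IOCTX_VACUUM };

struct strategy
{
	enum strategy_type btype;
};

static enum io_context
io_context_for(const struct strategy *s)
{
	/* No strategy means ordinary shared-buffer I/O. */
	if (s == NULL)
		return IOCTX_NORMAL;

	switch (s->btype)
	{
		case STRAT_BULKREAD:
			return IOCTX_BULKREAD;
		case STRAT_BULKWRITE:
			return IOCTX_BULKWRITE;
		case STRAT_VACUUM:
			return IOCTX_VACUUM;
		case STRAT_NORMAL:
			/* A NORMAL strategy object is never handed out. */
			break;
	}
	fprintf(stderr, "unrecognized strategy type %d\n", (int) s->btype);
	abort();
}
```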

◆ StrategyGetBuffer()

`BufferDesc * StrategyGetBuffer(BufferAccessStrategy strategy, uint64 *buf_state, bool *from_ring)`

Definition at line 174 of file freelist.c.

References AddBufferToRing(), BufferStrategyControl::bgwprocno, BM_LOCKED, buf, BUF_REFCOUNT_ONE, BUF_STATE_GET_REFCOUNT, BUF_STATE_GET_USAGECOUNT, BUF_USAGECOUNT_ONE, BufferDescriptorGetBuffer(), ClockSweepTick(), elog, ERROR, fb(), GetBufferDescriptor(), GetBufferFromRing(), GetPGProcByNumber, INT_ACCESS_ONCE, NBuffers, BufferStrategyControl::numBufferAllocs, pg_atomic_compare_exchange_u64(), pg_atomic_fetch_add_u32(), pg_atomic_read_u64(), SetLatch(), StrategyControl, TrackNewBufferPin(), unlikely, and WaitBufHdrUnlocked().

Referenced by GetVictimBuffer().
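When the ring yields nothing, `StrategyGetBuffer()` falls back to the clock sweep, as its references to `ClockSweepTick()`, the usage-count macros and `elog(ERROR)` suggest. Each tick visits one buffer: an unpinned buffer with a nonzero usage count has it decremented (a "second chance"), and the first unpinned buffer with usage count zero is pinned and returned. A trycounter, reset whenever a decrement makes progress, bounds the search so that a pool in which every buffer is pinned fails instead of spinning forever. This single-threaded sketch uses plain integers instead of the atomic state word; the reset-on-decrement rule is our reading of the source, the names are hypothetical, and it returns -1 where the original raises an error.

```c
#include <assert.h>

/* Hypothetical single-threaded buffer header. */
struct buf_hdr
{
	int refcount;
	int usage_count;
};

struct pool
{
	struct buf_hdr *bufs;
	int nbuffers;
	unsigned next_victim;		/* plain counter instead of an atomic */
};

/* Non-atomic stand-in for ClockSweepTick(). */
static int
tick(struct pool *p)
{
	return (int) (p->next_victim++ % (unsigned) p->nbuffers);
}

/* Returns the index of a newly pinned victim, or -1 if all are pinned. */
static int
clock_sweep_get(struct pool *p)
{
	int trycounter = p->nbuffers;

	for (;;)
	{
		struct buf_hdr *b = &p->bufs[tick(p)];

		if (b->refcount != 0)
		{
			/* Pinned: skip it; give up after a full lap with no progress. */
			if (--trycounter == 0)
				return -1;
			continue;
		}
		if (b->usage_count != 0)
		{
			b->usage_count--;	/* second chance; counts as progress */
			trycounter = p->nbuffers;
			continue;
		}
		b->refcount++;			/* unpinned with usage count 0: take it */
		return (int) (b - p->bufs);
	}
}
```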

◆ StrategyInitialize()

`void StrategyInitialize(bool init)`

Definition at line 401 of file freelist.c.

References Assert, BufferStrategyControl::bgwprocno, BufferStrategyControl::buffer_strategy_lock, BufferStrategyControl::completePasses, init, InitBufTable(), NBuffers, BufferStrategyControl::nextVictimBuffer, NUM_BUFFER_PARTITIONS, BufferStrategyControl::numBufferAllocs, pg_atomic_init_u32(), ShmemInitStruct(), SpinLockInit, and StrategyControl.

Referenced by BufferManagerShmemInit().

◆ StrategyNotifyBgWriter()

`void StrategyNotifyBgWriter(int bgwprocno)`

Definition at line 358 of file freelist.c.

References BufferStrategyControl::bgwprocno, BufferStrategyControl::buffer_strategy_lock, SpinLockAcquire, SpinLockRelease, and StrategyControl.

Referenced by BackgroundWriterMain().
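`StrategyNotifyBgWriter()` stores the background writer's process number in `bgwprocno` under `buffer_strategy_lock`, and `StrategyGetBuffer()` reads it with `INT_ACCESS_ONCE` and, per its references to `GetPGProcByNumber` and `SetLatch()`, wakes that process. A single-threaded sketch of the handshake, assuming (our reading) that the allocating backend resets the field to -1 before setting the latch, so a registration triggers at most one wake-up. `latch_set_count` is a hypothetical stand-in for the process latch, and the spinlock is omitted.

```c
#include <assert.h>

#define INT_ACCESS_ONCE(var) ((int)(*((volatile int *)&(var))))
#define MAX_PROCS 8

/* Hypothetical stand-in for BufferStrategyControl; -1 means nobody waits. */
struct control
{
	int bgwprocno;
};

/* Hypothetical per-process latch: counts how often it was set. */
static int latch_set_count[MAX_PROCS];

/* Like StrategyNotifyBgWriter(): register (or, with -1, unregister). */
static void
notify_bgwriter(struct control *ctl, int procno)
{
	ctl->bgwprocno = procno;	/* the real code holds buffer_strategy_lock */
}

/* The wake-up step performed at the start of a buffer allocation. */
static void
maybe_wake_bgwriter(struct control *ctl)
{
	int procno = INT_ACCESS_ONCE(ctl->bgwprocno);

	if (procno != -1)
	{
		ctl->bgwprocno = -1;		/* reset first so only one wake-up happens */
		latch_set_count[procno]++;	/* stands in for SetLatch() */
	}
}
```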

◆ StrategyRejectBuffer()

`bool StrategyRejectBuffer(BufferAccessStrategy strategy, BufferDesc *buf, bool from_ring)`

Definition at line 787 of file freelist.c.

References BAS_BULKREAD, BufferAccessStrategyData::btype, buf, BufferDescriptorGetBuffer(), BufferAccessStrategyData::buffers, BufferAccessStrategyData::current, fb(), and InvalidBuffer.

Referenced by GetVictimBuffer().
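`StrategyRejectBuffer()` consults only `btype`, `buffers`, `current` and `InvalidBuffer`. As we understand the caller, `GetVictimBuffer()` asks about a dirty victim whose write would first require a WAL flush; a bulk-read ring then declines a buffer it took from the ring and clears that slot, so a ring full of dirty pages cannot loop forever, while other strategies never reject. A sketch with hypothetical stand-in types and integer buffer ids:

```c
#include <assert.h>
#include <stdbool.h>

#define INVALID_BUFFER 0
#define RING_SIZE 4

/* Hypothetical stand-ins for the strategy type and object. */
enum strategy_type { STRAT_NORMAL, STRAT_BULKREAD, STRAT_BULKWRITE, STRAT_VACUUM };

struct strategy
{
	enum strategy_type btype;
	int current;
	int buffers[RING_SIZE];
};

/* Returns true if the caller should drop buf_id and pick another victim. */
static bool
strategy_reject(struct strategy *s, int buf_id, bool from_ring)
{
	/* Only bulk reads reject. */
	if (s->btype != STRAT_BULKREAD)
		return false;

	/* Leave buffers that came from the clock sweep alone. */
	if (!from_ring || s->buffers[s->current] != buf_id)
		return false;

	/* Forget this slot so an all-dirty ring cannot loop forever. */
	s->buffers[s->current] = INVALID_BUFFER;
	return true;
}
```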

◆ StrategyShmemSize()

`Size StrategyShmemSize(void)`

Definition at line 380 of file freelist.c.

References add_size(), BufTableShmemSize(), MAXALIGN, NBuffers, and NUM_BUFFER_PARTITIONS.

Referenced by BufferManagerShmemSize().
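The references to `add_size()`, `MAXALIGN` and `BufTableShmemSize()` indicate that `StrategyShmemSize()` sums the buffer lookup table's size and the maximally aligned size of the control struct, with overflow-checked addition. A sketch assuming an 8-byte maximum alignment; the hash table size and `sizeof(BufferStrategyControl)` are passed in as plain numbers, and `add_size_sketch()`, `MAXALIGN_SKETCH` and `strategy_shmem_size()` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <stdlib.h>

#define MAXIMUM_ALIGNOF_ASSUMED 8	/* assumption: typical 64-bit platform */
#define MAXALIGN_SKETCH(len) \
	(((size_t) (len) + (MAXIMUM_ALIGNOF_ASSUMED - 1)) & \
	 ~((size_t) (MAXIMUM_ALIGNOF_ASSUMED - 1)))

/* Overflow-checked addition in the spirit of add_size(). */
static size_t
add_size_sketch(size_t s1, size_t s2)
{
	size_t result = s1 + s2;

	if (result < s1)			/* unsigned wraparound means overflow */
	{
		fprintf(stderr, "requested shared memory size overflows size_t\n");
		abort();
	}
	return result;
}

/* table_size plays BufTableShmemSize(...); control_size plays sizeof(...). */
static size_t
strategy_shmem_size(size_t table_size, size_t control_size)
{
	size_t size = 0;

	size = add_size_sketch(size, table_size);
	size = add_size_sketch(size, MAXALIGN_SKETCH(control_size));
	return size;
}
```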

◆ StrategySyncStart()

`int StrategySyncStart(uint32 *complete_passes, uint32 *num_buf_alloc)`

Definition at line 321 of file freelist.c.

References BufferStrategyControl::buffer_strategy_lock, BufferStrategyControl::completePasses, fb(), NBuffers, BufferStrategyControl::nextVictimBuffer, BufferStrategyControl::numBufferAllocs, pg_atomic_exchange_u32(), pg_atomic_read_u32(), SpinLockAcquire, SpinLockRelease, and StrategyControl.

Referenced by BgBufferSync().
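`BgBufferSync()` calls `StrategySyncStart()` to learn where the clock hand is and how many buffers were allocated since its previous call. Going by the references, the function reads `nextVictimBuffer`, returns it modulo `NBuffers`, reports `completePasses` plus any wraparounds that `ClockSweepTick()` has not yet folded in, and atomically swaps `numBufferAllocs` with zero. A C11 sketch with a hypothetical `sweep_control` struct; the spinlock the real function holds is omitted.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the relevant BufferStrategyControl fields. */
struct sweep_control
{
	_Atomic uint32_t next_victim;	/* may exceed nbuffers until folded */
	uint32_t complete_passes;
	_Atomic uint32_t num_allocs;	/* allocations since the last sync */
	uint32_t nbuffers;
};

static int
sync_start(struct sweep_control *sc, uint32_t *complete_passes,
		   uint32_t *num_buf_alloc)
{
	/* The real function holds buffer_strategy_lock for this whole block. */
	uint32_t next = atomic_load(&sc->next_victim);
	int result = (int) (next % sc->nbuffers);

	/* Include laps that have not yet been folded into complete_passes. */
	if (complete_passes)
		*complete_passes = sc->complete_passes + next / sc->nbuffers;

	/* Read and reset the allocation counter in one atomic step. */
	if (num_buf_alloc)
		*num_buf_alloc = atomic_exchange(&sc->num_allocs, 0);
	return result;
}
```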

Variable Documentation

◆ StrategyControl

`static BufferStrategyControl *StrategyControl = NULL`

Definition at line 57 of file freelist.c.

Referenced by ClockSweepTick(), StrategyGetBuffer(), StrategyInitialize(), StrategyNotifyBgWriter(), and StrategySyncStart().