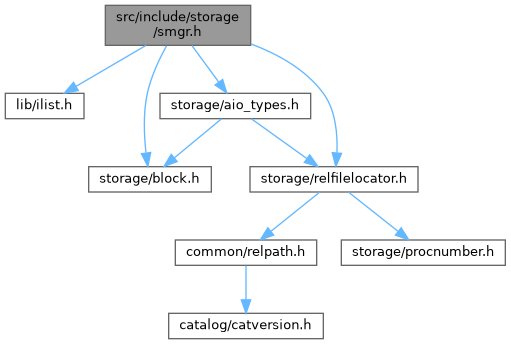

#include "lib/ilist.h"

#include "storage/aio_types.h"

#include "storage/block.h"

#include "storage/relfilelocator.h"


Data Structures

| struct | SMgrRelationData |

Macros

| #define | SmgrIsTemp(smgr) RelFileLocatorBackendIsTemp((smgr)->smgr_rlocator) |

Typedefs

| typedef struct SMgrRelationData | SMgrRelationData |

| typedef SMgrRelationData * | SMgrRelation |

Variables

| PGDLLIMPORT const PgAioTargetInfo | aio_smgr_target_info |

Macro Definition Documentation

◆ SmgrIsTemp

| #define | SmgrIsTemp(smgr) RelFileLocatorBackendIsTemp((smgr)->smgr_rlocator) |

Definition at line 74 of file smgr.h.
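The macro above forwards the relation's `smgr_rlocator` member to `RelFileLocatorBackendIsTemp`. The following is a minimal sketch of that shape, not the PostgreSQL definition: every `Mock`-prefixed name is hypothetical, and the rule that a relation counts as temporary when it has an owning backend is an assumption made for illustration.

```c
#include <assert.h>

/* Hypothetical stand-ins; the real types live in PostgreSQL headers. */
#define MOCK_INVALID_BACKEND (-1)

typedef struct MockRelFileLocatorBackend
{
    unsigned int relNumber;   /* placeholder for the physical relation id */
    int          backend;     /* owning backend, or MOCK_INVALID_BACKEND */
} MockRelFileLocatorBackend;

typedef struct MockSMgrRelationData
{
    MockRelFileLocatorBackend smgr_rlocator;
} MockSMgrRelationData;

typedef MockSMgrRelationData *MockSMgrRelation;

/* Assumed rule: a relation is temporary when some backend owns it. */
#define MockRelFileLocatorBackendIsTemp(rlocator) \
    ((rlocator).backend != MOCK_INVALID_BACKEND)

/* Same shape as SmgrIsTemp: dereference the handle, test its locator. */
#define MockSmgrIsTemp(smgr) \
    MockRelFileLocatorBackendIsTemp((smgr)->smgr_rlocator)

/* Usage: returns 1 after checking one temporary and one permanent relation. */
static int
mock_smgr_is_temp_demo(void)
{
    MockSMgrRelationData temp_rel = { { 16384u, 7 } };
    MockSMgrRelationData perm_rel = { { 16385u, MOCK_INVALID_BACKEND } };
    MockSMgrRelation temp = &temp_rel;
    MockSMgrRelation perm = &perm_rel;

    assert(MockSmgrIsTemp(temp));
    assert(!MockSmgrIsTemp(perm));
    return 1;
}
```

Because the macro argument is parenthesized before `->` is applied, it can be given any pointer-valued expression, not only a plain variable name.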

Typedef Documentation

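◆ SMgrRelation

| typedef SMgrRelationData * | SMgrRelation |

◆ SMgrRelationData

| typedef struct SMgrRelationData | SMgrRelationData |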

Function Documentation

◆ AtEOXact_SMgr()

Definition at line 1017 of file smgr.c.

References smgrdestroyall().

Referenced by AbortTransaction(), AutoVacLauncherMain(), BackgroundWriterMain(), CheckpointerMain(), CommitTransaction(), PrepareTransaction(), and WalWriterMain().

◆ pgaio_io_set_target_smgr()

| extern |

Definition at line 1038 of file smgr.c.

References fb(), RelFileLocatorBackend::locator, pgaio_io_get_target_data(), pgaio_io_set_target(), PGAIO_TID_SMGR, SMgrRelationData::smgr_rlocator, and SmgrIsTemp.

Referenced by mdstartreadv().

◆ ProcessBarrierSmgrRelease()

Definition at line 1027 of file smgr.c.

References smgrreleaseall().

Referenced by ProcessProcSignalBarrier().

◆ smgrclose()

| extern |

Definition at line 374 of file smgr.c.

References fb(), and smgrrelease().

Referenced by DropRelationFiles(), fill_seq_with_data(), heapam_relation_copy_data(), heapam_relation_set_new_filelocator(), index_copy_data(), RelationCloseSmgr(), RelationSetNewRelfilenumber(), ScanSourceDatabasePgClass(), and smgrDoPendingDeletes().

◆ smgrcreate()

| extern |

Definition at line 481 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_create, and smgrsw.

Referenced by CreateAndCopyRelationData(), ExtendBufferedRelTo(), fill_seq_with_data(), heapam_relation_copy_data(), heapam_relation_set_new_filelocator(), index_build(), index_copy_data(), RelationCreateStorage(), smgr_redo(), and XLogReadBufferExtended().
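The references to `smgrsw` and `f_smgr::smgr_create` here, together with `SMgrRelationData::smgr_which` elsewhere on this page, suggest a table of function pointers indexed per relation. The following is a hedged sketch of that dispatch pattern only: all `mock_` and `Mock` names are hypothetical, the table holds a single made-up implementation, and the `HOLD_INTERRUPTS`/`RESUME_INTERRUPTS` bracketing listed in the references is left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct MockRelation MockRelation;

/* One row per storage manager implementation (hypothetical subset). */
typedef struct mock_f_smgr
{
    void (*smgr_create) (MockRelation *rel);
    bool (*smgr_exists) (const MockRelation *rel);
} mock_f_smgr;

struct MockRelation
{
    int  smgr_which;   /* index into mock_smgrsw */
    bool created;      /* stands in for on-disk state */
};

/* A made-up implementation backing row 0 of the table. */
static void
mock_md_create(MockRelation *rel)
{
    rel->created = true;
}

static bool
mock_md_exists(const MockRelation *rel)
{
    return rel->created;
}

static const mock_f_smgr mock_smgrsw[] = {
    { mock_md_create, mock_md_exists },
};

#define MOCK_NSMGR (sizeof(mock_smgrsw) / sizeof(mock_smgrsw[0]))

/* Public entry points look up the row, then call through the pointer. */
static void
mock_smgrcreate(MockRelation *rel)
{
    assert(rel->smgr_which >= 0 && (size_t) rel->smgr_which < MOCK_NSMGR);
    mock_smgrsw[rel->smgr_which].smgr_create(rel);
}

static bool
mock_smgrexists(const MockRelation *rel)
{
    assert(rel->smgr_which >= 0 && (size_t) rel->smgr_which < MOCK_NSMGR);
    return mock_smgrsw[rel->smgr_which].smgr_exists(rel);
}
```

With this layout, adding another storage manager means adding a row to the table; callers of the public entry points do not change.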

◆ smgrdestroyall()

Definition at line 386 of file smgr.c.

References dlist_mutable_iter::cur, dlist_container, dlist_foreach_modify, HOLD_INTERRUPTS, RESUME_INTERRUPTS, smgrdestroy(), and unpinned_relns.

Referenced by AtEOXact_SMgr(), BackgroundWriterMain(), CheckpointerMain(), RequestCheckpoint(), xlog_redo(), and XLogDropDatabase().

◆ smgrdosyncall()

| extern |

Definition at line 498 of file smgr.c.

References fb(), FlushRelationsAllBuffers(), HOLD_INTERRUPTS, i, MAX_FORKNUM, RESUME_INTERRUPTS, f_smgr::smgr_immedsync, SMgrRelationData::smgr_which, and smgrsw.

Referenced by smgrDoPendingSyncs().

◆ smgrdounlinkall()

| extern |

Definition at line 538 of file smgr.c.

References CacheInvalidateSmgr(), DropRelationsAllBuffers(), fb(), HOLD_INTERRUPTS, i, MAX_FORKNUM, palloc_array, pfree(), RESUME_INTERRUPTS, SMgrRelationData::smgr_rlocator, SMgrRelationData::smgr_which, and smgrsw.

Referenced by DropRelationFiles(), RelationSetNewRelfilenumber(), and smgrDoPendingDeletes().

◆ smgrexists()

| extern |

Definition at line 462 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_exists, and smgrsw.

Referenced by autoprewarm_database_main(), bt_index_check_callback(), CreateAndCopyRelationData(), DropRelationsAllBuffers(), ExtendBufferedRelTo(), FreeSpaceMapPrepareTruncateRel(), fsm_readbuf(), heapam_relation_copy_data(), index_build(), index_copy_data(), pg_prewarm(), RelationTruncate(), smgr_redo(), smgrDoPendingSyncs(), visibilitymap_prepare_truncate(), vm_readbuf(), and XLogPrefetcherNextBlock().

◆ smgrextend()

| extern |

Definition at line 620 of file smgr.c.

References fb(), HOLD_INTERRUPTS, InvalidBlockNumber, RESUME_INTERRUPTS, f_smgr::smgr_extend, and smgrsw.

Referenced by _hash_alloc_buckets(), RelationCopyStorageUsingBuffer(), and smgr_bulk_flush().

◆ smgrimmedsync()

| extern |

Definition at line 974 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_immedsync, and smgrsw.

Referenced by smgr_bulk_finish().

◆ smgrinit()

Definition at line 188 of file smgr.c.

References HOLD_INTERRUPTS, i, NSmgr, on_proc_exit(), RESUME_INTERRUPTS, f_smgr::smgr_init, smgrshutdown(), and smgrsw.

Referenced by BaseInit().

◆ smgrmaxcombine()

| extern |

Definition at line 697 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_maxcombine, and smgrsw.

Referenced by StartReadBuffersImpl().

◆ smgrnblocks()

| extern |

Definition at line 819 of file smgr.c.

References fb(), HOLD_INTERRUPTS, InvalidBlockNumber, RESUME_INTERRUPTS, f_smgr::smgr_nblocks, smgrnblocks_cached(), and smgrsw.

Referenced by ExtendBufferedRelLocal(), ExtendBufferedRelShared(), ExtendBufferedRelTo(), FreeSpaceMapPrepareTruncateRel(), fsm_readbuf(), gistBuildCallback(), pg_truncate_visibility_map(), RelationCopyStorage(), RelationCopyStorageUsingBuffer(), RelationGetNumberOfBlocksInFork(), RelationTruncate(), ScanSourceDatabasePgClass(), smgr_bulk_start_smgr(), smgr_redo(), smgrDoPendingSyncs(), table_block_relation_size(), visibilitymap_prepare_truncate(), vm_readbuf(), XLogPrefetcherNextBlock(), and XLogReadBufferExtended().

◆ smgrnblocks_cached()

| extern |

Definition at line 847 of file smgr.c.

References fb(), InRecovery, and InvalidBlockNumber.

Referenced by DropRelationBuffers(), DropRelationsAllBuffers(), and smgrnblocks().

◆ smgropen()

| extern |

Definition at line 240 of file smgr.c.

References Assert, ctl, dlist_init(), dlist_push_tail(), fb(), HASH_BLOBS, hash_create(), HASH_ELEM, HASH_ENTER, hash_search(), HOLD_INTERRUPTS, i, InvalidBlockNumber, HASHCTL::keysize, MAX_FORKNUM, RelFileNumberIsValid, RelFileLocator::relNumber, RESUME_INTERRUPTS, f_smgr::smgr_open, SMgrRelationHash, smgrsw, and unpinned_relns.

Referenced by CreateAndCopyRelationData(), CreateFakeRelcacheEntry(), DropRelationFiles(), fill_seq_with_data(), FlushBuffer(), FlushLocalBuffer(), IssuePendingWritebacks(), mdsyncfiletag(), ReadBufferWithoutRelcache(), RelationCopyStorageUsingBuffer(), RelationCreateStorage(), RelationGetSmgr(), RelationSetNewRelfilenumber(), ScanSourceDatabasePgClass(), smgr_aio_reopen(), smgr_redo(), smgrDoPendingDeletes(), smgrDoPendingSyncs(), XLogPrefetcherNextBlock(), and XLogReadBufferExtended().

◆ smgrpin()

| extern |

Definition at line 296 of file smgr.c.

References dlist_delete(), and fb().

Referenced by RelationGetSmgr().

◆ smgrprefetch()

| extern |

Definition at line 678 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_prefetch, and smgrsw.

Referenced by PrefetchLocalBuffer(), PrefetchSharedBuffer(), and StartReadBuffersImpl().

◆ smgrread()

| inline static |

Definition at line 124 of file smgr.h.

References fb(), and smgrreadv().

Referenced by pg_prewarm(), and RelationCopyStorage().
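Since `smgrread()` is an inline function whose only listed callee is `smgrreadv()`, it reads as a single-block convenience wrapper over the vectored call. The following is a minimal sketch of that wrapper pattern over a hypothetical in-memory relation: the `Mock` types, the tiny block size, and the simplified parameter lists are assumptions, not the PostgreSQL signatures.

```c
#include <assert.h>
#include <string.h>

#define MOCK_BLCKSZ   16   /* tiny block size, for illustration only */
#define MOCK_NBLOCKS  4

typedef unsigned int MockBlockNumber;

/* Hypothetical in-memory "relation": a fixed array of blocks. */
typedef struct MockRelation
{
    char blocks[MOCK_NBLOCKS][MOCK_BLCKSZ];
} MockRelation;

/* Vectored read: fill nblocks buffers from consecutive blocks. */
static void
mock_readv(const MockRelation *rel, MockBlockNumber blocknum,
           void **buffers, MockBlockNumber nblocks)
{
    assert(blocknum + nblocks <= MOCK_NBLOCKS);
    for (MockBlockNumber i = 0; i < nblocks; i++)
        memcpy(buffers[i], rel->blocks[blocknum + i], MOCK_BLCKSZ);
}

/* Single-block wrapper: passes a buffer vector of length one. */
static inline void
mock_read(const MockRelation *rel, MockBlockNumber blocknum, void *buffer)
{
    mock_readv(rel, blocknum, &buffer, 1);
}
```

Keeping the single-block form as a thin inline wrapper means only the vectored path needs a real implementation. `smgrwrite()` and `smgrwritev()` on this page show the same pairing.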

◆ smgrreadv()

| extern |

Definition at line 721 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_readv, and smgrsw.

Referenced by smgrread().

◆ smgrregistersync()

| extern |

Definition at line 940 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_registersync, and smgrsw.

Referenced by smgr_bulk_finish().

◆ smgrrelease()

| extern |

Definition at line 350 of file smgr.c.

References fb(), HOLD_INTERRUPTS, InvalidBlockNumber, MAX_FORKNUM, RESUME_INTERRUPTS, f_smgr::smgr_close, and smgrsw.

Referenced by smgrclose(), smgrreleaseall(), and smgrreleaserellocator().

◆ smgrreleaseall()

Definition at line 412 of file smgr.c.

References fb(), hash_seq_init(), hash_seq_search(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, SMgrRelationHash, and smgrrelease().

Referenced by ProcessBarrierSmgrRelease(), read_rel_block_ll(), and RelationCacheInvalidate().

◆ smgrreleaserellocator()

| extern |

Definition at line 443 of file smgr.c.

References fb(), HASH_FIND, hash_search(), SMgrRelationHash, and smgrrelease().

Referenced by LocalExecuteInvalidationMessage().

◆ smgrstartreadv()

| extern |

Definition at line 753 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_startreadv, and smgrsw.

Referenced by AsyncReadBuffers(), and read_rel_block_ll().

◆ smgrtruncate()

| extern |

Definition at line 875 of file smgr.c.

References CacheInvalidateSmgr(), DropRelationBuffers(), fb(), i, InvalidBlockNumber, f_smgr::smgr_truncate, and smgrsw.

Referenced by pg_truncate_visibility_map(), RelationTruncate(), and smgr_redo().

◆ smgrunpin()

| extern |

Definition at line 311 of file smgr.c.

References Assert, dlist_push_tail(), fb(), and unpinned_relns.

Referenced by RelationCloseSmgr().
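Taken together, the reference lists for `smgropen()`, `smgrpin()`, `smgrunpin()`, and `smgrdestroyall()` point at one pattern: relations sit on the `unpinned_relns` list while nobody has pinned them, and only listed relations are destroyed in bulk. The following is a hedged sketch of that bookkeeping. The `Mock` types, the hand-rolled circular list (standing in for the `dlist` API), and the `pincount` field are all assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Minimal circular doubly linked list; stands in for the dlist API. */
typedef struct MockNode
{
    struct MockNode *prev;
    struct MockNode *next;
} MockNode;

typedef struct MockRelation
{
    MockNode node;       /* first member, so a node pointer casts back */
    int      pincount;   /* assumed counter; 0 means "on the list" */
    int      destroyed;
} MockRelation;

static MockNode mock_unpinned = { &mock_unpinned, &mock_unpinned };

static void
mock_list_push_tail(MockNode *head, MockNode *n)
{
    n->prev = head->prev;
    n->next = head;
    head->prev->next = n;
    head->prev = n;
}

static void
mock_list_delete(MockNode *n)
{
    n->prev->next = n->next;
    n->next->prev = n->prev;
}

/* A freshly opened relation starts unpinned. */
static void
mock_open(MockRelation *rel)
{
    rel->pincount = 0;
    rel->destroyed = 0;
    mock_list_push_tail(&mock_unpinned, &rel->node);
}

/* First pin takes the relation off the unpinned list. */
static void
mock_pin(MockRelation *rel)
{
    if (rel->pincount == 0)
        mock_list_delete(&rel->node);
    rel->pincount++;
}

/* Last unpin puts it back. */
static void
mock_unpin(MockRelation *rel)
{
    assert(rel->pincount > 0);
    rel->pincount--;
    if (rel->pincount == 0)
        mock_list_push_tail(&mock_unpinned, &rel->node);
}

/* Destroy every unpinned relation; returns how many were destroyed. */
static int
mock_destroyall(void)
{
    int       count = 0;
    MockNode *cur = mock_unpinned.next;

    while (cur != &mock_unpinned)
    {
        MockNode     *next = cur->next;   /* saved before unlinking cur */
        MockRelation *rel = (MockRelation *) cur;

        mock_list_delete(cur);
        rel->destroyed = 1;
        count++;
        cur = next;
    }
    return count;
}
```

Saving the next pointer before unlinking is what makes it safe to modify the list during the walk, which is the role `dlist_foreach_modify` plays in the `smgrdestroyall()` references.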

◆ smgrwrite()

| inline static |

Definition at line 131 of file smgr.h.

References fb(), and smgrwritev().

Referenced by FlushBuffer(), FlushLocalBuffer(), modify_rel_block(), and smgr_bulk_flush().

◆ smgrwriteback()

| extern |

Definition at line 805 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_writeback, and smgrsw.

Referenced by IssuePendingWritebacks().

◆ smgrwritev()

| extern |

Definition at line 791 of file smgr.c.

References fb(), HOLD_INTERRUPTS, RESUME_INTERRUPTS, f_smgr::smgr_writev, and smgrsw.

Referenced by smgrwrite().

◆ smgrzeroextend()

| extern |

Definition at line 649 of file smgr.c.

References fb(), HOLD_INTERRUPTS, InvalidBlockNumber, RESUME_INTERRUPTS, f_smgr::smgr_zeroextend, and smgrsw.

Referenced by ExtendBufferedRelLocal(), and ExtendBufferedRelShared().

Variable Documentation

◆ aio_smgr_target_info

| extern |

Definition at line 172 of file smgr.c.