#include "postgres.h"

#include <unistd.h>

#include <fcntl.h>

#include <sys/file.h>

#include "access/clog.h"

#include "access/commit_ts.h"

#include "access/multixact.h"

#include "access/xlog.h"

#include "miscadmin.h"

#include "pgstat.h"

#include "portability/instr_time.h"

#include "postmaster/bgwriter.h"

#include "storage/fd.h"

#include "storage/latch.h"

#include "storage/md.h"

#include "utils/hsearch.h"

#include "utils/memutils.h"

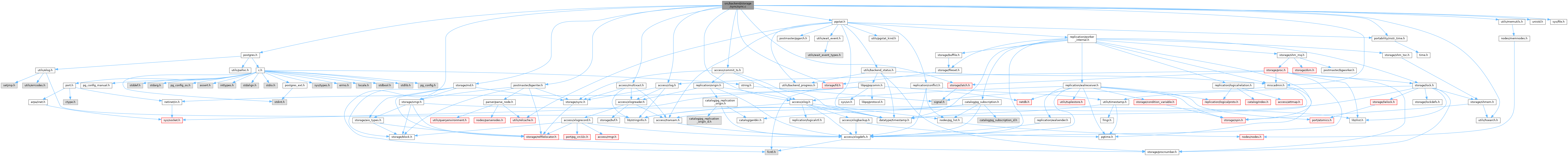


Data Structures

| Kind | Name |
| --- | --- |
| struct | PendingFsyncEntry |
| struct | PendingUnlinkEntry |
| struct | SyncOps |

Macros

| Macro | Value |
| --- | --- |
| FSYNCS_PER_ABSORB | 10 |
| UNLINKS_PER_ABSORB | 10 |

Typedefs

| Type | Name |
| --- | --- |
| typedef uint16 | CycleCtr |
| typedef struct SyncOps | SyncOps |

Functions

| Return type | Function |
| --- | --- |
| void | InitSync (void) |
| void | SyncPreCheckpoint (void) |
| void | SyncPostCheckpoint (void) |
| void | ProcessSyncRequests (void) |
| void | RememberSyncRequest (const FileTag *ftag, SyncRequestType type) |
| bool | RegisterSyncRequest (const FileTag *ftag, SyncRequestType type, bool retryOnError) |

Variables

| Type | Declaration |
| --- | --- |
| static HTAB * | pendingOps = NULL |
| static List * | pendingUnlinks = NIL |
| static MemoryContext | pendingOpsCxt |
| static CycleCtr | sync_cycle_ctr = 0 |
| static CycleCtr | checkpoint_cycle_ctr = 0 |
| static const SyncOps | syncsw [] |

Macro Definition Documentation

◆ FSYNCS_PER_ABSORB

#define FSYNCS_PER_ABSORB 10

◆ UNLINKS_PER_ABSORB

#define UNLINKS_PER_ABSORB 10

Typedef Documentation

◆ CycleCtr

typedef uint16 CycleCtr

◆ SyncOps

typedef struct SyncOps SyncOps

Function Documentation

◆ InitSync()

void InitSync (void)

Definition at line 124 of file sync.c.

References ALLOCSET_DEFAULT_SIZES, AllocSetContextCreate, AmCheckpointerProcess, fb(), HASH_BLOBS, HASH_CONTEXT, hash_create(), HASH_ELEM, IsUnderPostmaster, MemoryContextAllowInCriticalSection(), NIL, pendingOps, pendingOpsCxt, pendingUnlinks, and TopMemoryContext.

Referenced by BaseInit().

◆ ProcessSyncRequests()

void ProcessSyncRequests (void)

Definition at line 286 of file sync.c.

References AbsorbSyncRequests(), Assert, PendingFsyncEntry::canceled, CheckpointStats, CheckpointStatsData::ckpt_agg_sync_time, CheckpointStatsData::ckpt_longest_sync, CheckpointStatsData::ckpt_sync_rels, PendingFsyncEntry::cycle_ctr, data_sync_elevel(), DEBUG1, elog, enableFsync, ereport, errcode_for_file_access(), errmsg(), errmsg_internal(), ERROR, fb(), FILE_POSSIBLY_DELETED, FSYNCS_PER_ABSORB, FileTag::handler, HASH_REMOVE, hash_search(), hash_seq_init(), hash_seq_search(), INSTR_TIME_GET_MICROSEC, INSTR_TIME_SET_CURRENT, INSTR_TIME_SUBTRACT, log_checkpoints, longest(), MAXPGPATH, pendingOps, sync_cycle_ctr, SyncOps::sync_syncfiletag, syncsw, and PendingFsyncEntry::tag.

Referenced by CheckPointGuts().
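The References list above names FSYNCS_PER_ABSORB, AbsorbSyncRequests(), PendingFsyncEntry::canceled, and PendingFsyncEntry::cycle_ctr, which suggests a loop over pending entries that periodically pauses to absorb newly queued requests. The following is a minimal sketch of that pattern only, not the code in sync.c. Every `Demo`/`demo_` name is hypothetical, the absorb step is reduced to a counter, and no file is actually synced.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Same value as FSYNCS_PER_ABSORB on this page; the name is hypothetical. */
#define DEMO_FSYNCS_PER_ABSORB 10

/* Hypothetical, stripped-down pending entry. */
typedef struct DemoFsyncEntry
{
    bool        canceled;           /* request was withdrawn before processing */
    bool        queued_this_cycle;  /* arrived after the current pass started */
} DemoFsyncEntry;

typedef struct DemoSyncStats
{
    int         synced;             /* entries that would have been synced */
    int         absorbs;            /* times the loop paused to absorb requests */
} DemoSyncStats;

DemoSyncStats
demo_process_requests(const DemoFsyncEntry *entries, size_t n)
{
    DemoSyncStats stats = {0, 0};
    int         absorb_counter = DEMO_FSYNCS_PER_ABSORB;

    assert(entries != NULL || n == 0);

    for (size_t i = 0; i < n; i++)
    {
        /* Leave entries from the current cycle for the next pass. */
        if (entries[i].queued_this_cycle)
            continue;

        /* After every DEMO_FSYNCS_PER_ABSORB older entries, absorb new requests. */
        if (--absorb_counter <= 0)
        {
            stats.absorbs++;
            absorb_counter = DEMO_FSYNCS_PER_ABSORB;
        }

        /* Canceled entries are dropped without touching any file. */
        if (!entries[i].canceled)
            stats.synced++;
    }
    return stats;
}
```

Pausing at a fixed interval keeps the request queue from filling up while a long pass is in progress; the interval of 10 is taken from the macro listed on this page.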

◆ RegisterSyncRequest()

bool RegisterSyncRequest (const FileTag *ftag, SyncRequestType type, bool retryOnError)

Definition at line 580 of file sync.c.

References fb(), ForwardSyncRequest(), pendingOps, RememberSyncRequest(), type, WaitLatch(), WL_EXIT_ON_PM_DEATH, and WL_TIMEOUT.

Referenced by ForgetDatabaseSyncRequests(), register_dirty_segment(), register_forget_request(), register_unlink_segment(), SlruInternalDeleteSegment(), and SlruPhysicalWritePage().
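The retryOnError parameter, together with the references to pendingOps, RememberSyncRequest(), ForwardSyncRequest(), WaitLatch(), and WL_TIMEOUT, points to a "handle locally if possible, otherwise forward and optionally retry" control flow. The sketch below illustrates that flow under those assumptions; `DemoSyncEnv`, `demo_forward`, and `demo_register` are hypothetical stand-ins, and the timed wait is replaced by a counter so the example stays self-contained.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical environment standing in for process-local state. */
typedef struct DemoSyncEnv
{
    bool        has_local_table;        /* plays the role of pendingOps != NULL */
    int         local_requests;         /* requests remembered locally */
    int         forward_failures_left;  /* forwarding fails this many times first */
    int         waits;                  /* number of timed waits performed */
} DemoSyncEnv;

/* Hypothetical forwarding call: fails while the simulated queue is full. */
bool
demo_forward(DemoSyncEnv *env)
{
    if (env->forward_failures_left > 0)
    {
        env->forward_failures_left--;
        return false;
    }
    return true;
}

/* Returns true once the request is recorded, false if it was dropped. */
bool
demo_register(DemoSyncEnv *env, bool retry_on_error)
{
    assert(env != NULL);

    /* A process that owns the pending table records the request directly. */
    if (env->has_local_table)
    {
        env->local_requests++;
        return true;
    }

    for (;;)
    {
        if (demo_forward(env))
            return true;

        /* Without retry, the caller must cope with the failure itself. */
        if (!retry_on_error)
            return false;

        /* A real implementation would sleep briefly here before retrying. */
        env->waits++;
    }
}
```

With this shape, a caller that passes false for the retry flag has to check the return value, while a caller that passes true can treat the call as always succeeding.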

◆ RememberSyncRequest()

void RememberSyncRequest (const FileTag *ftag, SyncRequestType type)

Definition at line 487 of file sync.c.

References Assert, PendingFsyncEntry::canceled, PendingUnlinkEntry::canceled, checkpoint_cycle_ctr, PendingFsyncEntry::cycle_ctr, PendingUnlinkEntry::cycle_ctr, fb(), FileTag::handler, HASH_ENTER, HASH_FIND, hash_search(), hash_seq_init(), hash_seq_search(), lappend(), lfirst, MemoryContextSwitchTo(), palloc_object, pendingOps, pendingOpsCxt, pendingUnlinks, sync_cycle_ctr, SyncOps::sync_filetagmatches, SYNC_FILTER_REQUEST, SYNC_FORGET_REQUEST, SYNC_REQUEST, SYNC_UNLINK_REQUEST, syncsw, PendingUnlinkEntry::tag, and type.

Referenced by AbsorbSyncRequests(), and RegisterSyncRequest().
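The References list mentions four request kinds (SYNC_REQUEST, SYNC_FORGET_REQUEST, SYNC_FILTER_REQUEST, SYNC_UNLINK_REQUEST) along with a hash table of pending syncs and a list of pending unlinks. As a rough illustration of dispatching on the request kind, the sketch below uses fixed-size arrays instead of a hash table and a list, integer tags instead of FileTag, and omits the filter case. All `Demo`/`demo_` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define DEMO_MAX 8

typedef enum DemoRequestType
{
    DEMO_SYNC,      /* schedule a sync for the tag */
    DEMO_FORGET,    /* cancel a previously scheduled sync */
    DEMO_UNLINK     /* schedule the tag's file for later removal */
} DemoRequestType;

typedef struct DemoPending
{
    int         tag;
    bool        used;
    bool        canceled;
} DemoPending;

typedef struct DemoQueues
{
    DemoPending syncs[DEMO_MAX];    /* stand-in for the pending-ops table */
    int         unlinks[DEMO_MAX];  /* stand-in for the pending-unlinks list */
    size_t      n_unlinks;
} DemoQueues;

/* Find the entry for 'tag'; optionally claim a free slot if it is missing. */
DemoPending *
demo_find(DemoQueues *q, int tag, bool create)
{
    DemoPending *free_slot = NULL;

    for (size_t i = 0; i < DEMO_MAX; i++)
    {
        if (q->syncs[i].used && q->syncs[i].tag == tag)
            return &q->syncs[i];
        if (!q->syncs[i].used && free_slot == NULL)
            free_slot = &q->syncs[i];
    }
    if (!create || free_slot == NULL)
        return NULL;
    free_slot->used = true;
    free_slot->tag = tag;
    free_slot->canceled = false;
    return free_slot;
}

void
demo_remember(DemoQueues *q, int tag, DemoRequestType type)
{
    DemoPending *entry;

    switch (type)
    {
        case DEMO_FORGET:
            /* Mark rather than delete, so an in-progress scan stays valid. */
            entry = demo_find(q, tag, false);
            if (entry != NULL)
                entry->canceled = true;
            break;
        case DEMO_UNLINK:
            assert(q->n_unlinks < DEMO_MAX);
            q->unlinks[q->n_unlinks++] = tag;
            break;
        case DEMO_SYNC:
            /* A new request revives an entry that was canceled earlier. */
            entry = demo_find(q, tag, true);
            assert(entry != NULL);
            entry->canceled = false;
            break;
    }
}
```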

◆ SyncPostCheckpoint()

void SyncPostCheckpoint (void)

Definition at line 202 of file sync.c.

References AbsorbSyncRequests(), PendingUnlinkEntry::canceled, checkpoint_cycle_ctr, PendingUnlinkEntry::cycle_ctr, ereport, errcode_for_file_access(), errmsg(), fb(), FileTag::handler, i, lfirst, list_cell_number(), list_delete_first_n(), list_free_deep(), list_nth(), MAXPGPATH, NIL, pendingUnlinks, pfree(), SyncOps::sync_unlinkfiletag, syncsw, PendingUnlinkEntry::tag, UNLINKS_PER_ABSORB, and WARNING.

Referenced by CreateCheckPoint().

◆ SyncPreCheckpoint()

void SyncPreCheckpoint (void)

Definition at line 177 of file sync.c.

References AbsorbSyncRequests(), and checkpoint_cycle_ctr.

Referenced by CreateCheckPoint().

Variable Documentation

◆ checkpoint_cycle_ctr

static CycleCtr checkpoint_cycle_ctr = 0

Definition at line 75 of file sync.c.

Referenced by RememberSyncRequest(), SyncPostCheckpoint(), and SyncPreCheckpoint().
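Because CycleCtr is a 16-bit unsigned type on this page, a cycle counter of this kind wraps from 65535 back to 0, so entries stamped with it can only be compared for equality with the current value, not ordered. The sketch below shows that behaviour in isolation; `DemoEntry`, `demo_next_cycle`, and `demo_is_current_cycle` are hypothetical names, and `uint16_t` stands in for PostgreSQL's `uint16`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Mirrors the page's typedef: a 16-bit counter that wraps at 65535. */
typedef uint16_t CycleCtr;

/* Hypothetical entry type, reduced to the one field used here. */
typedef struct DemoEntry
{
    CycleCtr    cycle_ctr;      /* counter value when the request was queued */
} DemoEntry;

/* Advance the counter; the cast makes the wrap 65535 -> 0 explicit. */
CycleCtr
demo_next_cycle(CycleCtr ctr)
{
    return (CycleCtr) (ctr + 1);
}

/* True if the entry was stamped during the cycle identified by 'current'. */
bool
demo_is_current_cycle(const DemoEntry *entry, CycleCtr current)
{
    assert(entry != NULL);
    return entry->cycle_ctr == current;
}
```

An equality test stays correct across the wrap as long as no entry survives for a full 65536 cycles.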

◆ pendingOps

static HTAB * pendingOps = NULL

Definition at line 70 of file sync.c.

Referenced by InitSync(), ProcessSyncRequests(), RegisterSyncRequest(), and RememberSyncRequest().

◆ pendingOpsCxt

static MemoryContext pendingOpsCxt

Definition at line 72 of file sync.c.

Referenced by InitSync(), and RememberSyncRequest().

◆ pendingUnlinks

static List * pendingUnlinks = NIL

Definition at line 71 of file sync.c.

Referenced by InitSync(), RememberSyncRequest(), and SyncPostCheckpoint().

◆ sync_cycle_ctr

static CycleCtr sync_cycle_ctr = 0

Definition at line 74 of file sync.c.

Referenced by ProcessSyncRequests(), and RememberSyncRequest().

◆ syncsw

static const SyncOps syncsw []

Definition at line 95 of file sync.c.

Referenced by ProcessSyncRequests(), RememberSyncRequest(), and SyncPostCheckpoint().
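The page describes syncsw as a constant array of SyncOps, and the function references pair it with FileTag::handler and members such as SyncOps::sync_syncfiletag and SyncOps::sync_unlinkfiletag. That combination reads like a dispatch table of function pointers indexed by a handler id. The sketch below shows the general pattern under that assumption; the handler ids, the `DemoTag` and `DemoOps` types, the callbacks, and the path format are all hypothetical, and the callbacks only format a path instead of touching the file system.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical handler ids; the real enum is not shown on this page. */
typedef enum DemoHandler
{
    DEMO_HANDLER_A = 0,
    DEMO_HANDLER_B,
    DEMO_HANDLER_COUNT
} DemoHandler;

/* Simplified stand-in for FileTag: a handler id plus a segment number. */
typedef struct DemoTag
{
    DemoHandler handler;
    unsigned    segno;
} DemoTag;

/* Simplified stand-in for SyncOps: one callback per operation. */
typedef struct DemoOps
{
    int         (*sync_filetag) (const DemoTag *tag, char *path, size_t len);
    int         (*unlink_filetag) (const DemoTag *tag, char *path, size_t len);
} DemoOps;

int
demo_a_path(const DemoTag *tag, char *path, size_t len)
{
    snprintf(path, len, "a/%u", tag->segno);
    return 0;
}

int
demo_b_path(const DemoTag *tag, char *path, size_t len)
{
    snprintf(path, len, "b/%u", tag->segno);
    return 0;
}

/* One row per handler; a NULL callback means "operation not supported". */
static const DemoOps demo_syncsw[DEMO_HANDLER_COUNT] = {
    [DEMO_HANDLER_A] = {.sync_filetag = demo_a_path, .unlink_filetag = demo_a_path},
    [DEMO_HANDLER_B] = {.sync_filetag = demo_b_path, .unlink_filetag = NULL},
};

/* Dispatch a sync through the table using the tag's handler id. */
int
demo_sync(const DemoTag *tag, char *path, size_t len)
{
    assert((int) tag->handler >= 0 && (int) tag->handler < DEMO_HANDLER_COUNT);
    return demo_syncsw[tag->handler].sync_filetag(tag, path, len);
}

/* Dispatch an unlink; returns -1 when the handler has no unlink callback. */
int
demo_unlink(const DemoTag *tag, char *path, size_t len)
{
    assert((int) tag->handler >= 0 && (int) tag->handler < DEMO_HANDLER_COUNT);
    if (demo_syncsw[tag->handler].unlink_filetag == NULL)
        return -1;
    return demo_syncsw[tag->handler].unlink_filetag(tag, path, len);
}
```

Keeping the per-handler behaviour in a table lets the generic queueing code stay unaware of which subsystem owns a given file.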