#include "postgres.h"
#include "access/heapam_xlog.h"
#include "access/visibilitymap.h"
#include "access/xloginsert.h"
#include "access/xlogutils.h"
#include "miscadmin.h"
#include "port/pg_bitutils.h"
#include "storage/bufmgr.h"
#include "storage/smgr.h"
#include "utils/inval.h"
#include "utils/rel.h"

Macros

| #define | MAPSIZE (BLCKSZ - MAXALIGN(SizeOfPageHeaderData)) |

| #define | HEAPBLOCKS_PER_BYTE (BITS_PER_BYTE / BITS_PER_HEAPBLOCK) |

| #define | HEAPBLOCKS_PER_PAGE (MAPSIZE * HEAPBLOCKS_PER_BYTE) |

| #define | HEAPBLK_TO_MAPBLOCK(x) ((x) / HEAPBLOCKS_PER_PAGE) |

| #define | HEAPBLK_TO_MAPBYTE(x) (((x) % HEAPBLOCKS_PER_PAGE) / HEAPBLOCKS_PER_BYTE) |

| #define | HEAPBLK_TO_OFFSET(x) (((x) % HEAPBLOCKS_PER_BYTE) * BITS_PER_HEAPBLOCK) |

| #define | VISIBLE_MASK8 (0x55) /* The lower bit of each bit pair */ |

| #define | FROZEN_MASK8 (0xaa) /* The upper bit of each bit pair */ |
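The `HEAPBLK_TO_*` macros above turn a heap block number into a map page, a byte within that page, and a bit offset within that byte. The following is a minimal sketch of that arithmetic outside PostgreSQL. It assumes an 8192-byte `BLCKSZ`, a 24-byte aligned page header (`PAGE_HEADER_SIZE` is a stand-in for `MAXALIGN(SizeOfPageHeaderData)`), and `BITS_PER_HEAPBLOCK` equal to 2; `VmAddress` and `vm_address` are hypothetical names used only for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed values for a default build; the real ones come from PostgreSQL headers. */
#define BLCKSZ 8192
#define PAGE_HEADER_SIZE 24     /* stand-in for MAXALIGN(SizeOfPageHeaderData) */
#define BITS_PER_BYTE 8
#define BITS_PER_HEAPBLOCK 2    /* assumed: one all-visible bit, one all-frozen bit */

/* Same formulas as the macros listed above. */
#define MAPSIZE (BLCKSZ - PAGE_HEADER_SIZE)
#define HEAPBLOCKS_PER_BYTE (BITS_PER_BYTE / BITS_PER_HEAPBLOCK)
#define HEAPBLOCKS_PER_PAGE (MAPSIZE * HEAPBLOCKS_PER_BYTE)
#define HEAPBLK_TO_MAPBLOCK(x) ((x) / HEAPBLOCKS_PER_PAGE)
#define HEAPBLK_TO_MAPBYTE(x) (((x) % HEAPBLOCKS_PER_PAGE) / HEAPBLOCKS_PER_BYTE)
#define HEAPBLK_TO_OFFSET(x) (((x) % HEAPBLOCKS_PER_BYTE) * BITS_PER_HEAPBLOCK)

/* Hypothetical helper type: where a heap block's bit pair lives in the map. */
typedef struct VmAddress
{
    uint32_t map_block;   /* which map page */
    uint32_t map_byte;    /* which byte of that page's contents */
    uint8_t  bit_offset;  /* bit position of the pair inside the byte */
} VmAddress;

VmAddress vm_address(uint32_t heap_blk)
{
    VmAddress addr;

    addr.map_block = HEAPBLK_TO_MAPBLOCK(heap_blk);
    addr.map_byte = HEAPBLK_TO_MAPBYTE(heap_blk);
    addr.bit_offset = (uint8_t) HEAPBLK_TO_OFFSET(heap_blk);

    /* The byte index always falls inside the page's map area. */
    assert(addr.map_byte < MAPSIZE);
    return addr;
}
```

Under these assumptions one map page covers 32672 heap blocks, so heap block 32671 is the last one on map page 0 and heap block 32672 is the first one on map page 1.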

Functions

| static Buffer | vm_readbuf (Relation rel, BlockNumber blkno, bool extend) |

| static Buffer | vm_extend (Relation rel, BlockNumber vm_nblocks) |

| bool | visibilitymap_clear (Relation rel, BlockNumber heapBlk, Buffer vmbuf, uint8 flags) |

| void | visibilitymap_pin (Relation rel, BlockNumber heapBlk, Buffer *vmbuf) |

| bool | visibilitymap_pin_ok (BlockNumber heapBlk, Buffer vmbuf) |

| void | visibilitymap_set (Relation rel, BlockNumber heapBlk, Buffer heapBuf, XLogRecPtr recptr, Buffer vmBuf, TransactionId cutoff_xid, uint8 flags) |

| void | visibilitymap_set_vmbits (BlockNumber heapBlk, Buffer vmBuf, uint8 flags, const RelFileLocator rlocator) |

| uint8 | visibilitymap_get_status (Relation rel, BlockNumber heapBlk, Buffer *vmbuf) |

| void | visibilitymap_count (Relation rel, BlockNumber *all_visible, BlockNumber *all_frozen) |

| BlockNumber | visibilitymap_prepare_truncate (Relation rel, BlockNumber nheapblocks) |

Macro Definition Documentation

◆ FROZEN_MASK8

| #define FROZEN_MASK8 (0xaa) /* The upper bit of each bit pair */ |

Definition at line 124 of file visibilitymap.c.

◆ HEAPBLK_TO_MAPBLOCK

| #define HEAPBLK_TO_MAPBLOCK(x) ((x) / HEAPBLOCKS_PER_PAGE) |

Definition at line 118 of file visibilitymap.c.

◆ HEAPBLK_TO_MAPBYTE

| #define HEAPBLK_TO_MAPBYTE(x) (((x) % HEAPBLOCKS_PER_PAGE) / HEAPBLOCKS_PER_BYTE) |

Definition at line 119 of file visibilitymap.c.

◆ HEAPBLK_TO_OFFSET

| #define HEAPBLK_TO_OFFSET(x) (((x) % HEAPBLOCKS_PER_BYTE) * BITS_PER_HEAPBLOCK) |

Definition at line 120 of file visibilitymap.c.

◆ HEAPBLOCKS_PER_BYTE

| #define HEAPBLOCKS_PER_BYTE (BITS_PER_BYTE / BITS_PER_HEAPBLOCK) |

Definition at line 112 of file visibilitymap.c.

◆ HEAPBLOCKS_PER_PAGE

| #define HEAPBLOCKS_PER_PAGE (MAPSIZE * HEAPBLOCKS_PER_BYTE) |

Definition at line 115 of file visibilitymap.c.

◆ MAPSIZE

| #define MAPSIZE (BLCKSZ - MAXALIGN(SizeOfPageHeaderData)) |

Definition at line 109 of file visibilitymap.c.

◆ VISIBLE_MASK8

| #define VISIBLE_MASK8 (0x55) /* The lower bit of each bit pair */ |

Definition at line 123 of file visibilitymap.c.

Function Documentation

◆ visibilitymap_clear()

| bool visibilitymap_clear (Relation rel, BlockNumber heapBlk, Buffer vmbuf, uint8 flags) |

Definition at line 139 of file visibilitymap.c.

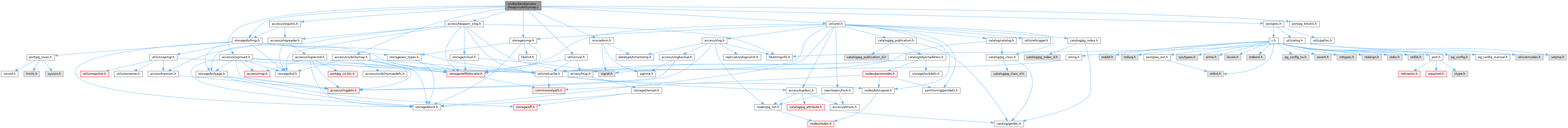

References Assert, BUFFER_LOCK_EXCLUSIVE, BUFFER_LOCK_UNLOCK, BufferGetBlockNumber(), BufferGetPage(), BufferIsValid(), DEBUG1, elog, ERROR, fb(), HEAPBLK_TO_MAPBLOCK, HEAPBLK_TO_MAPBYTE, HEAPBLK_TO_OFFSET, LockBuffer(), MarkBufferDirty(), PageGetContents(), RelationGetRelationName, VISIBILITYMAP_ALL_VISIBLE, and VISIBILITYMAP_VALID_BITS.

Referenced by heap_delete(), heap_force_common(), heap_insert(), heap_lock_tuple(), heap_lock_updated_tuple_rec(), heap_multi_insert(), heap_update(), heap_xlog_delete(), heap_xlog_insert(), heap_xlog_lock(), heap_xlog_lock_updated(), heap_xlog_multi_insert(), heap_xlog_update(), and identify_and_fix_vm_corruption().
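The reference list above suggests that `visibilitymap_clear()` locates the heap block's bit pair with the `HEAPBLK_TO_*` macros and clears the requested flag bits. A minimal sketch of that bit manipulation on a plain byte array follows. It assumes two bits per heap block and flag values `0x01` (all-visible) and `0x02` (all-frozen); `sketch_vm_clear` and the `SKETCH_*` constants are hypothetical names, and the buffer locking and dirty-marking implied by `LockBuffer()` and `MarkBufferDirty()` are left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumed layout: 2 bits per heap block, 4 heap blocks per map byte. */
#define SKETCH_BITS_PER_HEAPBLOCK 2
#define SKETCH_HEAPBLOCKS_PER_BYTE 4

/* Assumed flag values for the two status bits. */
#define SKETCH_ALL_VISIBLE 0x01
#define SKETCH_ALL_FROZEN  0x02
#define SKETCH_VALID_BITS  0x03

/*
 * Clear the given flag bits for one slot of a single map page.
 * Returns true if any of the requested bits was set before the call.
 */
bool sketch_vm_clear(uint8_t *map, uint32_t slot, uint8_t flags)
{
    uint32_t map_byte = slot / SKETCH_HEAPBLOCKS_PER_BYTE;
    unsigned map_offset = (slot % SKETCH_HEAPBLOCKS_PER_BYTE) * SKETCH_BITS_PER_HEAPBLOCK;
    uint8_t  mask = (uint8_t) (flags << map_offset);
    bool     cleared = false;

    /* Only the two status bits are meaningful. */
    assert((flags & ~SKETCH_VALID_BITS) == 0);

    if (map[map_byte] & mask)
    {
        map[map_byte] &= (uint8_t) ~mask;
        cleared = true;
    }
    return cleared;
}
```

Returning whether anything changed lets a caller skip follow-up work when the bits were already clear.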

◆ visibilitymap_count()

| void visibilitymap_count (Relation rel, BlockNumber *all_visible, BlockNumber *all_frozen) |

Definition at line 456 of file visibilitymap.c.

References Assert, BufferGetPage(), BufferIsValid(), fb(), FROZEN_MASK8, MAPSIZE, PageGetContents(), pg_popcount_masked(), ReleaseBuffer(), VISIBLE_MASK8, and vm_readbuf().

Referenced by do_analyze_rel(), heap_vacuum_eager_scan_setup(), heap_vacuum_rel(), index_update_stats(), and pg_visibility_map_summary().
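The references to `VISIBLE_MASK8`, `FROZEN_MASK8`, and `pg_popcount_masked()` indicate that the count is a masked population count over the map bytes. The sketch below shows the idea on a plain byte array. `sketch_vm_count` and `popcount8` are hypothetical names, and the simple bit loop stands in for PostgreSQL's optimized popcount routines.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define VISIBLE_MASK8 (0x55)    /* The lower bit of each bit pair */
#define FROZEN_MASK8  (0xaa)    /* The upper bit of each bit pair */

/* Count the set bits in one byte (portable stand-in for a popcount intrinsic). */
static unsigned popcount8(uint8_t v)
{
    unsigned n = 0;

    while (v)
    {
        v &= (uint8_t) (v - 1);  /* drop the lowest set bit */
        n++;
    }
    return n;
}

/*
 * Count all-visible and all-frozen bits in `len` bytes of map data.
 * all_frozen may be NULL if the caller does not need that count.
 */
void sketch_vm_count(const uint8_t *map, size_t len,
                     uint64_t *all_visible, uint64_t *all_frozen)
{
    uint64_t nvisible = 0;
    uint64_t nfrozen = 0;

    assert(all_visible != NULL);

    for (size_t i = 0; i < len; i++)
    {
        nvisible += popcount8(map[i] & VISIBLE_MASK8);
        nfrozen += popcount8(map[i] & FROZEN_MASK8);
    }

    *all_visible = nvisible;
    if (all_frozen != NULL)
        *all_frozen = nfrozen;
}
```

Because each heap block owns exactly one bit under each mask, the two totals are directly the numbers of all-visible and all-frozen heap blocks covered by the scanned bytes.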

◆ visibilitymap_get_status()

| uint8 visibilitymap_get_status (Relation rel, BlockNumber heapBlk, Buffer *vmbuf) |

Definition at line 408 of file visibilitymap.c.

References BufferGetBlockNumber(), BufferGetPage(), BufferIsValid(), DEBUG1, elog, fb(), HEAPBLK_TO_MAPBLOCK, HEAPBLK_TO_MAPBYTE, HEAPBLK_TO_OFFSET, InvalidBuffer, PageGetContents(), RelationGetRelationName, ReleaseBuffer(), VISIBILITYMAP_VALID_BITS, and vm_readbuf().

Referenced by collect_visibility_data(), find_next_unskippable_block(), heapcheck_read_stream_next_unskippable(), identify_and_fix_vm_corruption(), lazy_scan_prune(), pg_visibility(), and pg_visibility_map().
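Reading a status comes down to shifting the heap block's bit pair to the low end of the byte and masking it, which is what the `HEAPBLK_TO_MAPBYTE`, `HEAPBLK_TO_OFFSET`, and `VISIBILITYMAP_VALID_BITS` references point to. A minimal sketch, assuming two bits per heap block and a valid-bits mask of `0x03`; `sketch_vm_get_status` is a hypothetical name, and the buffer pinning done through `vm_readbuf()` is omitted.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed layout: 2 bits per heap block, 4 heap blocks per map byte. */
#define SKETCH_BITS_PER_HEAPBLOCK 2
#define SKETCH_HEAPBLOCKS_PER_BYTE 4
#define SKETCH_VALID_BITS 0x03

/* Return the 2-bit status of one slot within a single map page. */
uint8_t sketch_vm_get_status(const uint8_t *map, uint32_t slot)
{
    uint32_t map_byte = slot / SKETCH_HEAPBLOCKS_PER_BYTE;
    unsigned map_offset = (slot % SKETCH_HEAPBLOCKS_PER_BYTE) * SKETCH_BITS_PER_HEAPBLOCK;

    assert(map != NULL);

    /* Shift the pair down to bits 0..1, then drop the neighbours' bits. */
    return (uint8_t) ((map[map_byte] >> map_offset) & SKETCH_VALID_BITS);
}
```

For example, the byte `0xE4` (binary `11 10 01 00`) holds the statuses 0, 1, 2, and 3 for slots 0 through 3.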

◆ visibilitymap_pin()

| void visibilitymap_pin (Relation rel, BlockNumber heapBlk, Buffer *vmbuf) |

Definition at line 192 of file visibilitymap.c.

References BufferGetBlockNumber(), BufferIsValid(), fb(), HEAPBLK_TO_MAPBLOCK, ReleaseBuffer(), and vm_readbuf().

Referenced by GetVisibilityMapPins(), heap_delete(), heap_force_common(), heap_lock_tuple(), heap_lock_updated_tuple_rec(), heap_update(), heap_xlog_delete(), heap_xlog_insert(), heap_xlog_lock(), heap_xlog_lock_updated(), heap_xlog_multi_insert(), heap_xlog_update(), lazy_scan_heap(), lazy_vacuum_heap_rel(), and RelationGetBufferForTuple().

◆ visibilitymap_pin_ok()

| bool visibilitymap_pin_ok (BlockNumber heapBlk, Buffer vmbuf) |

Definition at line 216 of file visibilitymap.c.

References BufferGetBlockNumber(), BufferIsValid(), fb(), and HEAPBLK_TO_MAPBLOCK.

Referenced by GetVisibilityMapPins(), and RelationGetBufferForTuple().
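Judging by its references (`BufferIsValid()`, `BufferGetBlockNumber()`, and `HEAPBLK_TO_MAPBLOCK`), this check asks whether an already pinned map buffer is the page that covers the given heap block. The sketch below models that test without the buffer manager. `SketchPin` is a hypothetical stand-in for a `Buffer`, and the value 32672 for heap blocks per map page assumes an 8192-byte block, a 24-byte page header, and two bits per heap block.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumed: (8192 - 24) map bytes per page, 4 heap blocks per byte. */
#define SKETCH_HEAPBLOCKS_PER_PAGE 32672

/* Hypothetical stand-in for a pinned buffer: validity plus its block number. */
typedef struct SketchPin
{
    bool     valid;      /* models BufferIsValid() */
    uint32_t map_block;  /* models BufferGetBlockNumber() */
} SketchPin;

/* True if the pin already covers the map page for heap_blk. */
bool sketch_pin_ok(uint32_t heap_blk, SketchPin pin)
{
    uint32_t wanted = heap_blk / SKETCH_HEAPBLOCKS_PER_PAGE;

    assert(SKETCH_HEAPBLOCKS_PER_PAGE > 0);
    return pin.valid && pin.map_block == wanted;
}
```

A caller that works through heap blocks in order can keep reusing one pin until the heap block number crosses into the next map page.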

◆ visibilitymap_prepare_truncate()

| BlockNumber visibilitymap_prepare_truncate (Relation rel, BlockNumber nheapblocks) |

Definition at line 510 of file visibilitymap.c.

References BUFFER_LOCK_EXCLUSIVE, BufferGetPage(), BufferIsValid(), DEBUG1, elog, END_CRIT_SECTION, fb(), HEAPBLK_TO_MAPBLOCK, HEAPBLK_TO_MAPBYTE, HEAPBLK_TO_OFFSET, InRecovery, InvalidBlockNumber, LockBuffer(), log_newpage_buffer(), MAPSIZE, MarkBufferDirty(), MemSet, PageGetContents(), RelationGetRelationName, RelationGetSmgr(), RelationNeedsWAL, smgrexists(), smgrnblocks(), START_CRIT_SECTION, UnlockReleaseBuffer(), VISIBILITYMAP_FORKNUM, vm_readbuf(), and XLogHintBitIsNeeded.

Referenced by pg_truncate_visibility_map(), RelationTruncate(), and smgr_redo().
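The references to `MemSet`, `MAPSIZE`, and the `HEAPBLK_TO_*` macros suggest that truncation clears every bit at or beyond the truncation point on the last remaining map page. The sketch below shows one way to do that on a plain byte array: zero the trailing bytes, then mask off the high bits of the partially used byte. It is an illustration under assumptions, not the function's actual body; `sketch_vm_truncate_page` is a hypothetical name, and WAL logging, locking, and the computation of the new map length are omitted.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Assumed layout: 2 bits per heap block, 4 heap blocks per map byte. */
#define SKETCH_BITS_PER_HEAPBLOCK 2
#define SKETCH_HEAPBLOCKS_PER_BYTE 4

/*
 * Clear the bits of every slot >= first_removed on one map page.
 * map_size is the number of map bytes on the page.
 */
void sketch_vm_truncate_page(uint8_t *map, size_t map_size, uint32_t first_removed)
{
    size_t   trunc_byte = first_removed / SKETCH_HEAPBLOCKS_PER_BYTE;
    unsigned trunc_offset = (first_removed % SKETCH_HEAPBLOCKS_PER_BYTE) * SKETCH_BITS_PER_HEAPBLOCK;

    assert(trunc_byte < map_size);

    /* Zero every byte after the one containing the truncation point. */
    memset(&map[trunc_byte + 1], 0, map_size - (trunc_byte + 1));

    /* Keep only the bits below the truncation point in the partial byte. */
    map[trunc_byte] &= (uint8_t) ((1u << trunc_offset) - 1);
}
```

When the truncation point falls on a byte boundary the mask is zero, so the whole byte is cleared along with everything after it.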

◆ visibilitymap_set()

| void visibilitymap_set (Relation rel, BlockNumber heapBlk, Buffer heapBuf, XLogRecPtr recptr, Buffer vmBuf, TransactionId cutoff_xid, uint8 flags) |

Definition at line 245 of file visibilitymap.c.

References Assert, BUFFER_LOCK_EXCLUSIVE, BUFFER_LOCK_UNLOCK, BufferGetBlockNumber(), BufferGetPage(), BufferIsLockedByMeInMode(), BufferIsValid(), DEBUG1, elog, END_CRIT_SECTION, ERROR, fb(), HEAPBLK_TO_MAPBLOCK, HEAPBLK_TO_MAPBYTE, HEAPBLK_TO_OFFSET, InRecovery, LockBuffer(), log_heap_visible(), MarkBufferDirty(), PageGetContents(), PageIsAllVisible(), PageSetLSN(), RelationGetRelationName, RelationNeedsWAL, START_CRIT_SECTION, VISIBILITYMAP_ALL_FROZEN, VISIBILITYMAP_VALID_BITS, XLogHintBitIsNeeded, and XLogRecPtrIsValid.

Referenced by heap_xlog_visible(), lazy_scan_new_or_empty(), and lazy_scan_prune().

◆ visibilitymap_set_vmbits()

| void visibilitymap_set_vmbits (BlockNumber heapBlk, Buffer vmBuf, uint8 flags, const RelFileLocator rlocator) |

Definition at line 344 of file visibilitymap.c.

References Assert, BUFFER_LOCK_EXCLUSIVE, BufferGetBlockNumber(), BufferGetPage(), BufferIsLockedByMeInMode(), BufferIsValid(), CritSectionCount, DEBUG1, elog, ERROR, fb(), HEAPBLK_TO_MAPBLOCK, HEAPBLK_TO_MAPBYTE, HEAPBLK_TO_OFFSET, InRecovery, MAIN_FORKNUM, MarkBufferDirty(), MyProcNumber, PageGetContents(), relpathbackend, str, VISIBILITYMAP_ALL_FROZEN, and VISIBILITYMAP_VALID_BITS.

Referenced by heap_multi_insert(), heap_xlog_multi_insert(), heap_xlog_prune_freeze(), and lazy_vacuum_heap_page().

◆ vm_extend()

| static Buffer vm_extend (Relation rel, BlockNumber vm_nblocks) |

Definition at line 684 of file visibilitymap.c.

References BMR_REL, buf, CacheInvalidateSmgr(), EB_CLEAR_SIZE_CACHE, EB_CREATE_FORK_IF_NEEDED, ExtendBufferedRelTo(), fb(), RBM_ZERO_ON_ERROR, RelationGetSmgr(), and VISIBILITYMAP_FORKNUM.

Referenced by vm_readbuf().

◆ vm_readbuf()

| static Buffer vm_readbuf (Relation rel, BlockNumber blkno, bool extend) |

Definition at line 610 of file visibilitymap.c.

References buf, BUFFER_LOCK_EXCLUSIVE, BUFFER_LOCK_UNLOCK, BufferGetPage(), fb(), InvalidBlockNumber, InvalidBuffer, LockBuffer(), PageInit(), PageIsNew(), RBM_ZERO_ON_ERROR, ReadBufferExtended(), RelationGetSmgr(), smgrexists(), smgrnblocks(), VISIBILITYMAP_FORKNUM, and vm_extend().

Referenced by visibilitymap_count(), visibilitymap_get_status(), visibilitymap_pin(), and visibilitymap_prepare_truncate().