#include "postgres.h"
#include "access/genam.h"
#include "access/gist_private.h"
#include "access/transam.h"
#include "commands/vacuum.h"
#include "lib/integerset.h"
#include "miscadmin.h"
#include "storage/indexfsm.h"
#include "storage/lmgr.h"
#include "storage/read_stream.h"
#include "utils/memutils.h"

Go to the source code of this file.

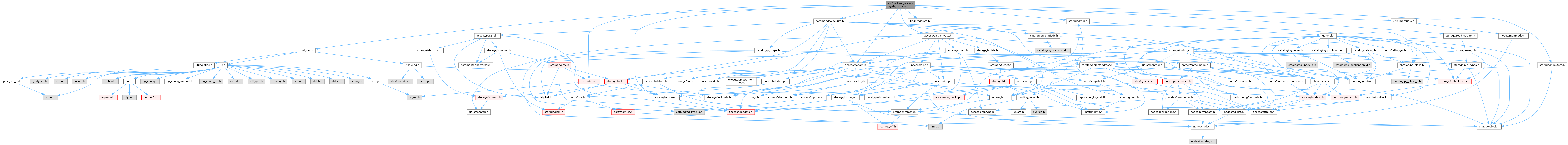

Data Structures

| Type | Name |
|---|---|
| struct | GistVacState |

Functions

| Return type | Function |
|---|---|
| static void | gistvacuumscan (IndexVacuumInfo *info, IndexBulkDeleteResult *stats, IndexBulkDeleteCallback callback, void *callback_state) |
| static void | gistvacuumpage (GistVacState *vstate, Buffer buffer) |
| static void | gistvacuum_delete_empty_pages (IndexVacuumInfo *info, GistVacState *vstate) |
| static bool | gistdeletepage (IndexVacuumInfo *info, IndexBulkDeleteResult *stats, Buffer parentBuffer, OffsetNumber downlink, Buffer leafBuffer) |
| IndexBulkDeleteResult * | gistbulkdelete (IndexVacuumInfo *info, IndexBulkDeleteResult *stats, IndexBulkDeleteCallback callback, void *callback_state) |
| IndexBulkDeleteResult * | gistvacuumcleanup (IndexVacuumInfo *info, IndexBulkDeleteResult *stats) |

Function Documentation

◆ gistbulkdelete()

IndexBulkDeleteResult * gistbulkdelete (IndexVacuumInfo * info, IndexBulkDeleteResult * stats, IndexBulkDeleteCallback callback, void * callback_state)

Definition at line 59 of file gistvacuum.c.

References callback(), fb(), gistvacuumscan(), and palloc0_object.

Referenced by gisthandler().
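The References list for gistbulkdelete() is short: it uses palloc0_object and hands the work to gistvacuumscan(). A minimal standalone sketch of that allocate-on-first-call pattern follows. It assumes a trimmed, hypothetical `BulkDeleteResult` struct, uses `calloc` in place of palloc0_object, and calls a hypothetical `scan_stub()` in place of the real scan; it is an illustration, not PostgreSQL code.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical, trimmed stand-in for IndexBulkDeleteResult. */
typedef struct BulkDeleteResult
{
    double num_index_tuples;
    double tuples_removed;
} BulkDeleteResult;

/* Hypothetical stand-in for gistvacuumscan(): just records one pass. */
static void
scan_stub(BulkDeleteResult *stats)
{
    stats->tuples_removed += 1;
}

/*
 * Sketch of the bulk-delete entry point: allocate zeroed stats on the
 * first call (calloc plays the role of palloc0_object), otherwise reuse
 * the caller's struct, then delegate all work to the scan.
 */
static BulkDeleteResult *
bulkdelete_sketch(BulkDeleteResult *stats)
{
    if (stats == NULL)
    {
        stats = calloc(1, sizeof(BulkDeleteResult));
        assert(stats != NULL);
    }
    scan_stub(stats);
    return stats;
}
```

Reusing the caller's struct lets counts accumulate when VACUUM invokes bulk deletion more than once in a single run, which happens when its store of dead TIDs fills up.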

◆ gistdeletepage()

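static bool gistdeletepage (IndexVacuumInfo * info, IndexBulkDeleteResult * stats, Buffer parentBuffer, OffsetNumber downlink, Buffer leafBuffer)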

Definition at line 631 of file gistvacuum.c.

References Assert, BufferGetBlockNumber(), BufferGetPage(), END_CRIT_SECTION, fb(), FirstOffsetNumber, GistFollowRight, gistGetFakeLSN(), GistPageIsDeleted, GistPageIsLeaf, GistPageSetDeleted(), gistXLogPageDelete(), IndexVacuumInfo::index, InvalidOffsetNumber, ItemPointerGetBlockNumber(), MarkBufferDirty(), PageGetItem(), PageGetItemId(), PageGetMaxOffsetNumber(), PageIndexTupleDelete(), PageIsNew(), IndexBulkDeleteResult::pages_deleted, IndexBulkDeleteResult::pages_newly_deleted, PageSetLSN(), ReadNextFullTransactionId(), RelationNeedsWAL, and START_CRIT_SECTION.

Referenced by gistvacuum_delete_empty_pages().

◆ gistvacuum_delete_empty_pages()

static void gistvacuum_delete_empty_pages (IndexVacuumInfo * info, GistVacState * vstate)

Definition at line 504 of file gistvacuum.c.

References Assert, BufferGetPage(), fb(), FirstOffsetNumber, GIST_EXCLUSIVE, GIST_SHARE, GIST_UNLOCK, gistcheckpage(), gistdeletepage(), GistPageIsDeleted, GistPageIsLeaf, i, IndexVacuumInfo::index, intset_begin_iterate(), intset_is_member(), intset_iterate_next(), intset_num_entries(), ItemPointerGetBlockNumber(), LockBuffer(), MAIN_FORKNUM, MaxOffsetNumber, OffsetNumberNext, PageGetItem(), PageGetItemId(), PageGetMaxOffsetNumber(), PageIsNew(), RBM_NORMAL, ReadBufferExtended(), ReleaseBuffer(), IndexVacuumInfo::strategy, and UnlockReleaseBuffer().

Referenced by gistvacuumscan().
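gistvacuum_delete_empty_pages() uses integer-set membership tests (intset_is_member()) to find downlinks on an internal page that lead to empty leaves, and calls gistdeletepage() to remove them. The sketch below illustrates that idea under two labeled assumptions: a hypothetical `set_contains()` linear search stands in for the integer set, and, as a rule assumed for this sketch, a parent always keeps at least one downlink. Because removing an item compacts the page, offsets collected before deletion are shifted down by the number of items already removed.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for intset_is_member(): linear search. */
static bool
set_contains(const uint32_t *set, size_t n, uint32_t blkno)
{
    for (size_t i = 0; i < n; i++)
        if (set[i] == blkno)
            return true;
    return false;
}

/*
 * Remove downlinks that point to empty leaves from 'children' (length
 * *nchildren), never removing the last remaining downlink.
 * Returns the number of downlinks removed.
 */
static size_t
prune_downlinks(uint32_t *children, size_t *nchildren,
                const uint32_t *empty, size_t nempty)
{
    size_t todelete[64];
    size_t ntodelete = 0;
    size_t n = *nchildren;

    assert(n <= 64);
    /* stop collecting once only one downlink would remain */
    for (size_t off = 0; off < n && ntodelete + 1 < n; off++)
        if (set_contains(empty, nempty, children[off]))
            todelete[ntodelete++] = off;

    size_t deleted = 0;
    for (size_t i = 0; i < ntodelete; i++)
    {
        /* each earlier removal shifted later items one slot left */
        size_t pos = todelete[i] - deleted;

        for (size_t j = pos; j + 1 < n - deleted; j++)
            children[j] = children[j + 1];
        deleted++;
    }
    *nchildren = n - deleted;
    return deleted;
}
```

In the sketch every removal succeeds. gistdeletepage() returns bool, so in the real code a removal can be refused; counting only successful removals, as `deleted` does here, keeps the later offsets correct in that case too.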

◆ gistvacuumcleanup()

IndexBulkDeleteResult * gistvacuumcleanup (IndexVacuumInfo * info, IndexBulkDeleteResult * stats)

Definition at line 75 of file gistvacuum.c.

References IndexVacuumInfo::analyze_only, IndexVacuumInfo::estimated_count, fb(), gistvacuumscan(), IndexVacuumInfo::num_heap_tuples, IndexBulkDeleteResult::num_index_tuples, and palloc0_object.

Referenced by gisthandler().

◆ gistvacuumpage()

static void gistvacuumpage (GistVacState * vstate, Buffer buffer)

Definition at line 308 of file gistvacuum.c.

References BufferGetBlockNumber(), BufferGetPage(), callback(), END_CRIT_SECTION, ereport, errdetail(), errhint(), errmsg(), fb(), FirstOffsetNumber, GIST_EXCLUSIVE, GistFollowRight, gistGetFakeLSN(), GistMarkTuplesDeleted, GistPageGetNSN, GistPageGetOpaque, GistPageIsDeleted, GistPageIsLeaf, gistPageRecyclable(), GistTupleIsInvalid, gistXLogUpdate(), IndexVacuumInfo::index, intset_add_member(), InvalidBlockNumber, InvalidBuffer, LockBuffer(), LOG, MAIN_FORKNUM, MarkBufferDirty(), MaxOffsetNumber, OffsetNumberNext, PageGetItem(), PageGetItemId(), PageGetMaxOffsetNumber(), PageIndexMultiDelete(), PageSetLSN(), RBM_NORMAL, ReadBufferExtended(), RecordFreeIndexPage(), RelationGetRelationName, RelationNeedsWAL, GISTPageOpaqueData::rightlink, START_CRIT_SECTION, IndexVacuumInfo::strategy, UnlockReleaseBuffer(), and vacuum_delay_point().

Referenced by gistvacuumscan().

◆ gistvacuumscan()

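static void gistvacuumscan (IndexVacuumInfo * info, IndexBulkDeleteResult * stats, IndexBulkDeleteCallback callback, void * callback_state)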

Definition at line 125 of file gistvacuum.c.

References block_range_read_stream_cb(), buf, BufferIsValid(), callback(), BlockRangeReadStreamPrivate::current_blocknum, CurrentMemoryContext, IndexBulkDeleteResult::estimated_count, ExclusiveLock, fb(), GenerationContextCreate(), GetInsertRecPtr(), GIST_ROOT_BLKNO, gistGetFakeLSN(), gistvacuum_delete_empty_pages(), gistvacuumpage(), IndexVacuumInfo::index, IndexFreeSpaceMapVacuum(), intset_create(), BlockRangeReadStreamPrivate::last_exclusive, LockRelationForExtension(), MAIN_FORKNUM, MemoryContextDelete(), MemoryContextSwitchTo(), IndexBulkDeleteResult::num_index_tuples, IndexBulkDeleteResult::num_pages, IndexBulkDeleteResult::pages_deleted, IndexBulkDeleteResult::pages_free, read_stream_begin_relation(), read_stream_end(), READ_STREAM_FULL, READ_STREAM_MAINTENANCE, read_stream_next_buffer(), read_stream_reset(), READ_STREAM_USE_BATCHING, RELATION_IS_LOCAL, RelationGetNumberOfBlocks, RelationNeedsWAL, IndexVacuumInfo::strategy, UnlockRelationForExtension(), and vacuum_delay_point().

Referenced by gistbulkdelete(), and gistvacuumcleanup().