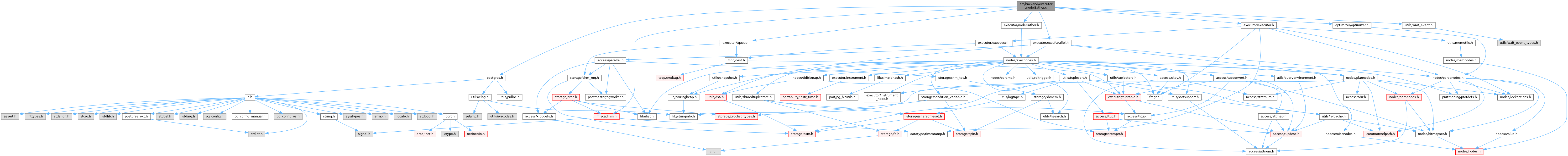

#include "postgres.h"
#include "executor/execParallel.h"
#include "executor/executor.h"
#include "executor/nodeGather.h"
#include "executor/tqueue.h"
#include "miscadmin.h"
#include "optimizer/optimizer.h"
#include "storage/latch.h"
#include "utils/wait_event.h"


Functions

| Return type | Function |
|---|---|
| static TupleTableSlot * | ExecGather(PlanState *pstate) |
| static TupleTableSlot * | gather_getnext(GatherState *gatherstate) |
| static MinimalTuple | gather_readnext(GatherState *gatherstate) |
| static void | ExecShutdownGatherWorkers(GatherState *node) |
| GatherState * | ExecInitGather(Gather *node, EState *estate, int eflags) |
| void | ExecEndGather(GatherState *node) |
| void | ExecShutdownGather(GatherState *node) |
| void | ExecReScanGather(GatherState *node) |

Function Documentation

◆ ExecEndGather()

void ExecEndGather(GatherState *node)

Definition at line 252 of file nodeGather.c.

References ExecEndNode(), ExecShutdownGather(), and outerPlanState.

Referenced by ExecEndNode().

◆ ExecGather()

static TupleTableSlot *ExecGather(PlanState *pstate)

Definition at line 138 of file nodeGather.c.

References castNode, CHECK_FOR_INTERRUPTS, ExprContext::ecxt_outertuple, EState::es_parallel_workers_launched, EState::es_parallel_workers_to_launch, EState::es_use_parallel_mode, ExecInitParallelPlan(), ExecParallelCreateReaders(), ExecParallelReinitialize(), ExecProject(), fb(), gather_getnext(), GatherState::initialized, LaunchParallelWorkers(), GatherState::need_to_scan_locally, GatherState::nextreader, GatherState::nreaders, ParallelContext::nworkers_launched, GatherState::nworkers_launched, ParallelContext::nworkers_to_launch, outerPlanState, palloc(), parallel_leader_participation, ParallelExecutorInfo::pcxt, GatherState::pei, PlanState::plan, GatherState::ps, PlanState::ps_ExprContext, PlanState::ps_ProjInfo, ParallelExecutorInfo::reader, GatherState::reader, ResetExprContext, PlanState::state, TupIsNull, and GatherState::tuples_needed.

Referenced by ExecInitGather().
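ExecGather() sets up parallel execution lazily, on its first call rather than in ExecInitGather(), guarded by GatherState::initialized. Judging from its references (LaunchParallelWorkers(), ParallelContext::nworkers_launched, parallel_leader_participation, GatherState::need_to_scan_locally), it then decides whether the leader should also run the child plan itself. The toy sketch below models only that decision in plain C; ToyGather, toy_gather_init() and the workers_available parameter are hypothetical stand-ins, not PostgreSQL declarations.

```c
#include <stdbool.h>

/*
 * Hypothetical toy model of the first-call setup in ExecGather();
 * not the PostgreSQL structs or API.
 */
typedef struct ToyGather
{
    bool single_copy;           /* child plan must run in exactly one process */
    bool leader_participation;  /* stand-in for parallel_leader_participation */
    int  nworkers_requested;
    int  nworkers_launched;     /* may be fewer than requested, even zero */
    int  nreaders;              /* one tuple-queue reader per launched worker */
    bool need_to_scan_locally;
    bool initialized;
} ToyGather;

/* Runs once; later calls are no-ops, like the !initialized check. */
void toy_gather_init(ToyGather *g, int workers_available)
{
    if (g->initialized)
        return;

    /* Pretend launch: we get at most as many workers as are available. */
    g->nworkers_launched = workers_available < g->nworkers_requested
        ? workers_available : g->nworkers_requested;
    if (g->nworkers_launched < 0)
        g->nworkers_launched = 0;
    g->nreaders = g->nworkers_launched;

    /* Leader runs the plan itself if no worker started, or if allowed to help. */
    g->need_to_scan_locally = (g->nreaders == 0)
        || (!g->single_copy && g->leader_participation);
    g->initialized = true;
}
```

In the single-copy case the leader stays out of the scan unless no worker could be started at all.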

◆ ExecInitGather()

GatherState *ExecInitGather(Gather *node, EState *estate, int eflags)

Definition at line 54 of file nodeGather.c.

References Assert, ExecAssignExprContext(), ExecConditionalAssignProjectionInfo(), ExecGather(), ExecGetResultType(), ExecInitExtraTupleSlot(), ExecInitNode(), ExecInitResultTypeTL(), fb(), innerPlan, makeNode, OUTER_VAR, outerPlan, outerPlanState, parallel_leader_participation, Gather::plan, Plan::qual, Gather::single_copy, and TTSOpsMinimalTuple.

Referenced by ExecInitNode().

◆ ExecReScanGather()

void ExecReScanGather(GatherState *node)

Definition at line 443 of file nodeGather.c.

References bms_add_member(), ExecReScan(), ExecShutdownGatherWorkers(), fb(), GatherState::initialized, outerPlan, outerPlanState, PlanState::plan, and GatherState::ps.

Referenced by ExecReScan().
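Going by its references, ExecReScanGather() does not restart workers itself: it shuts existing workers down (ExecShutdownGatherWorkers()), clears GatherState::initialized so that the next ExecGather() call rebuilds the parallel state, and uses bms_add_member() to mark a parameter as changed in the child before deciding whether to call ExecReScan(). The toy sketch below models that sequence with a 64-bit mask in place of a Bitmapset; ToyGather, ToyChild, toy_rescan_gather() and the rescan_param field are hypothetical stand-ins, not PostgreSQL declarations.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical toy model of ExecReScanGather(); not PostgreSQL code. */
typedef struct ToyChild
{
    uint64_t chg_param;   /* stand-in for the child's chgParam Bitmapset */
    int      rescans;     /* eager rescans triggered by the Gather node */
} ToyChild;

typedef struct ToyGather
{
    bool     initialized;
    bool     workers_running;
    int      rescan_param;   /* -1 means "no rescan parameter" */
    ToyChild child;
} ToyGather;

void toy_rescan_gather(ToyGather *g)
{
    assert(g->rescan_param < 64);   /* toy bitmask holds params 0..63 only */

    g->workers_running = false;     /* like ExecShutdownGatherWorkers() */
    g->initialized = false;         /* shared state is rebuilt on the next fetch */

    /* Like bms_add_member(): tell the child its parameter changed. */
    if (g->rescan_param >= 0)
        g->child.chg_param |= UINT64_C(1) << g->rescan_param;

    /*
     * Like ExecReScan(): rescan the child now only if no parameter changed;
     * otherwise the child is rescanned when it is next executed.
     */
    if (g->child.chg_param == 0)
        g->child.rescans++;
}
```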

◆ ExecShutdownGather()

void ExecShutdownGather(GatherState *node)

Definition at line 419 of file nodeGather.c.

References ExecParallelCleanup(), ExecShutdownGatherWorkers(), fb(), and GatherState::pei.

Referenced by ExecEndGather(), and ExecShutdownNode_walker().

◆ ExecShutdownGatherWorkers()

static void ExecShutdownGatherWorkers(GatherState *node)

Definition at line 401 of file nodeGather.c.

References ExecParallelFinish(), fb(), GatherState::pei, pfree(), and GatherState::reader.

Referenced by ExecReScanGather(), ExecShutdownGather(), and gather_readnext().
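ExecShutdownGatherWorkers() waits for the workers through ExecParallelFinish() and releases the leader's local reader array with pfree(); ExecShutdownGather() calls it and then destroys the parallel state with ExecParallelCleanup(). Since ExecShutdownGather() is referenced from both ExecEndGather() and ExecShutdownNode_walker(), it may run more than once for the same node, so resetting the pointers to NULL keeps repeated calls harmless. The toy sketch below illustrates that pattern under stated assumptions: malloc()/free() stand in for palloc()/pfree() and for the parallel context, and ToyGather, ToyParallelInfo and the toy_* functions are hypothetical.

```c
#include <stdlib.h>

/* Hypothetical toy model: names mimic nodeGather.c but are not its API. */
typedef struct ToyParallelInfo
{
    int finish_calls;          /* counts stand-in ExecParallelFinish() calls */
} ToyParallelInfo;

typedef struct ToyGather
{
    ToyParallelInfo *pei;      /* shared parallel state, may be NULL */
    int            **reader;   /* leader-local array of reader handles */
} ToyGather;

/* Like ExecShutdownGatherWorkers(): finish workers, drop local reader array. */
void toy_shutdown_workers(ToyGather *g)
{
    if (g->pei != NULL)
        g->pei->finish_calls++;    /* stand-in for ExecParallelFinish() */
    free(g->reader);               /* free(NULL) is a no-op */
    g->reader = NULL;              /* makes a repeated call harmless */
}

/* Like ExecShutdownGather(): shut down workers, then destroy parallel state. */
void toy_shutdown_gather(ToyGather *g)
{
    toy_shutdown_workers(g);
    if (g->pei != NULL)
    {
        free(g->pei);              /* stand-in for ExecParallelCleanup() */
        g->pei = NULL;
    }
}
```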

◆ gather_getnext()

static TupleTableSlot *gather_getnext(GatherState *gatherstate)

Definition at line 264 of file nodeGather.c.

References CHECK_FOR_INTERRUPTS, EState::es_query_dsa, ExecClearTuple(), ExecProcNode(), ExecStoreMinimalTuple(), fb(), gather_readnext(), HeapTupleIsValid, outerPlan, outerPlanState, and TupIsNull.

Referenced by ExecGather().
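gather_getnext() draws on the two tuple sources suggested by its references: tuples from workers, obtained through gather_readnext() and stored with ExecStoreMinimalTuple(), and tuples the leader produces itself by calling ExecProcNode() on the outer plan. It keeps looping until both sources are exhausted and then returns a cleared slot (ExecClearTuple()). The toy model below captures only that control flow, assuming integer "tuples" (0 meaning none) and a scripted sequence of worker poll results; ToyGather, toy_poll_workers(), toy_gather_getnext() and the TOY_* constants are hypothetical.

```c
#include <stdbool.h>

/*
 * Hypothetical toy model of gather_getnext(); not PostgreSQL code.
 * Each poll of the workers yields the next entry of `polls`: a tuple (> 0),
 * TOY_NOT_READY (nothing ready yet) or TOY_WORKERS_DONE (all queues done).
 */
enum { TOY_NOT_READY = 0, TOY_WORKERS_DONE = -1 };

typedef struct ToyGather
{
    const int *polls;     /* scripted worker results */
    int        npolls;
    int        ppos;
    const int *local;     /* output of the leader's own copy of the plan */
    int        nlocal;
    int        lpos;
    bool       workers_active;
    bool       need_to_scan_locally;
} ToyGather;

/* Stand-in for gather_readnext(): returns a tuple or 0. */
static int toy_poll_workers(ToyGather *g)
{
    int r = (g->ppos < g->npolls) ? g->polls[g->ppos++] : TOY_WORKERS_DONE;

    if (r == TOY_WORKERS_DONE)
        g->workers_active = false;
    return r > 0 ? r : 0;
}

/* Returns the next tuple, or 0 once both sources are exhausted. */
int toy_gather_getnext(ToyGather *g)
{
    while (g->workers_active || g->need_to_scan_locally)
    {
        if (g->workers_active)
        {
            int tup = toy_poll_workers(g);

            if (tup > 0)
                return tup;   /* prefer a ready worker tuple */
        }
        if (g->need_to_scan_locally)
        {
            if (g->lpos < g->nlocal)          /* like ExecProcNode(outerPlan) */
                return g->local[g->lpos++];
            g->need_to_scan_locally = false;  /* local plan is exhausted */
        }
        /*
         * If nothing was ready and the leader is not scanning, this toy just
         * polls again; the real gather_readnext() sleeps on its latch instead.
         */
    }
    return 0;   /* like returning a cleared slot */
}
```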

◆ gather_readnext()

static MinimalTuple gather_readnext(GatherState *gatherstate)

Definition at line 312 of file nodeGather.c.

References Assert, CHECK_FOR_INTERRUPTS, ExecShutdownGatherWorkers(), fb(), MyLatch, ResetLatch(), TupleQueueReaderNext(), WaitLatch(), WL_EXIT_ON_PM_DEATH, and WL_LATCH_SET.

Referenced by gather_getnext().
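gather_readnext() polls the workers' tuple queues without blocking (TupleQueueReaderNext()). It keeps reading from the current queue while tuples are available, advances round-robin only when that queue has nothing ready, removes finished readers from the working array (calling ExecShutdownGatherWorkers() once none remain), and, after visiting every surviving queue without success, either returns so the caller can run the plan locally or sleeps on its latch (WaitLatch()/ResetLatch()). The toy model below reproduces that loop in plain C over scripted queues; ToyQueue, ToyGather, toy_queue_next(), toy_gather_readnext() and TOY_MAX_READERS are hypothetical, and a counter replaces the latch wait.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Hypothetical toy tuple queue: a scripted sequence of read results. */
typedef struct ToyQueue
{
    const int *script;   /* > 0: tuple, 0: nothing ready yet, -1: finished */
    int        len;
    int        pos;
} ToyQueue;

/* Non-blocking read, loosely like TupleQueueReaderNext(reader, true, &done). */
static int toy_queue_next(ToyQueue *q, bool *done)
{
    int r = (q->pos < q->len) ? q->script[q->pos++] : -1;

    *done = (r < 0);
    return r > 0 ? r : 0;
}

#define TOY_MAX_READERS 8

typedef struct ToyGather
{
    ToyQueue *reader[TOY_MAX_READERS];  /* working array of active readers */
    int       nreaders;
    int       nextreader;
    bool      need_to_scan_locally;
    int       waits;                    /* times the real code would sleep */
} ToyGather;

/* Returns a tuple, or 0 if all readers are done or the leader should scan. */
int toy_gather_readnext(ToyGather *g)
{
    int nvisited = 0;

    for (;;)
    {
        bool done;
        int  tup;

        assert(g->nextreader < g->nreaders);
        tup = toy_queue_next(g->reader[g->nextreader], &done);

        if (done)
        {
            /* Drop the finished reader; real code shuts workers down at 0. */
            if (--g->nreaders == 0)
                return 0;
            memmove(&g->reader[g->nextreader], &g->reader[g->nextreader + 1],
                    sizeof(g->reader[0]) * (size_t) (g->nreaders - g->nextreader));
            if (g->nextreader >= g->nreaders)
                g->nextreader = 0;
            continue;
        }

        if (tup != 0)
            return tup;     /* stay on this reader until it would block */

        /* Nothing ready here: advance round-robin. */
        if (++g->nextreader >= g->nreaders)
            g->nextreader = 0;

        if (++nvisited >= g->nreaders)
        {
            if (g->need_to_scan_locally)
                return 0;   /* let the caller produce a local tuple */
            g->waits++;     /* real code waits in WaitLatch() here */
            nvisited = 0;
        }
    }
}
```

The memmove() keeps the surviving readers contiguous, so nextreader remains a plain index into the working array.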