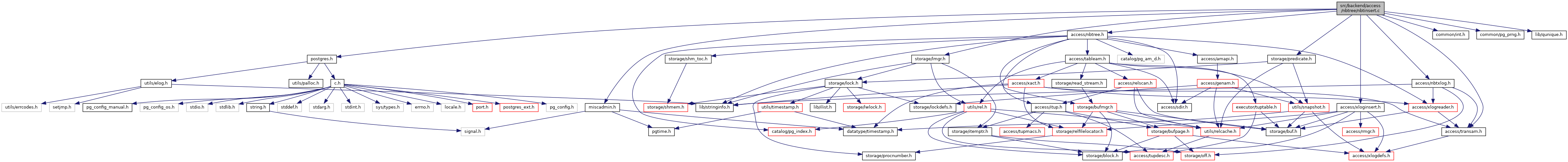

#include "postgres.h"
#include "access/nbtree.h"
#include "access/nbtxlog.h"
#include "access/tableam.h"
#include "access/transam.h"
#include "access/xloginsert.h"
#include "common/int.h"
#include "common/pg_prng.h"
#include "lib/qunique.h"
#include "miscadmin.h"
#include "storage/lmgr.h"
#include "storage/predicate.h"
#include "utils/injection_point.h"


Macros

#define BTREE_FASTPATH_MIN_LEVEL 2

Macro Definition Documentation

◆ BTREE_FASTPATH_MIN_LEVEL

#define BTREE_FASTPATH_MIN_LEVEL 2

Definition at line 32 of file nbtinsert.c.
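The following is a minimal sketch of how a minimum-height threshold like this can gate a "remember the rightmost leaf" insertion fastpath. The macro name and value come from this page. The helper name, its parameters, and the sentinel are hypothetical. The condition itself is an assumption inferred from the symbols that _bt_insertonpg() is listed as referencing (P_ISLEAF, P_RIGHTMOST, P_ISROOT, _bt_getrootheight(), RelationSetTargetBlock). It is not a copy of the PostgreSQL source.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BTREE_FASTPATH_MIN_LEVEL 2

/* Hypothetical stand-in for InvalidBlockNumber. */
#define SKETCH_INVALID_BLOCK UINT32_MAX

/*
 * Hypothetical helper: return the block number to cache as the next
 * insertion target, or SKETCH_INVALID_BLOCK when the fastpath should not
 * be used.  Assumes the fastpath only applies to a rightmost leaf page
 * that is not also the root, in a tree at least BTREE_FASTPATH_MIN_LEVEL
 * levels tall.
 */
static uint32_t
sketch_fastpath_target(bool is_leaf, bool is_rightmost, bool is_root,
                       uint32_t tree_height, uint32_t blkno)
{
    /* The caller must pass a real block number, never the sentinel. */
    assert(blkno != SKETCH_INVALID_BLOCK);

    if (is_leaf && is_rightmost && !is_root &&
        tree_height >= BTREE_FASTPATH_MIN_LEVEL)
        return blkno;

    return SKETCH_INVALID_BLOCK;
}
```

Under these assumptions, small indexes never cache a target block, since a full descent from the root is already cheap for them.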

Function Documentation

◆ _bt_blk_cmp()

Definition at line 3024 of file nbtinsert.c.

References fb(), and pg_cmp_u32().

Referenced by _bt_deadblocks(), and _bt_simpledel_pass().
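As an illustration of the pattern these references suggest (a block-number comparator passed to qsort, followed by duplicate removal), here is a standalone sketch. All `sketch_` names are hypothetical, `uint32_t` stands in for BlockNumber, and the hand-written dedup loop stands in for qunique(). It shows the general technique and is not the code in nbtinsert.c.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/*
 * Three-way comparison of two unsigned 32-bit values.  Assumed to match
 * the intent of pg_cmp_u32(): returns <0, 0, or >0 without the wraparound
 * that a plain "a - b" would produce for unsigned operands.
 */
static int
sketch_cmp_u32(uint32_t a, uint32_t b)
{
    return (a > b) - (a < b);
}

/* qsort-style comparator over block numbers (stand-in for _bt_blk_cmp). */
static int
sketch_blk_cmp(const void *arg1, const void *arg2)
{
    uint32_t b1 = *(const uint32_t *) arg1;
    uint32_t b2 = *(const uint32_t *) arg2;

    return sketch_cmp_u32(b1, b2);
}

/*
 * Sort an array of block numbers and remove duplicates in place.
 * Returns the number of distinct blocks left at the front of the array.
 */
static size_t
sketch_sort_unique_blocks(uint32_t *blocks, size_t n)
{
    size_t i;
    size_t last = 0;

    assert(n == 0 || blocks != NULL);
    if (n <= 1)
        return n;

    qsort(blocks, n, sizeof(uint32_t), sketch_blk_cmp);

    /* After sorting, equal values are adjacent, so one pass suffices. */
    for (i = 1; i < n; i++)
    {
        if (blocks[i] != blocks[last])
            blocks[++last] = blocks[i];
    }
    return last + 1;
}
```

The comparison form `(a > b) - (a < b)` is used because subtracting two unsigned values and casting the result to `int` can report the wrong sign when the operands are far apart.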

◆ _bt_check_unique()

static

Definition at line 410 of file nbtinsert.c.

References _bt_binsrch_insert(), _bt_compare(), _bt_relandgetbuf(), _bt_relbuf(), BTScanInsertData::anynullkeys, Assert, BT_READ, BTP_HAS_GARBAGE, BTPageGetOpaque, BTPageOpaqueData::btpo_flags, BTPageOpaqueData::btpo_next, BTreeTupleGetNPosting(), BTreeTupleGetPostingN(), BTreeTupleIsPivot(), BTreeTupleIsPosting(), BufferGetBlockNumber(), BufferGetPage(), BuildIndexValueDescription(), CheckForSerializableConflictIn(), elog, ereport, errcode(), errdetail(), errhint(), errmsg(), ERROR, errtableconstraint(), fb(), index_deform_tuple(), INDEX_MAX_KEYS, InitDirtySnapshot, InvalidBuffer, InvalidTransactionId, ItemIdIsDead, ItemIdMarkDead, ItemPointerCompare(), MarkBufferDirtyHint(), OffsetNumberNext, P_FIRSTDATAKEY, P_HIKEY, P_IGNORE, P_RIGHTMOST, PageGetItem(), PageGetItemId(), PageGetMaxOffsetNumber(), RelationGetDescr, RelationGetRelationName, BTScanInsertData::scantid, SnapshotSelf, IndexTupleData::t_tid, table_index_fetch_tuple_check(), TransactionIdIsValid, UNIQUE_CHECK_EXISTING, UNIQUE_CHECK_PARTIAL, and values.

Referenced by _bt_doinsert().

◆ _bt_deadblocks()

static

Definition at line 2951 of file nbtinsert.c.

References _bt_blk_cmp(), Assert, BTreeTupleGetNPosting(), BTreeTupleGetPostingN(), BTreeTupleIsPivot(), BTreeTupleIsPosting(), fb(), i, ItemIdIsDead, ItemPointerGetBlockNumber(), j, Max, PageGetItem(), PageGetItemId(), palloc_array, qsort, qunique(), repalloc(), and IndexTupleData::t_tid.

Referenced by _bt_simpledel_pass().

◆ _bt_delete_or_dedup_one_page()

static

Definition at line 2696 of file nbtinsert.c.

References _bt_bottomupdel_pass(), _bt_dedup_pass(), _bt_simpledel_pass(), BTScanInsertData::allequalimage, Assert, BTGetDeduplicateItems, BTPageGetOpaque, BufferGetPage(), fb(), BTScanInsertData::heapkeyspace, ItemIdIsDead, MaxIndexTuplesPerPage, OffsetNumberNext, P_FIRSTDATAKEY, P_ISLEAF, PageGetFreeSpace(), PageGetItemId(), and PageGetMaxOffsetNumber().

Referenced by _bt_findinsertloc().

◆ _bt_doinsert()

bool _bt_doinsert(Relation rel, IndexTuple itup, IndexUniqueCheck checkUnique, bool indexUnchanged, Relation heapRel)

Definition at line 104 of file nbtinsert.c.

References _bt_check_unique(), _bt_findinsertloc(), _bt_freestack(), _bt_insertonpg(), _bt_mkscankey(), _bt_relbuf(), _bt_search_insert(), BTScanInsertData::anynullkeys, Assert, BufferGetBlockNumber(), CheckForSerializableConflictIn(), fb(), BTScanInsertData::heapkeyspace, IndexTupleSize(), InvalidBuffer, MAXALIGN, pfree(), BTScanInsertData::scantid, SpeculativeInsertionWait(), IndexTupleData::t_tid, TransactionIdIsValid, UNIQUE_CHECK_EXISTING, UNIQUE_CHECK_NO, unlikely, XactLockTableWait(), and XLTW_InsertIndex.

Referenced by btinsert().

◆ _bt_findinsertloc()

static

Definition at line 817 of file nbtinsert.c.

References _bt_binsrch_insert(), _bt_check_third_page(), _bt_compare(), _bt_delete_or_dedup_one_page(), _bt_stepright(), BTScanInsertData::allequalimage, Assert, BTMaxItemSize, BTPageGetOpaque, BufferGetPage(), fb(), BTScanInsertData::heapkeyspace, P_HAS_GARBAGE, P_HIKEY, P_INCOMPLETE_SPLIT, P_ISLEAF, P_RIGHTMOST, PageGetFreeSpace(), PageGetMaxOffsetNumber(), pg_global_prng_state, pg_prng_uint32(), PG_UINT32_MAX, BTScanInsertData::scantid, and unlikely.

Referenced by _bt_doinsert().

◆ _bt_finish_split()

Definition at line 2256 of file nbtinsert.c.

References _bt_getbuf(), _bt_insert_parent(), _bt_relbuf(), Assert, BT_WRITE, BTPageGetMeta, BTPageGetOpaque, BTREE_METAPAGE, BufferGetBlockNumber(), BufferGetPage(), DEBUG1, elog, fb(), INJECTION_POINT, P_INCOMPLETE_SPLIT, P_LEFTMOST, and P_RIGHTMOST.

Referenced by _bt_getstackbuf(), _bt_moveright(), and _bt_stepright().

◆ _bt_getstackbuf()

Buffer _bt_getstackbuf(Relation rel, Relation heaprel, BTStack stack, BlockNumber child)

Definition at line 2335 of file nbtinsert.c.

References _bt_finish_split(), _bt_getbuf(), _bt_relbuf(), Assert, BT_WRITE, BTPageGetOpaque, BTPageOpaqueData::btpo_next, BTreeTupleGetDownLink(), BTStackData::bts_blkno, BTStackData::bts_offset, BTStackData::bts_parent, buf, BufferGetPage(), fb(), InvalidBuffer, InvalidOffsetNumber, OffsetNumberNext, OffsetNumberPrev, P_FIRSTDATAKEY, P_IGNORE, P_INCOMPLETE_SPLIT, P_RIGHTMOST, PageGetItem(), PageGetItemId(), PageGetMaxOffsetNumber(), and start.

Referenced by _bt_insert_parent(), and _bt_lock_subtree_parent().

◆ _bt_insert_parent()

static

Definition at line 2114 of file nbtinsert.c.

References _bt_get_endpoint(), _bt_getstackbuf(), _bt_insertonpg(), _bt_newlevel(), _bt_relbuf(), Assert, BlockNumberIsValid(), BTPageGetOpaque, BTPageOpaqueData::btpo_level, BTreeTupleSetDownLink(), BTStackData::bts_blkno, BTStackData::bts_offset, BTStackData::bts_parent, buf, BufferGetBlockNumber(), BufferGetPage(), CopyIndexTuple(), DEBUG2, elog, ereport, errcode(), errmsg_internal(), ERROR, fb(), IndexTupleSize(), InvalidBuffer, InvalidOffsetNumber, MAXALIGN, P_HIKEY, P_ISLEAF, PageGetItem(), PageGetItemId(), pfree(), RelationGetRelationName, and RelationGetTargetBlock.

Referenced by _bt_finish_split(), and _bt_insertonpg().

◆ _bt_insertonpg()

static

Definition at line 1107 of file nbtinsert.c.

References _bt_getbuf(), _bt_getrootheight(), _bt_insert_parent(), _bt_relbuf(), _bt_split(), _bt_swap_posting(), _bt_upgrademetapage(), BTScanInsertData::allequalimage, Assert, BlockNumberIsValid(), BT_WRITE, BTPageGetMeta, BTPageGetOpaque, BTPageOpaqueData::btpo_level, BTREE_FASTPATH_MIN_LEVEL, BTREE_METAPAGE, BTREE_NOVAC_VERSION, BTreeTupleGetNAtts, BTreeTupleIsPosting(), buf, BufferGetBlockNumber(), BufferGetPage(), BufferIsValid(), CopyIndexTuple(), elog, END_CRIT_SECTION, ereport, errcode(), errmsg_internal(), ERROR, fb(), BTScanInsertData::heapkeyspace, IndexRelationGetNumberOfAttributes, IndexRelationGetNumberOfKeyAttributes, IndexTupleSize(), INJECTION_POINT, InvalidBlockNumber, InvalidBuffer, InvalidOffsetNumber, ItemIdIsDead, ItemPointerGetBlockNumber(), ItemPointerGetOffsetNumber(), MarkBufferDirty(), MAXALIGN, OffsetNumberNext, P_FIRSTDATAKEY, P_INCOMPLETE_SPLIT, P_ISLEAF, P_ISROOT, P_LEFTMOST, P_RIGHTMOST, PageAddItem, PageGetFreeSpace(), PageGetItem(), PageGetItemId(), PageSetLSN(), PANIC, pfree(), PredicateLockPageSplit(), REGBUF_STANDARD, REGBUF_WILL_INIT, RelationGetRelationName, RelationNeedsWAL, RelationSetTargetBlock, SizeOfBtreeInsert, START_CRIT_SECTION, IndexTupleData::t_tid, unlikely, XLOG_BTREE_INSERT_LEAF, XLOG_BTREE_INSERT_META, XLOG_BTREE_INSERT_POST, XLOG_BTREE_INSERT_UPPER, XLogBeginInsert(), XLogInsert(), XLogRegisterBufData(), XLogRegisterBuffer(), and XLogRegisterData().

Referenced by _bt_doinsert(), and _bt_insert_parent().

◆ _bt_newlevel()

Definition at line 2460 of file nbtinsert.c.

References _bt_allocbuf(), _bt_getbuf(), _bt_relbuf(), _bt_upgrademetapage(), xl_btree_metadata::allequalimage, Assert, BT_WRITE, BTP_ROOT, BTPageGetMeta, BTPageGetOpaque, BTREE_METAPAGE, BTREE_NOVAC_VERSION, BTreeTupleGetNAtts, BTreeTupleSetDownLink(), BTreeTupleSetNAtts(), BufferGetBlockNumber(), BufferGetPage(), CopyIndexTuple(), elog, END_CRIT_SECTION, xl_btree_metadata::fastlevel, xl_btree_metadata::fastroot, fb(), IndexRelationGetNumberOfKeyAttributes, InvalidOffsetNumber, ItemIdGetLength, xl_btree_metadata::last_cleanup_num_delpages, xl_btree_metadata::level, MarkBufferDirty(), P_FIRSTKEY, P_HIKEY, P_INCOMPLETE_SPLIT, P_NONE, PageAddItem, PageGetItem(), PageGetItemId(), PageSetLSN(), palloc(), PANIC, pfree(), REGBUF_STANDARD, REGBUF_WILL_INIT, RelationGetRelationName, RelationNeedsWAL, xl_btree_metadata::root, SizeOfBtreeNewroot, START_CRIT_SECTION, xl_btree_metadata::version, XLOG_BTREE_NEWROOT, XLogBeginInsert(), XLogInsert(), XLogRegisterBufData(), XLogRegisterBuffer(), and XLogRegisterData().

Referenced by _bt_insert_parent().

◆ _bt_pgaddtup()

inline static

Definition at line 2644 of file nbtinsert.c.

References BTreeTupleSetNAtts(), fb(), InvalidOffsetNumber, PageAddItem, IndexTupleData::t_info, and unlikely.

Referenced by _bt_split().

◆ _bt_search_insert()

static

Definition at line 319 of file nbtinsert.c.

References _bt_checkpage(), _bt_compare(), _bt_conditionallockbuf(), _bt_relbuf(), _bt_search(), Assert, BT_WRITE, BTPageGetOpaque, BufferGetPage(), fb(), InvalidBlockNumber, InvalidBuffer, P_HIKEY, P_IGNORE, P_ISLEAF, P_RIGHTMOST, PageGetFreeSpace(), PageGetMaxOffsetNumber(), ReadBuffer(), RelationGetTargetBlock, RelationSetTargetBlock, and ReleaseBuffer().

Referenced by _bt_doinsert().

◆ _bt_simpledel_pass()

static

Definition at line 2825 of file nbtinsert.c.

References _bt_blk_cmp(), _bt_deadblocks(), _bt_delitems_delete_check(), Assert, BTreeTupleGetNPosting(), BTreeTupleGetPostingN(), BTreeTupleIsPosting(), BufferGetBlockNumber(), BufferGetPage(), fb(), ItemIdIsDead, ItemPointerGetBlockNumber(), MaxTIDsPerBTreePage, OffsetNumberNext, PageGetItem(), PageGetItemId(), palloc(), pfree(), and IndexTupleData::t_tid.

Referenced by _bt_delete_or_dedup_one_page().

◆ _bt_split()

static

Definition at line 1475 of file nbtinsert.c.

References _bt_allocbuf(), _bt_findsplitloc(), _bt_getbuf(), _bt_pageinit(), _bt_pgaddtup(), _bt_relbuf(), _bt_truncate(), _bt_vacuum_cycleid(), Assert, BT_WRITE, BTP_HAS_GARBAGE, BTP_INCOMPLETE_SPLIT, BTP_ROOT, BTP_SPLIT_END, BTPageGetOpaque, BTreeTupleGetNAtts, BTreeTupleIsPosting(), buf, BufferGetBlockNumber(), BufferGetPage(), BufferGetPageSize(), elog, END_CRIT_SECTION, ereport, errcode(), errmsg_internal(), ERROR, fb(), i, IndexRelationGetNumberOfKeyAttributes, IndexTupleSize(), InvalidBuffer, InvalidOffsetNumber, ItemIdGetLength, ItemPointerCompare(), xl_btree_split::level, MarkBufferDirty(), MAXALIGN, OffsetNumberNext, OffsetNumberPrev, P_FIRSTDATAKEY, P_HIKEY, P_ISLEAF, P_RIGHTMOST, PageAddItem, PageGetItem(), PageGetItemId(), PageGetLSN(), PageGetMaxOffsetNumber(), PageSetLSN(), pfree(), REGBUF_STANDARD, REGBUF_WILL_INIT, RelationGetRelationName, RelationNeedsWAL, SizeOfBtreeSplit, START_CRIT_SECTION, IndexTupleData::t_tid, XLOG_BTREE_SPLIT_L, XLOG_BTREE_SPLIT_R, XLogBeginInsert(), XLogInsert(), XLogRegisterBufData(), XLogRegisterBuffer(), and XLogRegisterData().

Referenced by _bt_insertonpg().

◆ _bt_stepright()

static

Definition at line 1029 of file nbtinsert.c.

References _bt_finish_split(), _bt_relandgetbuf(), _bt_relbuf(), Assert, BT_WRITE, BTPageGetOpaque, BTPageOpaqueData::btpo_next, BufferGetPage(), elog, ERROR, fb(), InvalidBuffer, P_IGNORE, P_INCOMPLETE_SPLIT, P_RIGHTMOST, and RelationGetRelationName.

Referenced by _bt_findinsertloc().