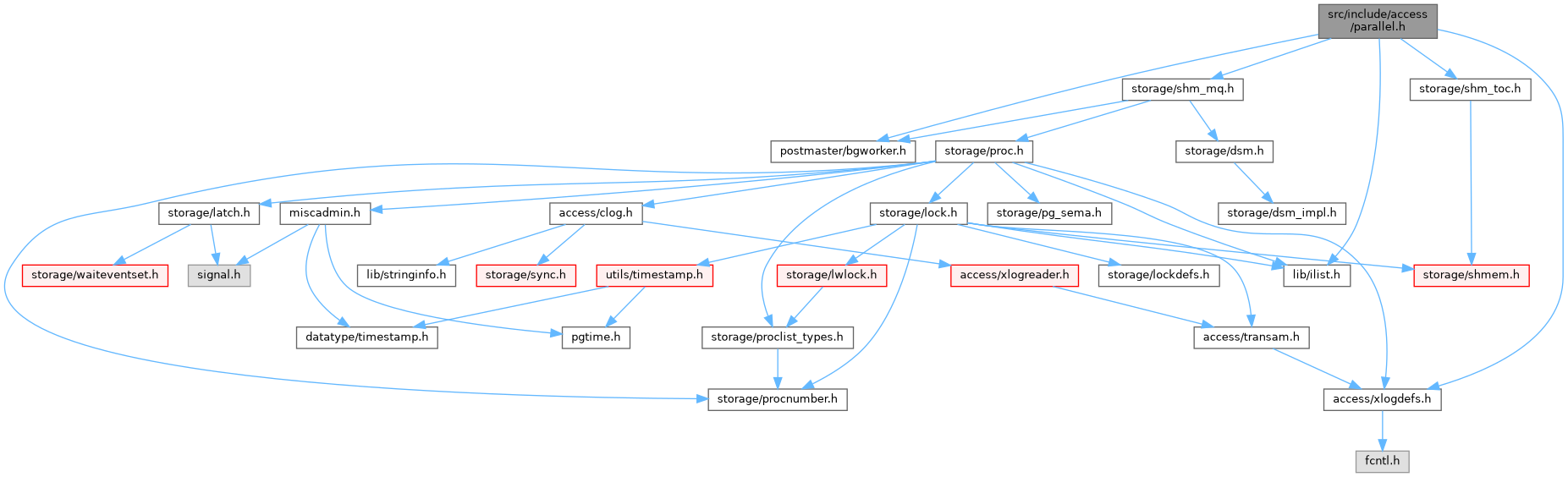

#include <signal.h>

#include "access/xlogdefs.h"

#include "lib/ilist.h"

#include "postmaster/bgworker.h"

#include "storage/shm_mq.h"

#include "storage/shm_toc.h"


Data Structures

| struct | ParallelWorkerInfo |

| struct | ParallelContext |

| struct | ParallelWorkerContext |

Macros

| #define | IsParallelWorker() (ParallelWorkerNumber >= 0) |

Typedefs

| typedef void(* | parallel_worker_main_type) (dsm_segment *seg, shm_toc *toc) |

| typedef struct ParallelWorkerInfo | ParallelWorkerInfo |

| typedef struct ParallelContext | ParallelContext |

| typedef struct ParallelWorkerContext | ParallelWorkerContext |

Functions

| ParallelContext * | CreateParallelContext (const char *library_name, const char *function_name, int nworkers) |

| void | InitializeParallelDSM (ParallelContext *pcxt) |

| void | ReinitializeParallelDSM (ParallelContext *pcxt) |

| void | ReinitializeParallelWorkers (ParallelContext *pcxt, int nworkers_to_launch) |

| void | LaunchParallelWorkers (ParallelContext *pcxt) |

| void | WaitForParallelWorkersToAttach (ParallelContext *pcxt) |

| void | WaitForParallelWorkersToFinish (ParallelContext *pcxt) |

| void | DestroyParallelContext (ParallelContext *pcxt) |

| bool | ParallelContextActive (void) |

| void | HandleParallelMessageInterrupt (void) |

| void | ProcessParallelMessages (void) |

| void | AtEOXact_Parallel (bool isCommit) |

| void | AtEOSubXact_Parallel (bool isCommit, SubTransactionId mySubId) |

| void | ParallelWorkerReportLastRecEnd (XLogRecPtr last_xlog_end) |

| void | ParallelWorkerMain (Datum main_arg) |
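The "Referenced by" lists further down suggest a typical call order for the leader: callers such as `_bt_begin_parallel()` create the context, initialize the DSM segment, and launch workers, while `_bt_end_parallel()` waits for the workers and destroys the context. The sketch below only illustrates that order. Every function in it is a logging stub that borrows the prototype listed above, the `ParallelContext` struct is a one-field stand-in, and `run_parallel_operation()`, `call_log`, `demo_pcxt`, and the library and function names are hypothetical. Real code must also be in parallel mode before calling `CreateParallelContext()`, since that function references `IsInParallelMode()`.

```c
#include <string.h>

/* Stand-in type for illustration only, NOT the real ParallelContext. */
typedef struct ParallelContext
{
    int nworkers;
} ParallelContext;

static char call_log[256];          /* records the order of calls */
static ParallelContext demo_pcxt;   /* hypothetical single context */

static void
log_call(const char *name)
{
    strcat(call_log, name);
    strcat(call_log, ";");
}

/* Logging stubs that reuse the prototypes from the function list. */
static ParallelContext *
CreateParallelContext(const char *library_name, const char *function_name,
                      int nworkers)
{
    (void) library_name;
    (void) function_name;
    demo_pcxt.nworkers = nworkers;
    log_call("CreateParallelContext");
    return &demo_pcxt;
}

static void
InitializeParallelDSM(ParallelContext *pcxt)
{
    (void) pcxt;
    log_call("InitializeParallelDSM");
}

static void
LaunchParallelWorkers(ParallelContext *pcxt)
{
    (void) pcxt;
    log_call("LaunchParallelWorkers");
}

static void
WaitForParallelWorkersToFinish(ParallelContext *pcxt)
{
    (void) pcxt;
    log_call("WaitForParallelWorkersToFinish");
}

static void
DestroyParallelContext(ParallelContext *pcxt)
{
    (void) pcxt;
    log_call("DestroyParallelContext");
}

/* Hypothetical leader-side driver showing the assumed call order. */
static void
run_parallel_operation(int nworkers)
{
    ParallelContext *pcxt;

    call_log[0] = '\0';
    pcxt = CreateParallelContext("demo_library", "demo_worker_main", nworkers);
    InitializeParallelDSM(pcxt);
    LaunchParallelWorkers(pcxt);
    WaitForParallelWorkersToFinish(pcxt);
    DestroyParallelContext(pcxt);
}
```

Calling `run_parallel_operation(2)` leaves the five function names in `call_log` in the order shown, which is the only behavior this sketch claims to model.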

Variables

| PGDLLIMPORT volatile sig_atomic_t | ParallelMessagePending |

| PGDLLIMPORT int | ParallelWorkerNumber |

| PGDLLIMPORT bool | InitializingParallelWorker |

Macro Definition Documentation

◆ IsParallelWorker

| #define IsParallelWorker() (ParallelWorkerNumber >= 0) |

Definition at line 62 of file parallel.h.
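As a rough illustration of how the macro behaves, the sketch below copies its definition and pairs it with a local stand-in for `ParallelWorkerNumber`. It assumes the variable holds a negative value in any process that is not a parallel worker (the stand-in starts at -1). `process_role()` is a hypothetical helper, not part of PostgreSQL.

```c
/* Local stand-in for the PostgreSQL global; assumed to be -1 outside workers. */
static int ParallelWorkerNumber = -1;

/* Same definition as in parallel.h. */
#define IsParallelWorker() (ParallelWorkerNumber >= 0)

/* Hypothetical helper: name the role of the current process. */
static const char *
process_role(void)
{
    return IsParallelWorker() ? "worker" : "leader";
}
```

With the stand-in at -1 the helper reports "leader", and once a non-negative worker number is assigned it reports "worker".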

Typedef Documentation

◆ parallel_worker_main_type

| typedef void(* parallel_worker_main_type) (dsm_segment *seg, shm_toc *toc) |

Definition at line 25 of file parallel.h.
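To make the shape of this function pointer type concrete, here is a minimal sketch of an entry point with a matching signature and a dispatcher that calls it through the typedef. The two struct types are declared as opaque stand-ins so the example compiles alone. `demo_worker_main()`, `run_entrypoint()`, and `demo_calls` are hypothetical names introduced for illustration.

```c
#include <stddef.h>

/* Opaque stand-ins; the real types are defined by PostgreSQL headers. */
typedef struct dsm_segment dsm_segment;
typedef struct shm_toc shm_toc;

/* Same typedef as in parallel.h. */
typedef void (*parallel_worker_main_type) (dsm_segment *seg, shm_toc *toc);

static int demo_calls = 0;      /* counts invocations of the demo entry point */

/* Hypothetical entry point whose signature matches the typedef. */
static void
demo_worker_main(dsm_segment *seg, shm_toc *toc)
{
    (void) seg;
    (void) toc;
    demo_calls++;
}

/* Hypothetical dispatcher: invoke an entry point through the typedef. */
static void
run_entrypoint(parallel_worker_main_type entry, dsm_segment *seg, shm_toc *toc)
{
    entry(seg, toc);
}
```

A call such as `run_entrypoint(demo_worker_main, NULL, NULL)` increments `demo_calls` once. In PostgreSQL, the entry point would instead be named by the `library_name` and `function_name` arguments of `CreateParallelContext()`.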

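◆ ParallelContext

| typedef struct ParallelContext ParallelContext |

◆ ParallelWorkerContext

| typedef struct ParallelWorkerContext ParallelWorkerContext |

◆ ParallelWorkerInfo

| typedef struct ParallelWorkerInfo ParallelWorkerInfo |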

Function Documentation

◆ AtEOSubXact_Parallel()

| extern void AtEOSubXact_Parallel (bool isCommit, SubTransactionId mySubId) |

Definition at line 1262 of file parallel.c.

References DestroyParallelContext(), dlist_head_element, dlist_is_empty(), elog, fb(), pcxt_list, ParallelContext::subid, and WARNING.

Referenced by AbortSubTransaction(), and CommitSubTransaction().

◆ AtEOXact_Parallel()

| void AtEOXact_Parallel (bool isCommit) |

Definition at line 1283 of file parallel.c.

References DestroyParallelContext(), dlist_head_element, dlist_is_empty(), elog, fb(), pcxt_list, and WARNING.

Referenced by AbortTransaction(), and CommitTransaction().

◆ CreateParallelContext()

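| extern ParallelContext * CreateParallelContext (const char *library_name, const char *function_name, int nworkers) |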

Definition at line 174 of file parallel.c.

References Assert, dlist_push_head(), error_context_stack, ParallelContext::error_context_stack, ParallelContext::estimator, ParallelContext::function_name, GetCurrentSubTransactionId(), IsInParallelMode(), ParallelContext::library_name, MemoryContextSwitchTo(), ParallelContext::node, ParallelContext::nworkers, ParallelContext::nworkers_to_launch, palloc0_object, pcxt_list, pstrdup(), shm_toc_initialize_estimator, ParallelContext::subid, and TopTransactionContext.

Referenced by _brin_begin_parallel(), _bt_begin_parallel(), _gin_begin_parallel(), ExecInitParallelPlan(), and parallel_vacuum_init().

◆ DestroyParallelContext()

| extern void DestroyParallelContext (ParallelContext *pcxt) |

Definition at line 958 of file parallel.c.

References ParallelWorkerInfo::bgwhandle, dlist_delete(), dsm_detach(), ParallelWorkerInfo::error_mqh, fb(), ParallelContext::function_name, HOLD_INTERRUPTS, i, ParallelContext::library_name, ParallelContext::node, ParallelContext::nworkers_launched, pfree(), ParallelContext::private_memory, RESUME_INTERRUPTS, ParallelContext::seg, shm_mq_detach(), TerminateBackgroundWorker(), WaitForParallelWorkersToExit(), and ParallelContext::worker.

Referenced by _brin_begin_parallel(), _brin_end_parallel(), _bt_begin_parallel(), _bt_end_parallel(), _gin_begin_parallel(), _gin_end_parallel(), AtEOSubXact_Parallel(), AtEOXact_Parallel(), ExecParallelCleanup(), and parallel_vacuum_end().

◆ HandleParallelMessageInterrupt()

| void HandleParallelMessageInterrupt (void) |

Definition at line 1045 of file parallel.c.

References InterruptPending, MyLatch, ParallelMessagePending, and SetLatch().

Referenced by procsignal_sigusr1_handler().
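The reference lists suggest a flag-and-latch pattern: the function is reached from a signal handler and touches `InterruptPending`, `ParallelMessagePending`, and `SetLatch()`, while `ProcessParallelMessages()` later runs from `ProcessInterrupts()`. The sketch below shows that general pattern with plain `sig_atomic_t` flags. It is an assumption about the design, not a copy of the PostgreSQL code. All `demo_` names and `latch_is_set` are hypothetical, and the two pending flags are local stand-ins for the real globals.

```c
#include <signal.h>

/* Local stand-ins for the PostgreSQL globals of the same names. */
static volatile sig_atomic_t InterruptPending = 0;
static volatile sig_atomic_t ParallelMessagePending = 0;

/* Hypothetical stand-in for the effect of SetLatch(MyLatch). */
static volatile sig_atomic_t latch_is_set = 0;

/* Signal-safe part: only set flags, do no real work here. */
static void
demo_handle_parallel_message_interrupt(void)
{
    InterruptPending = 1;
    ParallelMessagePending = 1;
    latch_is_set = 1;
}

/* Hypothetical SIGUSR1 handler that forwards to the function above. */
static void
demo_sigusr1_handler(int signo)
{
    (void) signo;
    demo_handle_parallel_message_interrupt();
}

/* Install the demo handler; call once at startup. */
static void
demo_install_handler(void)
{
    signal(SIGUSR1, demo_sigusr1_handler);
}

/*
 * Deferred part, run outside the handler: returns 1 if a message was
 * pending and clears the flag, otherwise returns 0.
 */
static int
demo_process_interrupts(void)
{
    if (!ParallelMessagePending)
        return 0;
    ParallelMessagePending = 0;
    return 1;
}
```

The point of the split is that the handler stays trivially async-signal-safe, and message processing happens later at a safe point in normal control flow.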

◆ InitializeParallelDSM()

| extern void InitializeParallelDSM (ParallelContext *pcxt) |

Definition at line 212 of file parallel.c.

References BUFFERALIGN, current_role_is_superuser, dsm_create(), DSM_CREATE_NULL_IF_MAXSEGMENTS, DSM_HANDLE_INVALID, dsm_segment_address(), ParallelWorkerInfo::error_mqh, EstimateClientConnectionInfoSpace(), EstimateComboCIDStateSpace(), EstimateGUCStateSpace(), EstimateLibraryStateSpace(), EstimatePendingSyncsSpace(), EstimateReindexStateSpace(), EstimateRelationMapSpace(), EstimateSnapshotSpace(), EstimateTransactionStateSpace(), EstimateUncommittedEnumsSpace(), ParallelContext::estimator, fb(), ParallelContext::function_name, GetActiveSnapshot(), GetAuthenticatedUserId(), GetCurrentRoleId(), GetCurrentStatementStartTimestamp(), GetCurrentTransactionStartTimestamp(), GetSessionDsmHandle(), GetSessionUserId(), GetSessionUserIsSuperuser(), GetTempNamespaceState(), GetTransactionSnapshot(), GetUserIdAndSecContext(), i, INTERRUPTS_CAN_BE_PROCESSED, InvalidXLogRecPtr, IsolationUsesXactSnapshot, ParallelContext::library_name, MemoryContextAlloc(), MemoryContextSwitchTo(), mul_size(), MyDatabaseId, MyProc, MyProcNumber, MyProcPid, ParallelContext::nworkers, ParallelContext::nworkers_to_launch, palloc0_array, PARALLEL_ERROR_QUEUE_SIZE, PARALLEL_KEY_ACTIVE_SNAPSHOT, PARALLEL_KEY_CLIENTCONNINFO, PARALLEL_KEY_COMBO_CID, PARALLEL_KEY_ENTRYPOINT, PARALLEL_KEY_ERROR_QUEUE, PARALLEL_KEY_FIXED, PARALLEL_KEY_GUC, PARALLEL_KEY_LIBRARY, PARALLEL_KEY_PENDING_SYNCS, PARALLEL_KEY_REINDEX_STATE, PARALLEL_KEY_RELMAPPER_STATE, PARALLEL_KEY_SESSION_DSM, PARALLEL_KEY_TRANSACTION_SNAPSHOT, PARALLEL_KEY_TRANSACTION_STATE, PARALLEL_KEY_UNCOMMITTEDENUMS, PARALLEL_MAGIC, ParallelContext::private_memory, ParallelContext::seg, SerializeClientConnectionInfo(), SerializeComboCIDState(), SerializeGUCState(), SerializeLibraryState(), SerializePendingSyncs(), SerializeReindexState(), SerializeRelationMap(), SerializeSnapshot(), SerializeTransactionState(), SerializeUncommittedEnums(), ShareSerializableXact(), shm_mq_attach(), shm_mq_create(), shm_mq_set_receiver(), shm_toc_allocate(), 
shm_toc_create(), shm_toc_estimate(), shm_toc_estimate_chunk, shm_toc_estimate_keys, shm_toc_insert(), SpinLockInit(), start, StaticAssertDecl, ParallelContext::toc, TopMemoryContext, TopTransactionContext, and ParallelContext::worker.

Referenced by _brin_begin_parallel(), _bt_begin_parallel(), _gin_begin_parallel(), ExecInitParallelPlan(), and parallel_vacuum_init().

◆ LaunchParallelWorkers()

| extern void LaunchParallelWorkers (ParallelContext *pcxt) |

Definition at line 582 of file parallel.c.

References Assert, BecomeLockGroupLeader(), BackgroundWorker::bgw_extra, BackgroundWorker::bgw_flags, BackgroundWorker::bgw_function_name, BackgroundWorker::bgw_library_name, BackgroundWorker::bgw_main_arg, BGW_MAXLEN, BackgroundWorker::bgw_name, BGW_NEVER_RESTART, BackgroundWorker::bgw_notify_pid, BackgroundWorker::bgw_restart_time, BackgroundWorker::bgw_start_time, BackgroundWorker::bgw_type, ParallelWorkerInfo::bgwhandle, BGWORKER_BACKEND_DATABASE_CONNECTION, BGWORKER_CLASS_PARALLEL, BGWORKER_SHMEM_ACCESS, BgWorkerStart_ConsistentState, dsm_segment_handle(), ParallelWorkerInfo::error_mqh, fb(), i, ParallelContext::known_attached_workers, MemoryContextSwitchTo(), MyProcPid, ParallelContext::nknown_attached_workers, ParallelContext::nworkers, ParallelContext::nworkers_launched, ParallelContext::nworkers_to_launch, palloc0_array, RegisterDynamicBackgroundWorker(), ParallelContext::seg, shm_mq_detach(), shm_mq_set_handle(), snprintf, sprintf, TopTransactionContext, UInt32GetDatum(), and ParallelContext::worker.

Referenced by _brin_begin_parallel(), _bt_begin_parallel(), _gin_begin_parallel(), ExecGather(), ExecGatherMerge(), and parallel_vacuum_process_all_indexes().

◆ ParallelContextActive()

| bool ParallelContextActive (void) |

Definition at line 1032 of file parallel.c.

References dlist_is_empty(), and pcxt_list.

Referenced by AtPrepare_PredicateLocks(), ExitParallelMode(), and ReleasePredicateLocks().

◆ ParallelWorkerMain()

| void ParallelWorkerMain (Datum main_arg) |

Definition at line 1300 of file parallel.c.

References ALLOCSET_DEFAULT_SIZES, AllocSetContextCreate, Assert, AttachSerializableXact(), AttachSession(), ClientConnectionInfo::auth_method, ClientConnectionInfo::authn_id, BackgroundWorkerInitializeConnectionByOid(), BackgroundWorkerUnblockSignals(), BecomeLockGroupMember(), before_shmem_exit(), BackgroundWorker::bgw_extra, BGWORKER_BYPASS_ALLOWCONN, BGWORKER_BYPASS_ROLELOGINCHECK, CommitTransactionCommand(), CurrentMemoryContext, DatumGetUInt32(), DetachSession(), dsm_attach(), dsm_segment_address(), elog, EndParallelWorkerTransaction(), EnterParallelMode(), ereport, errcode(), errmsg(), ERROR, ExitParallelMode(), fb(), GetDatabaseEncoding(), hba_authname(), InitializeSystemUser(), InitializingParallelWorker, InvalidateSystemCaches(), LookupParallelWorkerFunction(), MyBgworkerEntry, MyClientConnectionInfo, MyFixedParallelState, MyProc, PARALLEL_ERROR_QUEUE_SIZE, PARALLEL_KEY_ACTIVE_SNAPSHOT, PARALLEL_KEY_CLIENTCONNINFO, PARALLEL_KEY_COMBO_CID, PARALLEL_KEY_ENTRYPOINT, PARALLEL_KEY_ERROR_QUEUE, PARALLEL_KEY_FIXED, PARALLEL_KEY_GUC, PARALLEL_KEY_LIBRARY, PARALLEL_KEY_PENDING_SYNCS, PARALLEL_KEY_REINDEX_STATE, PARALLEL_KEY_RELMAPPER_STATE, PARALLEL_KEY_SESSION_DSM, PARALLEL_KEY_TRANSACTION_SNAPSHOT, PARALLEL_KEY_TRANSACTION_STATE, PARALLEL_KEY_UNCOMMITTEDENUMS, FixedParallelState::parallel_leader_pid, PARALLEL_MAGIC, ParallelLeaderPid, ParallelLeaderProcNumber, ParallelWorkerNumber, ParallelWorkerShutdown(), PointerGetDatum(), PopActiveSnapshot(), pq_putmessage, pq_redirect_to_shm_mq(), pq_set_parallel_leader(), PqMsg_Terminate, PushActiveSnapshot(), RestoreClientConnectionInfo(), RestoreComboCIDState(), RestoreGUCState(), RestoreLibraryState(), RestorePendingSyncs(), RestoreReindexState(), RestoreRelationMap(), RestoreSnapshot(), RestoreTransactionSnapshot(), RestoreUncommittedEnums(), SetAuthenticatedUserId(), SetClientEncoding(), SetCurrentRoleId(), SetParallelStartTimestamps(), SetSessionAuthorization(), SetTempNamespaceState(), SetUserIdAndSecContext(), 
shm_mq_attach(), shm_mq_set_sender(), shm_toc_attach(), shm_toc_lookup(), StartParallelWorkerTransaction(), StartTransactionCommand(), and TopMemoryContext.

◆ ParallelWorkerReportLastRecEnd()

| extern void ParallelWorkerReportLastRecEnd (XLogRecPtr last_xlog_end) |

Definition at line 1593 of file parallel.c.

References Assert, fb(), MyFixedParallelState, SpinLockAcquire(), and SpinLockRelease().

Referenced by CommitTransaction().

◆ ProcessParallelMessages()

| void ProcessParallelMessages (void) |

Definition at line 1056 of file parallel.c.

References ALLOCSET_DEFAULT_SIZES, AllocSetContextCreate, appendBinaryStringInfo(), dlist_iter::cur, StringInfoData::data, data, dlist_container, dlist_foreach, ereport, errcode(), errmsg(), ERROR, ParallelWorkerInfo::error_mqh, fb(), HOLD_INTERRUPTS, i, initStringInfo(), MemoryContextReset(), MemoryContextSwitchTo(), ParallelContext::nworkers_launched, ParallelMessagePending, pcxt_list, pfree(), ProcessParallelMessage(), RESUME_INTERRUPTS, shm_mq_receive(), SHM_MQ_SUCCESS, SHM_MQ_WOULD_BLOCK, TopMemoryContext, and ParallelContext::worker.

Referenced by ProcessInterrupts().

◆ ReinitializeParallelDSM()

| extern void ReinitializeParallelDSM (ParallelContext *pcxt) |

Definition at line 510 of file parallel.c.

References ParallelWorkerInfo::error_mqh, fb(), i, InvalidXLogRecPtr, ParallelContext::known_attached_workers, MemoryContextSwitchTo(), MyProc, ParallelContext::nknown_attached_workers, ParallelContext::nworkers, ParallelContext::nworkers_launched, PARALLEL_ERROR_QUEUE_SIZE, PARALLEL_KEY_ERROR_QUEUE, PARALLEL_KEY_FIXED, pfree(), ParallelContext::seg, shm_mq_attach(), shm_mq_create(), shm_mq_set_receiver(), shm_toc_lookup(), start, ParallelContext::toc, TopTransactionContext, WaitForParallelWorkersToExit(), WaitForParallelWorkersToFinish(), and ParallelContext::worker.

Referenced by ExecParallelReinitialize(), and parallel_vacuum_process_all_indexes().

◆ ReinitializeParallelWorkers()

| extern void ReinitializeParallelWorkers (ParallelContext *pcxt, int nworkers_to_launch) |

Definition at line 567 of file parallel.c.

References Min, ParallelContext::nworkers, and ParallelContext::nworkers_to_launch.

Referenced by parallel_vacuum_process_all_indexes().
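Because the function references only `Min`, `ParallelContext::nworkers`, and `ParallelContext::nworkers_to_launch`, it plausibly caps the requested launch count at the number of workers the context was set up for. The sketch below shows that clamp under that assumption. `DemoParallelContext` and `demo_reinitialize_workers()` are hypothetical stand-ins, and `Min` is redefined locally so the example compiles alone.

```c
/* Local definition for the sketch; PostgreSQL provides its own Min macro. */
#define Min(x, y) ((x) < (y) ? (x) : (y))

/* Stand-in with only the two fields the reference list mentions. */
typedef struct DemoParallelContext
{
    int nworkers;               /* workers the context was sized for */
    int nworkers_to_launch;     /* workers to start on the next launch */
} DemoParallelContext;

/* Assumed behavior: never ask for more workers than the context allows. */
static void
demo_reinitialize_workers(DemoParallelContext *pcxt, int nworkers_to_launch)
{
    pcxt->nworkers_to_launch = Min(pcxt->nworkers, nworkers_to_launch);
}
```

Under this assumption, a request for 8 workers on a context sized for 4 is trimmed to 4, while a request for 2 is kept as is.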

◆ WaitForParallelWorkersToAttach()

| extern void WaitForParallelWorkersToAttach (ParallelContext *pcxt) |

Definition at line 701 of file parallel.c.

References Assert, BGWH_STARTED, BGWH_STOPPED, ParallelWorkerInfo::bgwhandle, CHECK_FOR_INTERRUPTS, ereport, errcode(), errhint(), errmsg(), ERROR, ParallelWorkerInfo::error_mqh, fb(), GetBackgroundWorkerPid(), i, ParallelContext::known_attached_workers, MyLatch, ParallelContext::nknown_attached_workers, ParallelContext::nworkers_launched, ResetLatch(), shm_mq_get_queue(), shm_mq_get_sender(), WaitLatch(), WL_EXIT_ON_PM_DEATH, WL_LATCH_SET, and ParallelContext::worker.

Referenced by _brin_begin_parallel(), _bt_begin_parallel(), and _gin_begin_parallel().

◆ WaitForParallelWorkersToFinish()

| extern void WaitForParallelWorkersToFinish (ParallelContext *pcxt) |

Definition at line 804 of file parallel.c.

References Assert, BGWH_STOPPED, ParallelWorkerInfo::bgwhandle, CHECK_FOR_INTERRUPTS, ereport, errcode(), errhint(), errmsg(), ERROR, ParallelWorkerInfo::error_mqh, fb(), GetBackgroundWorkerPid(), i, ParallelContext::known_attached_workers, MyLatch, ParallelContext::nworkers_launched, PARALLEL_KEY_FIXED, ResetLatch(), shm_mq_get_queue(), shm_mq_get_sender(), shm_toc_lookup(), ParallelContext::toc, WaitLatch(), WL_EXIT_ON_PM_DEATH, WL_LATCH_SET, ParallelContext::worker, and XactLastRecEnd.

Referenced by _brin_end_parallel(), _bt_end_parallel(), _gin_end_parallel(), ExecParallelFinish(), parallel_vacuum_process_all_indexes(), and ReinitializeParallelDSM().

Variable Documentation

◆ InitializingParallelWorker

| extern PGDLLIMPORT bool InitializingParallelWorker |

Definition at line 122 of file parallel.c.

Referenced by check_client_encoding(), check_role(), check_session_authorization(), check_transaction_deferrable(), check_transaction_isolation(), check_transaction_read_only(), InitializeSessionUserId(), and ParallelWorkerMain().

◆ ParallelMessagePending

| extern PGDLLIMPORT volatile sig_atomic_t ParallelMessagePending |

Definition at line 119 of file parallel.c.

Referenced by HandleParallelMessageInterrupt(), ProcessInterrupts(), and ProcessParallelMessages().

◆ ParallelWorkerNumber

| extern PGDLLIMPORT int ParallelWorkerNumber |

Definition at line 116 of file parallel.c.

Referenced by _brin_parallel_build_main(), _bt_parallel_build_main(), _gin_parallel_build_main(), BuildTupleHashTable(), ExecEndAgg(), ExecEndBitmapHeapScan(), ExecEndBitmapIndexScan(), ExecEndIndexOnlyScan(), ExecEndIndexScan(), ExecEndMemoize(), ExecHashInitializeWorker(), ExecParallelGetReceiver(), ExecParallelHashEnsureBatchAccessors(), ExecParallelHashJoinSetUpBatches(), ExecParallelHashRepartitionRest(), ExecParallelReportInstrumentation(), ExecSort(), parallel_vacuum_main(), ParallelQueryMain(), and ParallelWorkerMain().